Category: AI

-

Unlocking the Potential of Artificial Intelligence

Artificial Intelligence (AI) is a branch of computer science that aims to create intelligent machines capable of performing tasks typically requiring human intelligence. These tasks include learning, problem-solving, understanding natural language, and recognizing patterns. AI systems analyze large amounts of data, make decisions, and improve their performance over time. There are two main types of…

-

Exploring Social Media Sentiment: Understanding Online Emotions

Social media sentiment analysis is a technique that uses natural language processing, text analysis, and computational linguistics to systematically identify, extract, quantify, and study emotional states and subjective information from social media data. This process enables businesses and organizations to understand public opinions, attitudes, and emotions towards their brand, products, or services. By analyzing social…

-

Revolutionizing Image Recognition with AlexNet

Image recognition is a fundamental aspect of artificial intelligence (AI) that enables computers to analyze and interpret visual information from digital images or videos. This technology has significantly impacted numerous sectors, including healthcare, automotive, and retail industries. A pivotal advancement in image recognition was the introduction of AlexNet, a deep convolutional neural network that substantially…
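The core building block of a convolutional network such as AlexNet is the convolution layer. It slides a small grid of weights (a kernel) across an image and records a weighted sum at every position. The sketch below shows that single operation in plain Python. It is not AlexNet itself, which stacks many such layers with learned kernels, nonlinearities, and pooling. The `conv2d` function and the hand-picked edge-detecting kernel are illustrative assumptions.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kernel-sized patch whose top-left corner is (i, j).
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical example: a vertical-edge kernel applied to an image whose right half is bright.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The strong response in the middle column marks where dark pixels meet bright ones. In a trained network, the kernel values are learned rather than chosen by hand.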

-

Unlocking Potential: Top Machine Learning Consulting Firms

Machine learning consulting firms are specialized companies that offer expertise and services in machine learning, a branch of artificial intelligence focused on developing algorithms and models enabling computers to learn from data and make predictions or decisions. These firms collaborate with businesses across industries to leverage machine learning for operational improvements, data-driven decision-making, and competitive…

-

Unlocking Potential: Artificial Neural Network Machine Learning

Artificial Neural Networks (ANNs) are computational models inspired by the human brain’s structure and function. They consist of interconnected nodes, or “neurons,” that collaborate to process and analyze complex data. Each neuron receives input signals, combines them (typically as a weighted sum plus a bias), passes the result through an activation function, and produces an output signal. These neurons form layers within the network, with each…
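Here is a minimal sketch of one neuron and one layer. It assumes a weighted sum plus bias followed by a sigmoid activation, which is one common choice among several. The weights are hand-picked for illustration rather than learned, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through a sigmoid activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def dense_layer(inputs: Sequence[float],
                weight_rows: Sequence[Sequence[float]],
                biases: Sequence[float]) -> List[float]:
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Illustrative, hand-picked weights (not learned).
x = [1.0, 2.0]
hidden = dense_layer(x, [[1.0, 1.0], [0.5, -1.0]], [0.0, 0.5])
print([round(h, 3) for h in hidden])  # [0.953, 0.269]
```

The outputs of this layer would become the inputs of the next layer. Training adjusts the weights and biases so the final outputs match target values, a step omitted here.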

-

Unlocking the Power of BERT: Machine Learning Advancements

BERT (Bidirectional Encoder Representations from Transformers) is a language representation model introduced by Google researchers in 2018. Google later applied it to Search to better understand the context of queries and improve the relevance of results. BERT’s key feature is its bidirectional approach, which analyzes words in relation to both preceding and following words in…

-

Master Machine Learning with Udacity

Machine learning and artificial intelligence (AI) have become prominent topics in the technology sector in recent years. These technologies have significantly altered human-machine interactions and have the potential to revolutionize various industries. Machine learning, a subset of AI, focuses on developing algorithms that enable computers to learn from data and make predictions or decisions based…

-

Unlocking the Power of Audio Machine Learning

Audio machine learning is a specialized field within artificial intelligence that focuses on developing algorithms and models for analyzing, interpreting, and processing audio data. This area has experienced significant growth in recent years due to the increasing availability of diverse audio sources, including speech, music, and environmental sounds. The primary objective of audio machine learning…

-

Revolutionizing AI with NVIDIA Deep Learning

Artificial Intelligence (AI) has a long history, with roots tracing back to ancient times. However, significant progress in the field occurred during the 20th century. The term “artificial intelligence” was coined by John McCarthy for the 1956 Dartmouth workshop, marking a pivotal moment in AI’s development. Since then, AI has grown and evolved considerably, though progress has been uneven. Deep learning, a…

-

Unlocking the Power of Recurrent Neural Networks

Artificial Intelligence (AI) is a rapidly evolving field, driven by the development of new machine learning algorithms and techniques. One algorithm that has gained significant attention in recent years is the Recurrent Neural Network (RNN). RNNs are a type of neural network designed to recognize patterns in sequences of data, making them particularly well-suited…
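The defining feature of an RNN is a hidden state that is updated at each step of the sequence and fed back in at the next step. The sketch below assumes a minimal one-unit Elman-style RNN with a tanh activation and hand-chosen weights. Real RNNs use vectors, learned weight matrices, and often gated variants such as LSTMs or GRUs.

```python
import math
from typing import List, Sequence


def rnn_forward(sequence: Sequence[float], w_x: float, w_h: float, bias: float) -> List[float]:
    """Run a one-unit Elman-style RNN over a sequence and return every hidden state.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + bias), starting from h_0 = 0.
    The same weights are reused at every time step, and the hidden state
    carries information about earlier elements forward.
    """
    h = 0.0
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + bias)
        states.append(h)
    return states


# Hypothetical weights. Both sequences end with the same input,
# but their histories differ, so their final hidden states differ too.
with_early = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.9, bias=0.0)
without_early = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.9, bias=0.0)
print(with_early[-1] > without_early[-1])  # True
```

A feed-forward network given only the last element would produce identical outputs for both sequences. The recurrent hidden state is what lets the RNN remember the early 1.0.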

-

Revolutionizing Industries with Deep Learning Systems

Deep learning is a branch of machine learning, itself a subfield of artificial intelligence, that uses multi-layered neural networks to learn from data. These networks are loosely inspired by the structure and function of the human brain. Deep learning systems excel at identifying patterns and extracting features from large datasets, making…

-

Exploring the Power of Bayesian Deep Learning

Bayesian deep learning combines deep learning techniques with Bayesian inference to incorporate uncertainty into model predictions. Instead of learning a single fixed value for each weight, a Bayesian model represents weights as probability distributions. This can make deep learning models more robust and reliable in complex and uncertain environments. Because AI systems built this way can quantify their uncertainty, they can flag predictions they are unsure about, which supports better-calibrated predictions and improved decision-making…
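Monte Carlo sampling shows how weight uncertainty turns into prediction uncertainty. The method draws many weight values from the (approximate) posterior, makes a prediction with each, and measures the spread. The sketch below assumes a one-weight model y = w * x whose posterior is a Gaussian with made-up parameters. A real Bayesian neural network would obtain that posterior through inference over many weights, for example with variational methods or MCMC.

```python
import random
import statistics
from typing import Tuple


def predictive(x: float, w_mean: float, w_std: float,
               n_samples: int = 2000, seed: int = 0) -> Tuple[float, float]:
    """Monte Carlo estimate of the predictive mean and standard deviation of y = w * x.

    w ~ Normal(w_mean, w_std) stands in for an approximate posterior over the
    weight; its parameters here are assumptions, not the result of inference.
    """
    rng = random.Random(seed)
    preds = [rng.gauss(w_mean, w_std) * x for _ in range(n_samples)]
    return statistics.fmean(preds), statistics.stdev(preds)


near = predictive(1.0, w_mean=2.0, w_std=0.1)
far = predictive(10.0, w_mean=2.0, w_std=0.1)
print("x=1.0:  mean %.2f, std %.3f" % near)
print("x=10.0: mean %.2f, std %.3f" % far)
```

The spread of the predictions grows with the size of the input, so the model reports more uncertainty at x = 10.0 than at x = 1.0. A single point estimate of w would hide this.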

-

Unsupervised Deep Learning: Unlocking Hidden Patterns

Unsupervised deep learning is a branch of machine learning that focuses on training algorithms to identify patterns and structures in unlabeled data. This approach is particularly valuable when working with large, complex datasets where manual labeling is impractical or infeasible. Unsupervised deep learning algorithms employ various techniques, including clustering, dimensionality reduction, and generative modeling, to…
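Clustering is the most approachable of the listed techniques. The sketch below implements plain k-means on a toy dataset of two well-separated groups. K-means is a classic, non-deep clustering algorithm, used here only to illustrate learning from unlabeled data. The data, `k`, and iteration count are illustrative assumptions. Some deep unsupervised methods apply the same idea to features learned by a neural network.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def kmeans(points: Sequence[Point], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    """Plain k-means: return a cluster index for each point. No labels are given as input."""
    rng = random.Random(seed)
    centroids = rng.sample(list(points), k)  # start from k distinct data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = (sum(px for px, _ in members) / len(members),
                                sum(py for _, py in members) / len(members))
    return labels


# Hypothetical unlabeled data: two groups, near (0, 0) and near (5, 5).
data = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
labels = kmeans(data, k=2)
print(labels)
```

The algorithm never sees which group a point belongs to. It recovers the two groups from distances alone, although the cluster numbers themselves (0 or 1) are arbitrary.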

-

Exploring Deep Learning with MATLAB

Deep learning is a branch of machine learning that employs multi-layered neural networks to analyze and interpret complex data. This approach has become increasingly popular due to its effectiveness in solving intricate problems across various domains, including image and speech recognition, as well as natural language processing. MATLAB, a high-level programming language and interactive environment…

-

Distributed Machine Learning: Advancing AI Across Networks

Distributed machine learning is a branch of artificial intelligence that involves training machine learning models across multiple computing devices. This approach enables parallel processing of large datasets, resulting in faster training times and the ability to handle more complex models. As datasets continue to grow in size and complexity, distributed machine learning has become increasingly…
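A common form of distributed training is data parallelism. Each worker holds a copy of the model, computes gradients on its own shard of the data, and the gradients are averaged before every update. The sketch below simulates that loop sequentially for a one-parameter linear model. The worker count, learning rate, and data are assumptions. A real system would run the workers on separate devices and average gradients with a collective operation such as all-reduce.

```python
from typing import List, Sequence, Tuple

Example = Tuple[float, float]  # (x, y)


def local_gradient(shard: Sequence[Example], w: float) -> float:
    """Gradient of the mean squared error of y ≈ w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def train_data_parallel(data: List[Example], n_workers: int,
                        lr: float = 0.01, steps: int = 200) -> float:
    # Split the dataset into one shard per worker (round-robin).
    # With equal-sized shards, averaging shard gradients equals the full-batch gradient.
    shards = [data[i::n_workers] for i in range(n_workers)]
    w = 0.0  # every worker starts from the same model copy
    for _ in range(steps):
        # In a real system these gradients are computed in parallel on separate devices.
        grads = [local_gradient(shard, w) for shard in shards]
        # Stand-in for all-reduce: average gradients so every replica applies the same update.
        w -= lr * sum(grads) / len(grads)
    return w


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # hypothetical data; true weight is 3
print(round(train_data_parallel(data, n_workers=4), 3))  # 3.0
```

Because every replica applies the same averaged update, the model copies stay identical. Each worker only ever touches a quarter of the data.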

-

Unlocking the Power of Embedded Machine Learning

Embedded machine learning integrates machine learning algorithms and models into embedded systems like IoT devices, microcontrollers, and edge devices. This technology enables these devices to make intelligent decisions and predictions independently, without constant cloud or central server connectivity. By bringing artificial intelligence capabilities to the edge, embedded machine learning allows devices to process and analyze…

-

Unlocking Sentiment Insights with Analytics Tool

Sentiment analysis, also known as opinion mining, is a computational technique used to determine the emotional tone behind written text. It employs natural language processing, text analysis, and computational linguistics to interpret attitudes, opinions, and emotions expressed in online content. This method is particularly valuable for businesses and organizations seeking to understand public perception of…
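Lexicon-based scoring is a simple way to see how text can be mapped to a sentiment label. Each known word carries a polarity, and the scores are summed. The sketch below assumes a tiny hand-made lexicon and a naive negation rule. Production tools use much larger curated lexicons or trained models, so treat this as an illustration of the idea rather than a usable analyzer.

```python
import re

# Hypothetical, hand-made lexicon for illustration only.
LEXICON = {"love": 2, "great": 2, "good": 1, "fast": 1,
           "bad": -1, "slow": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum word polarities; a negator flips the sign of the next sentiment word."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score, negate = 0, False
    for tok in tokens:
        if tok in NEGATORS:
            negate = True
        elif tok in LEXICON:
            score += -LEXICON[tok] if negate else LEXICON[tok]
            negate = False
    return score


def label(score: int) -> str:
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for post in ["I love this phone, the battery is great!",
             "Delivery was slow and support was not good.",
             "It arrived on Tuesday."]:
    print(label(sentiment_score(post)), "|", post)
```

Even this toy version shows why context matters: without the negation rule, "not good" would count as positive.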

-

Unveiling the Power of Autoencoders in Data Compression

Autoencoders are a specialized type of artificial neural network used in machine learning and artificial intelligence for data compression and representation learning. In its simplest form, the architecture has three components: an input layer, a hidden (bottleneck) layer with fewer units than the input, and an output layer. The input layer receives raw data, which is then compressed by the hidden layer and reconstructed…
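The sketch below trains a tiny linear autoencoder in plain Python. Two input values are compressed into a single hidden value and then reconstructed, and gradient descent minimizes the squared reconstruction error. The layer sizes, learning rate, initial weights, and toy dataset (points on the line y = 2x) are assumptions chosen so the example converges quickly. Practical autoencoders use nonlinear activations, more layers, and a deep learning framework.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]


def encode(enc: Sequence[float], x: Vec) -> float:
    """Input layer (2 values) -> hidden bottleneck (1 value)."""
    return enc[0] * x[0] + enc[1] * x[1]


def decode(dec: Sequence[float], z: float) -> Vec:
    """Hidden bottleneck (1 value) -> output layer (2 values)."""
    return (dec[0] * z, dec[1] * z)


def train(data: Sequence[Vec], lr: float = 0.01,
          epochs: int = 500) -> Tuple[List[float], List[float]]:
    enc, dec = [0.1, 0.1], [0.1, 0.1]  # small, fixed initial weights (an assumption)
    n = len(data)
    for _ in range(epochs):
        g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(enc, x)
            x_hat = decode(dec, z)
            r = [x_hat[i] - x[i] for i in range(2)]  # reconstruction error
            # Gradients of the mean squared reconstruction error.
            for i in range(2):
                g_dec[i] += 2 * r[i] * z / n
            dz = 2 * (r[0] * dec[0] + r[1] * dec[1])
            for j in range(2):
                g_enc[j] += dz * x[j] / n
        enc = [w - lr * g for w, g in zip(enc, g_enc)]
        dec = [w - lr * g for w, g in zip(dec, g_dec)]
    return enc, dec


# Training points all lie on the line y = 2x, so one number per point suffices.
data = [(t, 2 * t) for t in (-2.0, -1.0, 1.0, 2.0)]
enc, dec = train(data)

on_line = decode(dec, encode(enc, (3.0, 6.0)))
off_line = decode(dec, encode(enc, (3.0, -1.5)))
print(on_line)   # close to (3.0, 6.0)
print(off_line)  # far from (3.0, -1.5)
```

Because the training data varies along only one direction, new points on that line are reconstructed almost exactly. A point off the line is reconstructed poorly, which is one reason reconstruction error is used to spot unusual inputs. A linear autoencoder like this learns the same direction that principal component analysis would find.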

-

Mastering TensorFlow: A Beginner’s Guide

Artificial Intelligence (AI) has become a prominent focus in the technology sector, with TensorFlow emerging as a key driver of this advancement. Developed by Google Brain, TensorFlow is an open-source machine learning library that facilitates the creation and deployment of machine learning models. It offers a comprehensive ecosystem of tools, libraries, and community resources, enabling…

-

Mastering Deep Learning with Coursera’s AI Course

Coursera’s AI course offers a comprehensive introduction to artificial intelligence and deep learning. Led by industry experts, the curriculum covers a broad spectrum of topics, ranging from fundamental neural network concepts to advanced AI applications across various sectors. The course caters to both novices and experienced professionals seeking to enhance their AI and deep learning…

-

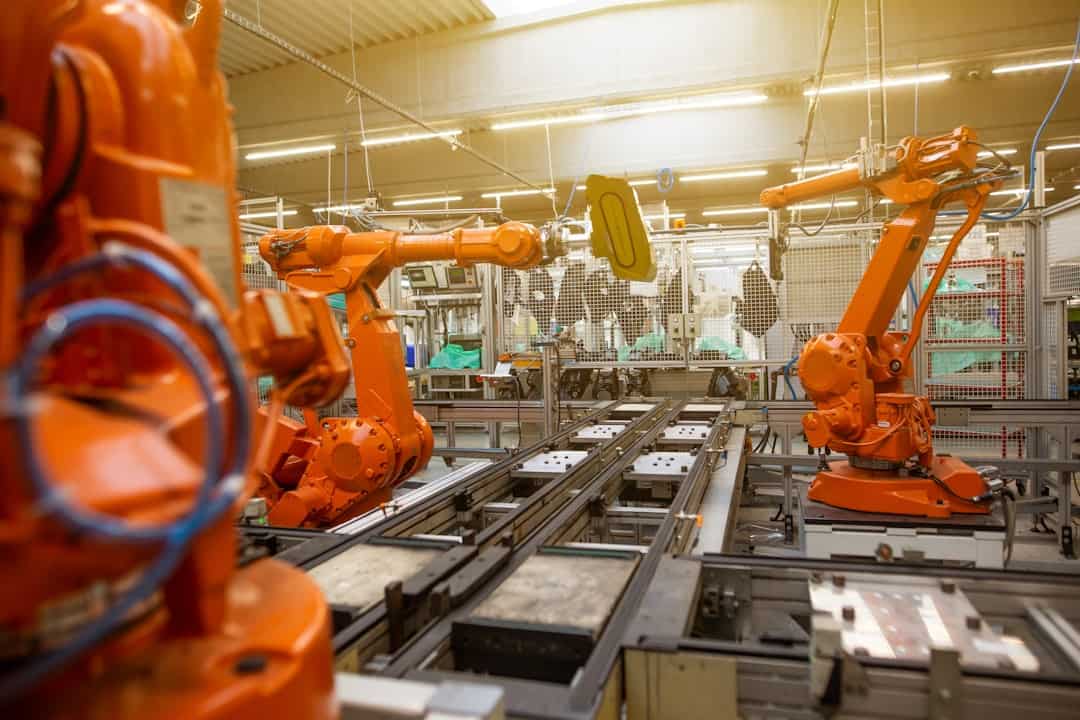

Advancing Robotics with Reinforcement Learning

Reinforcement learning is a machine learning technique in which an agent, such as a robot, learns by interacting with its environment through trial and error. It is founded on the principle of reward and punishment: the robot receives positive feedback (a reward) for actions that move it toward its goal and negative feedback (a penalty) for actions that do not, and it learns to maximize its cumulative reward over time. Through this mechanism, robots can continuously adapt and enhance their…
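Tabular Q-learning is a standard reinforcement learning algorithm that makes the reward-and-punishment loop concrete. In the sketch below, an agent in a hypothetical five-cell corridor earns +1 for reaching the goal and a small penalty for every other move. Through trial and error, it learns which direction to go. The environment, reward values, and hyperparameters are illustrative assumptions. A physical robot has far larger state spaces and typically uses function approximation instead of a table.

```python
import random
from typing import List, Tuple

N_STATES = 5          # hypothetical corridor cells 0..4; cell 4 is the goal
LEFT, RIGHT = 0, 1


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Toy environment: move one cell (walls stop you at cell 0); the goal ends the episode."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    if nxt == N_STATES - 1:
        return nxt, 1.0, True       # reward for success
    return nxt, -0.01, False        # small penalty (punishment) for every other move


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each (state, action)
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Trial and error: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((LEFT, RIGHT), key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left" for s in range(N_STATES - 1)]
print(policy)  # ['right', 'right', 'right', 'right']
```

The `epsilon` parameter controls the trial-and-error balance. With probability `epsilon` the agent tries a random action; otherwise it takes the action with the highest estimated value.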