Supervised learning is a machine learning technique that utilizes labeled training data to teach algorithms. This method involves training models on input-output pairs, enabling them to learn the relationship between inputs and corresponding outputs. The primary objective of supervised learning is to develop a function that can accurately map inputs to outputs, allowing the model to make predictions on new, unseen data.

The term “supervised” refers to the labels in the training data, which act as a supervisor by providing the correct answer for each example during training. This approach has widespread applications across various fields, including image and speech recognition, natural language processing, and numerous other domains. Supervised learning has proven to be a powerful tool for addressing complex problems and generating predictions based on historical data.

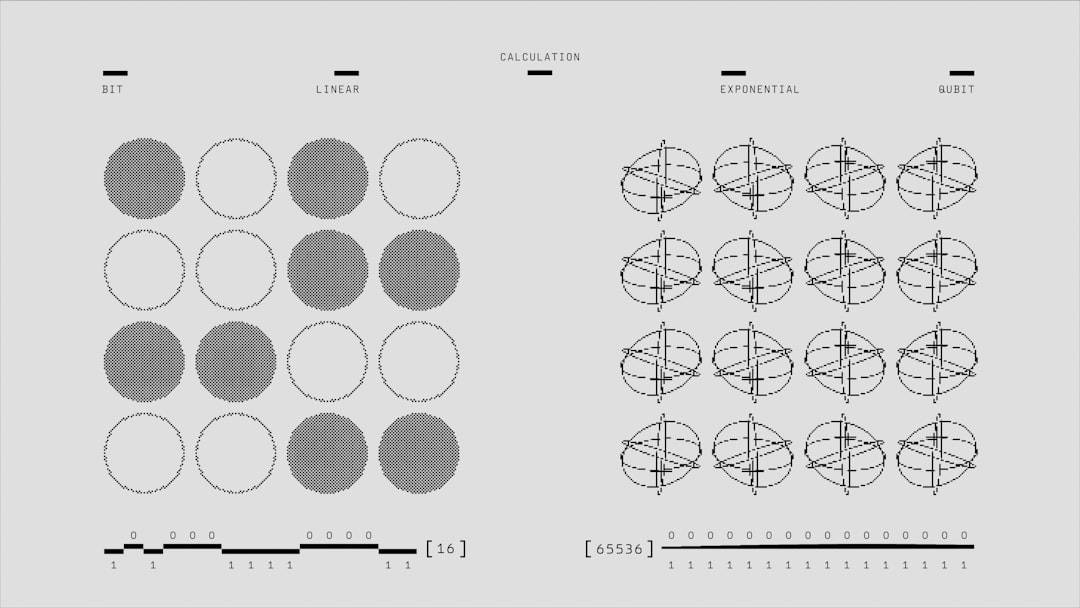

As artificial intelligence and machine learning continue to advance, supervised learning has become integral to many industries, such as healthcare, finance, and marketing. The exponential growth of data in recent years has increased the demand for effective supervised learning algorithms. These algorithms are crucial for extracting valuable insights from large datasets and facilitating informed decision-making processes.

As a result, supervised learning remains a critical area of research and development in the field of machine learning and artificial intelligence.

Key Takeaways

- Supervised learning is a type of machine learning where the model is trained on a labeled dataset to make predictions or decisions.

- Common supervised learning algorithms include linear regression, logistic regression, decision trees, random forests, and support vector machines.

- The choice of the right supervised learning model depends on the nature of the data, the problem at hand, and the trade-off between bias and variance.

- Preprocessing and feature engineering techniques such as data cleaning, normalization, and dimensionality reduction are crucial for improving the performance of supervised learning models.

- Evaluation metrics like accuracy, precision, recall, and F1 score are used to assess the performance of supervised learning models, and techniques like cross-validation and hyperparameter tuning can help improve their performance.

Understanding the Basics of Supervised Learning Algorithms

Types of Supervised Learning Algorithms

Supervised learning algorithms fall into two main types: regression and classification. Regression algorithms are used when the output variable is a continuous value, such as predicting house prices or stock prices. Classification algorithms, on the other hand, are used when the output variable is a category or label, such as classifying emails as spam or non-spam.
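To make the distinction concrete, here is a minimal sketch in plain Python. The data, the function names (`fit_line`, `fit_centroids`, `classify`), and the choice of a nearest-centroid classifier are all assumptions made for illustration, not methods prescribed by this article.

```python
# Regression: fit y = w*x + b by ordinary least squares (closed form, one feature).
def fit_line(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b

# Hypothetical labeled data: house size (square metres) -> price (thousands).
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]
w, b = fit_line(sizes, prices)
predicted_price = w * 100 + b  # a continuous value

# Classification: nearest-centroid rule on a single feature.
def fit_centroids(xs, labels):
    sums, counts = {}, {}
    for x, label in zip(xs, labels):
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(x, centroids):
    # Pick the label whose centroid is closest to x.
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Hypothetical labeled data: number of links in an email -> label.
link_counts = [0, 1, 2, 8, 9, 10]
labels = ["non-spam", "non-spam", "non-spam", "spam", "spam", "spam"]
centroids = fit_centroids(link_counts, labels)
predicted_label = classify(7, centroids)  # a category

print(predicted_price, predicted_label)
```

The regression model returns a number on a continuous scale, while the classifier returns one of a fixed set of labels. Both are learned from input-output pairs.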

Common Supervised Learning Algorithms

Some common supervised learning algorithms include linear regression, logistic regression, decision trees, random forests, support vector machines, and neural networks. Each algorithm has its own strengths and weaknesses, and the choice of algorithm depends on the specific problem at hand and the nature of the data. For example, decision trees are often used for their interpretability and ease of use, while neural networks are known for their ability to capture complex patterns in data.

Choosing the Right Algorithm

It’s important to understand the underlying principles of each algorithm in order to choose the right one for a given problem. Additionally, it’s crucial to consider factors such as the size of the dataset, the dimensionality of the input features, and the computational resources available when selecting an algorithm for a supervised learning task.

Choosing the Right Supervised Learning Model for Your Data

Choosing the right supervised learning model for a given dataset is a critical step in the machine learning process. There are several factors to consider when selecting a model, including the nature of the problem, the size and quality of the data, and the computational resources available. It’s important to evaluate different models and compare their performance on a given dataset in order to make an informed decision.

One approach to choosing the right model is to start with simple models and gradually increase the complexity if necessary. For example, one might start with a linear regression model and then move on to more complex models such as decision trees or neural networks if the linear model does not perform well. It’s also important to consider the interpretability of the model, as some models are more transparent and easier to understand than others.

Another important consideration is the trade-off between bias and variance. A model with high bias tends to underfit the data, while a model with high variance tends to overfit the data. Finding the right balance between bias and variance is crucial for building a model that generalizes well to new, unseen data.
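The trade-off can be sketched by comparing three models on the same synthetic data. Everything below is an assumption chosen for illustration: the data-generating rule (`y = 2x` plus noise), the constant predictor as a stand-in for a high-bias model, and 1-nearest-neighbour as a stand-in for a high-variance model.

```python
import random

random.seed(0)

def make_data(n):
    """Hypothetical data: y = 2x plus Gaussian noise."""
    xs = [random.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + random.gauss(0, 2) for x in xs]
    return xs, ys

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

train_x, train_y = make_data(30)
test_x, test_y = make_data(30)

# High bias: always predict the training mean and ignore x entirely.
mean_y = sum(train_y) / len(train_y)

def constant_model(x):
    return mean_y

# High variance: 1-nearest-neighbour memorises every training point, noise included.
def nearest_neighbour_model(x):
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]

# In between: a least-squares line.
mean_x = sum(train_x) / len(train_x)
slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
         / sum((x - mean_x) ** 2 for x in train_x))
intercept = mean_y - slope * mean_x

def linear_model(x):
    return slope * x + intercept

for name, model in [("constant", constant_model),
                    ("1-nearest-neighbour", nearest_neighbour_model),
                    ("linear", linear_model)]:
    print(f"{name:20s} train MSE={mse(model, train_x, train_y):7.2f}  "
          f"test MSE={mse(model, test_x, test_y):7.2f}")
```

The nearest-neighbour model reproduces the training set exactly, so its training error says little about how it will do on new data. The gap between training and test error is the signal to watch when judging whether a model underfits or overfits.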

Preprocessing and Feature Engineering for Supervised Learning

| Technique | Purpose | Example |
|---|---|---|
| Normalization | Scaling numerical features to a standard range | Scaling age and income to a range of 0-1 |
| One-Hot Encoding | Converting categorical variables into binary vectors | Converting ‘gender’ into two binary columns: ‘male’ and ‘female’ |
| Feature Selection | Choosing the most relevant features for the model | Using correlation or feature importance to select top features |
| Imputation | Filling in missing values in the dataset | Replacing missing age values with the mean age |

Preprocessing and feature engineering are essential steps in preparing data for supervised learning tasks. Preprocessing involves cleaning and transforming the raw data into a format that is suitable for training a machine learning model. This may include handling missing values, scaling numerical features, encoding categorical variables, and splitting the data into training and testing sets.
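Three of these preprocessing steps can be sketched in a few lines of plain Python. The helper names (`impute_mean`, `min_max_scale`, `one_hot`) and the sample values are hypothetical; a real project would more likely rely on a library, but the arithmetic is the same.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Encode each category as a binary vector with one position per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories

# Hypothetical raw columns.
ages = [20, None, 40, 60]
genders = ["male", "female", "female", "male"]

ages_filled = impute_mean(ages)
ages_scaled = min_max_scale(ages_filled)
genders_encoded, gender_columns = one_hot(genders)

print(ages_filled)
print(ages_scaled)
print(gender_columns, genders_encoded)
```

One design point worth noting: the mean, minimum, and maximum should be computed on the training split only and then reused on the test split, so that information from the test data does not leak into training.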

Feature engineering involves creating new features from the existing ones in order to improve the performance of the model. This may include creating interaction terms, polynomial features, or transforming existing features to better capture relationships in the data. Feature engineering requires domain knowledge and creativity, as it involves understanding the underlying patterns in the data and finding ways to represent them effectively.
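As a small illustration, the sketch below derives an interaction term, a polynomial feature, and a ratio from two raw measurements. The column names (`length_m`, `width_m`) and the derived features are hypothetical examples, chosen only to show the idea.

```python
def add_engineered_features(row):
    """Return a copy of row with derived features added.

    row is a dict with the hypothetical keys 'length_m' and 'width_m'.
    """
    length, width = row["length_m"], row["width_m"]
    return {
        **row,
        "area_m2": length * width,      # interaction term: product of two features
        "length_squared": length ** 2,  # polynomial feature
        "aspect_ratio": length / width, # ratio transform
    }

house = {"length_m": 10.0, "width_m": 5.0}
features = add_engineered_features(house)
print(features)
```

Whether a derived feature such as area helps depends on the problem; domain knowledge suggests the candidates, and evaluation on held-out data decides which ones to keep.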

In addition to preprocessing and feature engineering, it’s important to consider techniques such as dimensionality reduction and feature selection in order to reduce the complexity of the model and improve its performance. These techniques help to remove irrelevant or redundant features from the dataset, which can lead to faster training times and better generalization to new data.

Evaluating and Improving the Performance of Supervised Learning Models

Evaluating the performance of supervised learning models is crucial for understanding how well they generalize to new, unseen data. There are several metrics that can be used to evaluate model performance, including accuracy, precision, recall, F1 score, and area under the ROC curve. The choice of metric depends on the specific problem at hand and the nature of the data.
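The four threshold-based metrics follow directly from the counts of true and false positives and negatives. Here is a minimal sketch for binary classification; the function name `classification_metrics` and the example labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: 4 positives and 6 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example the accuracy is 0.7 while the recall is only 0.5, which shows why accuracy alone can be misleading when missing a positive case is costly.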

In addition to evaluating model performance using metrics, it’s important to use techniques such as cross-validation to assess how well a model generalizes to new data. Cross-validation involves splitting the data into multiple folds, then repeatedly training the model on all but one fold and evaluating it on the held-out fold, in order to obtain a more robust estimate of its performance.

Once a model has been evaluated, there are several techniques that can be used to improve its performance. These may include tuning hyperparameters, such as learning rate or regularization strength, in order to find the best configuration for the model. They may also involve collecting more data, improving feature engineering, or using ensemble methods to combine multiple models for better performance.
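Cross-validation and hyperparameter tuning are often combined: each candidate setting is scored by cross-validation and the best-scoring one is kept. The sketch below does this for the regularization strength of a one-feature ridge regression without an intercept. The model choice, the synthetic data, the candidate values, and all function names are assumptions made for illustration.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and split them into k nearly equal folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def fit_ridge(xs, ys, strength):
    """One-feature ridge regression, no intercept: w = sum(x*y) / (sum(x^2) + strength)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + strength)

def cross_val_mse(xs, ys, strength, k=5):
    """Average held-out mean squared error over k folds."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        w = fit_ridge([xs[i] for i in train], [ys[i] for i in train], strength)
        fold_mse = sum((w * xs[i] - ys[i]) ** 2 for i in held_out) / len(held_out)
        scores.append(fold_mse)
    return sum(scores) / k

# Hypothetical data: y = 3x plus Gaussian noise.
rng = random.Random(1)
xs = [rng.uniform(-1, 1) for _ in range(40)]
ys = [3 * x + rng.gauss(0, 0.5) for x in xs]

# Score each candidate regularization strength and keep the best one.
candidates = [0.0, 0.1, 1.0, 10.0, 100.0]
cv_scores = {s: cross_val_mse(xs, ys, s) for s in candidates}
best_strength = min(cv_scores, key=cv_scores.get)

for s in candidates:
    print(f"strength={s:6.1f}  cross-validated MSE={cv_scores[s]:.3f}")
print("selected:", best_strength)
```

Because every sample is held out exactly once, the score for each candidate uses all of the data for evaluation without ever testing a model on points it was trained on.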

Advanced Techniques and Strategies for Supervised Learning

Transfer Learning

Beyond the core workflow, several advanced techniques can extend what supervised learning achieves. One is transfer learning, which involves taking a model pre-trained on a large dataset and fine-tuning it for a specific task. Transfer learning can be especially useful when working with limited labeled data or when training deep learning models.

Ensemble Learning

Another advanced strategy is ensemble learning, which involves combining multiple models in order to improve predictive performance. Common techniques include bagging, which mainly reduces variance by averaging models trained on resampled data; boosting, which mainly reduces bias by training models sequentially on the errors of their predecessors; and stacking, which trains a further model to combine the predictions of the others.
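Bagging is the simplest of these to sketch: train the same kind of model on several bootstrap samples and take a majority vote. The example below uses one-feature decision stumps as the base model. The stump rule, the data, and the function names are assumptions for illustration only.

```python
import random
from collections import Counter

def fit_stump(xs, labels):
    """Find the threshold t with the fewest training errors for the rule: 1 if x > t else 0."""
    best_t, best_errors = None, None
    for t in sorted(set(xs)):
        errors = sum((1 if x > t else 0) != y for x, y in zip(xs, labels))
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def fit_bagged_stumps(xs, labels, n_models=25, seed=0):
    """Train one stump per bootstrap sample (n draws with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    thresholds = []
    for _ in range(n_models):
        sample = [rng.randrange(n) for _ in range(n)]
        thresholds.append(fit_stump([xs[i] for i in sample],
                                    [labels[i] for i in sample]))
    return thresholds

def predict_bagged(x, thresholds):
    """Aggregate the stumps' predictions by majority vote."""
    votes = Counter(1 if x > t else 0 for t in thresholds)
    return votes.most_common(1)[0][0]

# Hypothetical one-feature dataset with a class boundary between 4 and 6.
xs = [1, 2, 3, 4, 6, 7, 8, 9]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
thresholds = fit_bagged_stumps(xs, labels)

print(sorted(thresholds))
print(predict_bagged(0, thresholds), predict_bagged(10, thresholds))
```

Each stump sees a slightly different sample and so picks a slightly different threshold; the vote smooths out those differences. An odd number of models avoids tied votes in the binary case.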

Dealing with Limited Labeled Data

Furthermore, techniques such as semi-supervised learning and active learning can be used when labeled data is scarce or expensive to obtain. Semi-supervised learning leverages both labeled and unlabeled data to train a model, while active learning involves iteratively selecting the most informative samples for labeling in order to improve model performance.
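One common way to pick "the most informative samples" in active learning is uncertainty sampling: ask for labels on the examples the current model is least sure about. The sketch below assumes a binary classifier that outputs a probability for the positive class; the probabilities and the function name `select_most_uncertain` are hypothetical.

```python
def select_most_uncertain(probabilities, batch_size):
    """Return the indices of the samples whose predicted P(class = 1) is closest to 0.5."""
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: abs(probabilities[i] - 0.5))
    return ranked[:batch_size]

# Hypothetical model outputs for five unlabeled samples.
unlabeled_probs = [0.98, 0.52, 0.10, 0.45, 0.70]
to_label = select_most_uncertain(unlabeled_probs, batch_size=2)
print(to_label)
```

In a full loop, the selected samples would be sent to a human annotator, added to the labeled set, and the model retrained before the next round of selection.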

Real-World Applications and Case Studies of Supervised Learning in AI

Supervised learning has numerous real-world applications across various industries. In healthcare, it can be used for predicting patient outcomes, diagnosing diseases from medical images, or personalizing treatment plans based on patient data. In finance, supervised learning can be used for credit scoring, fraud detection, or stock price prediction. In marketing, it can be used for customer segmentation, churn prediction, or recommendation systems.

One notable case study of supervised learning in AI is its application in autonomous vehicles. Supervised learning algorithms can be used for detecting objects on the road, predicting pedestrian behavior, or recognizing traffic signs and signals. These applications require large amounts of labeled data and sophisticated models in order to ensure safety and reliability.

Another case study is the use of supervised learning in natural language processing applications such as language translation, sentiment analysis, or chatbots. Supervised learning models can be trained on large corpora of text data in order to understand language patterns and make accurate predictions on new text inputs.

In conclusion, supervised learning is a powerful approach for solving complex problems and making predictions based on historical data. By understanding the basics of supervised learning algorithms, choosing the right model for a given dataset, preprocessing and feature engineering the data effectively, evaluating and improving model performance, leveraging advanced techniques and strategies, and exploring real-world applications and case studies, it’s possible to harness the full potential of supervised learning in AI across various domains.


FAQs

What is supervised learning?

Supervised learning is a type of machine learning where the algorithm is trained on a labeled dataset, meaning that the input data is paired with the correct output. The algorithm learns to make predictions or decisions based on the input data.

How does supervised learning work?

During training, the algorithm makes a prediction for each input, compares it with the correct output, and typically adjusts its internal parameters to reduce the difference. Repeating this over the labeled dataset lets it learn the patterns that map inputs to outputs, which it can then apply to new data.

What are some common algorithms used in supervised learning?

Some common algorithms used in supervised learning include linear regression, logistic regression, decision trees, random forests, support vector machines, and neural networks.

What are some applications of supervised learning?

Supervised learning is used in a wide range of applications, including image and speech recognition, natural language processing, recommendation systems, and predictive modeling in fields such as finance, healthcare, and marketing.

What are the advantages of supervised learning?

Some advantages of supervised learning include the ability to make accurate predictions or decisions, the ability to handle complex tasks, and the ability to learn from large amounts of data.

What are the limitations of supervised learning?

Some limitations of supervised learning include the need for labeled data, which can be expensive to obtain, the potential for overfitting, and poor performance on new data that differs substantially from the data the model was trained on.
