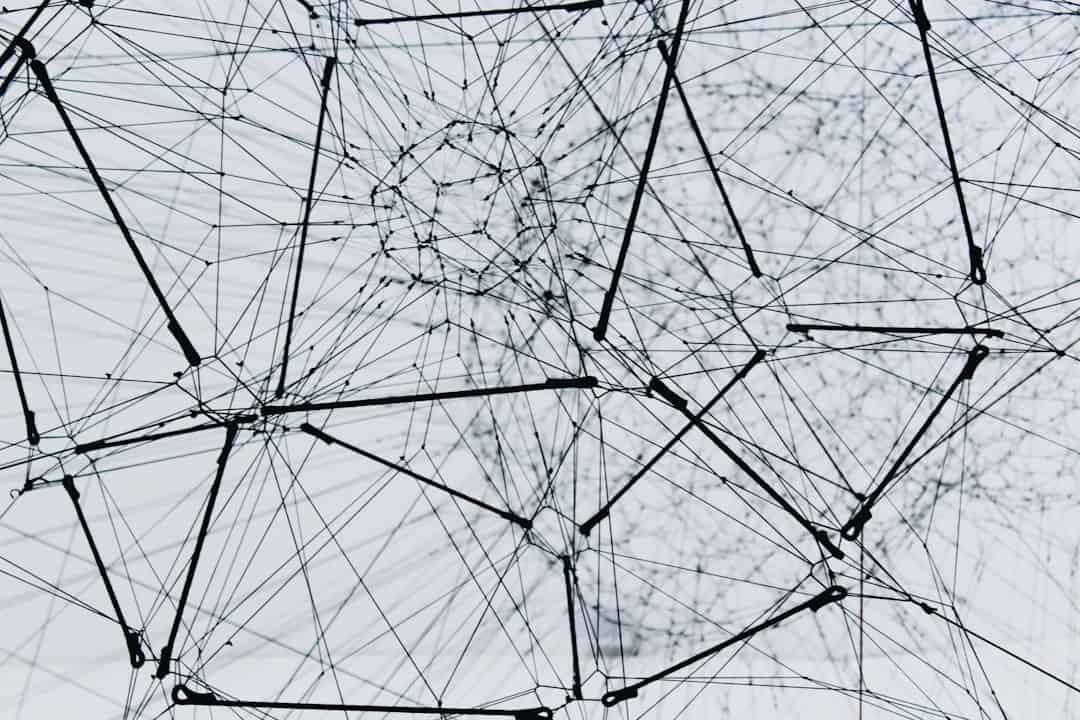

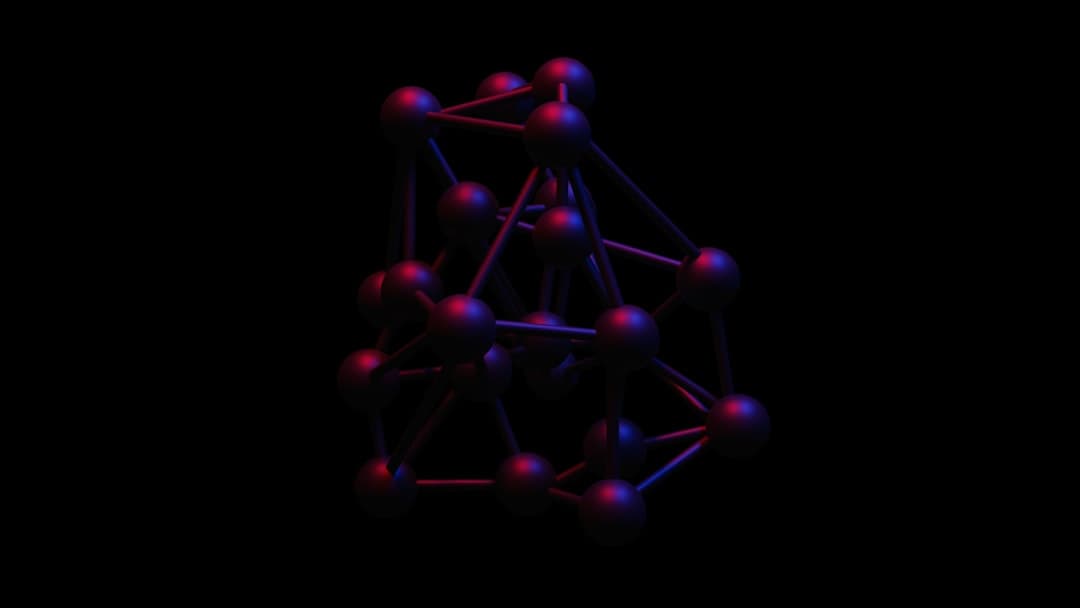

Convolutional Neural Networks (CNNs) are a class of deep learning model designed for processing and analyzing grid-structured data, most commonly images. A CNN is built from a stack of layers, each serving a specific purpose in image recognition. The main layer types are convolutional layers, pooling layers, activation functions, fully connected layers, and dropout layers.

Each layer contributes to the network’s ability to identify and classify images. Convolutional layers are a key component of CNNs and are responsible for detecting features within an image. They slide small filters across the input image to identify patterns such as edges, textures, and shapes.

By applying these filters across the entire image, convolutional layers generate feature maps that highlight the presence of specific visual elements. This process enables the CNN to construct a hierarchical representation of the input image, with lower layers detecting simple features and higher layers identifying more complex patterns. The convolutional layers are fundamental to the CNN’s ability to interpret and understand visual data.

Key Takeaways

- A CNN processes visual data through a stack of layers: convolutional, pooling, activation, fully connected, and dropout layers.

- Convolutional layers apply learned filters to the input data to extract features and patterns, enabling the network to learn complex visual information.

- Pooling layers reduce the spatial dimensions of feature maps, keeping the most salient information while lowering computational cost and helping to control overfitting.

- Activation functions, such as ReLU and sigmoid, introduce non-linearity into the network, enabling it to learn and model complex relationships within the data.

- Fully connected layers connect all neurons in one layer to those in the next layer, allowing the network to make predictions based on the learned features and patterns.

- Dropout layers are used to prevent overfitting by randomly deactivating a certain percentage of neurons during training, forcing the network to learn more robust and generalizable features.

- Combining these layers gives CNNs the image recognition capabilities used in real-world applications such as object detection, facial recognition, and scene understanding.

Exploring the Role of Convolutional Layers in CNN

The convolutional layers in a CNN are essential for capturing the spatial hierarchies present in an image. These layers use an operation known as convolution to apply a set of filters to the input image. Each filter is a small grid of weights that is learned during training and responds to a specific feature, such as a horizontal edge or a diagonal texture.
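To make the sliding-filter idea concrete, here is a minimal pure-Python sketch of a single-channel convolution with stride 1 and no padding. The image, the filter values, and the `conv2d` helper are hypothetical and chosen by hand for illustration; in a real CNN the filter weights are learned. Like most deep learning libraries, the sketch computes cross-correlation (the kernel is not flipped).

```python
# Minimal single-channel 2D convolution: stride 1, no padding ("valid").
# Like most deep learning libraries, it computes cross-correlation,
# i.e. the kernel is slid over the image without being flipped.

def conv2d(image, kernel):
    """Return the feature map produced by sliding `kernel` over `image`."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    feature_map = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            # Weighted sum of the image patch currently under the kernel
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# Hypothetical 6x5 image: dark top half, bright bottom half
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9],
]

# Hand-picked horizontal-edge filter (a trained CNN learns its own weights)
kernel = [
    [-1, -1, -1],
    [ 0,  0,  0],
    [ 1,  1,  1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The filter produces a strong response (27) only where its 3×3 window straddles the dark-to-bright boundary and outputs 0 over the uniform regions, which is what the feature map of a horizontal-edge detector should look like.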

By sliding the filters across the entire input image, the convolutional layers produce feature maps that highlight where these features occur. Stacking such layers lets the CNN gradually build up a hierarchical representation of the input image, with lower layers detecting simple features and higher layers detecting more complex patterns.

Convolutional layers can also reduce the spatial dimensions of the data. A stride greater than 1 moves the filter more than one pixel at a time and therefore yields a smaller feature map; separate pooling layers, described in the next section, downsample further. Shrinking the feature maps reduces computation and lets later layers focus on the most relevant visual elements rather than fine pixel-level detail. Overall, convolutional layers are the core of the CNN’s ability to process and analyze visual data.
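The effect of stride on output size follows from a standard formula: for an input of width n, kernel size k, stride s, and zero-padding p on each side, the output width is floor((n + 2p - k) / s) + 1. Padding is not discussed in this article and appears here only as an optional parameter; `conv_output_size` is a hypothetical helper name for this sketch.

```python
def conv_output_size(n, kernel_size, stride=1, padding=0):
    """Spatial output length of a convolution along one dimension.

    Implements floor((n + 2*padding - kernel_size) / stride) + 1.
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return (n + 2 * padding - kernel_size) // stride + 1

# A 32-pixel-wide input convolved with a 3x3 filter
print(conv_output_size(32, 3))                       # stride 1, no padding
print(conv_output_size(32, 3, stride=2))             # stride 2 roughly halves the width
print(conv_output_size(32, 3, stride=1, padding=1))  # padding 1 keeps the width at 32
```

Going from stride 1 to stride 2 shrinks the output from 30 to 15 positions, which is how a strided convolution downsamples without a separate pooling layer.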

Unraveling the Power of Pooling Layers in CNN

Pooling layers are a standard component of convolutional neural networks (CNNs) that reduce the size of feature maps while retaining the important information. Their primary function is to downsample the feature maps generated by the convolutional layers, shrinking their spatial dimensions. This makes the CNN more computationally efficient and makes the learned features less sensitive to small shifts in the input, which can help the network generalize and recognize patterns in visual data.

There are several types of pooling layers commonly used in CNNs, including max pooling and average pooling. Max pooling works by selecting the maximum value from each subregion of the feature map, effectively highlighting the most prominent features present in the data. On the other hand, average pooling calculates the average value from each subregion, providing a more generalized representation of the features.
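A minimal sketch of both pooling variants on a single 2D feature map, assuming the common configuration of a 2×2 window with stride 2 (non-overlapping windows). The `pool2d` helper and the sample values are illustrative.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Downsample a 2D feature map with max or average pooling."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            # Collect every value in the current size x size window
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            if mode == "max":
                row.append(max(window))                 # strongest response
            elif mode == "avg":
                row.append(sum(window) / len(window))   # mean response
            else:
                raise ValueError(f"unknown mode: {mode}")
        out.append(row)
    return out

fm = [
    [1, 3, 2, 0],
    [5, 6, 1, 2],
    [0, 2, 4, 8],
    [1, 1, 3, 5],
]
print(pool2d(fm, mode="max"))  # [[6, 2], [2, 8]]
print(pool2d(fm, mode="avg"))  # [[3.75, 1.25], [1.0, 5.0]]
```

Each 2×2 window collapses to a single number, so the 4×4 map becomes 2×2: max pooling keeps the strongest value in each window, while average pooling blends all four.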

Both types of pooling layers help to reduce the dimensionality of the data and extract important information from the feature maps, ultimately improving the performance of the CNN in image recognition tasks.

Leveraging the Benefits of Activation Functions in CNN Layers

| Activation Function | Advantages | Disadvantages |
|---|---|---|
| ReLU (Rectified Linear Unit) | Fast convergence; mitigates the vanishing gradient problem | Outputs zero (with zero gradient) for negative inputs, so neurons can "die" |
| Sigmoid | Output between 0 and 1; smooth gradient | Vanishing gradient for very large or very small inputs |
| Tanh | Output between -1 and 1; zero-centered | Vanishing gradient for very large or very small inputs |
| Leaky ReLU | Avoids the dying ReLU problem | Slightly more complex than ReLU (adds a negative-slope hyperparameter) |

Activation functions are a critical component of convolutional neural networks (CNNs) because they introduce non-linearity into the network. Non-linear activations let CNNs learn complex patterns and relationships within visual data and form more sophisticated decision boundaries. Without them, a stack of layers would collapse into a single linear transformation, since a composition of linear maps is itself linear, so the network could model only linear relationships, severely restricting its ability to classify and recognize images.
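To see why stacking layers without activation functions yields only linear relationships, consider two layers with weight matrices $W_1, W_2$ and bias vectors $b_1, b_2$ (notation introduced here for illustration) applied to an input $x$ with no activation in between:

$$
W_2 (W_1 x + b_1) + b_2 = (W_2 W_1)\,x + (W_2 b_1 + b_2) = W' x + b', \qquad W' = W_2 W_1,\; b' = W_2 b_1 + b_2
$$

The two layers are equivalent to one layer with weights $W'$ and bias $b'$. Convolution is also a linear operation, so the same argument applies to stacked convolutional layers with no activation between them.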

Commonly used activation functions in CNNs include ReLU (Rectified Linear Unit), sigmoid, and tanh (hyperbolic tangent). ReLU is among the most widely used because it is cheap to compute and, unlike sigmoid and tanh, does not saturate for positive inputs, which helps combat the vanishing gradient problem. Sigmoid and tanh still appear in specific roles: sigmoid is typical in the output layer for binary classification because it maps any value into the range 0 to 1, and tanh is used when bounded, zero-centered outputs between -1 and 1 are needed.
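The activation functions in the table above can each be written in a few lines. This sketch works on plain Python floats; the leaky ReLU slope of 0.01 is a common default but is an assumption here. The derivative helpers show where gradients vanish: the sigmoid's gradient shrinks toward zero for large |x|, while ReLU's gradient stays at 1 for any positive input.

```python
import math

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    # alpha is the slope used for negative inputs (0.01 assumed here)
    return x if x > 0 else alpha * x

def sigmoid(x):
    # Numerically stable form: avoids overflow in math.exp for large |x|
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def tanh(x):
    return math.tanh(x)

# Derivatives, to show where gradients vanish
def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def relu_grad(x):
    return 1.0 if x > 0 else 0.0

for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"x={x:6.1f}  relu={relu(x):5.2f}  leaky={leaky_relu(x):6.2f}  "
          f"sigmoid={sigmoid(x):.4f}  tanh={tanh(x):+.4f}  "
          f"sigmoid'={sigmoid_grad(x):.6f}  relu'={relu_grad(x):.0f}")
```

At x = 10 the sigmoid's gradient is already below 0.0001, while ReLU's is still 1; multiplied across many layers during backpropagation, that difference is what makes ReLU easier to train in deep CNNs.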

Overall, activation functions are essential for enabling CNNs to learn complex patterns and make accurate predictions about visual data.

Harnessing the Potential of Fully Connected Layers in CNN

Fully connected layers typically sit at the end of a convolutional neural network (CNN) and process the feature maps generated by the convolutional and pooling layers, after those maps have been flattened into a single vector. These layers take the output of the previous layers and use it to make predictions about the input data. By connecting every neuron in one layer to every neuron in the next layer, fully connected layers enable CNNs to learn complex relationships and make high-level decisions about visual data.

The fully connected layers in a CNN typically consist of one or more hidden layers followed by an output layer. The hidden layers use activation functions to introduce non-linearity into the network and learn complex patterns within the feature maps. The output layer then uses these learned patterns to make predictions about the input data, such as classifying an image into different categories.
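The flatten-then-dense step can be sketched as follows. The two pooled feature maps, the weights, and the biases are hypothetical values picked by hand (a trained network learns them). The output layer uses softmax to turn raw scores into class probabilities; softmax is a common choice for multi-class classification but is an assumption here, since the text does not name one. Hidden layers are omitted for brevity; each would be another `dense` call followed by an activation such as ReLU.

```python
import math

def flatten(feature_maps):
    """Turn a list of 2D feature maps into one flat feature vector."""
    return [v for fm in feature_maps for row in fm for v in row]

def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input."""
    return [sum(w * x for w, x in zip(w_row, inputs)) + b
            for w_row, b in zip(weights, biases)]

def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical input: two 2x2 pooled feature maps -> 8 features
pooled = [[[6, 2], [2, 8]], [[1, 0], [3, 4]]]
x = flatten(pooled)

# Hypothetical weights and biases for 3 output classes
W = [
    [0.1, 0.0, 0.0, 0.2, 0.0, 0.0, 0.1, 0.0],
    [0.0, 0.1, 0.1, 0.0, 0.2, 0.0, 0.0, 0.1],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.3, 0.0, 0.0],
]
b = [0.0, 0.1, -0.1]

probs = softmax(dense(x, W, b))
predicted_class = probs.index(max(probs))
print([round(p, 3) for p in probs], predicted_class)
```

The class with the highest probability is taken as the network's prediction; with these hand-set weights that is class 0.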

Overall, fully connected layers are essential for enabling CNNs to learn from visual data and make accurate predictions about the content of images.

Examining the Impact of Dropout Layers in CNN

Dropout layers are a powerful tool for preventing overfitting in convolutional neural networks (CNN) by randomly deactivating a certain percentage of neurons during training. Overfitting occurs when a CNN becomes too specialized on the training data and performs poorly on new, unseen data. Dropout layers help to combat overfitting by forcing neurons to become more independent and robust, ultimately improving the generalization capabilities of the network.

On each training forward pass, a dropout layer sets a randomly chosen fraction of its inputs to zero; this fraction, often called the dropout rate, is a hyperparameter. Because no neuron can count on any particular other neuron being present, the remaining neurons must learn more independent and robust features, which prevents co-adaptation between neurons and reduces overfitting. At inference time dropout is switched off and all neurons are used, which improves the CNN's performance on new, unseen data.
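Below is a minimal sketch of dropout applied to a list of activations. It uses the "inverted dropout" formulation common in modern frameworks, in which surviving activations are scaled by 1 / (1 - p) during training so that nothing has to change at inference time; that scaling detail is an assumption beyond the description above. The dropout rate `p = 0.5` and the `dropout` helper are illustrative.

```python
import random

def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p during training.

    Survivors are scaled by 1 / (1 - p) so their expected value matches
    inference, when dropout is disabled and inputs pass through unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # seeded so the random mask is reproducible
acts = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(dropout(acts, p=0.5, rng=rng))         # random mask, survivors doubled
print(dropout(acts, p=0.5, training=False))  # unchanged at inference
```

Because a different random mask is drawn on every call, each training step effectively trains a slightly different sub-network, which is the source of dropout's regularizing effect.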

Overall, dropout layers are a simple and widely used way to improve the generalization of CNNs and prevent overfitting.

Integrating CNN Layers for Advanced Image Recognition in AI applications

In advanced image recognition applications, convolutional neural networks (CNN) leverage multiple layers to effectively process and analyze visual data. By integrating convolutional, pooling, activation, fully connected, and dropout layers, CNNs are able to learn complex patterns within images and make accurate predictions about their content. This integration enables CNNs to excel at tasks such as object detection, facial recognition, and scene understanding, making them an essential tool for AI applications that rely on visual data.

By combining these different types of layers, CNNs are able to gradually build up a hierarchical representation of visual data, starting from simple features such as edges and textures and progressing to more complex patterns and relationships within images. This hierarchical approach enables CNNs to effectively capture spatial hierarchies present in images and make high-level decisions about their content. Overall, integrating multiple layers within CNNs is essential for enabling advanced image recognition capabilities in AI applications.
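To show how the layers fit together, this self-contained sketch runs one forward pass through a toy CNN: convolution with two hand-set filters, ReLU, 2×2 max pooling, flattening, and a fully connected output layer with softmax. Everything here is hypothetical: the 6×6 image, the filter and dense weights, and the two class labels. Dropout is left out because it is only active during training.

```python
import math

def conv2d(img, k):
    """Valid cross-correlation of a single-channel image with kernel k."""
    kh, kw = len(k), len(k[0])
    return [[sum(img[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(len(img[0]) - kw + 1)]
            for i in range(len(img) - kh + 1)]

def relu_map(fm):
    """Apply ReLU element-wise to a 2D feature map."""
    return [[max(0, v) for v in row] for row in fm]

def max_pool(fm, size=2):
    """Non-overlapping max pooling (stride equals the window size)."""
    return [[max(fm[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fm[0]) - size + 1, size)]
            for i in range(0, len(fm) - size + 1, size)]

def flatten(maps):
    return [v for fm in maps for row in fm for v in row]

def dense(x, W, b):
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

# Hypothetical 6x6 input: dark left half, bright right half (a vertical edge)
image = [[0, 0, 0, 9, 9, 9] for _ in range(6)]

# Two hand-set filters; a trained CNN would learn these weights
filters = {
    "vertical_edge":   [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],
    "horizontal_edge": [[-1, -1, -1], [0, 0, 0], [1, 1, 1]],
}

# Convolution -> activation -> pooling, once per filter
pooled = [max_pool(relu_map(conv2d(image, k))) for k in filters.values()]
features = flatten(pooled)  # 2 maps x (2x2) = 8 features

# Fully connected output layer with hypothetical weights for two classes
W = [[0.05] * 4 + [0.0] * 4,   # class 0: "contains a vertical edge"
     [0.0] * 4 + [0.05] * 4]   # class 1: "contains a horizontal edge"
b = [0.0, 0.0]
probs = softmax(dense(features, W, b))
predicted = probs.index(max(probs))
print(features, predicted)
```

The vertical-edge filter fires along the boundary and its response survives ReLU and pooling, while the horizontal-edge filter produces an all-zero map, so the output layer assigns the highest probability to class 0.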

In conclusion, understanding the role of each layer within a convolutional neural network is essential for grasping how these networks process and analyze visual data. From detecting features with convolutional layers to reducing dimensionality with pooling layers, introducing non-linearity with activation functions, learning complex relationships with fully connected layers, and preventing overfitting with dropout layers, each type of layer plays a crucial role in enabling CNNs to excel at image recognition tasks. By integrating these different types of layers, CNNs are able to effectively process visual data and make accurate predictions about its content, making them an essential tool for advanced image recognition in AI applications.


FAQs

What are CNN layers?

A CNN (Convolutional Neural Network) is a type of deep learning model commonly used in image recognition and classification tasks, and CNN layers are its building blocks. They include convolutional layers, pooling layers, and fully connected layers.

What is the purpose of CNN layers?

The purpose of CNN layers is to extract features from input images and learn to recognize patterns and objects within the images. This makes CNNs well-suited for tasks such as image classification, object detection, and image segmentation.

How do CNN layers work?

CNN layers work by applying a series of mathematical operations, such as convolution and pooling, to the input image data. These operations help the network to learn and extract features from the images, which are then used for classification or other tasks.

What are the different types of CNN layers?

The main types of CNN layers include convolutional layers, which apply filters to the input image to extract features, pooling layers, which reduce the spatial dimensions of the feature maps, and fully connected layers, which perform the final classification based on the extracted features.

What are some applications of CNN layers?

CNN layers are widely used in various applications, including image recognition, facial recognition, medical image analysis, autonomous vehicles, and more. They have also been applied to tasks such as natural language processing and speech recognition.
