Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to address the difficulty traditional RNNs have in learning long-term dependencies in sequential data. Introduced by Hochreiter and Schmidhuber in 1997, LSTMs have been widely adopted in artificial intelligence (AI) applications that process sequential data such as text, audio, and video, including natural language processing, speech recognition, and time series prediction.

Unlike traditional RNNs, which suffer from the vanishing gradient problem on long sequences, LSTMs can retain and use information over extended periods. This capability makes them effective for modeling complex temporal dynamics and has led to their integration into many AI systems across multiple domains.

This article will explore the architecture of LSTM networks, their applications in AI, techniques for training and fine-tuning to enhance performance, challenges and limitations associated with LSTMs, and potential future developments and innovations in LSTM networks and AI.

Key Takeaways

- LSTM networks are a type of recurrent neural network (RNN) designed to overcome the limitations of traditional RNNs in capturing long-term dependencies in sequential data.

- The architecture of LSTM networks consists of a cell state, input gate, forget gate, and output gate, allowing them to effectively retain and forget information over long sequences.

- LSTM networks have found applications in various AI tasks such as natural language processing, speech recognition, time series prediction, and sentiment analysis.

- Training and fine-tuning LSTM networks involve techniques such as gradient clipping, dropout, and hyperparameter tuning to improve their performance on specific tasks.

- Challenges and limitations of LSTM networks include vanishing and exploding gradients in deep architectures, overfitting, computational cost, and difficulty in capturing very long-term dependencies; these can be mitigated through techniques like gradient clipping, regularization, and architectural modifications.

Understanding the Architecture of LSTM Networks

Cell State and Gates

The cell state serves as the “memory” of the network, responsible for retaining and passing information across time steps. The input gate controls how much new information flows into the cell state, while the forget gate controls how much of the existing cell state is retained or discarded. Finally, the output gate determines how much of the cell state is exposed as the hidden state, which serves as the output at the current time step and is passed on to the next one.
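
To make the roles of the gates concrete, here is a minimal sketch of a single LSTM time step in plain Python. It assumes the standard formulation (sigmoid gates, a tanh candidate, no peephole connections); the helper names `init_params`, `affine`, and `lstm_step` and the weight layout are illustrative choices, not taken from any particular library.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W[j], x))
        + sum(u * hi for u, hi in zip(U[j], h))
        + b[j]
        for j in range(len(b))
    ]

def init_params(input_size, hidden_size, seed=0):
    """Small random weights for the input (i), forget (f), candidate (g),
    and output (o) transforms. Each entry is (W, U, b)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        k: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for k in "ifgo"
    }

def lstm_step(params, x, h_prev, c_prev):
    """Advance the cell by one time step and return (h, c)."""
    i = [sigmoid(v) for v in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(v) for v in affine(*params["f"], x, h_prev)]    # forget gate
    g = [math.tanh(v) for v in affine(*params["g"], x, h_prev)]  # candidate values
    o = [sigmoid(v) for v in affine(*params["o"], x, h_prev)]    # output gate
    # Keep part of the old memory, add part of the new candidate.
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    # Expose a gated, squashed view of the memory as the hidden state.
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

params = init_params(input_size=3, hidden_size=2)
h, c = [0.0, 0.0], [0.0, 0.0]
for x in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]:
    h, c = lstm_step(params, x, h, c)
```

The cell state `c` is only ever scaled by the forget gate and added to, which is what lets information persist across many steps; the hidden state `h` is recomputed from it at every step.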

Capturing Long-term Dependencies

This architecture enables LSTMs to effectively capture long-term dependencies in sequential data by selectively retaining or discarding information over time. The use of gates allows LSTMs to learn when to update the cell state and when to forget previous information, making them well-suited for tasks that require modeling complex temporal relationships.
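
A rough numerical illustration of why this helps: when a gradient is propagated back through many time steps, it is multiplied by a per-step factor. The sketch below is a deliberate simplification that treats this factor as a constant scalar. For the LSTM case it uses the fact that, along the direct cell-state path, the factor is the forget gate value; the numbers 0.5 and 0.99 are assumed for illustration only.

```python
def gradient_scale(factor, steps):
    """Magnitude of a gradient after being multiplied by `factor` at each step."""
    scale = 1.0
    for _ in range(steps):
        scale *= factor
    return scale

steps = 100
rnn_like = gradient_scale(0.5, steps)    # per-step factor well below 1
lstm_like = gradient_scale(0.99, steps)  # forget gate held close to 1

# Roughly 7.9e-31 versus 0.366: the first signal has effectively vanished.
print(f"{rnn_like:.3e}  {lstm_like:.3f}")
```

Because the forget gate is learned, the network can keep it close to 1 for information that should persist and push it toward 0 for information that should be dropped.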

Deeper Architectures and Applications

LSTMs can also be stacked to create deeper architectures, in which the hidden-state sequence produced by one layer becomes the input sequence of the next, allowing for more complex representations of sequential data. In summary, memory cells and gates let LSTMs retain and use information over long sequences, which addresses the main limitation of traditional RNNs and makes them a powerful tool for modeling complex temporal dynamics in AI applications.
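
Stacking can be sketched as follows, using an LSTM with a single hidden unit per layer to keep the code short. The function names and the hand-picked weights are hypothetical and exist only to show the data flow between layers.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def scalar_lstm_step(w, x, h_prev, c_prev):
    """One step of an LSTM with a single hidden unit.
    `w` maps each gate name to (input weight, recurrent weight, bias)."""
    def pre(k):
        wx, wh, b = w[k]
        return wx * x + wh * h_prev + b

    i, f, o = sigmoid(pre("i")), sigmoid(pre("f")), sigmoid(pre("o"))
    g = math.tanh(pre("g"))
    c = f * c_prev + i * g
    return o * math.tanh(c), c

def run_stack(layers, xs):
    """Run a sequence through stacked layers: the hidden-state sequence
    produced by one layer is the input sequence of the next."""
    seq = xs
    for w in layers:
        h, c, out = 0.0, 0.0, []
        for x in seq:
            h, c = scalar_lstm_step(w, x, h, c)
            out.append(h)
        seq = out
    return seq

# Hypothetical hand-picked weights, for illustration only.
layer1 = {"i": (0.5, 0.1, 0.0), "f": (0.3, 0.2, 1.0), "g": (0.8, -0.4, 0.0), "o": (0.6, 0.1, 0.0)}
layer2 = {"i": (1.2, 0.3, 0.0), "f": (0.1, 0.1, 1.0), "g": (1.5, 0.2, 0.0), "o": (0.9, -0.2, 0.0)}
top_outputs = run_stack([layer1, layer2], [1.0, -1.0, 0.5, 0.0])
```

Each layer keeps its own hidden and cell state, and only the top layer's outputs are returned.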

Applications of LSTM Networks in Artificial Intelligence (AI)

LSTM networks are widely used across artificial intelligence because they model sequential data well. One of the most prominent applications is natural language processing (NLP), where they are used for tasks such as language modeling, machine translation, and sentiment analysis. By capturing long-range dependencies in text, LSTMs have been shown to outperform traditional models such as plain RNNs on many of these tasks.

In addition to NLP, LSTMs are widely used in speech recognition systems, where they process audio signals and transcribe spoken language into text. By capturing temporal dependencies in audio data, LSTMs have improved the accuracy and robustness of speech recognition, making them a common component of voice interfaces and virtual assistants.

LSTMs have also been applied to time series prediction tasks in finance, healthcare, and environmental monitoring, where they analyze historical data and capture complex temporal patterns to forecast future trends and events. Overall, the applications of LSTM networks in AI span NLP, speech recognition, time series prediction, and many other domains that depend on modeling sequential data.

Training and Fine-tuning LSTM Networks for Improved Performance

| Metric | Before Fine-tuning | After Fine-tuning |
|---|---|---|
| Accuracy | 0.85 | 0.92 |
| Loss | 0.45 | 0.32 |
| Validation Accuracy | 0.82 | 0.90 |
| Validation Loss | 0.48 | 0.35 |

Training and fine-tuning LSTM networks is a crucial aspect of building high-performing AI systems. The process typically involves feeding sequential data into the network, computing gradients through backpropagation, and updating the parameters with an optimizer such as gradient descent, usually combined with regularization. In sequence generation tasks, a common technique is teacher forcing, in which the ground-truth output from the previous time step, rather than the network's own prediction, is fed as the next input during training.
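
The difference between training with and without teacher forcing can be sketched with a toy one-step predictor. The `imperfect_step` function below is a hypothetical stand-in for a trained network, chosen so that its per-step error is easy to follow; it is not an LSTM.

```python
def decode(step_fn, first_input, targets, teacher_forcing=True):
    """Unroll a one-step predictor over a target sequence.
    With teacher forcing, the input at step t is the ground-truth value from
    step t-1; without it, the input is the model's own previous prediction."""
    preds, inp = [], first_input
    for target in targets:
        pred = step_fn(inp)
        preds.append(pred)
        inp = target if teacher_forcing else pred
    return preds

def imperfect_step(x):
    """Hypothetical model: predicts input + 0.9 when the true sequence
    increases by 1.0 at every step."""
    return x + 0.9

targets = [1.0, 2.0, 3.0, 4.0]
forced = decode(imperfect_step, 0.0, targets, teacher_forcing=True)
free = decode(imperfect_step, 0.0, targets, teacher_forcing=False)

forced_errors = [abs(p - t) for p, t in zip(forced, targets)]
free_errors = [abs(p - t) for p, t in zip(free, targets)]
```

With teacher forcing the error stays at about 0.1 per step, while in free-running mode it grows at each step because earlier mistakes are fed back in. This is why teacher forcing tends to stabilize training, and also why a model trained this way can behave differently at inference time, when no ground truth is available.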

Additionally, regularization techniques such as dropout can be used to prevent overfitting and improve generalization, and normalization can stabilize training (batch normalization is awkward to apply across time steps, so layer normalization is often preferred in recurrent networks).

Fine-tuning LSTM networks involves adjusting their architecture and parameters to improve performance on specific tasks or datasets. This can be achieved through techniques such as hyperparameter tuning, architecture search, and transfer learning; transfer learning in particular starts from pretrained weights, so the network does not need to be retrained from scratch.
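
As a sketch of the dropout technique mentioned above, the function below implements the common "inverted" variant on a plain list of activations. Where dropout is applied in an LSTM (for example, on the connections between stacked layers rather than on the recurrent connections) is a design choice that this example does not cover; the function name and signature are illustrative.

```python
import random

def dropout(values, p, training, rng=random):
    """Inverted dropout: during training, zero each value with probability p
    and scale the survivors by 1 / (1 - p) so the expected activation is
    unchanged. At inference time the values pass through untouched."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]

rng = random.Random(0)  # fixed seed so the example is reproducible
hidden = [1.0] * 10000
dropped = dropout(hidden, p=0.5, training=True, rng=rng)
mean_after = sum(dropped) / len(dropped)
```

About half of the activations are zeroed and the rest are doubled, so `mean_after` stays close to 1.0.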

Overall, training and fine-tuning LSTM networks is an iterative process that requires careful attention to data quality, network architecture, and optimization techniques.

Overcoming Challenges and Limitations of LSTM Networks

While LSTM networks have proven effective in modeling sequential data, they are not without challenges and limitations. One common challenge is the difficulty of training deep LSTM architectures due to vanishing and exploding gradients, which can hinder convergence during training. Gradient clipping, which targets exploding gradients, and careful initialization of network parameters can help mitigate these issues.
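
Gradient clipping is simple enough to sketch directly. The version below clips by global L2 norm over a flat list of gradient values; the function name and return shape are assumptions for this example rather than any library's API.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so their combined L2 norm is at most max_norm.
    Returns the (possibly rescaled) gradients and the original norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads), norm
    scale = max_norm / norm
    return [g * scale for g in grads], norm

# A gradient with norm 5.0 is scaled down to norm 1.0, keeping its direction.
clipped, original_norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
```

Clipping caps the size of an update without changing its direction, so it limits the damage from an exploding gradient. It does nothing for gradients that are already too small.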

Another limitation of LSTM networks is their computational cost, especially with long sequences or large-scale datasets. Because each time step depends on the previous one, computation within a sequence cannot be fully parallelized, which increases training times and resource requirements and makes it challenging to deploy LSTM-based systems in real-time or resource-constrained environments. Techniques such as model compression and efficient hardware acceleration can help address these challenges.

Furthermore, LSTMs may struggle to capture certain types of temporal dependencies, such as long-range dependencies in very noisy or irregular data. In such cases, alternative architectures or pre-processing techniques may be required to model the underlying dynamics of the data.

In summary, LSTM networks are powerful tools for modeling sequential data, but realizing their full potential requires addressing training difficulties, computational complexity, and modeling limitations.

Future Developments and Innovations in LSTM Networks and AI

Advancements in LSTM Architectures

One area of active research is the development of more efficient and scalable architectures for LSTM networks that can handle larger datasets and longer sequences while maintaining high performance. This includes exploring alternative memory cell designs, attention mechanisms, and parallelization techniques to improve the scalability of LSTM networks.

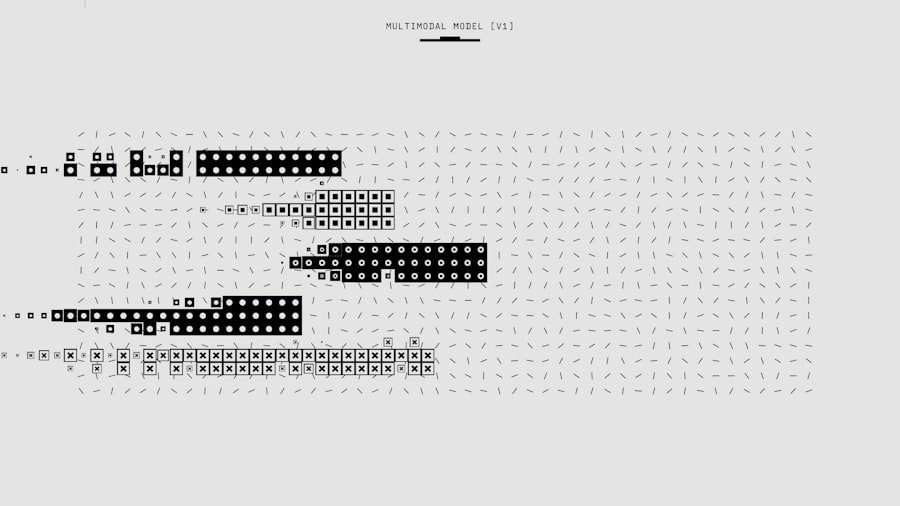

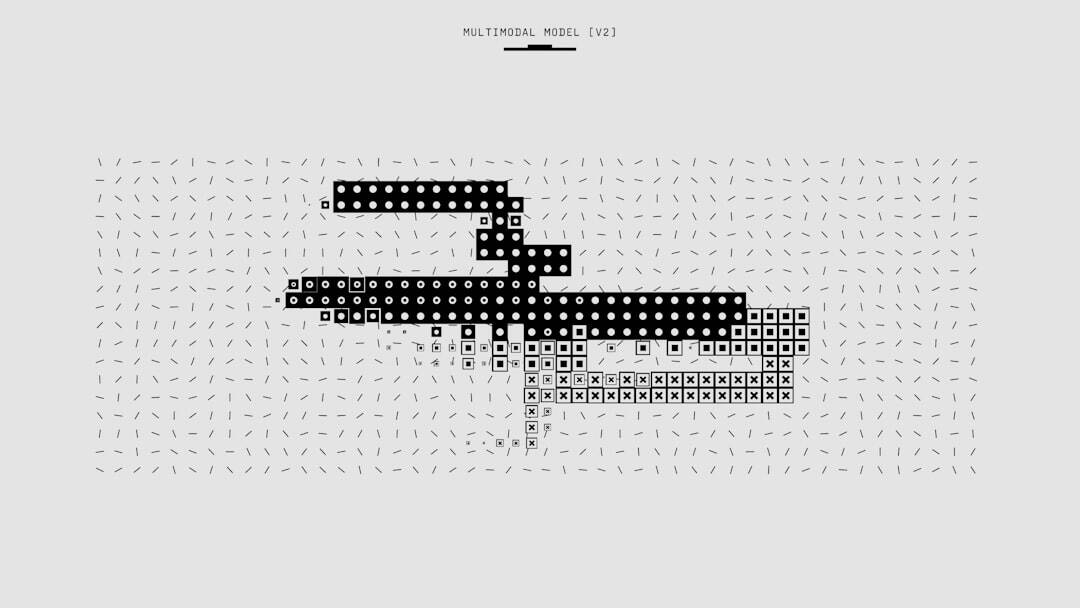

Integration with Other Neural Network Architectures

Another area of innovation is the integration of LSTMs with other types of neural network architectures, such as convolutional neural networks (CNNs) for processing sequential data with spatial dependencies or transformer architectures for capturing long-range dependencies in text data. By combining different types of neural network architectures, it is possible to build more versatile and powerful AI systems that can effectively model complex relationships in diverse types of data.

Robust Training Techniques and Future Developments

Furthermore, ongoing research is focused on developing more robust training techniques for LSTM networks that can improve their convergence speed, generalization capabilities, and resistance to overfitting. This includes exploring new optimization algorithms, regularization techniques, and learning rate schedules that can enhance the training process of LSTM networks. Overall, the future developments and innovations in LSTM networks and AI are focused on addressing current limitations while pushing the boundaries of what is possible with intelligent systems. By continuing to advance the capabilities of LSTM networks and their integration with other AI technologies, it is possible to unlock new opportunities for building intelligent systems that can understand and process complex sequential data.

Harnessing the Potential of LSTM Networks for AI Applications

In conclusion, LSTM networks have emerged as a powerful tool for modeling sequential data in various AI applications due to their ability to capture long-term dependencies. Their architecture enables them to retain and use information over long sequences, making them well-suited for tasks such as natural language processing, speech recognition, and time series prediction.

LSTM networks are not without challenges, but issues such as training difficulties, computational complexity, and modeling limitations can be addressed. Ongoing developments focus on improving their scalability, robustness, and integration with other types of neural network architectures. As research in this area continues, further advances in AI applications that depend on sequential data can be expected.

FAQs

What are Long Short-Term Memory (LSTM) networks?

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to overcome the vanishing gradient problem. They are capable of learning long-term dependencies in sequential data and are commonly used in tasks such as speech recognition, language modeling, and time series prediction.

How do LSTM networks differ from traditional RNNs?

LSTM networks differ from traditional RNNs in their ability to maintain and update a long-term memory state. This allows them to capture long-range dependencies in sequential data, which is a challenge for traditional RNNs due to the vanishing gradient problem.

What are the key components of an LSTM network?

The key components of an LSTM network include the input gate, forget gate, cell state, and output gate. These components work together to regulate the flow of information through the network, allowing it to learn and remember long-term dependencies in sequential data.
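
For reference, one common way to write how these components interact at time step $t$ is shown below. The notation is an assumption of this sketch: $x_t$ is the input, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $W$, $U$, $b$ learned parameters. Variants such as peephole connections differ in the details.

```latex
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate values)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(hidden state)}
\end{aligned}
```

The cell state update is additive, which is what allows information and gradients to pass across many time steps when $f_t$ stays close to 1.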

What are some applications of LSTM networks?

LSTM networks are commonly used in natural language processing tasks such as language modeling, machine translation, and sentiment analysis. They are also used in speech recognition, time series prediction, and other tasks that involve sequential data.

How are LSTM networks trained?

LSTM networks are trained using backpropagation through time (BPTT), which is a variant of the backpropagation algorithm. During training, the network’s parameters are adjusted to minimize the difference between the predicted output and the true output, using a loss function such as mean squared error or cross-entropy.
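
The two loss functions named above can be sketched as follows for a short sequence, averaging over time steps. The function names and the input layout (one list of class probabilities per time step for cross-entropy) are assumptions made for this example.

```python
import math

def mean_squared_error(preds, targets):
    """Average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

def cross_entropy(prob_rows, target_indices):
    """Average negative log-probability assigned to the correct class
    at each time step."""
    total = sum(math.log(row[k]) for row, k in zip(prob_rows, target_indices))
    return -total / len(target_indices)

# Regression-style output: two time steps, each off by 0.5.
mse = mean_squared_error([0.5, 1.5], [1.0, 1.0])

# Classification-style output: predicted class probabilities per time step,
# with the correct class index for each step.
ce = cross_entropy([[0.5, 0.5], [0.25, 0.75]], [0, 1])
```

During BPTT, the gradient of this sequence-level loss is propagated backward through every unrolled time step to update the shared weights.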
