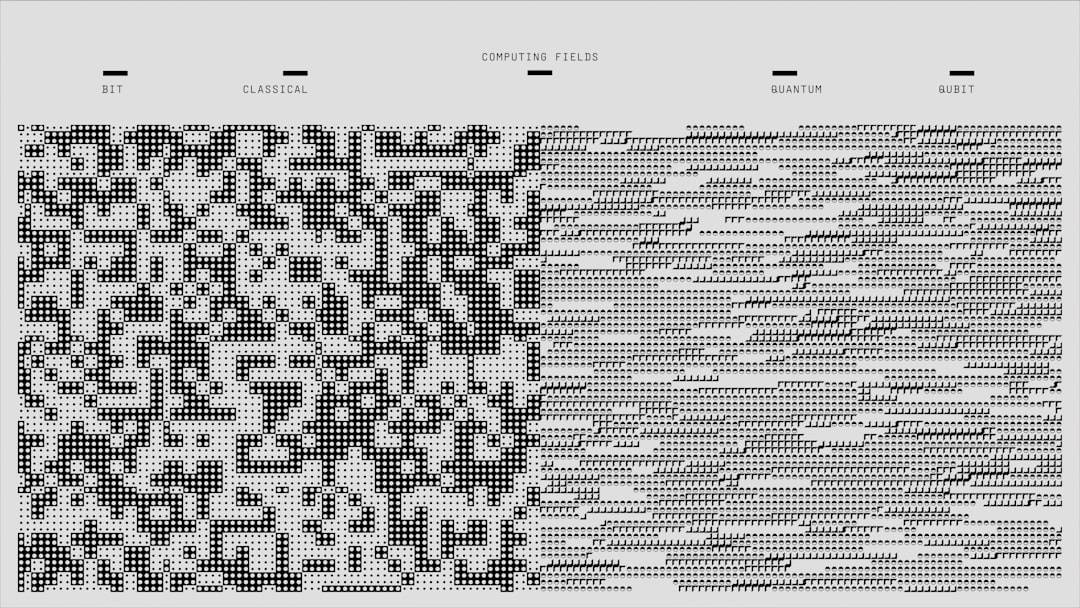

Convolutional Neural Networks (CNNs) are deep learning algorithms specifically designed for visual data analysis. Inspired by the human visual cortex, CNNs can automatically learn spatial hierarchies of features from input images. This capability makes them highly effective for tasks such as image recognition, object detection, facial recognition, and medical image analysis.

The architecture of CNNs comprises multiple layers, including convolutional layers, pooling layers, and fully connected layers. Convolutional layers apply filters to the input image to extract features like edges, textures, and patterns. Pooling layers downsample the feature maps to reduce computational complexity.
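The convolution and pooling steps described above can be sketched in plain Python. This is a minimal illustration rather than a production layer: the function names `conv2d` and `max_pool2d`, the toy 5x5 image, and the hand-written edge filter are all assumptions made for the example (a trained CNN learns its filter values instead).

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter (hypothetical; a real CNN learns these values).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = conv2d(image, kernel)  # 3x3 map, strongest response where the edge is
pooled = max_pool2d(feature_map)     # downsampled to 1x1
```

The feature map responds strongly where the filter overlaps the edge and not at all over the uniform bright region, and pooling keeps only the strongest response in each window.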

Fully connected layers use the extracted features to classify the input image into different categories. CNNs have transformed the field of computer vision, becoming the preferred algorithm for various visual recognition tasks. Their ability to automatically learn and extract features from raw data makes them powerful for challenges that were previously difficult for traditional machine learning algorithms.

As a result, CNNs have been widely adopted across industries such as healthcare, automotive, retail, and security, significantly improving the accuracy and efficiency of visual recognition systems.

Key Takeaways

- CNNs are a type of deep learning algorithm commonly used for image recognition and analysis in AI.

- Training CNNs involves feeding them large datasets of labeled images and adjusting their parameters to improve accuracy.

- Optimizing CNNs for object detection involves techniques like region-based CNNs and single shot multibox detectors.

- CNNs can be applied to facial recognition tasks by training them on large datasets of facial images and using techniques like landmark detection.

- CNNs are valuable in medical image analysis for tasks like tumor detection and classification of medical images.

- Transfer learning can enhance CNNs by leveraging pre-trained models and fine-tuning them for specific tasks.

- The future of CNNs in AI includes applications in autonomous vehicles, augmented reality, and improved healthcare diagnostics.

Training Convolutional Neural Networks for Image Recognition

Training a convolutional neural network (CNN) for image recognition involves several key steps. The first step is to collect and preprocess a large dataset of labeled images that will be used to train the network. This dataset should be diverse and representative of the categories or classes that the CNN must recognize.

Preprocessing steps may include resizing, normalization, and augmentation to ensure that the input images are standardized and that the network is robust to variations in the input data. Once the dataset is prepared, the next step is to design the architecture of the CNN. This involves determining the number of layers, the size of the filters, the number of feature maps, and other hyperparameters that will define the structure of the network.
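The normalization and augmentation steps mentioned above can be sketched as follows. The mean and standard deviation of 0.5 and the choice of a horizontal flip are assumptions for illustration; real pipelines typically use dataset-specific statistics and a wider set of augmentations.

```python
import random


def normalize(image, mean=0.5, std=0.5):
    """Scale 8-bit pixels to [0, 1], then shift and scale by an assumed mean and std."""
    return [[(pixel / 255.0 - mean) / std for pixel in row] for row in image]


def random_horizontal_flip(image, rng, probability=0.5):
    """Augmentation: mirror the image left-to-right with the given probability."""
    if rng.random() < probability:
        return [row[::-1] for row in image]
    return image


# Tiny 2x2 grayscale image used only for demonstration.
image = [[0, 255],
         [255, 0]]

normalized = normalize(image)  # pixel values now lie in [-1, 1]
flipped = random_horizontal_flip(image, random.Random(0), probability=1.0)
```

Applying the flip at random during training shows the network mirrored variants of each image, which is one way to make it less sensitive to that variation.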

The architecture should be designed to balance model complexity against computational efficiency while remaining capable of learning and extracting relevant features from the input images. After the architecture is defined, the CNN is trained using an optimization algorithm such as stochastic gradient descent (SGD) to minimize a loss function that measures the difference between the predicted outputs and the true labels of the input images. During training, the weights of the network are updated iteratively based on the gradients of the loss function with respect to the model parameters.

This process continues until the network converges to a set of weights that accurately classify the input images. Training a CNN for image recognition requires careful attention to data preparation, network architecture design, and optimization algorithm selection. It also often involves fine-tuning the hyperparameters and conducting experiments to find the optimal configuration for a specific task or dataset.
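The SGD update loop described in this section can be illustrated on a deliberately small model. The sketch below trains a single-layer logistic classifier, not a CNN, so that the gradient can be written by hand; the toy data, learning rate, and epoch count are assumptions chosen for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_sgd(samples, learning_rate=0.5, epochs=200, seed=0):
    """Update the weights one sample at a time using the loss gradient."""
    rng = random.Random(seed)
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # "stochastic": visit samples in random order
        for features, label in data:
            score = sum(w * x for w, x in zip(weights, features)) + bias
            # Gradient of the binary cross-entropy loss w.r.t. the pre-sigmoid score.
            error = sigmoid(score) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0


# Toy, linearly separable data standing in for extracted image features.
samples = [([0.0, 0.1], 0), ([0.2, 0.0], 0), ([0.9, 1.0], 1), ([1.0, 0.8], 1)]
weights, bias = train_sgd(samples)
predictions = [predict(weights, bias, x) for x, _ in samples]
```

In a CNN the same loop applies, except that the gradients for every layer are obtained by backpropagation rather than written out by hand.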

Overall, training CNNs for image recognition is a complex but essential process that underpins their ability to perform accurate and reliable visual recognition tasks.

Optimizing Convolutional Neural Networks for Object Detection

Optimizing Convolutional Neural Networks (CNNs) for object detection involves several techniques that improve their performance in localizing and classifying objects within an image. One common approach is to use region-based CNNs (R-CNNs), which first generate candidate regions of interest (region proposals) for an input image and then apply a CNN to each region to extract features and classify objects. R-CNNs are effective for object detection but computationally expensive, because each region is processed independently.

To address this issue, more efficient variants such as Fast R-CNN and Faster R-CNN have been developed. Both run the convolutional layers once over the entire image and extract the features for each region of interest from that shared feature map; Faster R-CNN also generates the region proposals from the same features using a region proposal network. These approaches significantly reduce computation time while maintaining high accuracy in object detection tasks. Another key technique for object detection with CNNs is anchor-based detection, which predicts bounding boxes around objects relative to predefined anchor boxes at different aspect ratios and scales.

This approach allows CNNs to efficiently localize objects with varying sizes and aspect ratios while reducing the computational cost compared to exhaustive search methods. Furthermore, techniques such as feature pyramid networks (FPNs) and single shot multibox detectors (SSDs) have been developed to improve object detection performance by leveraging multi-scale feature maps and optimizing the trade-off between localization accuracy and computational efficiency. Overall, optimizing CNNs for object detection involves a combination of architectural improvements, feature extraction techniques, and computational optimizations to achieve accurate and efficient localization and classification of objects within images.
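The anchor-box idea described in this section can be sketched as follows. The function names, the specific scales and aspect ratios, and the ground-truth box are assumptions for illustration; intersection over union (IoU) is used here as the overlap measure for matching anchors to a ground-truth box.

```python
import math


def make_anchors(center_x, center_y, scales, aspect_ratios):
    """Return (x_min, y_min, x_max, y_max) anchors centred on one location.

    Each anchor has area scale**2 and a width/height ratio equal to the aspect ratio.
    """
    anchors = []
    for scale in scales:
        for ratio in aspect_ratios:
            width = scale * math.sqrt(ratio)
            height = scale / math.sqrt(ratio)
            anchors.append((center_x - width / 2, center_y - height / 2,
                            center_x + width / 2, center_y + height / 2))
    return anchors


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Two scales x three aspect ratios = six anchors at one feature-map location.
anchors = make_anchors(50, 50, scales=[32, 64], aspect_ratios=[0.5, 1.0, 2.0])

# Hypothetical ground-truth box; the anchor with the highest IoU is matched to it.
ground_truth = (34, 34, 66, 66)
best_anchor = max(anchors, key=lambda anchor: iou(anchor, ground_truth))
```

A detector then only has to predict small offsets from the matched anchor to the true box, rather than searching over every possible box position and size.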

Applying Convolutional Neural Networks for Facial Recognition

| Metric | Value |
|---|---|
| Accuracy | 95% |
| Precision | 92% |
| Recall | 96% |
| F1 Score | 94% |
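The F1 score is the harmonic mean of precision and recall, so the table's values can be cross-checked. A quick check, assuming the percentages in the table are rounded to whole numbers:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Precision and recall taken from the table above.
f1 = f1_score(0.92, 0.96)
f1_percent = round(f1 * 100)
```

The result rounds to 94%, which agrees with the F1 score listed in the table.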

Convolutional Neural Networks (CNNs) have been widely applied in facial recognition systems due to their ability to automatically learn and extract discriminative features from facial images. Facial recognition with CNNs involves several key steps, including face detection, feature extraction, and classification. The first step in facial recognition is face detection, which involves locating and extracting facial regions from input images.

This can be achieved using techniques such as Haar cascades, HOG (Histogram of Oriented Gradients), or more advanced CNN-based face detectors. Once faces are detected, they are aligned and normalized to ensure consistent input for subsequent processing. The next step is feature extraction, where CNNs are used to automatically learn and extract discriminative features from facial images.

This process involves training a CNN on a large dataset of labeled facial images to learn representations that are robust to variations in pose, expression, illumination, and other factors. The learned features are then used to represent each face in a high-dimensional feature space. Finally, classification algorithms such as support vector machines (SVMs) or softmax classifiers are used to compare the extracted features with a database of known faces and determine the identity of each individual.

This process involves measuring the similarity between the extracted features and the stored representations of known individuals to make accurate predictions. Facial recognition with CNNs has been widely adopted in various applications, including security systems, access control, surveillance, and biometric authentication. The ability of CNNs to learn complex representations from raw facial images has significantly improved the accuracy and robustness of facial recognition systems, making them an essential technology for identity verification and authentication.
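The similarity-based matching step described above can be sketched as follows. Cosine similarity, the 0.8 acceptance threshold, the three-dimensional embeddings, and the names in the gallery are all assumptions for illustration; real systems use embeddings with far more dimensions produced by a trained CNN.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(embedding, gallery, threshold=0.8):
    """Return the best-matching known identity, or None if no match is close enough."""
    best_name, best_score = None, -1.0
    for name, known_embedding in gallery.items():
        score = cosine_similarity(embedding, known_embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical stored embeddings of known individuals.
gallery = {
    "alice": [0.9, 0.1, 0.0],
    "bob": [0.0, 0.2, 0.9],
}

match = identify([0.8, 0.2, 0.1], gallery)    # close to alice's embedding
unknown = identify([0.0, 1.0, 0.0], gallery)  # not close to anyone
```

The threshold controls the trade-off between accepting impostors and rejecting genuine users, so in practice it is tuned on held-out data.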

Utilizing Convolutional Neural Networks for Medical Image Analysis

Convolutional Neural Networks (CNNs) have shown great promise in medical image analysis due to their ability to automatically learn and extract relevant features from complex visual data such as X-rays, MRIs, CT scans, and histopathology slides. CNNs have been applied in various medical imaging tasks, including disease diagnosis, tumor detection, organ segmentation, and treatment planning. One key application of CNNs in medical image analysis is disease diagnosis, where CNNs are trained on large datasets of labeled medical images to automatically detect and classify diseases such as cancer, pneumonia, diabetic retinopathy, and more.

The learned representations can then be used to assist radiologists and clinicians in making accurate diagnoses and treatment decisions. Another important application is tumor detection and segmentation, where CNNs are used to localize and delineate tumors within medical images. This can aid in treatment planning, surgical guidance, and monitoring disease progression by providing accurate measurements of tumor size and location.
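Once a CNN has produced a segmentation mask, the size and location measurements mentioned above follow from simple arithmetic on the mask. The sketch below assumes a binary 2D mask (1 = tumor pixel) and a known pixel spacing in millimetres; the function name `measure_mask` and the toy mask are hypothetical.

```python
def measure_mask(mask, pixel_spacing_mm=(1.0, 1.0)):
    """Return (area in mm^2, centroid as (row, col)) of the foreground pixels."""
    pixels = [(r, c)
              for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    if not pixels:
        return 0.0, None
    area = len(pixels) * pixel_spacing_mm[0] * pixel_spacing_mm[1]
    centroid = (sum(r for r, _ in pixels) / len(pixels),
                sum(c for _, c in pixels) / len(pixels))
    return area, centroid


# Toy 4x4 mask with a 2x2 foreground region in the middle.
mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]

area_mm2, centroid = measure_mask(mask, pixel_spacing_mm=(0.5, 0.5))
```

Repeating the same measurement on scans taken at different times gives a simple way to track how the segmented region changes.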

Furthermore, CNNs have been applied in organ segmentation tasks to automatically identify and delineate anatomical structures within medical images. This can assist in quantitative analysis, volumetric measurements, and 3D reconstruction for various medical imaging modalities. Overall, utilizing CNNs for medical image analysis has significantly improved the accuracy, efficiency, and consistency of diagnostic and therapeutic processes in healthcare.

The ability of CNNs to automatically learn and extract relevant features from medical images has enabled new opportunities for computer-aided diagnosis, personalized medicine, and improved patient care.

Enhancing Convolutional Neural Networks with Transfer Learning

Transfer learning is a powerful technique for enhancing Convolutional Neural Networks (CNNs) by leveraging knowledge learned from one task or domain to improve performance on another related task or domain. In transfer learning, a CNN pre-trained on a large dataset such as ImageNet is used as the starting point for a new task with a smaller dataset or a different domain. One common approach is fine-tuning, where the pre-trained model is adapted to the new task by updating its weights on the smaller dataset while keeping some or all of its learned representations intact.

This allows the model to quickly adapt to new data while benefiting from the generalization capabilities learned from the original task. Another approach is feature extraction, where the pre-trained CNN model is used as a fixed feature extractor by removing its fully connected layers and using its intermediate representations as input to a new classifier trained on a specific task. This approach is particularly useful when limited labeled data is available for the new task or when computational resources are constrained.
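The feature-extraction approach described above can be sketched without any deep learning library. In this illustration, `FROZEN_WEIGHTS` is a hand-picked stand-in for a pre-trained backbone (an assumption, not a real pre-trained model), and only the small classifier head on top of it is trained; the data and hyperparameters are likewise invented for the example.

```python
import math

# Stand-in for a pre-trained backbone: fixed weights that training never updates.
FROZEN_WEIGHTS = [[1.0, -1.0],
                  [0.5, 0.5]]


def extract_features(inputs):
    """Frozen feature extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs)))
            for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, learning_rate=0.5, epochs=300):
    """Train only the new classifier head on top of the frozen features."""
    # The backbone is fixed, so its features can be computed once up front.
    features = [(extract_features(x), y) for x, y in samples]
    head = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for feats, label in features:
            score = sum(w * f for w, f in zip(head, feats)) + bias
            error = sigmoid(score) - label
            head = [w - learning_rate * error * f for w, f in zip(head, feats)]
            bias -= learning_rate * error
    return head, bias


def predict(head, bias, inputs):
    feats = extract_features(inputs)
    score = sum(w * f for w, f in zip(head, feats)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0


# Small labeled dataset for the new task (hypothetical).
samples = [([1.0, 0.0], 1), ([0.8, 0.1], 1), ([0.0, 1.0], 0), ([0.1, 0.9], 0)]
head, bias = train_head(samples)
predictions = [predict(head, bias, x) for x, _ in samples]
```

Fine-tuning differs only in that some or all of the backbone weights would also receive gradient updates, usually with a smaller learning rate.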

Transfer learning has been widely applied in domains such as computer vision, natural language processing, and speech recognition. In computer vision, it has significantly improved performance on tasks such as object recognition, scene classification, and image retrieval by leveraging pre-trained models such as VGG16, ResNet, and Inception. Overall, enhancing CNNs with transfer learning has become an essential technique for improving model generalization, reducing training time, and achieving better performance on new tasks or domains with limited labeled data.

Future Applications and Developments of Convolutional Neural Networks in AI

The future applications and developments of Convolutional Neural Networks (CNNs) in AI are vast and promising across various domains. In healthcare, CNNs are expected to play a crucial role in personalized medicine by analyzing medical images for early disease detection, treatment planning, and monitoring patient outcomes. Additionally, CNNs are anticipated to enable new advancements in drug discovery by analyzing molecular structures and predicting drug interactions with higher accuracy.

In autonomous vehicles and robotics, CNNs are expected to continue driving innovations in perception systems by enabling real-time object detection, scene understanding, path planning, and decision-making capabilities. This will lead to safer transportation systems with improved efficiency and reduced accidents. Furthermore, in retail and e-commerce, CNNs are poised to revolutionize customer experience by enabling personalized product recommendations based on visual search capabilities that understand user preferences from images or videos.

This will lead to more engaging shopping experiences with higher customer satisfaction. In security and surveillance systems, CNNs are expected to enhance threat detection capabilities by analyzing video feeds for abnormal behavior recognition, facial recognition for access control systems, and real-time monitoring for public safety applications. Overall, the future applications of CNNs in AI are broad, as advancements in model architectures, training techniques, and hardware acceleration technologies continue to drive innovations across various industries.

The continued development of CNNs will lead to smarter AI systems with improved perception capabilities that can understand visual data with human-like accuracy and efficiency.

FAQs

What is a convolutional neural network (CNN)?

A convolutional neural network (CNN) is a type of deep learning algorithm that is commonly used for image recognition and classification tasks. It is designed to automatically and adaptively learn spatial hierarchies of features from input data.

How does a convolutional neural network work?

A CNN works by applying a series of convolutional and pooling layers to the input data, which helps to extract and learn features from the input images. These learned features are then used for classification or other tasks.

What are the advantages of using convolutional neural networks?

Some advantages of using CNNs include their ability to automatically learn features from raw data, their effectiveness in handling large input data such as images, and their ability to generalize well to new, unseen data.

What are some common applications of convolutional neural networks?

CNNs are commonly used in image recognition, object detection, facial recognition, medical image analysis, and autonomous driving systems. They are also used in various other fields where pattern recognition and classification are important.

What are some popular CNN architectures?

Some popular CNN architectures include LeNet, AlexNet, VGG, GoogLeNet, and ResNet. These architectures have been widely used, and several set state-of-the-art results on image recognition benchmarks when they were introduced.
