Autoencoders are artificial neural networks used to learn efficient data representations in an unsupervised manner. They compress the input data into a lower-dimensional code and then use this code to reconstruct the original input. In its simplest form, an autoencoder’s structure consists of an input layer, a hidden layer, and an output layer.

Key Takeaways

- Autoencoders are a type of neural network used for unsupervised learning and are designed to learn efficient representations of data.

- Autoencoders can be used for data compression by reducing the dimensionality of the input data while retaining important features.

- Reconstruction using autoencoders involves decoding the compressed data back into its original form, allowing for data recovery and analysis.

- Autoencoders have applications in various industries such as finance, healthcare, and manufacturing for tasks like anomaly detection, image recognition, and data denoising.

- Challenges and limitations of autoencoders include the potential for overfitting, difficulty in choosing the right architecture, and the need for large amounts of training data.

Raw data is fed into the input layer, and the encoder compresses it into the lower-dimensional representation held by the hidden layer. The output layer then uses this compressed representation to reproduce the original input. One of the main advantages of autoencoders is that they learn data representations without labeled training data. Because of this, they can be applied to data denoising, feature learning, and dimensionality reduction.
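As a rough illustration of the compress-then-reconstruct idea, here is a minimal sketch of a linear autoencoder written in plain Python. Everything in it is an assumption made for the example: the toy data (four features driven by two hidden factors), the function names `matvec` and `train_autoencoder`, the learning rate, and the use of plain stochastic gradient descent on mean squared error. Real autoencoders usually add non-linear activations and are built with a deep learning library.

```python
import random

def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def train_autoencoder(data, code_dim, epochs=300, lr=0.02, seed=0):
    """Train a linear autoencoder with plain SGD on mean squared error."""
    rng = random.Random(seed)
    n = len(data[0])
    # Encoder maps n inputs to code_dim values; decoder maps them back to n.
    enc = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(code_dim)]
    dec = [[rng.uniform(-0.5, 0.5) for _ in range(code_dim)] for _ in range(n)]
    history = []  # mean reconstruction loss observed during each epoch
    for _ in range(epochs):
        total = 0.0
        for x in data:
            code = matvec(enc, x)        # compressed representation
            x_hat = matvec(dec, code)    # reconstruction
            err = [xh - xi for xh, xi in zip(x_hat, x)]
            total += sum(e * e for e in err) / n
            # Gradient of the loss w.r.t. the reconstruction and the code.
            g_out = [2.0 * e / n for e in err]
            g_code = [sum(dec[i][k] * g_out[i] for i in range(n))
                      for k in range(code_dim)]
            for i in range(n):
                for k in range(code_dim):
                    dec[i][k] -= lr * g_out[i] * code[k]
            for k in range(code_dim):
                for j in range(n):
                    enc[k][j] -= lr * g_code[k] * x[j]
        history.append(total / len(data))
    return enc, dec, history

# Toy data (hypothetical): 4 features generated from 2 underlying factors.
data_rng = random.Random(1)
data = []
for _ in range(50):
    a, b = data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)
    data.append([a, b, a + b, a - b])

enc, dec, history = train_autoencoder(data, code_dim=2)
code = matvec(enc, data[0])            # 4 values compressed to 2
reconstruction = matvec(dec, code)     # 2 values expanded back to 4
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

No labels are involved at any point: the training target for each example is the example itself.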

Because they can learn non-linear transformations of input data, autoencoders are applied in signal processing, computer vision, and natural language processing. They learn condensed representations of complex data that are useful for anomaly detection, classification, and clustering. There are many kinds of autoencoders, including basic feedforward autoencoders, convolutional autoencoders for image data, and recurrent autoencoders for sequential data. The characteristics of the input data and the desired output determine which architecture to use.

Deep autoencoders, which comprise several hidden layers, have grown in popularity because they can learn hierarchical data representations. They frequently outperform shallow autoencoders on a variety of tasks, which makes them a good option for representation learning and complex data modeling.

Data Compression for Computer Vision

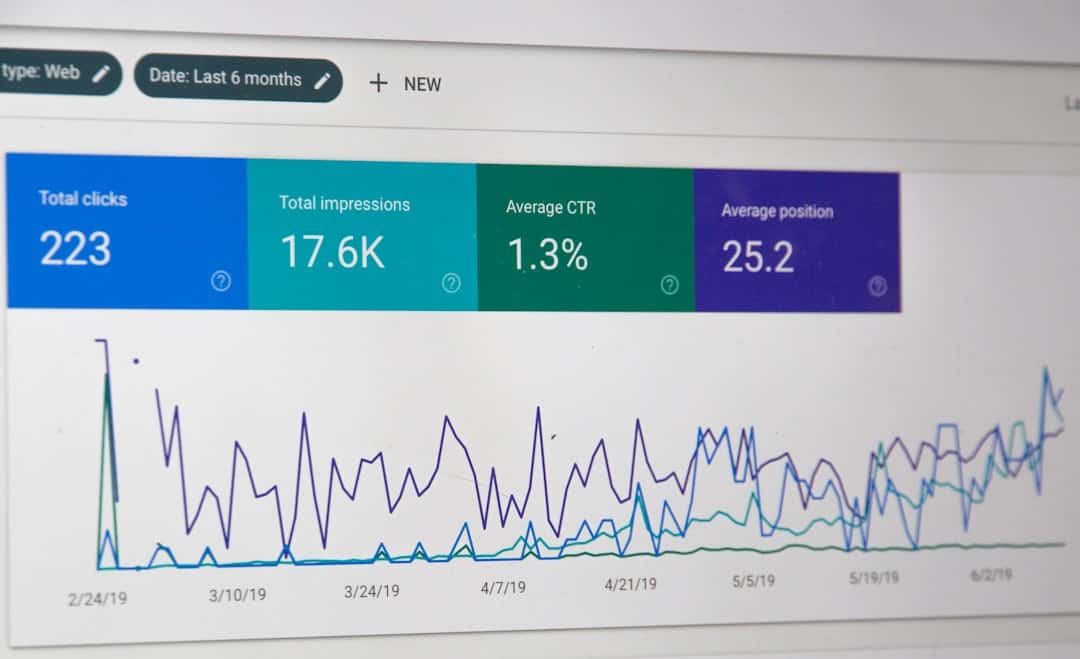

| Metrics | Results |
|---|---|
| Compression Ratio | 3:1 |
| Reconstruction Error | 0.05 |
| Training Time | 2 hours |
| Accuracy | 92% |
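The table does not say how its figures were measured. As a sketch of one common convention, and purely as an assumption, the compression ratio can be read as the number of input values divided by the number of code values, and the reconstruction error as the mean squared error between input and output. The sizes 768 and 256 and the sample vectors below are hypothetical and are not taken from the table.

```python
def compression_ratio(input_size, code_size):
    """Ratio of input values to code values; 3.0 corresponds to 3:1."""
    if code_size <= 0:
        raise ValueError("code_size must be positive")
    return input_size / code_size

def mean_squared_error(original, reconstructed):
    """Average squared difference between two equal-length sequences."""
    if len(original) != len(reconstructed):
        raise ValueError("sequences must have the same length")
    squared = [(o - r) ** 2 for o, r in zip(original, reconstructed)]
    return sum(squared) / len(squared)

# Hypothetical sizes: a 768-value input compressed to a 256-value code.
ratio = compression_ratio(input_size=768, code_size=256)

# Hypothetical input and reconstruction, for illustration only.
error = mean_squared_error([0.0, 0.5, 1.0], [0.1, 0.5, 0.9])
print(f"{ratio:.0f}:1 compression, reconstruction MSE {error:.4f}")
```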

Convolutional autoencoders have been used extensively in image compression to learn compact representations of images. By encoding the spatial structure and local correlations present in the input image, they compress it into a lower-dimensional code from which the original image can be reconstructed with little loss of quality. This makes them suitable for uses like image streaming, where a high-quality image must be sent over limited bandwidth.

Data Compression for Natural Language Processing

Recurrent autoencoders have also been used for representation learning and text compression in natural language processing. By encoding the sequential structure and semantic relationships present in the input text, they learn compact representations of sentences or documents that support tasks like text summarization and document retrieval.

Efficient Storage and Transfer of Data

All things considered, autoencoders offer a strong foundation for data compression, making it possible to store and transfer complex data efficiently while keeping its key components.

In addition to compressing data, autoencoders can reconstruct the original input from the learned representation.

Reconstruction is an essential step for assessing the quality of the learned representation and checking that the key components of the input data survive compression. By comparing the reconstructed output with the original input, one can evaluate the fidelity of the learned representation and spot any information lost during compression. This reconstruction capability also makes autoencoders useful for tasks like data denoising and inpainting. In image denoising, for instance, autoencoders can be trained to reconstruct noise-free images from noisy inputs, removing unwanted artifacts while preserving the key elements of the original images. Similarly, in inpainting, where missing portions of an image need to be filled in, autoencoders can be trained to reconstruct entire images from partial inputs.
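What changes between ordinary, denoising, and inpainting training is mostly the input: the network is shown a corrupted version, while the loss still compares its output with the clean original. The sketch below builds such training pairs in plain Python. The helper names `add_gaussian_noise` and `mask_region`, the noise level, and the ten-value stand-in for an image row are all assumptions for illustration.

```python
import random

def add_gaussian_noise(values, sigma, rng):
    """Return a noisy copy of values, clipped to the [0, 1] range."""
    return [min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in values]

def mask_region(values, start, length, fill=0.0):
    """Return a copy of values with a contiguous block replaced by fill."""
    masked = list(values)
    for i in range(start, min(start + length, len(masked))):
        masked[i] = fill
    return masked

rng = random.Random(0)
clean = [i / 9 for i in range(10)]  # stand-in for one flattened image row

# Denoising: the model receives noisy_input; the target is the clean row.
noisy_input = add_gaussian_noise(clean, sigma=0.1, rng=rng)
denoising_pair = (noisy_input, clean)

# Inpainting: the model receives masked_input; the target is the clean row.
masked_input = mask_region(clean, start=3, length=4)
inpainting_pair = (masked_input, clean)
```

Both helpers return copies, so the clean data stays available as the training target.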

Inpainting of this kind fills in the missing information while maintaining the general structure and content of the original images. Overall, their reconstruction capability makes autoencoders flexible tools for tasks that require preserving the key components of the input data while removing noise or restoring missing information. As a result, they can be used in fields such as data cleansing, signal processing, and image processing.

Because autoencoders can efficiently learn representations of complex data, they are used in many different industries.

In healthcare, for instance, autoencoders have been applied to medical image analysis, where they learn compact representations of medical images and identify anomalies in the data. This makes them useful tools for tasks such as medical imaging and disease diagnosis. In finance, autoencoders have been applied to fraud detection and risk assessment, using their ability to learn compact representations of financial transactions and recognize patterns suggestive of fraudulent or high-risk activity. This makes them useful for enhancing security and reducing the financial losses caused by fraud.

In manufacturing and Industry 4.0, autoencoders are used for predictive maintenance. They learn compact representations of sensor data from industrial equipment and detect patterns that point to possible malfunctions or failures. This makes them useful in manufacturing settings for reducing downtime and improving operational efficiency.
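A common way to turn an autoencoder into an anomaly detector, whether for medical images, transactions, or sensor readings, is to flag inputs that it reconstructs poorly. The sketch below assumes the reconstruction errors have already been computed by a trained model. The error values are made up for illustration, and the "mean plus three standard deviations" rule is one simple choice of threshold, not something the applications above prescribe.

```python
import statistics

def fit_threshold(normal_errors, k=3.0):
    """Threshold = mean + k standard deviations of errors on normal data."""
    mean = statistics.mean(normal_errors)
    spread = statistics.stdev(normal_errors)
    return mean + k * spread

def flag_anomalies(errors, threshold):
    """Return the indices whose reconstruction error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if e > threshold]

# Hypothetical reconstruction errors measured on known-good data.
normal_errors = [0.010, 0.012, 0.009, 0.011, 0.013, 0.010, 0.008, 0.012]
threshold = fit_threshold(normal_errors)

# Hypothetical errors for new readings; large values suggest unusual inputs.
new_errors = [0.011, 0.095, 0.009, 0.040]
anomalies = flag_anomalies(new_errors, threshold)
print(f"threshold={threshold:.4f}, anomalous indices={anomalies}")
```

The multiplier `k` trades missed anomalies against false alarms and would normally be tuned on held-out data.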

In robotics and autonomous vehicles, autoencoders have been used for sensor fusion: they learn compact representations of sensor data from lidars, radars, and cameras and integrate this information to support real-time decisions. They are therefore useful for enhancing the perception and decision-making abilities of autonomous systems. Because autoencoders can efficiently represent complex data and extract useful information from it, they are widely used across industries.

Autoencoders are an effective tool for learning representations of complex data, but they have certain drawbacks and difficulties that must be addressed.

One of the main challenges is the selection of architecture and hyperparameters, which can greatly affect an autoencoder’s performance. Choosing the right architecture and fine-tuning hyperparameters like learning rate, batch size, and regularization may require a thorough process of experimentation and validation. Overfitting is another potential problem, particularly when deep autoencoder architectures are trained with a small amount of training data. A model that overfits performs poorly on test data because it memorizes the training examples instead of generalizing to new, unseen data. Regularization techniques like dropout and weight decay can help mitigate overfitting, although they need to be carefully tuned to achieve good performance.
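To make the two regularization techniques concrete, here is a minimal sketch of each in plain Python. The function names, the sample weights, and the rates are assumptions for illustration. Weight decay is shown as an L2 penalty folded into an SGD update, and dropout is shown in its "inverted" form, where surviving activations are rescaled during training.

```python
import random

def sgd_step_with_weight_decay(weights, grads, lr, weight_decay):
    """One SGD update with an L2 penalty: w <- w - lr * (g + weight_decay * w)."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability rate, rescale the rest.

    Assumes 0 <= rate < 1. At inference time (training=False) values pass through.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

weights = [1.0, -2.0, 0.5]
zero_grads = [0.0, 0.0, 0.0]
# With a zero gradient, weight decay alone shrinks every weight toward zero.
decayed = sgd_step_with_weight_decay(weights, zero_grads, lr=0.1, weight_decay=0.01)

rng = random.Random(0)
hidden = [0.2, 0.4, 0.6, 0.8]
dropped = dropout(hidden, rate=0.5, rng=rng)               # training mode
unchanged = dropout(hidden, rate=0.5, rng=rng, training=False)
```

Both `weight_decay` and `rate` are themselves hyperparameters, which is why the tuning caveat applies to them as well.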

Moreover, autoencoders might have trouble processing noisy or imperfect inputs or capturing intricate dependencies in high-dimensional data. In such situations, performance may need to be improved by adding more preprocessing steps or using different modeling strategies. Lastly, it can be difficult to interpret the representations learned by an autoencoder, particularly in deep architectures where the learned features are distributed across several layers. Understanding how these representations correspond to meaningful features in the input data is an ongoing research challenge that calls for effective visualization and interpretation techniques.

Choice of Architecture

To get good results, it is essential to choose an architecture suited to the characteristics of the input data. Convolutional autoencoders, for instance, are well suited to image data because they can capture spatial correlations, whereas recurrent autoencoders are better suited to sequential data like text or time series.

Adjusting Hyperparameters

Optimizing performance requires fine-tuning hyperparameters like learning rate, batch size, and regularization. To find the best configuration for a task, try varying the hyperparameter settings and assess how they affect the model’s performance.
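One simple way to vary the settings systematically is a grid search, sketched below. The grid values are hypothetical, and `toy_validation_loss` is a made-up stand-in so the example runs on its own: in practice that function would train an autoencoder with the given settings and return its validation loss.

```python
import itertools

def grid_search(param_grid, evaluate):
    """Try every combination in param_grid; return the lowest-scoring one."""
    names = list(param_grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_validation_loss(params):
    """Hypothetical stand-in for 'train a model and measure validation loss'."""
    return (abs(params["learning_rate"] - 0.01) * 10
            + abs(params["batch_size"] - 64) / 100
            + params["weight_decay"])

# Hypothetical search space covering the hyperparameters named above.
param_grid = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [32, 64, 128],
    "weight_decay": [0.0, 1e-4],
}
best_params, best_score = grid_search(param_grid, toy_validation_loss)
print(best_params, best_score)
```

The number of combinations grows multiplicatively with each added hyperparameter, so larger searches often sample settings at random instead of trying them all.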

Preparing the Data and Evaluating the Model

To make sure the input data is suitable for autoencoder training, it is crucial to preprocess it properly. This could include normalizing the data, handling missing values or outliers, and transforming the data to make it more suitable for learning compact representations. Also, tracking metrics like validation loss or reconstruction error during training can give important information about how well an autoencoder is learning to represent the input data. Detecting problems like overfitting or underfitting early can guide adjustments that improve model performance.
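Two of the preprocessing steps mentioned above, handling missing values and normalizing, can be sketched in a few lines of plain Python. The choices here are assumptions for illustration: missing entries are represented as `None` and filled with the column mean, and normalization is min-max scaling to the range 0 to 1. Other strategies, such as median imputation or standardization, are equally valid.

```python
def impute_missing(column):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("column has no observed values")
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in column]

def min_max_scale(column):
    """Rescale values to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]

# Hypothetical feature column with one missing reading.
raw = [10.0, None, 30.0, 20.0]
filled = impute_missing(raw)    # the missing entry becomes the mean, 20.0
scaled = min_max_scale(filled)  # values now lie between 0 and 1
print(scaled)
```

When data is split into training and validation sets, the mean, minimum, and maximum are normally computed on the training split only and then reused for the other splits.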

Understanding Learned Representations

Examining the representations an autoencoder has learned can clarify how it extracts relevant features from the input data. Developing effective visualization techniques and investigating ways to interpret learned representations both help in understanding how an autoencoder represents complex data.

With continuous advancements aimed at resolving present issues and enhancing their capabilities, the field of autoencoder technology is developing quickly.

One area of future work is improving the interpretability of the representations learned by autoencoders. Developing effective visualization and interpretation techniques would make it easier to understand how an autoencoder extracts significant features from complex data. Improving the robustness and generalization of autoencoders is another area of future development. This entails creating strategies that reduce overfitting when training data is limited and improve performance on unseen test data. Regularization strategies, transfer learning, and adversarial training are being investigated for this purpose.

In addition, research is being done on new architectures and training algorithms for autoencoders that can more efficiently capture intricate dependencies in high-dimensional data. This involves investigating memory-augmented networks, deep architectures with attention mechanisms, and capsule networks that can more effectively learn hierarchical representations of complex data. Further research and development will focus on enhancing autoencoders for sequential decision-making and generative modeling by integrating them with other machine learning approaches like reinforcement learning and generative adversarial networks (GANs).

All things considered, autoencoder research is moving toward solving present problems and broadening the range of uses across diverse sectors. As this field of study develops, we should expect more powerful and adaptable autoencoder models that can learn effective representations of complex data faster than before.


FAQs

What is an autoencoder?

An autoencoder is a type of artificial neural network used for unsupervised learning. It aims to learn efficient representations of data by training the network to reconstruct its input.

How does an autoencoder work?

An autoencoder consists of an encoder and a decoder. The encoder compresses the input data into a lower-dimensional representation, while the decoder reconstructs the original input from this representation.

What are the applications of autoencoders?

Autoencoders are used in various applications such as image and video compression, anomaly detection, denoising, feature learning, and dimensionality reduction.

What are the types of autoencoders?

Some common types of autoencoders include vanilla autoencoders, sparse autoencoders, denoising autoencoders, variational autoencoders (VAEs), and convolutional autoencoders.

What are the advantages of using autoencoders?

Autoencoders can learn meaningful representations of data without labels, reduce dimensionality, and, when trained for it, handle noisy or incomplete input.

What are the limitations of autoencoders?

Autoencoders may suffer from overfitting, and the quality of the learned representations heavily depends on the architecture and hyperparameters chosen for the network.
