PyTorch Lightning is an open-source library that provides a high-level interface for PyTorch, a popular machine learning framework. It simplifies the process of building and training complex AI models by offering a more organized and streamlined approach. The library abstracts away boilerplate code and infrastructure setup, allowing researchers and developers to focus on core aspects of their deep learning projects.

PyTorch Lightning has gained significant popularity in the AI community due to its ability to accelerate the development and deployment of advanced machine learning models. This library serves as a bridge between researchers and engineers, facilitating more effective collaboration on AI projects. It provides a standardized and modular structure for organizing code, enhancing the understandability, maintainability, and scalability of deep learning models.

PyTorch Lightning offers an extensive set of tools and utilities for handling common tasks such as data loading, model training, and validation. This enables more efficient experimentation and iteration, ultimately leading to faster progress in AI research and development.

Key Takeaways

- PyTorch Lightning is a lightweight wrapper for PyTorch that simplifies the process of building and training complex AI models.

- Using PyTorch Lightning can accelerate deep learning projects by providing a high-level interface for AI development.

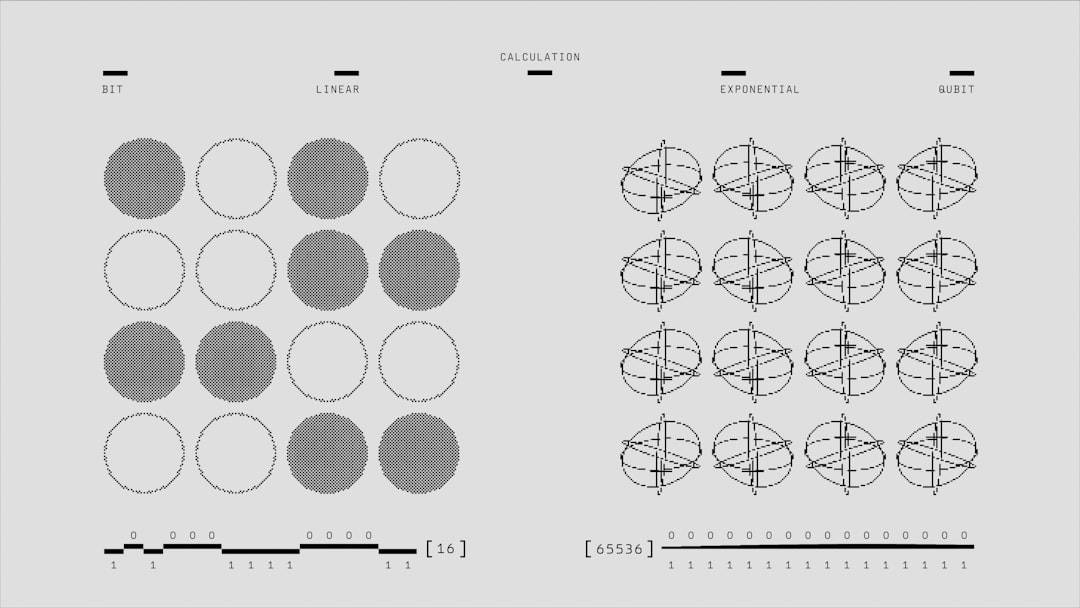

- Key features of PyTorch Lightning include automatic optimization, distributed training, and native support for mixed precision training.

- PyTorch Lightning simplifies the process of building and training complex AI models by providing a standardized structure and reducing boilerplate code.

- Leveraging PyTorch Lightning can lead to scalable and efficient deep learning workflows, making it easier to manage and scale AI projects.

Understanding the benefits of using PyTorch Lightning for AI and deep learning projects

Simplified Code Structure

PyTorch Lightning provides a standardized structure for organizing code, which promotes best practices and code reusability across different projects. Because every project follows the same layout (model definition, training step, optimizer configuration), code is easier to review, share, and adapt, which shortens the path from an idea to a working experiment.

Reproducibility and Scalability

Another significant benefit of PyTorch Lightning is its focus on reproducibility and scalability. For reproducibility, it offers a single seeding utility and a deterministic training mode, which make it easier to repeat a run. For scalability, the library provides built-in support for distributed training, allowing developers to scale their models across multiple GPUs or distributed computing clusters. This capability is crucial for handling large-scale datasets and training computationally intensive models.

Seamless Integration with the PyTorch Ecosystem

Furthermore, PyTorch Lightning integrates seamlessly with other popular libraries and tools in the PyTorch ecosystem, such as experiment loggers like TensorBoard, enabling developers to leverage a wide range of resources for their AI projects.

Exploring the key features and functionalities of PyTorch Lightning for AI development

PyTorch Lightning offers a wide range of features and functionalities that cater to the needs of AI researchers and developers. One of its key features is the LightningModule, which provides a standardized interface for defining deep learning models. By subclassing LightningModule, developers can encapsulate all aspects of their model, including data loading, forward pass, loss computation, and optimization, in a single cohesive unit.

This modular approach promotes code organization and reusability, making it easier to collaborate on AI projects and share code across different teams. In addition to the LightningModule, PyTorch Lightning provides a set of built-in callbacks and hooks that enable developers to customize the training process without having to modify the core logic of their models. These callbacks can be used to implement early stopping, learning rate scheduling, model checkpointing, and other advanced training techniques.

Furthermore, PyTorch Lightning integrates seamlessly with popular logging and visualization tools such as TensorBoard and WandB, allowing developers to monitor and analyze their training runs in real-time.

How PyTorch Lightning simplifies the process of building and training complex AI models

| Benefits of PyTorch Lightning | Explanation |
|---|---|
| Modular Code Structure | Allows for organized and reusable code components |
| Automatic Optimization | Automatically handles optimization and gradient accumulation |
| Native Distributed Training | Supports distributed training across multiple GPUs and nodes |
| Logging and Visualization | Provides built-in support for logging and visualization of training metrics |
| Community Support | Backed by a strong community for assistance and contributions |

PyTorch Lightning simplifies the process of building and training complex AI models by providing a high-level interface that abstracts away the low-level details of model training. This allows researchers and developers to focus on the core aspects of their projects, such as model architecture design and hyperparameter tuning, without getting bogged down by boilerplate code and infrastructure setup. By standardizing the structure of deep learning code, PyTorch Lightning promotes best practices and code reusability, making it easier to collaborate on AI projects and share code across different teams.
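To make the "low-level details" concrete, here is a sketch of the kind of hand-written loop that plain PyTorch requires, using only `torch`. The comments mark, roughly, which parts move into a `LightningModule` and which are taken over by the `Trainer` under automatic optimization. The function name `manual_fit` and the synthetic data are hypothetical.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def manual_fit(model: nn.Module, loader: DataLoader,
               epochs: int = 20, lr: float = 0.1) -> list:
    """Hand-written loop; returns the mean training loss of each epoch."""
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)  # configure_optimizers
    epoch_losses = []
    model.train()
    for _ in range(epochs):
        running = 0.0
        for x, y in loader:
            optimizer.zero_grad()                       # handled by the Trainer
            loss = nn.functional.mse_loss(model(x), y)  # training_step
            loss.backward()                             # handled by the Trainer
            optimizer.step()                            # handled by the Trainer
            running += loss.item()
        epoch_losses.append(running / len(loader))
    return epoch_losses


torch.manual_seed(0)
x = torch.linspace(-1, 1, 64).unsqueeze(1)
loader = DataLoader(TensorDataset(x, 2 * x + 1), batch_size=16, shuffle=True)
losses = manual_fit(nn.Linear(1, 1), loader)
print(f"first epoch: {losses[0]:.4f}, last epoch: {losses[-1]:.4f}")
```

This version omits device placement, checkpointing, logging, and multi-GPU synchronization, which is exactly the code that tends to grow and diverge between projects.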

Furthermore, PyTorch Lightning provides a set of built-in utilities for handling common tasks such as data loading, model training, and validation. This includes support for distributed training, mixed precision training, automatic optimization, and gradient accumulation, among other advanced features. By leveraging these utilities, developers can streamline the process of building and training complex AI models, ultimately leading to faster iteration and innovation in the field.

Additionally, PyTorch Lightning integrates seamlessly with other popular libraries and tools in the PyTorch ecosystem, enabling developers to leverage a wide range of resources for their AI projects.

Leveraging PyTorch Lightning for scalable and efficient deep learning workflows

PyTorch Lightning is well-suited for scalable and efficient deep learning workflows due to its built-in support for distributed training through pluggable strategies such as DistributedDataParallel (DDP), Fully Sharded Data Parallel (FSDP), and DeepSpeed. By leveraging these capabilities, developers can scale their models across multiple GPUs or distributed computing clusters without having to modify their core training logic. This enables them to handle large-scale datasets and train computationally intensive models more efficiently.

Furthermore, PyTorch Lightning supports mixed precision training, which runs most operations in a lower-precision format such as float16 or bfloat16 while keeping precision-sensitive steps in float32, usually with little or no loss in model accuracy. This can significantly reduce memory consumption and training time, making it easier to train larger models on limited hardware resources. Lightning also ships built-in profilers, selected through the Trainer’s profiler argument, and because training still runs on standard PyTorch, external tools such as NVIDIA’s Nsight Systems and Nsight Compute can be used to identify performance bottlenecks and optimize deep learning workflows.

Case studies and examples of how PyTorch Lightning has accelerated AI projects

Accelerating Natural Language Processing Research

Large language models are a common motivation for the features described above. GPT-3, developed by OpenAI, is one of the largest language models of its generation and was trained on hundreds of gigabytes of text. OpenAI has not published its training code, and the GPT-3 paper does not name PyTorch Lightning, so GPT-3 should not be read as a Lightning project. It does show the scale at which a modular structure and distributed training become essential, which is the kind of workload Lightning’s distributed training support is designed for.

Scaling Self-Supervised Learning in Computer Vision

DINO (Emerging Properties in Self-Supervised Vision Transformers), from researchers at Facebook AI, is a self-supervised learning method for vision transformers. The reference implementation released by its authors appears to be written in plain PyTorch rather than Lightning. Work of this kind involves experimenting with different self-supervised techniques and scaling training across multiple GPUs, both of which Lightning’s utilities for data loading, model training, and distributed execution are intended to simplify.

Unlocking Efficient AI Development

Both examples illustrate the demands of large-scale research: fast iteration on model code and efficient training across many devices. By providing a modular and scalable framework for AI development, PyTorch Lightning has become a widely used tool for researchers and developers working on projects with similar requirements.

Tips and best practices for maximizing the potential of PyTorch Lightning in AI and deep learning initiatives

To maximize the potential of PyTorch Lightning in AI and deep learning initiatives, developers should follow a set of tips and best practices that promote efficient usage of the library. Firstly, it is important to familiarize oneself with the core concepts and APIs of PyTorch Lightning by referring to the official documentation and community resources. This will help developers understand how to effectively use the library’s features and functionalities in their AI projects.

Secondly, developers should strive to adhere to best practices for organizing code using PyTorch Lightning’s modular structure. This includes encapsulating all aspects of model training within a LightningModule subclass, using built-in callbacks for advanced training techniques, and leveraging logging and visualization tools for monitoring training runs. By following these best practices, developers can ensure that their code is well-organized, maintainable, and scalable across different projects.

Lastly, it is important to stay updated with the latest developments in the PyTorch ecosystem and community contributions to PyTorch Lightning. This includes keeping an eye on new releases, feature enhancements, bug fixes, and performance optimizations that can improve the efficiency and scalability of deep learning workflows. By staying engaged with the community, developers can leverage the full potential of PyTorch Lightning in their AI initiatives and contribute back to the open-source ecosystem.


FAQs

What is PyTorch Lightning?

PyTorch Lightning is a lightweight PyTorch wrapper that provides a high-level interface for organizing and standardizing PyTorch code. It simplifies the training process and allows for cleaner, more organized code.

What are the key features of PyTorch Lightning?

Some key features of PyTorch Lightning include automatic optimization, distributed training, and native support for mixed precision training. It also provides a standardized structure for organizing PyTorch code, making it easier to write and maintain complex deep learning models.

How does PyTorch Lightning simplify the training process?

PyTorch Lightning simplifies the training process by abstracting away the boilerplate code required for setting up training loops, logging, and validation. This allows researchers and engineers to focus on the core logic of their models without getting bogged down in repetitive implementation details.

Is PyTorch Lightning suitable for distributed training?

Yes, PyTorch Lightning provides native support for distributed training across multiple GPUs and even multiple machines. It handles the complexities of distributed training, such as gradient synchronization and communication, allowing users to easily scale their models to larger datasets and more powerful hardware.

Can PyTorch Lightning be used for production deployment?

While PyTorch Lightning is primarily designed for research and development, it can also be used for production deployment. Its modular and organized structure makes it easier to maintain and scale deep learning models in a production environment. However, it’s important to consider the specific requirements and constraints of the production deployment when using PyTorch Lightning.
