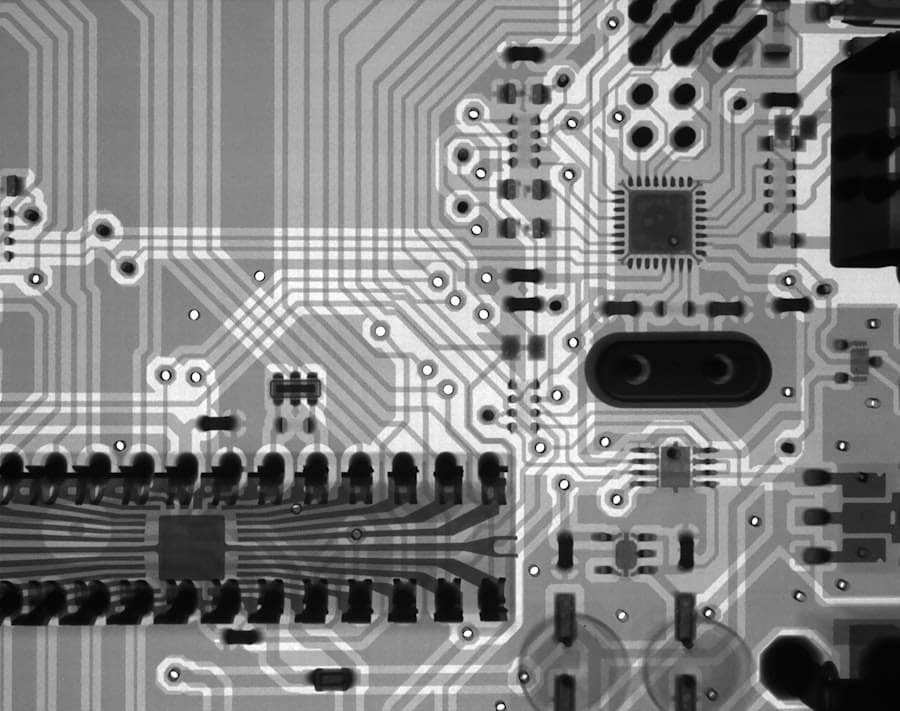

Field Programmable Gate Arrays (FPGAs) have become a key technology in artificial intelligence (AI), particularly for implementing neural networks. These devices allow for hardware customization to suit specific computational tasks, offering high performance and efficiency for AI applications. Unlike fixed-architecture processors, FPGAs can be reconfigured to optimize neural network algorithm execution, resulting in faster processing times and lower power consumption.

This adaptability is especially valuable in deep learning, where neural network complexity and size can vary significantly depending on the application. The integration of FPGAs with neural networks represents a significant advancement in AI, combining hardware programmability with machine learning capabilities. FPGAs’ parallel processing abilities enable the implementation of complex algorithms that might be constrained by conventional computing architectures.

This synergy enhances AI system performance and creates opportunities for innovation in sectors such as healthcare, automotive, and telecommunications. As demand for real-time data processing and intelligent decision-making grows, FPGA neural networks are positioned to play a crucial role in shaping the future of AI technologies.

Key Takeaways

- FPGA neural networks combine the power of field-programmable gate arrays (FPGAs) with the capabilities of neural networks to create efficient and flexible AI solutions.

- The impact of AI on FPGA technology has led to the development of specialized hardware and software tools that optimize the performance of neural networks on FPGAs.

- Using FPGAs for AI applications offers advantages such as low power consumption, high parallelism, and reconfigurability, making them suitable for real-time and edge computing applications.

- Challenges and limitations of implementing FPGA neural networks include the complexity of hardware design, limited resources, and the need for specialized expertise in FPGA programming.

- Case studies have demonstrated successful applications of FPGA neural networks in AI, including image recognition, natural language processing, and autonomous driving, showcasing their potential in various industries.

- Future trends in FPGA neural networks for AI include the integration of advanced algorithms, increased hardware acceleration, and the development of more user-friendly tools for FPGA programming.

- Overall, FPGA neural networks offer efficient, flexible hardware for a wide range of AI applications, and they are likely to support further advances in the field.

The Impact of AI on FPGA Technology

The rise of artificial intelligence has had a profound impact on the development and evolution of FPGA technology. As AI applications become increasingly sophisticated, there is a corresponding need for hardware that can efficiently handle the computational demands associated with training and deploying neural networks. FPGAs have risen to this challenge by providing a flexible platform that can be tailored to meet the specific requirements of various AI workloads. This adaptability allows engineers to optimize their designs for speed, power efficiency, and resource utilization, which are critical factors in deploying AI solutions at scale.

Moreover, the intersection of AI and FPGA technology has spurred innovation in design methodologies and tools. Traditional hardware design processes often involve lengthy development cycles and significant upfront investment. However, with the advent of AI-driven design tools, engineers can now leverage machine learning algorithms to automate aspects of the design process, resulting in faster prototyping and reduced time-to-market. This shift not only enhances productivity but also democratizes access to advanced FPGA capabilities, enabling a broader range of developers to harness the power of AI in their projects. As a result, we are witnessing a transformative period where AI is not just a consumer of FPGA technology but also a catalyst for its advancement.

Advantages of Using FPGA for AI Applications

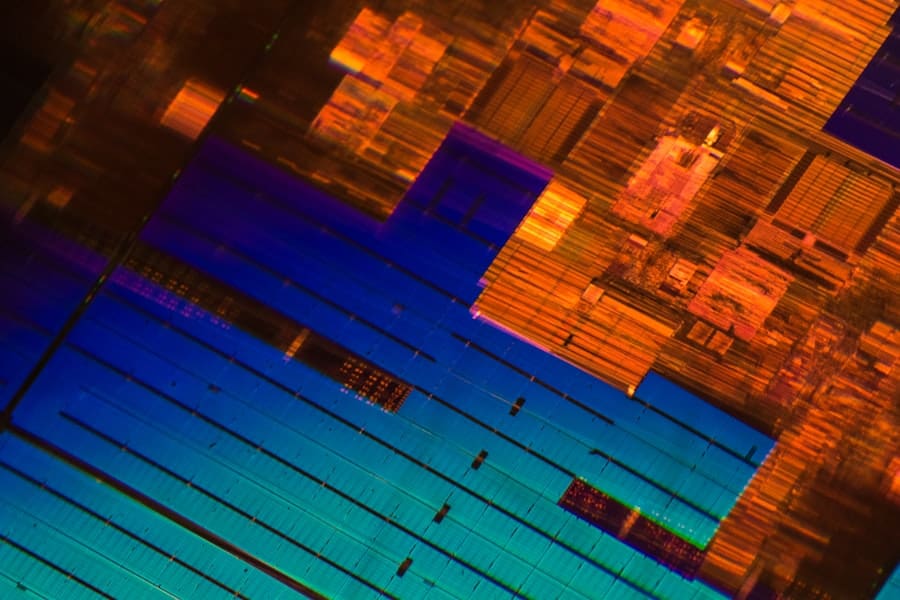

One of the most compelling advantages of using FPGAs for AI applications is their ability to process data in parallel. A CPU core works through an instruction stream largely in sequence, whereas an FPGA can be configured with many independent processing elements that all operate in the same clock cycle. This characteristic is particularly advantageous for neural networks, which involve large numbers of multiply-accumulate operations that can be performed concurrently. By distributing these computations across multiple processing elements within an FPGA, developers can achieve significant speedups, most commonly in the inference phase of machine learning models and, less often, in training.

In addition to their parallel processing capabilities, FPGAs can be more energy-efficient than general-purpose hardware such as GPUs or CPUs when the design is tailored to the workload. As AI applications become more prevalent, the demand for energy-efficient solutions has intensified, especially in edge computing scenarios where power constraints are critical. Because an FPGA can be optimized for a specific task, it can consume less power while still delivering high performance. This efficiency not only reduces operational costs but also extends the battery life of portable devices, making FPGAs a strong candidate for applications ranging from autonomous vehicles to wearable health monitors. The combination of speed and energy efficiency positions FPGAs as a formidable contender in the competitive landscape of AI hardware.
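To make the parallel-processing argument in this section concrete, the following is a minimal sketch of how the cycle count of a fully connected layer shrinks as more multiply-accumulate (MAC) units work side by side. It is an idealized model, not a measurement: the function name `dense_layer_cycles`, the layer dimensions, and the assumption that every MAC unit completes one operation per clock cycle with no memory stalls are all illustrative assumptions.

```python
import math


def dense_layer_cycles(n_inputs: int, n_outputs: int, parallel_macs: int) -> int:
    """Idealized clock cycles for one dense layer.

    Assumes each of the `parallel_macs` units finishes one
    multiply-accumulate per cycle and is never starved of data.
    """
    if parallel_macs < 1:
        raise ValueError("parallel_macs must be at least 1")
    total_macs = n_inputs * n_outputs  # one MAC per weight
    return math.ceil(total_macs / parallel_macs)


if __name__ == "__main__":
    # Hypothetical layer: 256 inputs and 128 outputs.
    sequential = dense_layer_cycles(256, 128, parallel_macs=1)
    parallel = dense_layer_cycles(256, 128, parallel_macs=64)
    print(f"1 MAC unit:   {sequential} cycles")
    print(f"64 MAC units: {parallel} cycles")
    print(f"ideal speedup: {sequential / parallel:.1f}x")
```

In a real design the achievable speedup is usually lower than this ideal figure, because memory bandwidth and the number of available arithmetic blocks limit how many MAC units can be kept busy.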

Challenges and Limitations of Implementing FPGA Neural Networks

| Challenges and Limitations of Implementing FPGA Neural Networks |
|---|
| 1. Limited resources on FPGA devices |
| 2. Higher power consumption than a comparable fixed-function ASIC |
| 3. Difficulty in programming and debugging |
| 4. Limited support for complex neural network architectures |
| 5. Limited memory bandwidth |
| 6. Limited flexibility for real-time adaptation |

Despite their numerous advantages, implementing FPGA neural networks is not without its challenges and limitations. One significant hurdle is the complexity associated with programming FPGAs. Unlike software development for CPUs or GPUs, which often relies on high-level programming languages, FPGA programming typically requires knowledge of hardware description languages (HDLs) such as VHDL or Verilog.

This steep learning curve can deter many developers from fully embracing FPGA technology, particularly those who may not have a background in hardware design. Consequently, there is a pressing need for more user-friendly development tools that can abstract some of this complexity while still allowing for efficient hardware utilization.

Another challenge lies in the resource constraints inherent to FPGAs. While these devices are highly flexible, they offer a fixed amount of on-chip logic, memory, and arithmetic blocks, which is small compared to larger computing systems. This limitation can pose difficulties when attempting to implement large-scale neural networks that require substantial memory and processing power. Developers must carefully balance their designs to ensure that they fit within the available resources while still meeting performance requirements.
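A quick back-of-the-envelope check can show whether a network's parameters fit in on-chip memory at all. The sketch below is one way to do that for a fully connected network. The layer sizes, the 4 Mbit memory budget, and the helper names `weight_storage_bits` and `fits_on_chip` are hypothetical and chosen only for illustration; real devices differ widely in capacity.

```python
def weight_storage_bits(layer_sizes, bits_per_weight):
    """Bits needed to store all weights and biases of a dense network.

    `layer_sizes` lists the width of each layer, input first.
    """
    total_params = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total_params += fan_in * fan_out + fan_out  # weights + biases
    return total_params * bits_per_weight


def fits_on_chip(layer_sizes, bits_per_weight, on_chip_bits):
    """True if the parameters fit within the given on-chip memory budget."""
    return weight_storage_bits(layer_sizes, bits_per_weight) <= on_chip_bits


if __name__ == "__main__":
    layers = [784, 256, 128, 10]   # hypothetical small classifier
    budget = 4 * 1024 * 1024       # hypothetical 4 Mbit of on-chip memory
    for bits in (32, 16, 8):
        needed = weight_storage_bits(layers, bits)
        verdict = "fits" if fits_on_chip(layers, bits, budget) else "does not fit"
        print(f"{bits:2d}-bit weights: {needed} bits, {verdict}")
```

With these assumed numbers, the 32-bit version exceeds the budget while the 16-bit and 8-bit versions fit, which is why reduced-precision arithmetic is a common way to bring a model within an FPGA's limits.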

Additionally, optimizing neural network architectures specifically for FPGA deployment often necessitates trade-offs in model accuracy or complexity. As such, finding the right balance between performance and resource utilization remains a critical consideration for those looking to leverage FPGAs for AI applications.
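One common source of the accuracy trade-off mentioned above is storing weights in low-precision fixed-point form rather than floating point. The following sketch, which assumes a simple signed fixed-point format and randomly generated stand-in weights, shows how the rounding error grows as the bit width shrinks. The `quantize` and `mean_abs_error` helpers are illustrative names, not part of any FPGA toolchain.

```python
import random


def quantize(value: float, frac_bits: int, total_bits: int) -> float:
    """Round to signed fixed point and saturate at the representable range."""
    scale = 1 << frac_bits
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    code = max(lowest, min(highest, round(value * scale)))
    return code / scale


def mean_abs_error(values, frac_bits, total_bits):
    """Average absolute difference between values and their quantized form."""
    errors = [abs(v - quantize(v, frac_bits, total_bits)) for v in values]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    random.seed(0)
    # Stand-in weights in [-1, 1]; a trained model would supply real ones.
    weights = [random.uniform(-1.0, 1.0) for _ in range(1000)]
    for total_bits in (16, 8, 4):
        frac_bits = total_bits - 1  # one sign bit, the rest fractional
        err = mean_abs_error(weights, frac_bits, total_bits)
        print(f"{total_bits:2d}-bit weights: mean abs error {err:.6f}")
```

Weight error is only a proxy for model accuracy. How much a given network tolerates depends on the model and the task, so the effect should be measured on validation data before a bit width is chosen.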

Case Studies: Successful Applications of FPGA Neural Networks in AI

Numerous case studies illustrate the application of FPGA neural networks across various industries, showcasing their potential to drive innovation and efficiency. One frequently cited example is autonomous driving, where companies such as Tesla and Waymo are reported to have used FPGAs in their vehicle systems to process large volumes of sensor data in real time. Using FPGAs for tasks such as object detection and classification can keep latency low, an essential requirement for real-time decision-making on the road, while supporting the safety and reliability of the overall system.

Another compelling case study can be found in healthcare, where FPGAs are being employed for medical imaging applications such as MRI and CT. Researchers have developed FPGA-based systems that accelerate image reconstruction algorithms, significantly reducing the time required to generate high-quality images from raw data. This advancement can improve patient outcomes by enabling faster diagnoses, and it also enhances operational efficiency within medical facilities. By harnessing FPGA-based acceleration, healthcare providers can deliver timely and accurate results while optimizing resource utilization, a critical factor in today's healthcare landscape.

Future Trends and Developments in FPGA Neural Networks for AI

Looking ahead, several trends are poised to shape the future development of FPGA neural networks within the AI landscape. One prominent trend is the increasing integration of machine learning techniques into FPGA design processes themselves. As AI continues to evolve, we can expect to see more sophisticated tools that leverage machine learning algorithms to optimize hardware configurations automatically. This shift will likely streamline the design process further, enabling developers to create highly efficient FPGA implementations with minimal manual intervention.

Additionally, as edge computing gains traction, FPGAs are expected to play an even more significant role in enabling intelligent processing at the edge of networks. With the proliferation of IoT devices generating vast amounts of data, there is a growing need for localized processing capabilities that can deliver real-time insights without relying on cloud infrastructure. FPGAs are well positioned to meet this demand due to their ability to perform complex computations efficiently while consuming little power. As advancements continue in both FPGA technology and AI algorithms, we can anticipate a future where FPGA neural networks become integral components of intelligent edge devices across various sectors.

The Potential of FPGA Neural Networks in Revolutionizing AI

In conclusion, FPGA neural networks represent a transformative force within the realm of artificial intelligence, offering unique advantages that address some of the most pressing challenges faced by traditional computing architectures. Their ability to provide high-performance parallel processing combined with energy efficiency makes them an ideal choice for a wide range of AI applications—from autonomous vehicles to healthcare solutions. While challenges remain in terms of programming complexity and resource constraints, ongoing advancements in design tools and methodologies are paving the way for broader adoption.

As we look toward the future, it is clear that FPGA neural networks will continue to play a pivotal role in revolutionizing AI technologies. The convergence of machine learning with hardware design will unlock new possibilities for innovation and efficiency across industries. With their adaptability and performance capabilities, FPGAs are not just enhancing existing AI applications; they are also enabling entirely new paradigms that will shape how we interact with technology in our daily lives. The potential for FPGA neural networks is vast, and as research and development efforts continue to advance this field, we can expect to see even more groundbreaking applications emerge in the years ahead.


FAQs

What is an FPGA neural network?

An FPGA neural network is a type of artificial neural network that is implemented on a field-programmable gate array (FPGA) hardware platform. FPGAs are integrated circuits that can be configured to perform specific tasks, making them well-suited for implementing neural network algorithms.

How does an FPGA neural network work?

An FPGA neural network works by using the reconfigurable nature of FPGAs to implement the various components of a neural network, such as neurons, connections, and layers. This allows for parallel processing and efficient execution of neural network algorithms.
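As a rough software model of what such a hardware neuron computes, the sketch below uses integer-only arithmetic: a multiply-accumulate over the inputs, a bias add, a shift to rescale, and a ReLU activation. The fixed-point format (4 fractional bits), the choice of ReLU, and the function names are assumptions made for illustration. On an FPGA, each neuron in a layer could be built as its own circuit so that all of them run at the same time, whereas this Python loop evaluates them one after another.

```python
def relu(x: int) -> int:
    """Rectified linear unit on an integer value."""
    return x if x > 0 else 0


def neuron_fixed_point(inputs, weights, bias, frac_bits):
    """Integer neuron: multiply-accumulate, add bias, rescale, apply ReLU.

    `inputs` and `weights` hold fixed-point codes with `frac_bits`
    fractional bits; `bias` is given at accumulator scale (2 * frac_bits).
    """
    acc = bias
    for x, w in zip(inputs, weights):
        acc += x * w                 # product carries 2 * frac_bits
    return relu(acc >> frac_bits)    # shift back to frac_bits


def dense_layer(inputs, weight_rows, biases, frac_bits):
    """One layer: every row of weights feeds one neuron."""
    return [
        neuron_fixed_point(inputs, row, b, frac_bits)
        for row, b in zip(weight_rows, biases)
    ]


if __name__ == "__main__":
    FRAC_BITS = 4                        # 1.0 is stored as 16
    inputs = [16, 8]                     # 1.0 and 0.5
    weight_rows = [[8, 8], [-16, 4]]     # [0.5, 0.5] and [-1.0, 0.25]
    outputs = dense_layer(inputs, weight_rows, [0, 0], FRAC_BITS)
    print([o / (1 << FRAC_BITS) for o in outputs])
```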

What are the advantages of using an FPGA for neural networks?

Using an FPGA for neural networks offers advantages such as high parallelism, low power consumption, and flexibility in design. FPGAs can also be reconfigured to adapt to different neural network architectures and algorithms, making them suitable for a wide range of applications.

What are some applications of FPGA neural networks?

FPGA neural networks are used in various applications such as image and speech recognition, natural language processing, autonomous vehicles, robotics, and financial modeling. They are also used in edge computing and IoT devices where low power consumption and real-time processing are important.

What are the challenges of implementing FPGA neural networks?

Challenges of implementing FPGA neural networks include the complexity of designing and optimizing the hardware architecture, as well as the need for specialized knowledge in FPGA programming and neural network algorithms. Additionally, FPGAs may have limited resources compared to traditional GPUs or CPUs.
