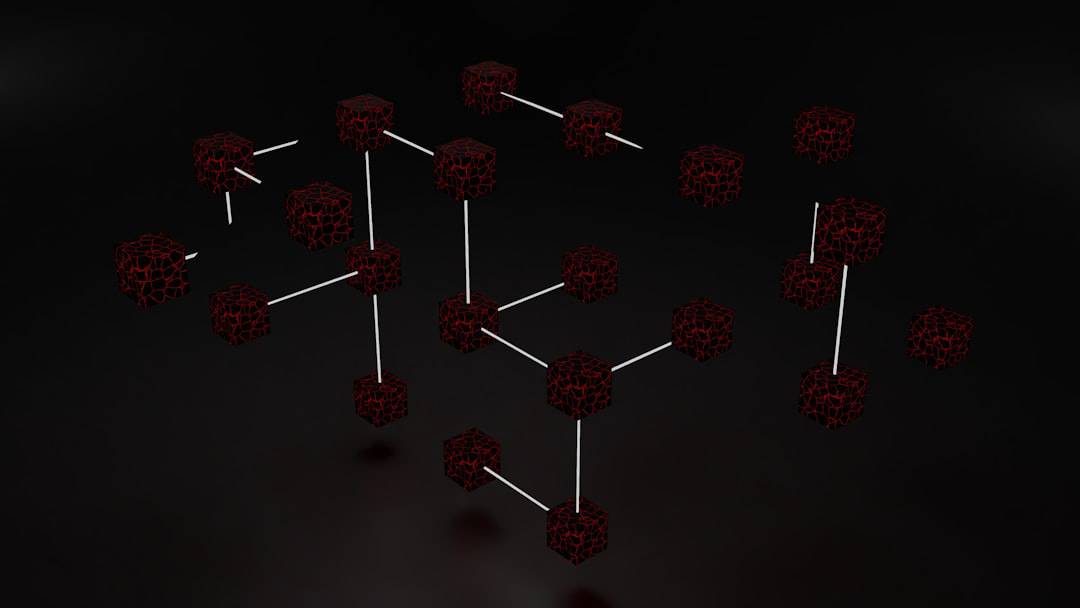

Neural networks are a fundamental component of artificial intelligence (AI) systems, and hidden layers play a crucial role in their functionality. Hidden layers are the intermediary layers between the input and output layers of a neural network, where the complex computations and transformations take place. These hidden layers are responsible for extracting and learning intricate patterns and features from the input data, which are then used to make predictions or classifications.

The number of hidden layers and the number of neurons within each hidden layer are key factors in determining the network’s ability to learn and generalize from the input data. Hidden layers enable neural networks to perform nonlinear transformations on the input data, allowing them to capture complex relationships and patterns that may not be apparent in the raw data. This ability to learn and represent intricate features is what gives neural networks their power in solving a wide range of AI tasks, such as image recognition, natural language processing, and predictive analytics.

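Without hidden layers, a neural network reduces to a linear model (or, if the output unit has an activation function, a generalized linear model such as logistic regression). Such a model can only separate classes with a linear decision boundary, which severely restricts its ability to model real-world phenomena. The same holds if hidden layers are present but use no nonlinear activation function, because a stack of purely linear layers collapses into a single linear transformation. Understanding the role of hidden layers is therefore essential for developing effective neural network architectures and harnessing the full potential of AI systems.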
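The XOR function is a classic way to see this limitation. The sketch below is illustrative only: the weights of the hidden-layer network are chosen by hand rather than learned, and the names `linear_unit` and `xor_with_hidden_layer` are made up for this example. It searches a coarse grid of weights for a model with no hidden layer and finds none that reproduces XOR, while a network with two hidden units does.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def linear_unit(x1, x2, w1, w2, b):
    """A network with no hidden layer: one weighted sum plus a threshold."""
    return step(w1 * x1 + w2 * x2 + b)


def xor_with_hidden_layer(x1, x2):
    """Two hand-wired hidden units (OR and AND) feed one output unit."""
    h_or = step(x1 + x2 - 0.5)       # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)      # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)  # "OR but not AND" is XOR


XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

hidden_ok = all(xor_with_hidden_layer(a, b) == y for (a, b), y in XOR.items())

# Try every weight combination on a coarse grid from -3.0 to 3.0.
grid = [i / 2 for i in range(-6, 7)]
linear_ok = any(
    all(linear_unit(a, b, w1, w2, bias) == y for (a, b), y in XOR.items())
    for w1 in grid
    for w2 in grid
    for bias in grid
)

print("hidden layer solves XOR:", hidden_ok)
print("some linear unit solves XOR:", linear_ok)
```

The grid search is not a proof, but the result matches the known fact that XOR is not linearly separable: no single line can put (0, 1) and (1, 0) on one side and (0, 0) and (1, 1) on the other.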

Key Takeaways

- Hidden layers in neural networks are responsible for extracting and transforming input data to produce meaningful output.

- The number and arrangement of hidden layers can significantly impact the performance and accuracy of AI systems.

- The complexity of hidden layers lies in their ability to learn and represent intricate patterns and relationships within data.

- Deep learning in AI harnesses the potential of hidden layers to process and understand complex information for improved predictive capabilities.

- Training neural networks with hidden layers is crucial for enabling the system to learn and make accurate predictions.

Exploring the Impact of Hidden Layers on AI Performance

Underfitting and Overfitting

If there are too few hidden layers or neurons, the network may underfit: it lacks the capacity to capture the complexity of the data, so it performs poorly on the training data and on unseen examples alike. If there are too many hidden layers or neurons relative to the amount of training data, the network may overfit: it memorizes the training data rather than generalizing from it, so it performs well on the training set but poorly on new data.
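In practice the two failure modes are usually told apart by comparing the error on the training set with the error on a held-out validation set. The helper below is a rough sketch of that comparison. The function name `diagnose_fit` and both thresholds are assumptions made for illustration; sensible values depend on the task and the error metric.

```python
def diagnose_fit(train_error, val_error, high_error=0.2, max_gap=0.1):
    """Label a model's fit from its training and validation error.

    high_error and max_gap are illustrative thresholds, not standard values.
    """
    if train_error > high_error:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Good on training data, much worse on held-out data.
        return "overfitting"
    return "reasonable fit"


print(diagnose_fit(0.35, 0.37))
print(diagnose_fit(0.02, 0.30))
print(diagnose_fit(0.08, 0.10))
```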

Architecture and Connectivity

The arrangement and connectivity of hidden layers also play a crucial role in determining the network’s performance. Different architectures, such as feedforward, recurrent, and convolutional neural networks, utilize hidden layers in distinct ways to solve specific AI tasks.

Designing Effective Neural Networks

Convolutional neural networks, for instance, use hidden layers with shared weights to efficiently extract spatial hierarchies of features from image data, while recurrent neural networks use hidden layers with feedback loops to model sequential dependencies in time-series data. Understanding how these choices affect performance is essential for designing and training models that generalize well to new, unseen data.
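Both ideas fit in a few lines. The sketch below is a deliberately simplified illustration with made-up numbers: `conv1d` slides one small kernel across a one-dimensional signal (real convolutional layers work on images and learn many kernels), and `rnn_step` updates a single scalar hidden state (real recurrent layers use vectors and weight matrices).

```python
import math


def conv1d(signal, kernel):
    """Slide one shared kernel over the signal (no padding)."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: the new state depends on the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


# Shared weights: the same two-number kernel is reused at every position.
edges = conv1d([0, 0, 1, 1, 1, 0], [-1, 1])

# Feedback loop: an input at the first time step echoes through later states.
h = 0.0
states = []
for x_t in [1.0, 0.0, 0.0]:
    h = rnn_step(x_t, h, w_x=1.0, w_h=0.5, b=0.0)
    states.append(h)

print(edges)
print(states)
```

The kernel responds with a positive value where the signal rises and a negative value where it falls, wherever that happens in the input. The recurrent state stays nonzero after the input has gone back to zero, which is how the layer carries information forward in time.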

Uncovering the Complexity of Hidden Layers in Neural Network Architecture

The complexity of hidden layers in neural network architecture lies in their ability to learn and represent intricate patterns and features from the input data. Each hidden layer computes a weighted sum of its inputs plus a bias for every neuron, then passes the result through a nonlinear activation function to produce a higher-level representation of the input features. As the data passes through successive hidden layers, its representation becomes increasingly abstract, allowing the network to capture hierarchical relationships and dependencies within the data.
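A forward pass through a small fully connected network makes this concrete. The code below is a minimal sketch in plain Python: the layer sizes, weights, and the helper names `dense` and `forward` are invented for illustration, and a real model would use a numerical library and learned weights.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One layer: a weighted sum plus bias per neuron, then the activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed x through each layer and keep every intermediate representation."""
    activations = [x]
    for weights, biases, activation in layers:
        activations.append(dense(activations[-1], weights, biases, activation))
    return activations


layers = [
    # Hidden layer: 2 inputs -> 3 neurons, ReLU activation.
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0], relu),
    # Output layer: 3 hidden values -> 1 neuron, sigmoid activation.
    ([[1.0, 1.0, 1.0]], [-1.0], sigmoid),
]

activations = forward([2.0, 1.0], layers)
print("hidden representation:", activations[1])
print("output:", activations[2])
```

Each entry of `activations` is the input to the next layer, which is the cascading effect that lets later layers build on features computed by earlier ones.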

Moreover, the interactions between hidden layers add another layer of complexity to neural network architecture. The representations learned by one hidden layer serve as input to the next hidden layer, creating a cascading effect that enables the network to learn increasingly sophisticated features. This hierarchical learning process is what allows neural networks to model complex real-world phenomena and make accurate predictions or classifications.

However, this complexity also presents challenges in training and optimizing neural network models, as it requires careful tuning of hyperparameters and regularization techniques to prevent overfitting or underfitting. Understanding the complexity of hidden layers is crucial for developing effective neural network architectures that can learn from diverse and high-dimensional data. By unraveling the intricate transformations and representations that occur within hidden layers, researchers and practitioners can gain insights into how neural networks process information and make decisions, leading to advancements in AI capabilities.

Harnessing the Potential of Hidden Layers for Deep Learning in AI

| Hidden Layer Configuration | Accuracy | Training Time |
|---|---|---|
| 1 hidden layer | 85% | 2 hours |
| 2 hidden layers | 88% | 3 hours |
| 3 hidden layers | 90% | 4 hours |

Hidden layers are at the core of deep learning, a subfield of AI that focuses on training neural networks with multiple hidden layers to learn from complex and unstructured data. Deep learning leverages the potential of hidden layers to automatically discover intricate patterns and features from raw input data, without requiring manual feature engineering. This ability to learn hierarchical representations of the input data is what sets deep learning apart from traditional machine learning approaches, enabling it to achieve state-of-the-art performance on various AI tasks.

Furthermore, deep learning architectures, such as deep neural networks and deep convolutional networks, utilize hidden layers with specialized structures and connectivity patterns to extract high-level abstractions from different types of data, such as images, text, and audio. These architectures leverage the potential of hidden layers to capture complex relationships within the data, enabling them to solve challenging AI problems, such as object detection, speech recognition, and language translation. Harnessing the potential of hidden layers for deep learning is essential for pushing the boundaries of AI capabilities and developing innovative solutions for real-world applications.

Revealing the Importance of Hidden Layers in Training Neural Networks

The importance of hidden layers in training neural networks lies in their ability to learn meaningful representations from the input data through a process known as feature extraction. During training, the network adjusts the weights and biases within each hidden layer using optimization algorithms, such as gradient descent, to minimize a loss function that measures the disparity between the predicted outputs and the ground truth labels. This iterative process allows the network to learn increasingly abstract and discriminative features from the input data, which are essential for making accurate predictions or classifications.
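The training loop described above can be sketched end to end for a tiny network. This is an illustrative example under several assumptions that are not in the original text: the XOR dataset, three tanh hidden units, a sigmoid output, mean squared error as the loss, full-batch gradient descent, and a learning rate of 0.5. Gradients are written out by hand, whereas a deep learning framework would compute them automatically.

```python
import math
import random

DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
HIDDEN = 3  # number of hidden units (an arbitrary choice)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed=0):
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)],
        "b1": [0.0] * HIDDEN,
        "w2": [rng.uniform(-1, 1) for _ in range(HIDDEN)],
        "b2": 0.0,
    }


def forward(p, x):
    """Return the hidden activations and the network output for one input."""
    h = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(p["w1"], p["b1"])
    ]
    y = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y


def mean_squared_error(p):
    return sum((forward(p, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


def gradient_step(p, lr):
    """One full-batch gradient descent update using backpropagation."""
    n = len(DATA)
    g_w1 = [[0.0, 0.0] for _ in range(HIDDEN)]
    g_b1 = [0.0] * HIDDEN
    g_w2 = [0.0] * HIDDEN
    g_b2 = 0.0
    for x, t in DATA:
        h, y = forward(p, x)
        # Gradient of the loss with respect to the output unit's pre-activation.
        d_out = 2.0 * (y - t) * y * (1.0 - y) / n
        g_b2 += d_out
        for j in range(HIDDEN):
            g_w2[j] += d_out * h[j]
            # Backpropagate through tanh, whose derivative is 1 - tanh(z)^2.
            d_hid = d_out * p["w2"][j] * (1.0 - h[j] ** 2)
            g_b1[j] += d_hid
            for i in range(2):
                g_w1[j][i] += d_hid * x[i]
    # Move every weight and bias a small step against its gradient.
    p["b2"] -= lr * g_b2
    for j in range(HIDDEN):
        p["w2"][j] -= lr * g_w2[j]
        p["b1"][j] -= lr * g_b1[j]
        for i in range(2):
            p["w1"][j][i] -= lr * g_w1[j][i]


params = init_params()
initial_loss = mean_squared_error(params)
for _ in range(2000):
    gradient_step(params, lr=0.5)
final_loss = mean_squared_error(params)

print("loss before training:", initial_loss)
print("loss after training:", final_loss)
```

How far the loss falls depends on the random initialization and the learning rate, so the numbers printed here should be read as one run of a toy setup rather than a benchmark.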

Hidden layers do not prevent overfitting by themselves. They add capacity, which raises the risk that the network memorizes the training data. For that reason, regularization techniques such as dropout and weight decay are commonly applied to hidden layers, encouraging them to learn robust representations that generalize to new, unseen examples. Depth also makes optimization harder: gradients can vanish or explode as they propagate back through many hidden layers. Effective training strategies therefore have to address both generalization and stable gradient flow.
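The two regularizers named here are simple to write down. The sketch below assumes the common "inverted" form of dropout, which rescales the surviving activations during training, and weight decay expressed as an L2 penalty added to the gradient. The function names are hypothetical, and the drop probability must be less than 1.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero units at random and rescale the survivors."""
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def sgd_step_with_weight_decay(weights, grads, lr, weight_decay):
    """Gradient step on loss + (weight_decay / 2) * sum of squared weights."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


rng = random.Random(0)
hidden = [1.0, 2.0, 3.0, 4.0]

dropped = dropout(hidden, drop_prob=0.5, rng=rng)
at_inference = dropout(hidden, drop_prob=0.5, rng=rng, training=False)

# With zero gradients, the only change comes from the decay term.
decayed = sgd_step_with_weight_decay([1.0, -2.0], [0.0, 0.0], lr=0.1, weight_decay=0.5)

print(dropped)
print(at_inference)
print(decayed)
```

During training each hidden activation is either zeroed or scaled up, so the layer cannot rely on any single unit. At inference time the activations pass through unchanged. Weight decay pulls every weight toward zero on each step, which discourages the large weights that often accompany memorization.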

Leveraging Hidden Layers to Enhance AI Predictive Capabilities

Hidden layers play a crucial role in enhancing AI predictive capabilities by enabling neural networks to learn complex patterns and relationships within the input data.

Learning Complex Patterns

The representations learned by hidden layers capture intricate features that are essential for making accurate predictions or classifications on new examples. By leveraging these representations, neural networks can generalize well to diverse and high-dimensional data, leading to improved predictive performance across various AI tasks.

Automatic Feature Extraction

Furthermore, hidden layers enable neural networks to perform automatic feature extraction, eliminating the need for manual feature engineering and allowing them to learn from raw input data directly. This capability is particularly advantageous in domains with large-scale and unstructured data, such as computer vision and natural language processing, where traditional feature engineering approaches may be impractical or ineffective.

Enhancing Predictive Capabilities

Leveraging hidden layers to enhance AI predictive capabilities is essential for developing advanced predictive models that can effectively analyze and interpret complex real-world data.

Maximizing the Efficiency of Neural Networks through Hidden Layer Optimization

Maximizing the efficiency of neural networks through hidden layer optimization involves fine-tuning various aspects of hidden layers to improve model performance and computational efficiency. This optimization process includes adjusting hyperparameters, such as the number of hidden layers, the number of neurons within each layer, and the choice of activation functions, to achieve a balance between model capacity and generalization ability. Additionally, optimizing the connectivity patterns between hidden layers can enhance information flow within the network and facilitate more effective feature learning.
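One quick way to reason about the capacity side of this trade-off is to count trainable parameters. The helper below assumes a plain fully connected network, where each layer has one weight per input-output pair plus one bias per neuron. The layer sizes are hypothetical examples (784 inputs and 10 outputs would suit 28x28 images with ten classes).

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network.

    layer_sizes lists the width of every layer, input and output included.
    """
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


small = count_parameters([784, 32, 10])         # one narrow hidden layer
large = count_parameters([784, 512, 512, 10])   # two wide hidden layers

print(small)  # 25450
print(large)  # 669706
```

The second configuration has roughly 26 times as many parameters as the first, which means more capacity to fit complex patterns but also more computation and a greater need for data or regularization.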

Moreover, optimizing hidden layers involves implementing advanced techniques, such as batch normalization and residual connections, to stabilize training and accelerate convergence during optimization. These techniques help mitigate issues such as vanishing gradients or exploding gradients that can hinder training progress in deep neural networks with multiple hidden layers. Maximizing the efficiency of neural networks through hidden layer optimization is essential for developing scalable and high-performance models that can handle large-scale datasets and complex AI tasks effectively.
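Both techniques are small operations wrapped around a hidden layer. The sketch below is simplified for illustration: `batch_norm` normalizes a single feature across one batch and omits the running statistics that a real layer keeps for inference, and `residual_block` accepts any function standing in for a hidden layer. The names and sample values are assumptions for this example.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch, then scale and shift it."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def residual_block(x, layer_fn):
    """Add the layer's output back onto its input (a skip connection)."""
    return [xi + fi for xi, fi in zip(x, layer_fn(x))]


# Activations on very different scales come out with mean 0 and variance near 1.
normalized = batch_norm([10.0, 20.0, 30.0, 40.0])

# If the wrapped layer outputs zeros, the block simply passes its input through.
skipped = residual_block([1.0, -2.0], lambda v: [0.0 for _ in v])

print(normalized)
print(skipped)
```

Normalization keeps the inputs to each layer in a predictable range, and the skip connection gives gradients a direct path around the layer. Both properties are commonly credited with making deep stacks of hidden layers easier to train.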

In conclusion, understanding the role of hidden layers in neural networks is crucial for unlocking their potential in AI systems. Hidden layers play a pivotal role in capturing complex patterns and relationships within the input data, enabling neural networks to learn from diverse and high-dimensional datasets effectively. By exploring the impact of hidden layers on AI performance and uncovering their complexity in neural network architecture, researchers and practitioners can harness their potential for deep learning and enhance predictive capabilities in AI systems.

Furthermore, revealing the importance of hidden layers in training neural networks and leveraging them for optimization can maximize the efficiency of neural networks and lead to advancements in AI capabilities. Overall, hidden layers are integral components of neural network models that hold immense promise for driving innovation in artificial intelligence.


FAQs

What is a hidden layer in a neural network?

A hidden layer in a neural network is a layer of nodes that sits between the input layer and the output layer. It is where the network processes the input data and learns to make predictions.

What does a hidden layer do?

The hidden layer is responsible for extracting features from the input data and learning the underlying patterns in the data. It helps the neural network make more accurate predictions by capturing complex relationships between the input and output.

How many hidden layers should a neural network have?

The number of hidden layers in a neural network is a design choice and depends on the complexity of the problem. Simple problems can often be handled with one or two hidden layers, while deep learning models for tasks such as image or language processing use many more.

What role do activation functions play in hidden layers?

Activation functions in the hidden layer introduce nonlinearities to the network, allowing it to learn and represent complex relationships in the data. Common activation functions used in hidden layers include ReLU, sigmoid, and tanh.

How are hidden layers trained?

During training, the weights and biases in the hidden layer are adjusted using optimization algorithms such as gradient descent. The goal is to minimize the error between the predicted output and the actual output by updating the weights and biases based on the gradients of the loss function.
