Overfitting is a significant challenge in machine learning that occurs when a model becomes excessively complex relative to the training data. This phenomenon results in the model learning not only the underlying patterns but also the noise and random variations present in the training set. Consequently, the model exhibits high performance on the training data but fails to generalize effectively to new, unseen data.

The underlying cause is a mismatch between the model’s capacity and the amount of training data available. A model with more flexibility than the data can support has high variance: small changes in the training set produce large changes in its predictions. Such a model may score exceptionally well on the training set yet struggle with new data, which compromises its predictive value.

Overfitting can manifest in various types of machine learning algorithms, including regression, classification, and clustering models. It is crucial for data scientists and machine learning practitioners to recognize the signs of overfitting and implement appropriate techniques to mitigate its effects. Failure to address overfitting can lead to inaccurate and unreliable predictions, potentially undermining the effectiveness of the machine learning solution in real-world applications.

To combat overfitting, practitioners employ various strategies such as regularization, cross-validation, and early stopping. These techniques help to balance model complexity with generalization ability, ensuring that the model performs well on both training and unseen data. By understanding and addressing overfitting, data scientists can develop more robust and reliable machine learning models that deliver accurate predictions across a wide range of scenarios.

Key Takeaways

- Overfitting occurs when a machine learning model performs well on training data but poorly on new, unseen data.

- The bias-variance tradeoff in AI refers to the balance between bias, the error a model makes because it is too simple to capture the true relationship in the data, and variance, its sensitivity to small fluctuations in the training data.

- Techniques for preventing overfitting include using simpler models, increasing the amount of training data, and applying regularization methods such as L1 and L2 regularization.

- Cross-validation helps to assess a model’s performance on unseen data, while regularization methods like dropout and early stopping can prevent overfitting in machine learning models.

- Feature selection and dimensionality reduction techniques such as PCA and LDA can help to reduce overfitting by focusing on the most important features and reducing the complexity of the model.

Understanding the Bias-Variance Tradeoff in AI

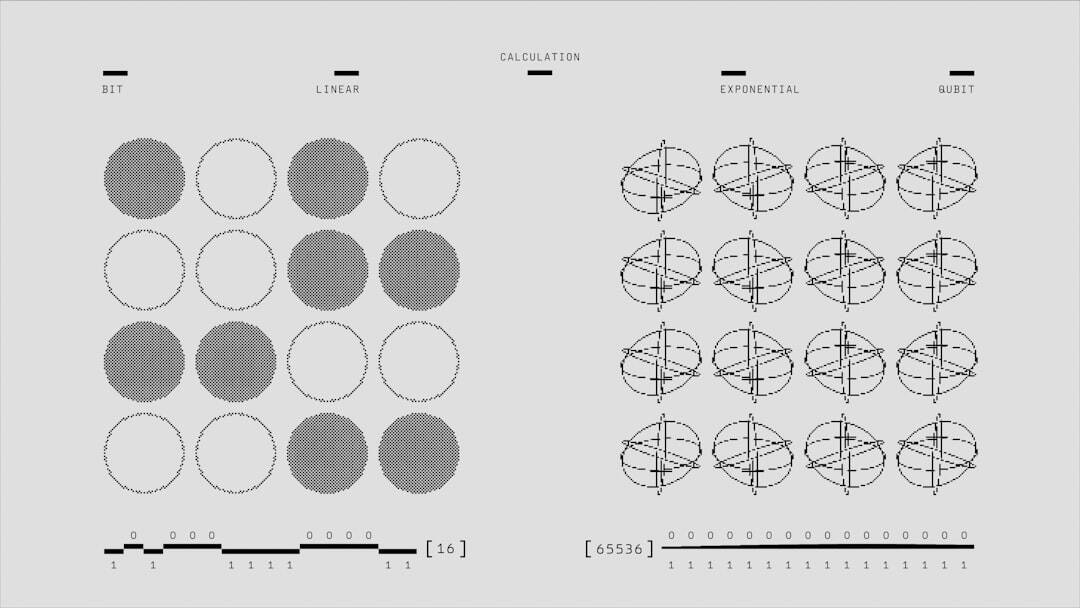

The bias-variance tradeoff states that as the complexity of a model increases, its bias typically decreases while its variance increases, and vice versa. A more complex model is better able to fit the training data, but it also becomes more sensitive to small fluctuations in that data.
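For squared-error loss, the tradeoff can be written down explicitly. As a sketch, assume the data is generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and let $\hat f$ be the model fitted on a randomly drawn training set (this notation is introduced here for illustration). The expected prediction error at a point $x$, averaged over training sets and noise, then splits into three terms:

```latex
\mathbb{E}\big[(y - \hat f(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Increasing model complexity usually shrinks the first term and grows the second, while the third term cannot be reduced by any choice of model.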

The Consequences of Imbalance

Finding the right balance between bias and variance is crucial for building machine learning models that generalize well to new data. High bias models are too simplistic and may fail to capture the underlying patterns in the data, leading to underfitting. On the other hand, high variance models are too complex and capture both the underlying patterns and the random noise in the data, leading to overfitting.

Mitigating the Bias-Variance Tradeoff

Because bias and variance usually move in opposite directions, the goal is the level of model complexity that minimizes their combined contribution to the error, not each one separately. Techniques such as cross-validation, regularization, and ensemble methods can help manage the bias-variance tradeoff and improve the predictive performance of machine learning models.

Techniques for Preventing Overfitting in Machine Learning Models

There are several techniques that can be used to prevent overfitting in machine learning models. One common approach is to use regularization methods, such as L1 and L2 regularization, which add a penalty term to the model’s cost function to discourage overly complex models. Regularization helps prevent overfitting by constraining the model’s weights and reducing its sensitivity to small fluctuations in the training data.
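The penalty terms can be sketched as follows, assuming $L(w)$ is the unregularized cost, $w_j$ are the model’s weights, and $\lambda \ge 0$ is a strength parameter chosen by the practitioner (all three symbols are introduced here for illustration):

```latex
J_{\mathrm{L1}}(w) = L(w) + \lambda \sum_{j} |w_j|
\qquad
J_{\mathrm{L2}}(w) = L(w) + \lambda \sum_{j} w_j^2
```

With $\lambda = 0$ both reduce to the original cost. Larger values of $\lambda$ push the weights toward zero, trading a slightly worse fit on the training data for lower variance.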

Another technique for preventing overfitting is early stopping, where the training of the model is stopped once the validation error starts to increase, indicating that the model is starting to overfit the training data. Feature selection and dimensionality reduction are also effective techniques for preventing overfitting in machine learning models. By selecting only the most relevant features or reducing the dimensionality of the input data, we can build simpler models that are less prone to overfitting.
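The early-stopping rule described above can be sketched in a few lines. This is a minimal illustration, not a specific library’s API: the function name, the `patience` and `min_delta` parameters, and the loss values are all hypothetical. A patience window is a common refinement that avoids stopping on a single noisy uptick in validation error.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops at the first epoch where the validation loss has failed
    to improve by more than `min_delta` for `patience` consecutive epochs.
    If that never happens, stop_epoch is the last epoch in the sequence.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best validation loss: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation-loss curve: improves, then drifts upward.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
```

In a real training loop, the model weights saved at `best_epoch` would typically be restored once training stops.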

Techniques such as principal component analysis (PCA) and feature importance scores can help identify the most important features in the data and reduce its dimensionality. Additionally, ensemble methods such as bagging and boosting can help prevent overfitting by combining multiple models to make more robust predictions.

Cross-Validation and Regularization Methods in AI

| Method | Purpose | Advantages | Disadvantages |
|---|---|---|---|
| Cross-Validation | Evaluating model performance | More reliable estimate of generalization error, utilizes data efficiently | Computationally expensive for large datasets |
| L1 Regularization (Lasso) | Feature selection, sparse models | Automatic feature selection, simpler and more interpretable models | Tends to keep one of a group of correlated features somewhat arbitrarily, can discard weakly useful features |
| L2 Regularization (Ridge) | Reduces model complexity, prevents overfitting | Stable with correlated features, reduces variance | Does not perform feature selection, so every feature stays in the model |

Cross-validation is a powerful technique for detecting overfitting and for choosing models that generalize. In k-fold cross-validation, the training data is split into k subsets (folds). The model is trained on k-1 of the folds and evaluated on the remaining held-out fold, and the process is repeated so that each fold serves as the validation set exactly once. Averaging the model’s performance across the k validation folds gives a more reliable estimate of its predictive performance than a single train/validation split.
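The splitting and averaging can be sketched in plain Python. The helper names (`k_fold_indices`, `cross_val_mse`) and the toy mean-predictor are hypothetical, chosen only to keep the example self-contained. The sketch also assumes the data is already in random order; in practice the indices are usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs so each sample is validated once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_val_mse(xs, ys, fit, predict, k=5):
    """Average validation mean squared error over k folds."""
    fold_errors = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in val_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / len(fold_errors)


# Toy model for illustration: always predict the mean of the training targets.
def fit_mean(train_x, train_y):
    return sum(train_y) / len(train_y)


def predict_mean(model, x):
    return model


xs = list(range(10))
ys = [2.0] * 10
score = cross_val_mse(xs, ys, fit_mean, predict_mean, k=5)
```

Any pair of `fit` and `predict` functions with the same shape can be dropped in, which makes it easy to compare candidate models on identical folds.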

Cross-validation helps ensure that the model generalizes well to new data and is not overly sensitive to fluctuations in the training data. Regularization methods such as L1 and L2 regularization are also effective for preventing overfitting in machine learning models. These methods add a penalty term to the model’s cost function, which discourages overly complex models by constraining their weights.

L1 regularization (Lasso) encourages sparsity in the model’s weights, leading to feature selection and reducing its complexity. On the other hand, L2 regularization (Ridge) penalizes large weights and reduces their impact on the model’s predictions. By using regularization methods, we can build more robust machine learning models that generalize well to new data.
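The difference between the two penalties is easiest to see in a simplified setting. The sketch below assumes orthonormal features, where the penalized solutions can be written directly in terms of the unpenalized least-squares weights: for the objective ½‖y − Xw‖² + λ‖w‖₁ each weight is soft-thresholded, and for ½‖y − Xw‖² + (λ/2)‖w‖² each weight is scaled down. The function names and the example weights are hypothetical.

```python
def soft_threshold(w, lam):
    """L1 (Lasso) solution for one weight under orthonormal features."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0  # small weights are set exactly to zero


def ridge_shrink(w, lam):
    """L2 (Ridge) solution for one weight under orthonormal features."""
    return w / (1.0 + lam)  # every weight shrinks, none becomes exactly zero


# Hypothetical unpenalized least-squares weights.
ols_weights = [3.0, 0.4, -0.2, -1.5]
lam = 0.5

lasso_weights = [soft_threshold(w, lam) for w in ols_weights]
ridge_weights = [ridge_shrink(w, lam) for w in ols_weights]
n_selected = sum(1 for w in lasso_weights if w != 0.0)
```

Here the L1 penalty removes the two small weights entirely, which is the feature-selection effect, while the L2 penalty keeps all four but makes each one smaller.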

Feature Selection and Dimensionality Reduction in Machine Learning

Feature selection and dimensionality reduction are essential techniques for preventing overfitting in machine learning models. Feature selection involves selecting only the most relevant features from the input data, while dimensionality reduction involves reducing its complexity by transforming it into a lower-dimensional space. By using these techniques, we can build simpler models that are less prone to overfitting and generalize well to new data.

Principal component analysis (PCA) is a popular technique for dimensionality reduction in machine learning. It involves transforming the input data into a new set of orthogonal features called principal components, ordered by how much of the data’s variance each one captures. By retaining only the first few principal components, we can reduce the dimensionality of the input data while preserving most of its information.
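As a rough illustration of the idea, the first principal component can be found with power iteration on the covariance matrix. This is a standard-library sketch under stated assumptions, not how production libraries implement PCA: it assumes the data has nonzero variance and that the starting vector is not orthogonal to the leading component. The function name and the sample data are hypothetical.

```python
import math


def first_principal_component(rows, n_iter=200):
    """Return (direction, explained_variance_ratio) for the first component."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    # Power iteration converges to the eigenvector with the largest eigenvalue.
    v = [1.0] * d
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient gives the variance captured along direction v.
    eigenvalue = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d))
                     for a in range(d))
    total_variance = sum(cov[a][a] for a in range(d))
    return v, eigenvalue / total_variance


# Hypothetical 2-D data lying close to the line y = 2x.
rows = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
direction, ratio = first_principal_component(rows)

# Project each centered point onto the component: 2 features become 1.
means = [sum(r[j] for r in rows) / len(rows) for j in range(2)]
projected = [sum((r[j] - means[j]) * direction[j] for j in range(2))
             for r in rows]
```

Because the two features are almost perfectly correlated, a single component captures nearly all of the variance, so the second dimension can be dropped with little loss.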

Feature importance scores are also effective for feature selection, as they help identify the most relevant features in the data and discard irrelevant ones.

Ensemble Methods and Model Averaging in AI

Types of Ensemble Methods

Bagging (bootstrap aggregating) is a popular ensemble method that involves training multiple models on different bootstrap samples of the training data, each drawn at random with replacement, and averaging their predictions. This helps reduce the variance in the model’s predictions and improve its predictive performance. Boosting is another effective ensemble method that involves training multiple models sequentially, where each model focuses on correcting the errors of its predecessor.
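Bagging can be sketched with a deliberately simple base model. The example below assumes a one-feature least-squares line as the base learner and uses hypothetical helper names and synthetic data; bagging is more commonly applied to high-variance learners such as decision trees, which would not fit in a short standard-library example.

```python
import random


def fit_line(xs, ys):
    """Least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        # Degenerate resample (all x identical): fall back to the mean.
        return mean_y, 0.0
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def bagged_fit(xs, ys, n_models=25, seed=0):
    """Fit one model per bootstrap sample of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagged_predict(models, x):
    """Average the predictions of all fitted models."""
    return sum(a + b * x for a, b in models) / len(models)


# Synthetic data: y = 1 + 2x with a small alternating perturbation.
xs = [float(i) for i in range(20)]
ys = [1.0 + 2.0 * x + (0.5 if i % 2 == 0 else -0.5)
      for i, x in enumerate(xs)]

models = bagged_fit(xs, ys)
prediction = bagged_predict(models, 10.0)
```

Each individual line is pulled around by whichever points its bootstrap sample happened to include; averaging the 25 fits smooths out those differences.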

Benefits of Ensemble Methods

By combining these models, we can build more robust machine learning models that generalize well to new data. This approach can lead to improved predictive performance and reduced overfitting.

Improved Model Accuracy

By leveraging the strengths of multiple models, ensemble methods can provide a more accurate and reliable estimate of the target variable. This can be particularly useful in applications where high-stakes decisions are made based on model predictions.

Conclusion and Best Practices for Preventing Overfitting in Machine Learning Models

In conclusion, overfitting is a common problem in machine learning models that can lead to poor predictive performance and unreliable results. It occurs when a model learns the training data too well, including its noise and random fluctuations, leading to high variance and poor generalization to new data. To prevent overfitting, it is important to understand the bias-variance tradeoff and use techniques such as cross-validation, regularization, feature selection, dimensionality reduction, ensemble methods, and model averaging.

Best practices for preventing overfitting in machine learning models include:

- Using cross-validation to obtain a more reliable estimate of predictive performance.

- Using regularization methods such as L1 and L2 regularization to discourage overly complex models.

- Selecting only the most relevant features from the input data.

- Reducing the dimensionality of the input data using techniques such as PCA.

- Using ensemble methods such as bagging and boosting to make more robust predictions.

By following these best practices, we can build more accurate and reliable machine learning models that generalize well to new data and are less prone to overfitting.

FAQs

What is overfitting?

Overfitting is a common problem in machine learning where a model learns the training data too well, to the point that it negatively impacts its ability to generalize to new, unseen data.

How does overfitting occur?

Overfitting occurs when a model is too complex relative to the amount of training data available. This can lead the model to learn the noise and specific patterns in the training data, rather than the underlying relationships that would allow it to make accurate predictions on new data.

What are the consequences of overfitting?

The consequences of overfitting include poor performance on new, unseen data, as well as a lack of generalization and robustness in the model’s predictions. This can lead to inaccurate and unreliable results.

How can overfitting be prevented?

Overfitting can be prevented by using techniques such as cross-validation, regularization, and feature selection. These methods help to ensure that the model learns the underlying patterns in the data, rather than the noise and specific details of the training set.

What are some common indicators of overfitting?

Common indicators of overfitting include a large difference in performance between the training and test data, as well as high variance in the model’s predictions. Additionally, if the model performs well on the training data but poorly on new data, it may be overfit.
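The gap between training and test performance can be checked directly. The sketch below uses a 1-nearest-neighbor regressor as a stand-in for an overfit model, since it memorizes every training point including its noise. The data is synthetic and the helper names are hypothetical: the true relationship is assumed to be y = x, and the training targets carry an alternating perturbation of ±1.

```python
def mse(predict, xs, ys):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def make_one_nn(train_x, train_y):
    """1-nearest-neighbor regressor: returns the target of the closest point."""
    def predict(x):
        nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
        return train_y[nearest]
    return predict


# Training set: true relationship y = x, plus alternating noise of +1 / -1.
train_x = [float(i) for i in range(10)]
train_y = [x + (1.0 if i % 2 == 0 else -1.0) for i, x in enumerate(train_x)]

# Test set: new inputs between the training points, with noise-free targets.
test_x = [x + 0.25 for x in train_x]
test_y = list(test_x)

model = make_one_nn(train_x, train_y)
train_mse = mse(model, train_x, train_y)
test_mse = mse(model, test_x, test_y)
gap = test_mse - train_mse
```

The model reproduces its training targets exactly, so its training error is zero, yet it carries the memorized noise over to the new inputs. A large positive `gap` of this kind is the signature of overfitting described above.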
