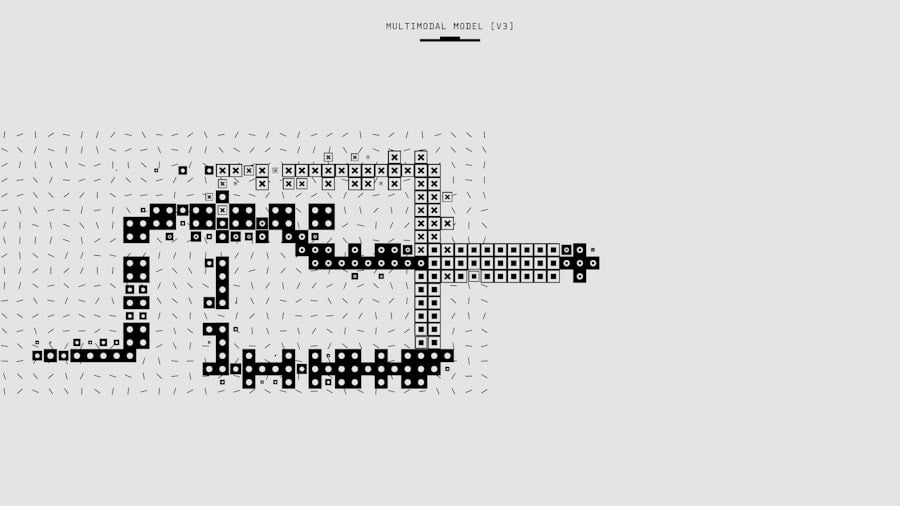

Convolutional Neural Networks (CNNs) are a specialized type of deep learning algorithm primarily used for image recognition and classification tasks. Inspired by the human visual system, CNNs are designed to automatically learn and extract hierarchical features from input images. The architecture of a CNN typically consists of three main components: convolutional layers, pooling layers, and fully connected layers.

Convolutional layers form the core of a CNN. These layers apply a set of learnable filters (small weight matrices, also called kernels) to the input image or, in deeper layers, to the feature maps produced by the previous layer. The resulting feature maps highlight visual elements such as edges, textures, and patterns. Each filter is slid (convolved) across the entire input. Because the same filter weights are reused at every position, the network can detect a given feature regardless of where it appears in the image.
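The sketch below makes the sliding-filter idea concrete. It is a single-channel 2D convolution in plain Python with stride 1 and no padding. Like most deep learning libraries, it actually computes cross-correlation, meaning the kernel is not flipped. The function name `conv2d` and the example edge-detection kernel are illustrative choices, not any particular framework's API.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix, bias: float = 0.0) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    As in most deep learning libraries, this is cross-correlation: the kernel
    is applied as-is rather than flipped.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The same kernel weights are reused at every (i, j) position.
            total = bias
            for u in range(kh):
                for v in range(kw):
                    total += image[i + u][j + v] * kernel[u][v]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A vertical-edge detector applied to a 4x4 image whose right half is bright.
image = [[0, 0, 1, 1] for _ in range(4)]
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # → [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The filter responds only in the middle column, where dark pixels meet bright ones. Because the weights are shared, the response is the same in every row.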

Pooling layers follow convolutional layers and serve to reduce the spatial dimensions of the feature maps. This downsampling process helps to decrease the computational complexity of the network and provides a degree of translation invariance. Common pooling operations include max pooling and average pooling.
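Here is a minimal pure-Python sketch of 2 x 2 pooling with stride 2. The same helper performs max pooling or average pooling, depending on the `reduce` function passed in. The names `pool2d` and `mean` are illustrative.

```python
from typing import Callable, List

Matrix = List[List[float]]


def pool2d(fmap: Matrix, size: int = 2, stride: int = 2,
           reduce: Callable[[List[float]], float] = max) -> Matrix:
    """Downsample a feature map by reducing each size x size window to one value."""
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + u][j + v] for u in range(size) for v in range(size)]
            row.append(reduce(window))
        out.append(row)
    return out


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


fmap = [[1, 3, 2, 0],
        [4, 2, 1, 1],
        [0, 1, 5, 6],
        [2, 2, 7, 8]]
print(pool2d(fmap))               # max pooling     → [[4, 2], [2, 8]]
print(pool2d(fmap, reduce=mean))  # average pooling → [[2.5, 1.0], [1.25, 6.5]]
```

A 4 x 4 feature map shrinks to 2 x 2, so the next layer processes a quarter as many values. Small shifts of a feature within a window leave the max-pooled output unchanged.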

Fully connected layers are typically found at the end of the CNN architecture. They connect every neuron in one layer to every neuron in the next. These layers combine the high-level features extracted by the convolutional and pooling layers into the final class scores for the classification task.
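The sketch below shows a fully connected layer followed by a softmax, which turns the layer's raw scores into class probabilities. It is a minimal pure-Python version. The feature vector, weights, and biases are made-up numbers chosen only for illustration.

```python
import math
from typing import List


def dense(x: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Fully connected layer: every output neuron sees every input feature."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def softmax(logits: List[float]) -> List[float]:
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical flattened features from the last pooling layer, mapped to 3 classes.
features = [0.5, -1.0, 2.0, 0.0]
weights = [[0.2, 0.1, 0.4, 0.0],
           [-0.3, 0.5, 0.1, 0.2],
           [0.0, -0.2, -0.1, 0.3]]
biases = [0.0, 0.1, -0.1]
probs = softmax(dense(features, weights, biases))
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(predicted_class)  # → 0
```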

CNNs are trained through supervised learning on large datasets of labeled images. During training, the network adjusts its internal parameters (weights and biases) to minimize a loss function, such as cross-entropy, that measures the difference between its predictions and the true labels of the training images. This process enables the CNN to learn the patterns and features relevant to the specific classification task.
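Cross-entropy is a common choice of loss for image classification. For a single image, it is the negative log of the probability the network assigns to the correct label. This is a minimal sketch. In practice the loss is averaged over a batch of images, and the clamping constant `eps` is an illustrative choice.

```python
import math
from typing import List


def cross_entropy(probs: List[float], true_class: int, eps: float = 1e-12) -> float:
    """Negative log-probability the model assigns to the correct label."""
    return -math.log(max(probs[true_class], eps))  # clamp to avoid log(0)


confident_right = [0.05, 0.90, 0.05]
confident_wrong = [0.90, 0.05, 0.05]
print(round(cross_entropy(confident_right, 1), 3))  # → 0.105
print(round(cross_entropy(confident_wrong, 1), 3))  # → 2.996
```

Confident wrong predictions are penalized much more heavily than confident correct ones. This gives training a strong signal to fix them.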

The success of CNNs in computer vision tasks has led to their widespread adoption in various applications. These include facial recognition systems, object detection in images and videos, medical image analysis for disease diagnosis, and perception systems for autonomous vehicles. The ability of CNNs to automatically learn and extract meaningful features from raw visual data makes them a powerful and versatile tool in the field of artificial intelligence and machine learning.

Key Takeaways

- Convolutional Neural Networks (CNNs) are a type of deep learning algorithm designed for processing and analyzing visual data, such as images and videos.

- Training CNNs for image recognition involves feeding labeled images into the network, adjusting the network’s parameters through backpropagation, and evaluating its performance using metrics like accuracy and loss.

- Optimizing CNNs for AI applications involves techniques such as regularization, data augmentation, and hyperparameter tuning to improve the network’s generalization and performance.

- Leveraging CNNs for object detection involves using techniques like region-based CNNs (R-CNNs) and You Only Look Once (YOLO) to identify and locate objects within images or videos.

- Integrating CNNs with AI for autonomous systems involves using CNNs for tasks like object detection, path planning, and decision-making in self-driving cars, drones, and other autonomous vehicles.

Training Convolutional Neural Networks for Image Recognition

Training a CNN for image recognition involves several key steps, starting with data collection and preprocessing. Large datasets of labeled images are essential for training a CNN effectively. Once the dataset is prepared, the next step is to design the architecture of the CNN, including the number of convolutional layers, pooling layers, and fully connected layers.
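A common preprocessing routine has two parts: hold out a test split, then scale pixel values and standardize them. The standardization statistics are computed on the training images only, so that no information leaks from the test set. The sketch below is a minimal version assuming flattened grayscale images with 0-255 pixel values. The helper names and the 20% test fraction are illustrative assumptions.

```python
import random
import statistics
from typing import List, Tuple

Image = List[float]  # a flattened grayscale image with pixel values in 0-255


def train_test_split(images: List[Image], test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[Image], List[Image]]:
    """Shuffle once with a fixed seed, then hold out a fraction for testing."""
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_normalizer(train_images: List[Image]) -> Tuple[float, float]:
    """Mean and standard deviation of [0, 1]-scaled pixels, from the training set only."""
    pixels = [p / 255.0 for img in train_images for p in img]
    std = statistics.pstdev(pixels)
    if std == 0:
        std = 1.0  # avoid dividing by zero on constant images
    return statistics.mean(pixels), std


def normalize(image: Image, mean: float, std: float) -> Image:
    """Scale pixels to [0, 1], then standardize with the training-set statistics."""
    return [(p / 255.0 - mean) / std for p in image]


images = [[0, 255], [255, 0], [128, 128], [64, 192], [10, 20]]
train, test = train_test_split(images)
mean, std = fit_normalizer(train)
train_ready = [normalize(img, mean, std) for img in train]
test_ready = [normalize(img, mean, std) for img in test]  # reuse the training statistics
```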

During training, the CNN learns to optimize its parameters by minimizing a loss function that measures the difference between the predicted output and the ground truth labels. This is typically done using an optimization algorithm such as stochastic gradient descent (SGD) or its variants. The training process involves feeding the input images through the network, computing the loss, and updating the network’s parameters to improve its performance.
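A real CNN has far too many parameters to show here. The sketch below therefore runs the same training loop on a single logistic-regression neuron with a toy dataset: forward pass, loss gradient, then a stochastic gradient descent update. In a CNN, backpropagation computes the gradients for every layer, but the update step has the same form. The dataset, learning rate, and epoch count are illustrative assumptions.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (features, label in {0, 1})


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: List[float], b: float, x: List[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_sgd(data: List[Sample], lr: float = 0.5, epochs: int = 200,
              seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    data = list(data)  # don't reorder the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # new random sample order each epoch
        for x, y in data:
            p = predict(w, b, x)  # forward pass
            err = p - y  # gradient of binary cross-entropy w.r.t. the pre-activation
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]  # step against the gradient
            b -= lr * err
    return w, b


# Toy, linearly separable data: logical OR.
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
w, b = train_sgd(data)
print([round(predict(w, b, x)) for x, _ in data])  # → [0, 1, 1, 1]
```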

To prevent overfitting, techniques such as dropout and data augmentation can be used during training. Dropout randomly deactivates some neurons during training to prevent the network from relying too heavily on specific features, while data augmentation artificially increases the size of the training dataset by applying random transformations to the input images. Once the CNN has been trained, it can be evaluated on a separate test dataset to assess its performance and generalization ability.
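Both regularization techniques are short to sketch in plain Python. The dropout below is the "inverted" variant used by most frameworks. It zeros each activation with probability `p` during training and scales the survivors by 1/(1 - p), so nothing needs to change at inference time. The augmentation shown is a random horizontal flip, one example of a random transformation. Other augmentations, such as crops or rotations, follow the same pattern. The function names are illustrative.

```python
import random
from typing import List

Matrix = List[List[float]]


def dropout(activations: List[float], p: float, training: bool,
            rng: random.Random) -> List[float]:
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so the expected activation is unchanged."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def random_horizontal_flip(image: Matrix, rng: random.Random,
                           prob: float = 0.5) -> Matrix:
    """Data augmentation: mirror the image left-to-right with probability `prob`."""
    if rng.random() < prob:
        return [row[::-1] for row in image]
    return [row[:] for row in image]


rng = random.Random(42)
hidden = [1.0] * 8
print(dropout(hidden, p=0.5, training=True, rng=rng))   # roughly half zeros, rest 2.0
print(dropout(hidden, p=0.5, training=False, rng=rng))  # unchanged
print(random_horizontal_flip([[1, 2], [3, 4]], rng, prob=1.0))  # → [[2, 1], [4, 3]]
```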

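Fine-tuning and hyperparameter tuning (for example, adjusting the learning rate, batch size, or number of layers) can further improve the CNN’s performance on specific tasks. Hyperparameters should be chosen using a separate validation set, so that the test set still gives an unbiased estimate of performance.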

Optimizing Convolutional Neural Networks for AI Applications

Optimizing CNNs for AI applications involves improving their efficiency, accuracy, and speed. One common approach to optimization is model compression, which aims to reduce the size of the CNN without significantly sacrificing its performance. Techniques such as pruning, quantization, and knowledge distillation can be used to achieve model compression.

Pruning removes redundant or less important connections or neurons from the network to reduce its size and computational complexity. Quantization reduces the precision of the network’s parameters, for example from 32-bit floating-point numbers to 8-bit integers, which can significantly reduce memory and computational requirements. Knowledge distillation trains a smaller, more lightweight model (the student) to mimic the outputs of a larger, more complex model (the teacher). The student can then be deployed in place of the teacher at a fraction of the size and computational cost.
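The sketch below shows one simple instance of each compression technique in plain Python:

- magnitude pruning, which zeroes the smallest weights;
- symmetric 8-bit linear quantization;
- a simplified knowledge-distillation loss, which compares temperature-softened teacher and student outputs.

In practice, the distillation term is usually combined with the ordinary cross-entropy on the true labels. The temperature of 2.0 and all function names are illustrative assumptions.

```python
import math
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Higher temperatures give softer (more spread-out) distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """Cross-entropy between the teacher's and the student's softened outputs."""
    t = softmax_with_temperature(teacher_logits, temperature)
    s = softmax_with_temperature(student_logits, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
print(magnitude_prune(weights, sparsity=0.5))  # → [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)  # close to the originals, within scale / 2
print(distillation_loss([2.5, 1.2, 0.1], [3.0, 1.0, 0.2]))
```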

Another important aspect of optimization is hardware acceleration: using specialized hardware such as GPUs, TPUs, or FPGAs to speed up CNN training and inference. These accelerators are designed to perform matrix operations and convolutions efficiently, which makes them well suited for deep learning workloads. Optimizing the software implementation of CNNs can also lead to significant performance improvements.

Techniques such as data parallelism (splitting each batch across devices), model parallelism (splitting the network itself across devices), and distributed training more generally can be used to scale CNNs across multiple devices or machines, enabling faster training and inference.
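The core idea of data parallelism can be simulated on one machine. Each "device" computes the gradient on its own shard of the batch. The local gradients are then averaged, which is the role an all-reduce plays in real distributed training. With equal-sized shards, the average equals the full-batch gradient. The sketch below is a minimal version assuming a one-parameter linear model with mean-squared-error loss, chosen only to keep the arithmetic visible.

```python
from typing import List, Tuple

Batch = List[Tuple[float, float]]  # (x, y) pairs for a 1-D linear model y ~ w * x


def mse_gradient(w: float, batch: Batch) -> float:
    """d/dw of the mean squared error (w*x - y)^2 over the batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w: float, batch: Batch, n_devices: int) -> float:
    """Split the batch across devices, compute local gradients, then average them."""
    shard_size = len(batch) // n_devices
    if shard_size * n_devices != len(batch):
        raise ValueError("this sketch assumes equal-sized shards")
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(n_devices)]
    local_grads = [mse_gradient(w, shard) for shard in shards]  # parallel in practice
    return sum(local_grads) / n_devices  # the "all-reduce" step


batch = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
print(mse_gradient(0.5, batch), data_parallel_gradient(0.5, batch, n_devices=2))
```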

Leveraging Convolutional Neural Networks for Object Detection

| Method | Accuracy | Precision | Recall |
|---|---|---|---|
| YOLO (You Only Look Once) | 0.91 | 0.89 | 0.92 |
| SSD (Single Shot MultiBox Detector) | 0.88 | 0.87 | 0.89 |
| Faster R-CNN (Region-based Convolutional Neural Network) | 0.92 | 0.91 | 0.93 |

CNNs have been widely used for object detection, which involves both localizing and classifying objects within an image. One popular family of detectors is the region-based CNN (R-CNN) and its successors: Fast R-CNN, Faster R-CNN, and Mask R-CNN. These models use a two-stage approach. First, candidate regions of interest are proposed, by selective search in R-CNN and Fast R-CNN or by a learned region proposal network in Faster R-CNN and Mask R-CNN. Each region is then classified, and its bounding box refined, by a CNN.

Another approach is single-stage detection. The Single Shot MultiBox Detector (SSD) directly predicts bounding boxes and class probabilities for multiple objects in a single pass through the network. It is known for its speed and efficiency and has been widely used in real-time object detection applications. You Only Look Once (YOLO) is another popular single-stage detector: it divides the input image into a grid and predicts bounding boxes and class probabilities for each grid cell.
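In the original YOLO formulation, the grid cell that contains an object's center is responsible for predicting that object. The sketch below finds that cell for a ground-truth box and returns the center's offset within the cell. It assumes boxes given as `(x_min, y_min, x_max, y_max)` in pixels. The default grid size of 7 matches the first YOLO paper, and the function name is illustrative.

```python
from typing import Tuple


def responsible_cell(box: Tuple[float, float, float, float], img_w: float,
                     img_h: float, grid_size: int = 7) -> Tuple[int, int, float, float]:
    """Return (row, col, x_offset, y_offset) of the grid cell containing the box centre.

    Offsets are the centre's position inside that cell, each in [0, 1).
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / img_w * grid_size  # centre in grid units
    cy = (y_min + y_max) / 2 / img_h * grid_size
    col = min(int(cx), grid_size - 1)  # clamp centres lying exactly on the far edge
    row = min(int(cy), grid_size - 1)
    return row, col, cx - col, cy - row


# A hypothetical 80 x 100 pixel object in a 448 x 448 image.
print(responsible_cell((100, 200, 180, 300), img_w=448, img_h=448))
```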

YOLO is known for its simplicity and speed and has been widely used in applications such as autonomous driving and surveillance systems. Object detection with CNNs has numerous applications in fields such as autonomous vehicles, surveillance, robotics, and augmented reality. The ability to accurately detect and localize objects within an image is crucial for enabling intelligent systems to understand and interact with their environment.
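Detectors such as Faster R-CNN, SSD, and YOLO typically produce many overlapping candidate boxes for the same object. They use non-maximum suppression (NMS) to keep only the best one. NMS relies on intersection over union (IoU) to measure overlap. The sketch below is a minimal greedy version. The 0.5 threshold is a common but arbitrary default here, not a value prescribed by any of these detectors.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # → [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is suppressed as a duplicate. The third box does not overlap either of them and is kept.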

Integrating Convolutional Neural Networks with AI for Autonomous Systems

The integration of CNNs with AI has enabled significant advancements in autonomous systems such as self-driving cars, drones, and robots. CNNs play a crucial role in perception tasks such as object detection, lane detection, traffic sign recognition, pedestrian detection, and obstacle avoidance. For example, in self-driving cars, CNNs are used to process input from cameras, lidar sensors, and radar sensors to detect and classify objects in the vehicle’s surroundings. AI algorithms then use this information to make real-time decisions about steering, braking, and acceleration.

In addition to perception tasks, CNNs are also used for mapping and localization in autonomous systems. Some simultaneous localization and mapping (SLAM) systems use CNNs for visual odometry and feature extraction to build maps of the environment and localize the vehicle within it.

Furthermore, CNNs are used for behavior prediction in autonomous systems to anticipate the movements of other vehicles, pedestrians, and objects in the environment. This enables autonomous systems to plan their trajectories and make safe and efficient decisions. The integration of CNNs with AI has significantly improved the capabilities of autonomous systems, making them more reliable, efficient, and safe for real-world deployment.

Enhancing Convolutional Neural Networks with Transfer Learning

Advantages of Transfer Learning

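Transfer learning reuses a CNN that has already been trained on one task as the starting point for a new, related task. By leveraging knowledge learned from large-scale datasets such as ImageNet, transfer learning often enables CNNs to generalize better on new tasks and to achieve higher performance with less training data.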

Approaches to Transfer Learning

There are several approaches to transfer learning, including feature extraction and fine-tuning. In feature extraction, the pre-trained CNN is used as a fixed feature extractor: its final fully connected layers are replaced with new ones for the task at hand, and only these new layers are trained. In fine-tuning, some or all of the pre-trained layers are also updated by continuing training on the new dataset with a small learning rate.
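The difference between the two approaches comes down to which parameters get updated, and how fast. The sketch below is a minimal plain-SGD version. It splits the parameters into two hypothetical groups, `backbone` (the pre-trained layers) and `head` (the new classifier). In this sketch, a learning rate of 0 has the same effect as freezing a group. Real frameworks usually skip computing those gradients instead; in PyTorch, for example, this is done by setting `requires_grad = False`. The 1/10 learning-rate ratio for fine-tuning is an illustrative assumption.

```python
from typing import Dict, List

Params = Dict[str, List[float]]  # parameter group name -> flattened weights


def learning_rates(strategy: str, head_lr: float = 1e-2) -> Dict[str, float]:
    """Per-group learning rates for the two transfer-learning strategies."""
    if strategy == "feature_extraction":
        # Pre-trained backbone frozen; only the newly added head is trained.
        return {"backbone": 0.0, "head": head_lr}
    if strategy == "fine_tuning":
        # Backbone keeps learning too, but more slowly than the new head.
        return {"backbone": head_lr / 10, "head": head_lr}
    raise ValueError(f"unknown strategy: {strategy}")


def sgd_step(params: Params, grads: Params, lrs: Dict[str, float]) -> Params:
    """One plain SGD update; a learning rate of 0 leaves a group unchanged."""
    return {name: [p - lrs[name] * g for p, g in zip(values, grads[name])]
            for name, values in params.items()}


params = {"backbone": [1.0, 2.0], "head": [0.5]}
grads = {"backbone": [1.0, 1.0], "head": [1.0]}
print(sgd_step(params, grads, learning_rates("feature_extraction")))
print(sgd_step(params, grads, learning_rates("fine_tuning")))
```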

Applications of Transfer Learning

Transfer learning has been widely used in various applications such as medical image analysis, satellite image classification, natural language processing, and more. It has significantly reduced the time and resources required to train high-performing CNN models for new tasks.

Exploring the Future of Convolutional Neural Networks in AI Technology

The future of CNNs in AI technology holds great promise with ongoing advancements in areas such as interpretability, robustness, efficiency, and scalability. Interpretability is an important area of research that aims to make CNNs more transparent and understandable by humans. Techniques such as attention mechanisms, saliency maps, and visualization tools are being developed to provide insights into how CNNs make decisions and which parts of an input image are most influential in their predictions.
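The simplest gradient-based saliency map scores each input pixel by the absolute gradient of the class score with respect to that pixel. Large values mark the pixels the prediction is most sensitive to. Real implementations get this gradient from backpropagation. The sketch below approximates it with central finite differences only to stay dependency-free. The toy score function is a hypothetical stand-in for a CNN's class score.

```python
from typing import Callable, List


def saliency_map(score_fn: Callable[[List[float]], float], x: List[float],
                 h: float = 1e-4) -> List[float]:
    """Approximate |d score / d x_i| for every input pixel with central differences."""
    saliency = []
    for i in range(len(x)):
        plus, minus = list(x), list(x)
        plus[i] += h
        minus[i] -= h
        saliency.append(abs(score_fn(plus) - score_fn(minus)) / (2 * h))
    return saliency


# Hypothetical class score: strongly driven by pixel 0, weakly by pixel 2, not by pixel 1.
def toy_score(pixels: List[float]) -> float:
    return 3.0 * pixels[0] ** 2 + 0.1 * pixels[2]


print(saliency_map(toy_score, [1.0, 5.0, 2.0]))  # approximately [6.0, 0.0, 0.1]
```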

Robustness is another key challenge for CNNs in AI technology. Adversarial attacks have shown that CNNs can be vulnerable to small perturbations in input data that can lead to incorrect predictions. Research in adversarial training, robust optimization, and data augmentation is being conducted to improve the robustness of CNNs against such attacks.
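The fast gradient sign method (FGSM) is a well-known example of such an attack. It nudges every pixel by a small amount `eps`, in whichever direction increases the loss. To keep the gradient easy to write by hand, the sketch below attacks a logistic classifier rather than a CNN. For binary cross-entropy, the input gradient is `(p - y) * w`. The weights, input, and `eps` are made-up values for illustration.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def score(x: List[float], w: List[float], b: float) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fgsm(x: List[float], y: int, w: List[float], b: float, eps: float) -> List[float]:
    """Move each pixel by +/- eps in the direction that increases the loss."""
    p = sigmoid(score(x, w, b))
    grad = [(p - y) * wi for wi in w]  # d(binary cross-entropy) / dx
    return [min(1.0, max(0.0, xi + eps * sign(g)))  # keep pixels in [0, 1]
            for xi, g in zip(x, grad)]


w, b = [2.0, -3.0, 1.0, 0.5], -0.2
x, y = [0.6, 0.2, 0.5, 0.4], 1
x_adv = fgsm(x, y, w, b, eps=0.2)
print(score(x, w, b) > 0, score(x_adv, w, b) > 0)  # → True False
```

No pixel moves by more than 0.2, yet the predicted class flips. Adversarial training adds perturbed examples like `x_adv`, with their correct labels, to the training data.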

Efficiency is also a critical area for future developments in CNNs. As AI applications continue to grow in scale and complexity, there is a need for more efficient CNN architectures that can perform well on resource-constrained devices such as mobile phones, IoT devices, and edge computing platforms.

Scalability is another important consideration for future CNNs in AI technology. As datasets continue to grow in size and complexity, there is a need for scalable training algorithms that can efficiently handle large-scale distributed training across multiple devices or machines.

In conclusion, convolutional neural networks have revolutionized the field of computer vision and have enabled significant advancements in AI technology. From image recognition to object detection, autonomous systems, transfer learning, and beyond, CNNs have become an indispensable tool for analyzing visual data and solving complex real-world problems.

With ongoing research and development efforts focused on interpretability, robustness, efficiency, and scalability, the future of CNNs in AI technology holds great promise for enabling even more powerful and intelligent systems in the years to come.


FAQs

What is a convolutional neural network (CNN)?

A convolutional neural network (CNN) is a type of deep learning algorithm that is commonly used for image recognition and classification tasks. It is designed to automatically and adaptively learn spatial hierarchies of features from input data.

How does a convolutional neural network work?

A CNN works by using a series of convolutional layers to extract features from input images. These features are then passed through additional layers such as pooling and fully connected layers to make predictions about the input data.

What are the advantages of using convolutional neural networks?

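CNNs are well suited for tasks such as image recognition and classification because they learn and extract features from input data automatically, rather than relying on hand-engineered features. Each filter’s weights are shared across every position in the image, so CNNs need far fewer parameters than fully connected networks with the same input size. This helps them scale to large datasets and learn to recognize complex patterns.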

What are some common applications of convolutional neural networks?

CNNs are commonly used in applications such as image recognition, object detection, facial recognition, medical image analysis, and autonomous vehicles. They are also used in various fields such as healthcare, automotive, and security.

What are some popular CNN architectures?

Some popular CNN architectures include LeNet, AlexNet, VGG, GoogLeNet, and ResNet. These architectures have been widely used, and each achieved state-of-the-art results on image recognition and classification benchmarks when it was introduced.
