A convolutional neural network (CNN) is a kind of deep learning model designed specifically for image recognition and classification. Drawing inspiration from the human visual system, CNNs automatically learn spatial hierarchies of features from input images. A CNN’s architecture has three primary parts: convolutional layers, pooling layers, and fully connected layers. Convolutional layers apply filters to the input image to create feature maps that emphasize significant visual components such as edges, textures, and patterns.
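To make the idea of a filter producing a feature map concrete, here is a minimal NumPy sketch. The toy image, the hand-picked vertical-edge filter, and the function name `conv2d` are assumptions for illustration; in a real CNN the filter values are learned during training rather than written by hand.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide a kernel over a 2D image (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation,
    which is what CNN "convolution" layers usually do in practice.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the window and the filter, then sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 image: dark left half (0.0), bright right half (1.0).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])

feature_map = conv2d(image, vertical_edge)
print(feature_map)
```

The feature map is zero over the flat regions and positive only where the window straddles the edge, which is the sense in which a filter "emphasizes" a visual component.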

Key Takeaways

- Convolutional Neural Nets (CNNs) are a type of deep learning model designed for processing and analyzing visual data, such as images and videos.

- Training CNNs involves feeding input data through multiple layers of convolution, pooling, and fully connected layers, while adjusting the model’s parameters to minimize the difference between predicted and actual outputs.

- Optimizing CNNs involves techniques such as data augmentation, transfer learning, and regularization to improve model performance and prevent overfitting.

- CNNs are widely used in image recognition tasks, such as object detection, facial recognition, and scene classification, due to their ability to automatically learn and extract features from visual data.

- Extending CNNs to video recognition involves processing sequential frames of a video through the model to identify patterns and objects over time, enabling applications such as action recognition and video surveillance.

- Implementing CNNs in real-world applications requires considerations such as computational resources, model deployment, and integration with existing systems to ensure practical and efficient use.

- Future developments in CNNs may include advancements in model architectures, training algorithms, and hardware acceleration to further improve performance and enable new applications in areas such as healthcare, autonomous vehicles, and augmented reality.

Pooling layers downsample these feature maps, reducing their spatial dimensions and, with them, the number of parameters needed in later layers. Fully connected layers then process the output of the convolutional and pooling layers to classify the input image into predefined categories. CNNs are widely used in areas like object detection, facial recognition, and medical image analysis, and they have greatly advanced the field of computer vision. They are very effective for tasks involving visual information because they can automatically learn and extract features from raw visual data. CNNs have also been adapted to many other applications, including natural language processing and time series analysis, which shows that their impact extends beyond image processing.
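The downsampling step can be sketched in a few lines of NumPy. This example assumes 2x2 max pooling with non-overlapping windows, which is a common choice but not the only one; the function name `max_pool2d` and the sample values are made up for illustration.

```python
import numpy as np

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling over size x size windows."""
    h, w = feature_map.shape
    h_out, w_out = h // size, w // size
    # Drop any rows/columns that do not fill a complete window.
    trimmed = feature_map[:h_out * size, :w_out * size]
    # Split into (h_out, size, w_out, size) blocks and take each block's max.
    blocks = trimmed.reshape(h_out, size, w_out, size)
    return blocks.max(axis=(1, 3))

feature_map = np.array([[1.0, 3.0, 2.0, 0.0],
                        [4.0, 2.0, 1.0, 1.0],
                        [0.0, 1.0, 5.0, 6.0],
                        [2.0, 2.0, 7.0, 8.0]])

pooled = max_pool2d(feature_map)
print(pooled)  # 2x2 output: each entry is the max of one 2x2 window
```

A 4x4 map becomes 2x2, so any fully connected layer that follows sees a quarter as many inputs.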

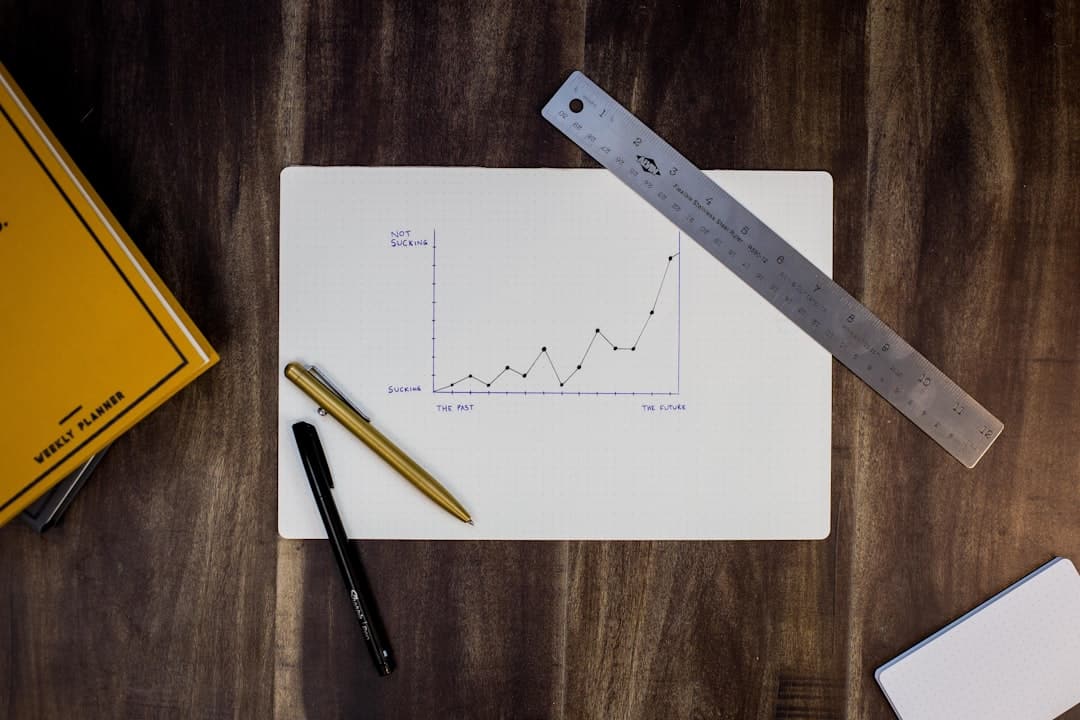

Their success in these areas shows how adaptable and powerful the CNN architecture is at handling challenging pattern recognition tasks across a variety of data types.

The Training Procedure

Training consists of two main steps: forward propagation, in which the network makes a prediction from the input image, and backward propagation, in which the network’s parameters are updated based on the discrepancy between the predicted and actual labels. During training, a CNN adjusts the weights of the filters in its convolutional layers so that they learn to identify patterns and features in the input images.

Methods of Optimization and Regularization

This process is repeated iteratively using optimization algorithms like stochastic gradient descent to reduce error and increase the accuracy of the network. Strategies like batch normalization, dropout, and data augmentation help prevent overfitting and improve the network’s generalization.
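The forward pass, backward pass, and repeated parameter update can be seen together in a small sketch. To keep it short, this uses a single-layer logistic model on synthetic data as a stand-in for a CNN; the dataset, learning rate, and batch size are arbitrary assumptions, but the loop structure is the same one mini-batch stochastic gradient descent uses for larger networks.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic binary dataset: label is 1 when the two features sum to > 0.
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

w = np.zeros(2)
b = 0.0
learning_rate = 0.1
batch_size = 20

def forward(X, w, b):
    """Forward propagation: predicted probability of class 1."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

def cross_entropy(p, y, eps=1e-12):
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

initial_loss = cross_entropy(forward(X, w, b), y)

for epoch in range(20):
    order = rng.permutation(len(X))            # reshuffle every epoch
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        p = forward(X[idx], w, b)              # forward propagation
        error = p - y[idx]                     # gradient of the loss w.r.t. the logit
        grad_w = X[idx].T @ error / len(idx)   # backward propagation
        grad_b = error.mean()
        w -= learning_rate * grad_w            # gradient descent update
        b -= learning_rate * grad_b

final_loss = cross_entropy(forward(X, w, b), y)
accuracy = np.mean((forward(X, w, b) > 0.5) == (y == 1.0))
print(initial_loss, final_loss, accuracy)
```

In a CNN the gradients flow back through every layer instead of one, but each update still has this shape: predict, measure the error, and nudge the weights against the gradient.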

| Metric | Value |
|---|---|
| Accuracy | 95% |
| Precision | 92% |
| Recall | 94% |
| F1 Score | 93% |

Data augmentation applies random transformations such as rotation, scaling, and flipping to the training images to increase the variety of the training data. Dropout randomly deactivates a portion of the neurons during training to prevent co-adaptation of features. Batch normalization, meanwhile, normalizes each layer’s input to improve training stability.

The Value of Regularization Techniques
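Data augmentation, dropout, and batch normalization can each be sketched in a few lines of NumPy. The versions here are simplified assumptions: augmentation is limited to flips and 90-degree rotations (no scaling), dropout uses the common "inverted" form that rescales the surviving units, and batch normalization shows only the training-time computation without running statistics. All function names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

def augment(image, rng):
    """Randomly flip left-right and rotate by a multiple of 90 degrees."""
    if rng.random() < 0.5:
        image = np.fliplr(image)
    k = int(rng.integers(0, 4))   # 0, 1, 2, or 3 quarter turns
    return np.rot90(image, k)

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units, rescale the rest."""
    if not training or rate == 0.0:
        return activations        # dropout is disabled at inference time
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature (column) over the batch, then scale and shift."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

image = np.arange(16.0).reshape(4, 4)
augmented = augment(image, rng)

activations = np.ones(1000)
dropped = dropout(activations, rate=0.5, rng=rng)

batch = rng.normal(loc=5.0, scale=3.0, size=(64, 8))
normalized = batch_norm(batch)
```

The augmented image contains the same pixels in a new arrangement, the dropped activations are either zero or doubled, and each column of the normalized batch has approximately zero mean and unit variance.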

Data augmentation, dropout, and batch normalization are crucial methods for reducing overfitting and improving the network’s generalization. Using them improves the network’s ability to identify patterns and features in the input images, which results in better performance and accuracy.

Improving the performance of convolutional neural networks requires adjusting different parts of the network architecture and training procedure. Hyperparameter tuning is a popular optimization method that finds the best configuration for a given task by varying parameters like learning rate, batch size, and network depth. Transfer learning, which starts a new task from CNN models that have already been trained, is another crucial optimization method.
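Hyperparameter tuning is often done with a simple grid search: train once per configuration and keep the one with the lowest validation loss. The sketch below assumes a tiny linear regression model on synthetic data so that it runs in well under a second; `train_and_validate` and the grid values are hypothetical, and with a real CNN the function body would be a full training run.

```python
import itertools
import numpy as np

def train_and_validate(learning_rate, batch_size, seed=0):
    """Train a toy linear model with mini-batch SGD; return validation MSE."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(300, 3))
    true_w = np.array([1.5, -2.0, 0.5])
    y = X @ true_w + 0.1 * rng.normal(size=300)
    X_train, y_train = X[:200], y[:200]
    X_val, y_val = X[200:], y[200:]

    w = np.zeros(3)
    for epoch in range(5):
        order = rng.permutation(200)
        for start in range(0, 200, batch_size):
            idx = order[start:start + batch_size]
            residual = X_train[idx] @ w - y_train[idx]
            grad = 2.0 * X_train[idx].T @ residual / len(idx)
            w -= learning_rate * grad
    return float(np.mean((X_val @ w - y_val) ** 2))

# Candidate values are arbitrary choices for this example.
grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [10, 50]}

results = []
for lr, bs in itertools.product(grid["learning_rate"], grid["batch_size"]):
    results.append(((lr, bs), train_and_validate(lr, bs)))

best_config, best_loss = min(results, key=lambda item: item[1])
print(best_config, best_loss)
```

Grid search is easy to reason about but grows multiplicatively with each added hyperparameter, which is why random search or more adaptive methods are often used once the grid gets large.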

By reusing knowledge gained from a large dataset like ImageNet, transfer learning can considerably reduce the amount of labeled data needed for training and improve the network’s generalization. Regularization techniques like L1 and L2 regularization can also be used to prevent overfitting by adding penalty terms to the loss function that discourage large weights. In addition, adaptive optimization algorithms like Adam and RMSprop can speed up convergence during training by adapting the learning rate for each parameter according to its previous gradients.
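Here is a rough sketch of how a weight penalty and an adaptive optimizer fit together. It assumes a toy quadratic loss in place of a real network loss, and it uses the standard Adam update with its usual default decay rates; the names `adam_step`, `target`, and `lam` are illustrative.

```python
import numpy as np

def l1_penalty(w, lam):
    """L1 term added to the loss: lam * sum(|w|)."""
    return lam * np.sum(np.abs(w))

def l2_penalty(w, lam):
    """L2 term added to the loss: lam * sum(w^2)."""
    return lam * np.sum(w ** 2)

def adam_step(w, grad, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; `state` holds the running gradient moments."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2
    m_hat = state["m"] / (1 - beta1 ** state["t"])   # bias correction
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    # Per-parameter step size: large past gradients shrink the step.
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)

# Toy loss: ||w - target||^2 + lam * ||w||^2
target = np.array([3.0, -1.0])
lam = 0.5
w = np.zeros(2)
state = {"t": 0, "m": np.zeros(2), "v": np.zeros(2)}

for _ in range(2000):
    grad = 2.0 * (w - target) + 2.0 * lam * w   # data term + L2 penalty term
    w = adam_step(w, grad, state, lr=0.01)

print(w, target / (1.0 + lam))
```

Without the penalty the minimum would sit at `target`; with it, the solution is pulled toward zero to `target / (1 + lam)`, which is the weight-shrinking effect the penalty is meant to have.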

Convolutional neural networks have been used extensively for image recognition tasks like object detection, scene understanding, and image classification. In object detection, CNNs locate and categorize objects within an image, for example by dividing the image into a grid of cells and predicting bounding boxes and class probabilities for each cell.
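The grid-of-cells idea can be illustrated by decoding a small prediction tensor into boxes. The layout assumed here, `[x_offset, y_offset, width, height, objectness, class probabilities...]` per cell with one box per cell, is loosely modeled on YOLO-style detectors and is an assumption of this sketch, not a fixed standard; real detectors add multiple boxes per cell and non-maximum suppression.

```python
import numpy as np

def decode_grid(pred, threshold=0.5):
    """Turn an (S, S, 5 + C) grid of predictions into a list of detections.

    Offsets are relative to the cell; width and height are relative to the
    whole image. Boxes are returned as (x_min, y_min, x_max, y_max) in [0, 1].
    """
    S = pred.shape[0]
    detections = []
    for row in range(S):
        for col in range(S):
            x_off, y_off, w, h, objectness = pred[row, col, :5]
            class_probs = pred[row, col, 5:]
            class_id = int(np.argmax(class_probs))
            score = float(objectness * class_probs[class_id])
            if score < threshold:
                continue          # this cell does not confidently see an object
            cx = (col + x_off) / S
            cy = (row + y_off) / S
            box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
            detections.append((box, class_id, score))
    return detections

# Hypothetical network output: 3x3 grid, 2 classes, one confident cell.
pred = np.zeros((3, 3, 7))
pred[1, 2] = [0.5, 0.5, 0.2, 0.4, 0.9, 0.1, 0.9]

detections = decode_grid(pred)
print(detections)
```

Only the cell at row 1, column 2 passes the threshold, so the output is a single box centered in that cell with class 1.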

Scene understanding is the process of analyzing a whole image with CNNs to determine the semantic meaning by identifying objects, scenes, and connections between various elements. Assigning a label or category to an input image based on its content—for example, determining if an image features a dog or a cat—is known as image classification. Medical image analysis tasks like organ segmentation, disease classification, and tumor detection have also been handled by CNNs.
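For image classification, the last step is usually to turn the network's raw scores into class probabilities with a softmax and pick the most likely label. The scores below are hypothetical stand-ins for the output of a final fully connected layer.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    shifted = logits - np.max(logits)   # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

labels = ["cat", "dog"]
logits = np.array([1.2, 3.1])           # hypothetical raw scores for one image

probs = softmax(logits)
predicted = labels[int(np.argmax(probs))]
print(predicted, probs)
```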

They are well suited to analyzing complex medical images and helping medical professionals make accurate diagnoses because they can automatically extract relevant features from raw pixel data.

Convolutional neural networks are extended to video recognition by processing sequential frames from a video stream with 3D convolutional layers, which capture spatial and temporal information simultaneously.
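A 3D convolution slides a kernel over time as well as height and width. The sketch below is a naive NumPy version for a single-channel clip; the toy video and the hand-built "rightward motion" kernel are assumptions chosen to show why spanning both time and space matters, whereas a trained network would learn its kernels.

```python
import numpy as np

def conv3d(video, kernel):
    """Naive 3D cross-correlation over (time, height, width), no padding."""
    kt, kh, kw = kernel.shape
    T, H, W = video.shape
    out = np.zeros((T - kt + 1, H - kh + 1, W - kw + 1))
    for t in range(out.shape[0]):
        for i in range(out.shape[1]):
            for j in range(out.shape[2]):
                window = video[t:t + kt, i:i + kh, j:j + kw]
                out[t, i, j] = np.sum(window * kernel)
    return out

# Toy clip: one bright pixel moving one step to the right per frame.
moving = np.zeros((4, 5, 5))
for t in range(4):
    moving[t, 2, t] = 1.0

# Same pixel, but standing still.
static = np.zeros((4, 5, 5))
static[:, 2, 2] = 1.0

# Kernel spanning 2 frames and 2 columns: it fires most strongly when a
# pixel is at column j in one frame and column j + 1 in the next.
kernel = np.zeros((2, 1, 2))
kernel[0, 0, 0] = 1.0
kernel[1, 0, 1] = 1.0

moving_response = conv3d(moving, kernel)
static_response = conv3d(static, kernel)
print(moving_response.max(), static_response.max())
```

The moving clip produces a stronger peak response than the static one, even though any single frame of the two clips looks equally "bright". That difference is information a purely 2D, frame-by-frame filter cannot see.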

Video recognition tasks include action recognition, video summarization, and content-based video classification. Action recognition is the process of recognizing particular movements or activities that objects or people perform in a video sequence. The goal of video summarization is to distill lengthy videos into shorter segments that highlight their most important parts. Video classification assigns a category or label to an entire video based on its content.

CNNs have also been used in video surveillance systems to perform tasks like crowd behavior analysis, abnormal event detection, and person re-identification. By analyzing video streams in real time, CNNs can help identify possible security threats or unusual behavior in crowded environments.

Agriculture and Transportation

In autonomous cars, CNNs are used to detect objects, pedestrians, and roads in order to support safe navigation and collision avoidance.

In agriculture, CNNs are used for crop monitoring, disease detection, and yield prediction in order to improve farming techniques.

The Retail and Financial Sectors

In retail, CNNs are used for inventory control, customer behavior analysis, and product recognition to improve customer experiences and streamline operations.

In finance, CNNs are used for fraud detection, risk assessment, and algorithmic trading to strengthen investment strategies and security protocols.

Natural Language Processing

CNNs have also been used in natural language processing tasks like sentiment analysis, language translation, and speech recognition, often alongside recurrent neural networks or attention mechanisms, to transform text or audio inputs into meaningful representations.

Future research on convolutional neural networks is expected to focus on improvements in interpretability, robustness, and efficiency.

Interpretable CNN models, which visualize the significant features or attention maps that influence their predictions, will give a deeper understanding of how the models reach their decisions. Robust CNN models will be built to withstand adversarial attacks, which try to fool a model by subtly altering input images. Efficient CNN architectures that use methods like model distillation or neural architecture search will be created to lower computational costs while preserving high accuracy. Advances in hardware accelerators like GPUs and TPUs will also drive innovation in CNN research by enabling shorter training times and larger models.
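To show what "subtly altering" an input means in practice, here is a sketch of one well-known attack, the fast gradient sign method (FGSM). For brevity it attacks a hand-written logistic model instead of a CNN; the weights, the input, and `epsilon` are made-up values, but the attack step itself (move each input value by a small fixed amount in the direction that increases the loss) is the same for deep networks.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, epsilon):
    """Perturb x by at most epsilon per feature to increase the loss."""
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w        # gradient of cross-entropy w.r.t. the input
    return x + epsilon * np.sign(grad_x)

# Hypothetical trained model and a correctly classified input (true label 1).
w = np.array([2.0, -1.0, 0.5])
b = 0.0
x = np.array([0.3, -0.2, 0.1])
y = 1.0

x_adv = fgsm(x, y, w, b, epsilon=0.3)

p_clean = sigmoid(w @ x + b)
p_adv = sigmoid(w @ x_adv + b)
print(p_clean, p_adv)
```

Each feature moves by no more than `epsilon`, yet the predicted probability crosses 0.5 and the label flips. Robustness research aims to make models resistant to this kind of small, targeted change.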

Integrating CNNs with other AI methods like generative adversarial networks or reinforcement learning will also pave the way for further advances in fields like autonomous robotics and creative content creation.


FAQs

What is a convolutional neural network (CNN)?

A convolutional neural network (CNN) is a type of deep learning algorithm that is commonly used for image recognition and classification tasks. It is designed to automatically and adaptively learn spatial hierarchies of features from input data.

How does a convolutional neural network work?

A CNN works by using a series of convolutional layers to extract features from input images. These features are then passed through additional layers such as pooling and fully connected layers to make predictions about the input data.

What are the advantages of using convolutional neural networks?

Some advantages of using CNNs include their ability to automatically learn features from raw data, their effectiveness in handling large and complex datasets, and their ability to generalize well to new, unseen data.

What are some common applications of convolutional neural networks?

CNNs are commonly used in applications such as image recognition, object detection, facial recognition, medical image analysis, and autonomous vehicles.

What are some popular CNN architectures?

Some popular CNN architectures include AlexNet, VGG, ResNet, and Inception. These architectures have been widely used and have achieved state-of-the-art performance in various image recognition tasks.
