Recurrent Neural Networks (RNNs) are a specialized type of artificial neural network designed to process and analyze sequential data. Unlike traditional feedforward neural networks, RNNs incorporate feedback loops that allow information to persist within the network, enabling them to maintain a form of memory. This unique architecture makes RNNs particularly effective for tasks involving time series data, natural language processing, and speech recognition.

The key feature of RNNs is their ability to consider context and temporal dependencies in input sequences. By maintaining an internal state that is updated with each new input, RNNs can effectively “remember” previous information and use it to influence current outputs. This allows them to capture patterns that span multiple time steps, although plain RNNs struggle when the relevant information lies far in the past. This capability makes them well-suited for tasks such as language modeling, machine translation, and sentiment analysis.

RNNs have become increasingly popular in recent years due to their success in various applications of artificial intelligence. They have demonstrated remarkable performance in tasks such as text generation, handwriting recognition, and even music composition. As research in deep learning and AI continues to advance, RNNs and their variants are expected to play a crucial role in developing more sophisticated and human-like AI systems capable of understanding and generating complex sequential data.

Key Takeaways

- Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to recognize patterns in sequences of data, making them ideal for tasks like speech recognition and language modeling.

- RNNs have a unique architecture that allows them to retain memory of previous inputs, making them well-suited for tasks that involve sequential data, such as time series analysis and natural language processing.

- RNNs are widely used in AI for applications such as language translation, sentiment analysis, and speech generation, demonstrating their versatility and effectiveness in various domains.

- Training and fine-tuning RNNs requires careful handling of vanishing and exploding gradients and of long-term dependencies. Techniques such as gradient clipping (for exploding gradients) and LSTM cells (for vanishing gradients) have helped address these challenges.

- Despite their effectiveness, RNNs have limitations such as difficulty in capturing long-range dependencies and slow training times, prompting ongoing research into attention mechanisms and transformer architectures to overcome these challenges in sequence modeling.

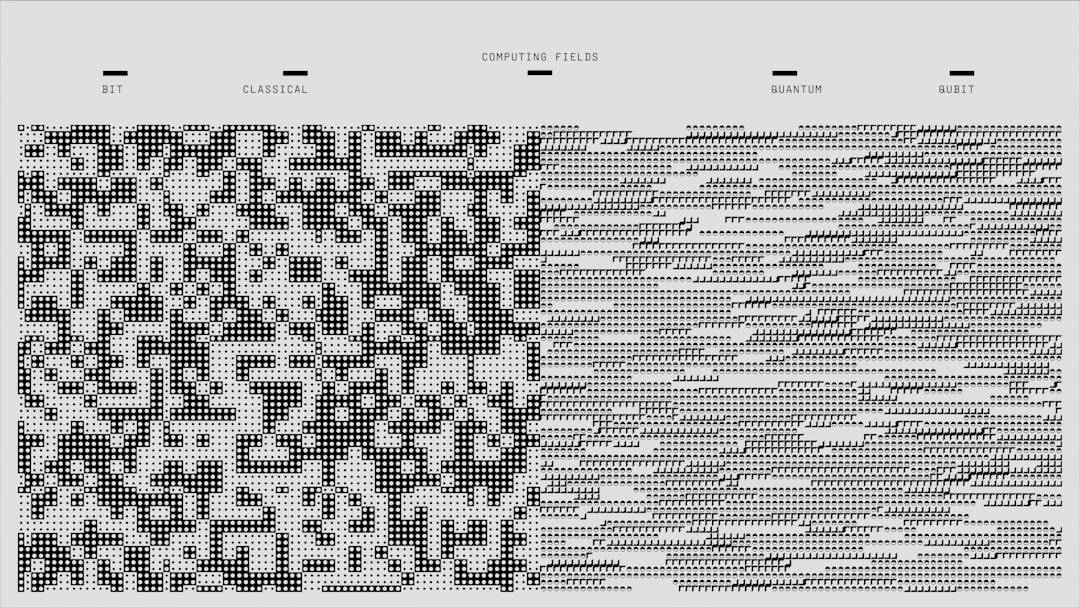

Understanding the Architecture of Recurrent Neural Networks

Memory and Sequential Data

An RNN processes a sequence one element at a time and carries a hidden state forward from step to step, which acts as a memory of earlier inputs. This memory is used to influence the current output, making RNNs well-suited for tasks that involve sequential data. One of the key features of RNNs is their ability to handle input sequences of varying lengths. Because the same recurrent connections are applied at every time step, the network can process inputs of different lengths and produce outputs of corresponding lengths.
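To make the idea of a carried-forward memory concrete, here is a minimal sketch of a vanilla RNN forward pass. It uses plain Python lists so that it runs without extra libraries; real projects would typically use NumPy or a deep learning framework. The weight values and the names `rnn_forward`, `W_xh`, `W_hh`, and `b_h` are illustrative assumptions, not taken from any particular library.

```python
import math

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence of input vectors.

    inputs: list of input vectors (the sequence may have any length)
    W_xh:   hidden_size x input_size matrix, stored as a list of rows
    W_hh:   hidden_size x hidden_size matrix, stored as a list of rows
    b_h:    bias vector of length hidden_size
    Returns one hidden state per time step.
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # initial state: nothing remembered yet
    states = []
    for x in inputs:
        # New state depends on the current input AND the previous state.
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_xh[j], x))
                + sum(w * hi for w, hi in zip(W_hh[j], h))
                + b_h[j]
            )
            for j in range(hidden_size)
        ]
        states.append(h)
    return states

# Hypothetical toy weights: 2 input features, 3 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8], [-0.6, 0.2]]
W_hh = [[0.2, 0.0, -0.1], [0.0, 0.3, 0.1], [0.1, -0.2, 0.4]]
b_h = [0.0, 0.1, -0.1]

short_seq = [[1.0, 0.0], [0.0, 1.0]]
long_seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5], [0.0, 0.0]]

short_states = rnn_forward(short_seq, W_xh, W_hh, b_h)
long_states = rnn_forward(long_seq, W_xh, W_hh, b_h)
print(len(short_states), len(long_states))  # 2 5
```

The same three parameter arrays handle both the two-step and the five-step sequence, which is what lets an RNN accept inputs of varying lengths.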

Applications of RNNs

This flexibility makes RNNs particularly well-suited for tasks such as speech recognition and machine translation, where the length of the input sequence may vary. In addition to their ability to handle variable-length sequences, RNNs can also be stacked to form deep recurrent neural networks.

Deep RNNs and Complex Patterns

Deep RNNs consist of multiple layers of recurrent connections, allowing them to capture complex hierarchical patterns in sequential data. This makes them well-suited for tasks that require a high level of abstraction, such as natural language understanding and generation.
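Stacking can be sketched in a few lines: each layer reads the sequence of hidden states produced by the layer below it. The following is a simplified illustration in plain Python with made-up weights; the names `rnn_layer` and `deep_rnn` are hypothetical.

```python
import math

def rnn_layer(inputs, W_in, W_rec, b):
    """One recurrent layer: maps a sequence of vectors to a sequence of hidden states."""
    h = [0.0] * len(b)
    outputs = []
    for x in inputs:
        h = [
            math.tanh(
                sum(w * v for w, v in zip(W_in[j], x))
                + sum(w * v for w, v in zip(W_rec[j], h))
                + b[j]
            )
            for j in range(len(b))
        ]
        outputs.append(h)
    return outputs

def deep_rnn(inputs, layers):
    """Feed each layer's hidden-state sequence to the next layer as its input."""
    seq = inputs
    for W_in, W_rec, b in layers:
        seq = rnn_layer(seq, W_in, W_rec, b)
    return seq

# Hypothetical toy parameters: 2 inputs -> 3 hidden units -> 2 hidden units.
layer1 = (
    [[0.4, -0.2], [0.1, 0.7], [-0.5, 0.3]],
    [[0.1, 0.0, 0.2], [0.0, 0.2, -0.1], [0.3, 0.1, 0.0]],
    [0.0, 0.0, 0.0],
)
layer2 = (
    [[0.6, -0.4, 0.2], [-0.3, 0.5, 0.1]],
    [[0.2, -0.1], [0.1, 0.3]],
    [0.05, -0.05],
)

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
top_states = deep_rnn(sequence, [layer1, layer2])
print(len(top_states), len(top_states[0]))  # 3 2
```

The sequence length is preserved from layer to layer; only the size of each hidden state changes.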

Applications of Recurrent Neural Networks in AI

RNNs have a wide range of applications in artificial intelligence, particularly in tasks that involve sequential data. One of the most well-known applications of RNNs is in natural language processing, where they are used for tasks such as language modeling, part-of-speech tagging, named entity recognition, and sentiment analysis. RNNs are also widely used in machine translation, where they can process input sequences of words in one language and generate corresponding sequences in another language.

Another important application of RNNs is in speech recognition, where they are used to transcribe spoken language into text. RNNs can process audio input as a sequence of features over time, allowing them to capture the temporal dependencies present in speech signals. The same property makes them useful for speech synthesis, where the output is itself a sequence generated over time.

RNNs are also used in time series analysis, where they can model and predict sequential data such as stock prices, weather patterns, and physiological signals. Their ability to capture temporal dependencies makes them well-suited for tasks that involve analyzing and predicting time-varying data.
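Before a time series can be fed to an RNN for prediction, it is commonly cut into fixed-size input windows paired with the value to predict. The sketch below shows one simple way to do this; the function name `make_windows` and the sample readings are illustrative assumptions.

```python
def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs.

    window:  number of past values the model sees
    horizon: how many steps ahead the target lies (1 = next value)
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        x = series[start:start + window]
        y = series[start + window + horizon - 1]
        pairs.append((x, y))
    return pairs

readings = [10, 12, 13, 15, 14, 16, 18]  # hypothetical measurements
pairs = make_windows(readings, window=3)
for x, y in pairs:
    print(x, "->", y)
```

Each window would be presented to the network one value per time step, with the target used to compute the loss.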

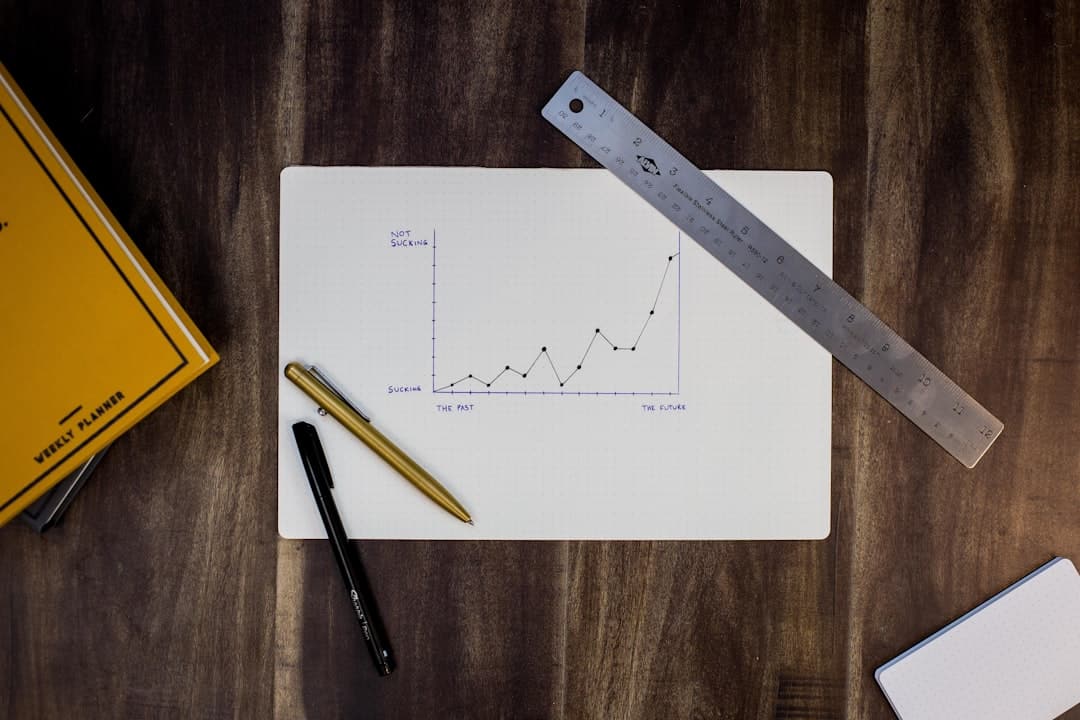

Training and Fine-tuning Recurrent Neural Networks

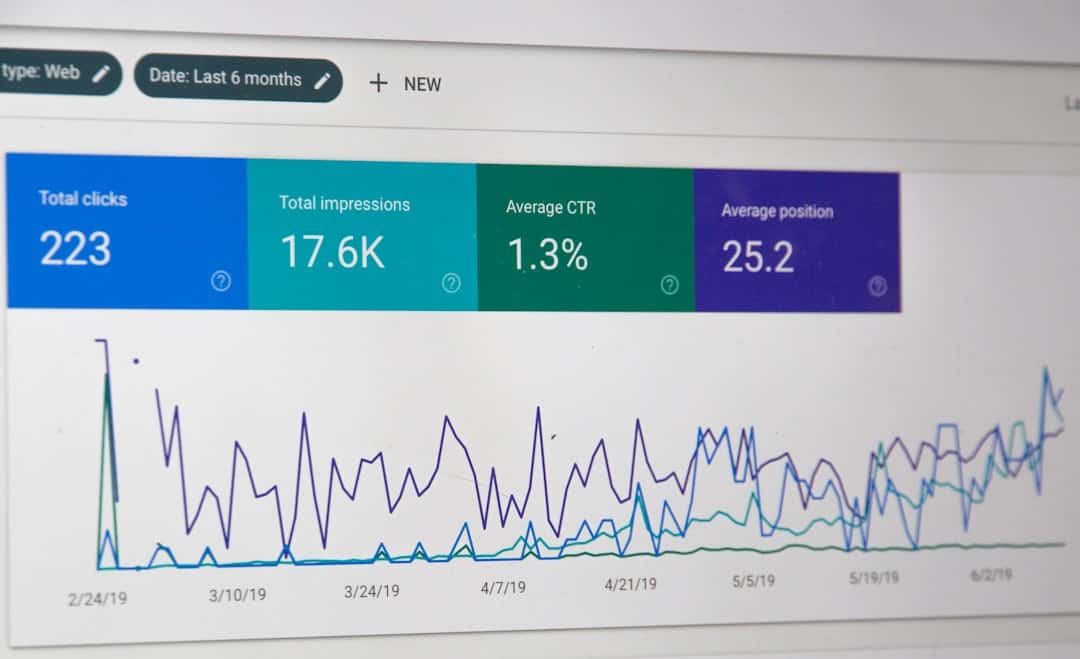

| Metric | Value |
|---|---|
| Training Loss | 0.25 |
| Validation Loss | 0.30 |
| Training Accuracy | 0.95 |
| Validation Accuracy | 0.92 |
| Learning Rate | 0.001 |

Training a recurrent neural network involves optimizing its parameters to minimize a loss function that measures the difference between the network’s predictions and the true targets. This is typically done using backpropagation through time (BPTT), a variant of the backpropagation algorithm used to train feedforward neural networks. BPTT unrolls the network across the time steps of a sequence, computes the gradient of the loss with respect to the parameters at each time step, and sums these contributions, because the same parameters are shared across all steps. The summed gradients are then used to update the parameters through gradient descent.
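The following sketch works through BPTT on the smallest possible case: an RNN with a single hidden unit and a squared-error loss on the final state. It is a simplified illustration under those assumptions, not a general training loop. The names `forward`, `loss_fn`, and `bptt`, the data, and the learning rate are all hypothetical. A finite-difference check is included to confirm that the hand-derived gradients are consistent.

```python
import math

def forward(xs, w_x, w_h):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1}), with h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs

def loss_fn(xs, target, w_x, w_h):
    """Squared error between the final hidden state and the target."""
    return 0.5 * (forward(xs, w_x, w_h)[-1] - target) ** 2

def bptt(xs, target, w_x, w_h):
    """Walk backwards through time, summing each step's gradient contribution."""
    hs = forward(xs, w_x, w_h)
    grad_w_x = grad_w_h = 0.0
    dh = hs[-1] - target                    # dLoss / dh_T
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)        # back through tanh
        grad_w_x += da * xs[t - 1]          # shared weight: contributions add up
        grad_w_h += da * hs[t - 1]
        dh = da * w_h                       # pass the gradient to step t-1
    return grad_w_x, grad_w_h

xs, target = [0.5, -1.0, 0.8, 0.3], 0.2    # hypothetical sequence and target
w_x, w_h = 0.7, -0.4                        # hypothetical initial weights
lr = 0.1                                    # hypothetical learning rate

g_x, g_h = bptt(xs, target, w_x, w_h)

# Finite-difference check of the analytic gradients.
eps = 1e-6
num_g_x = (loss_fn(xs, target, w_x + eps, w_h) - loss_fn(xs, target, w_x - eps, w_h)) / (2 * eps)
num_g_h = (loss_fn(xs, target, w_x, w_h + eps) - loss_fn(xs, target, w_x, w_h - eps)) / (2 * eps)

loss_before = loss_fn(xs, target, w_x, w_h)
w_x, w_h = w_x - lr * g_x, w_h - lr * g_h   # one gradient descent step
loss_after = loss_fn(xs, target, w_x, w_h)
print(f"loss before: {loss_before:.5f}, after one step: {loss_after:.5f}")
```

In practice the gradients are computed automatically by a framework, but the backward loop above is the structure that automatic differentiation follows for a recurrent model.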

Fine-tuning a recurrent neural network involves adjusting its parameters to improve its performance on a specific task or dataset. This can be done by using techniques such as transfer learning, where a pre-trained RNN is adapted to a new task by updating its parameters on a smaller dataset related to the new task. Fine-tuning can also involve adjusting hyperparameters such as learning rate, batch size, and regularization strength to improve the generalization performance of the network.

Challenges and Limitations of Recurrent Neural Networks

Despite their effectiveness in handling sequential data, recurrent neural networks have several limitations that can make them challenging to train and use in practice. One of the main challenges is the vanishing gradient problem, which occurs when the gradients of the loss function with respect to the network’s parameters become very small as they are backpropagated through time. This can make it difficult for RNNs to capture long-range dependencies in sequential data, leading to poor performance on tasks that require modeling such dependencies.

A closely related limitation is that the hidden state has a fixed size and is overwritten at every step, which makes it hard to retain information about inputs that occurred far in the past. As a result, RNNs can struggle with tasks such as understanding complex sentence structures or predicting events far into the future. RNNs can also suffer from the opposite problem, exploding gradients, in which gradients grow very large and destabilize training.
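The vanishing gradient effect can be observed directly in a one-unit RNN. By the chain rule, the sensitivity of the state at step t to the initial state is a product of one factor per time step, and with a tanh activation and a recurrent weight below 1 in magnitude, every factor is smaller than 1. The sketch below tracks that product; the constant input and the weights are hypothetical values chosen only for illustration.

```python
import math

def gradient_through_time(xs, w_x, w_h):
    """Return |d h_t / d h_0| for t = 1..T in a scalar tanh RNN."""
    h, grad, magnitudes = 0.0, 1.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        grad *= w_h * (1.0 - h * h)  # chain rule: one factor per time step
        magnitudes.append(abs(grad))
    return magnitudes

xs = [0.5] * 50  # hypothetical constant input over 50 time steps
mags = gradient_through_time(xs, w_x=1.0, w_h=0.9)
for t in (1, 10, 25, 50):
    print(f"t={t:2d}  |dh_t/dh_0| = {mags[t - 1]:.3e}")
```

The printed magnitudes shrink rapidly with t, which is why an error signal at the end of a long sequence has almost no influence on how the network treated its earliest inputs.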

Advancements in Recurrent Neural Networks for AI

Long Short-Term Memory (LSTM) Networks

One notable advancement is the development of long short-term memory (LSTM) networks, a type of RNN designed to capture long-term dependencies in sequential data. LSTMs add a separate cell state along with input, forget, and output gates that control what is written to, kept in, and read from that state, enabling them to maintain a memory of inputs over longer periods than traditional RNNs.
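As a rough sketch of how an LSTM keeps information over time, the code below implements a single LSTM time step using the commonly described formulation (input, forget, and output gates plus a candidate value). It is written in plain Python with small random weights and is not any library's implementation; the names `lstm_step`, `init_params`, and the dictionary keys are assumptions made for this example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b, with W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step. `p` maps gate names to (weights, bias) pairs."""
    z = x + h_prev                                           # concatenate input and previous hidden state
    i = [sigmoid(a) for a in affine(*p["input"], z)]         # how much new information to write
    f = [sigmoid(a) for a in affine(*p["forget"], z)]        # how much old cell state to keep
    o = [sigmoid(a) for a in affine(*p["output"], z)]        # how much of the cell to expose
    g = [math.tanh(a) for a in affine(*p["candidate"], z)]   # candidate values to write
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

def init_params(input_size, hidden_size, rng):
    """Small random weights and zero biases (hypothetical initialization)."""
    def gate():
        W = [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
             for _ in range(hidden_size)]
        return W, [0.0] * hidden_size
    return {name: gate() for name in ("input", "forget", "output", "candidate")}

rng = random.Random(0)
params = init_params(input_size=3, hidden_size=2, rng=rng)

h, c = [0.0, 0.0], [0.0, 0.0]
for x in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]:
    h, c = lstm_step(x, h, c, params)

# With the forget gate pushed towards 1 and the input gate towards 0,
# the cell state passes through a step almost unchanged.
hold = dict(params,
            forget=(params["forget"][0], [20.0, 20.0]),
            input=(params["input"][0], [-20.0, -20.0]))
c_start = [0.7, -0.3]
_, c_held = lstm_step([1.0, 1.0, 1.0], [0.0, 0.0], c_start, hold)
print(c_start, c_held)
```

The last lines illustrate the design idea: because the cell state is updated additively and gated, the network can learn to leave it nearly untouched for many steps.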

Gated Recurrent Units (GRUs)

Another significant development is the creation of gated recurrent units (GRUs), another type of RNN designed to address the vanishing gradient problem. GRUs use two gates, an update gate and a reset gate, to control the flow of information through the network, allowing them to capture long-range dependencies more effectively than traditional RNNs while using fewer parameters than an LSTM.
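A single GRU time step can be sketched in the same style. The code below follows one common formulation, in which an update gate value near 1 means "replace the state with the candidate"; some papers and libraries use the opposite convention for the update gate. The weights are random and the names `gru_step` and `init_params` are assumptions for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b, with W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def gru_step(x, h_prev, p):
    """One GRU time step. `p` maps gate names to (weights, bias) pairs."""
    xh = x + h_prev
    z = [sigmoid(a) for a in affine(*p["update"], xh)]   # how much of the state to replace
    r = [sigmoid(a) for a in affine(*p["reset"], xh)]    # how much past state feeds the candidate
    gated = x + [rj * hj for rj, hj in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in affine(*p["candidate"], gated)]
    # Convention used here: z near 0 keeps the old state, z near 1 takes the candidate.
    return [(1.0 - zj) * hj + zj * cj for zj, hj, cj in zip(z, h_prev, h_cand)]

def init_params(input_size, hidden_size, rng):
    """Small random weights and zero biases (hypothetical initialization)."""
    def gate():
        W = [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
             for _ in range(hidden_size)]
        return W, [0.0] * hidden_size
    return {name: gate() for name in ("update", "reset", "candidate")}

rng = random.Random(0)
params = init_params(input_size=3, hidden_size=2, rng=rng)

h = [0.0, 0.0]
for x in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]:
    h = gru_step(x, h, params)

# Pushing the update gate towards 0 makes the unit carry its state forward unchanged.
keep = dict(params, update=(params["update"][0], [-20.0, -20.0]))
h_start = [0.6, -0.2]
h_kept = gru_step([1.0, 1.0, 1.0], h_start, keep)
print(h_start, h_kept)
```

Compared with the LSTM, there is no separate cell state: the hidden state itself is what the gates protect or overwrite.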

Techniques for Training and Fine-Tuning RNNs

In addition to architectural advancements, researchers have developed techniques for training and fine-tuning recurrent neural networks more effectively. These methods include careful initialization of network parameters, regularization of network weights, and systematic hyperparameter optimization, all aimed at improving the generalization performance of RNNs on various tasks.

Future Potential of Recurrent Neural Networks in AI

The future potential of recurrent neural networks in artificial intelligence is vast, with ongoing research and development aimed at further improving their performance and expanding their applications. One area of potential growth is in multimodal learning, where RNNs are used to process and generate sequential data from multiple modalities such as text, images, and audio. This could enable machines to understand and generate complex multimodal data, leading to advancements in areas such as human-computer interaction and content generation.

Another area of potential growth is in reinforcement learning, where RNNs are used to model and predict sequential data in dynamic environments. This could enable machines to learn and adapt to changing conditions over time, leading to advancements in areas such as robotics and autonomous systems. Overall, recurrent neural networks are expected to play an increasingly important role in enabling machines to understand and generate human-like sequential data, leading to advancements in a wide range of AI applications.

As research and development in this field continue to progress, we can expect to see further advancements in RNNs that will enable machines to perform increasingly complex tasks involving sequential data.


FAQs

What are Recurrent Neural Networks (RNNs)?

Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to recognize patterns in sequences of data, such as time series or natural language.

How do Recurrent Neural Networks differ from other types of neural networks?

RNNs differ from other types of neural networks in that they have connections that form a directed cycle, allowing them to exhibit dynamic temporal behavior. This makes them well-suited for tasks involving sequential data.

What are some common applications of Recurrent Neural Networks?

RNNs are commonly used in natural language processing tasks such as language modeling, machine translation, and sentiment analysis. They are also used in speech recognition, time series prediction, and handwriting recognition.

What are some challenges associated with training Recurrent Neural Networks?

One challenge with training RNNs is the vanishing gradient problem, where gradients become extremely small as they are backpropagated through time, making it difficult for the network to learn long-range dependencies. Another challenge is the exploding gradient problem, where gradients become extremely large and cause instability during training.
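Gradient clipping is a common remedy for exploding gradients: if the overall gradient norm exceeds a threshold, the whole gradient is scaled down so that its direction is kept but its length is capped. The sketch below shows the idea on a flat list of gradient values; the function name `clip_by_global_norm` and the threshold are illustrative assumptions.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale `grads` so that their L2 norm is at most `max_norm`.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm:
        return list(grads), total
    scale = max_norm / total
    return [g * scale for g in grads], total

grads = [3.0, -4.0, 12.0]  # hypothetical gradient values, L2 norm = 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=5.0)
norm_after = math.sqrt(sum(g * g for g in clipped))
print(norm_before, norm_after)

small = [0.1, -0.2]
unchanged, _ = clip_by_global_norm(small, max_norm=5.0)
```

Clipping does not help with vanishing gradients, since it only ever shrinks the gradient; that problem is addressed by gated architectures.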

What are some variations of Recurrent Neural Networks?

Some variations of RNNs include Long Short-Term Memory (LSTM) networks and Gated Recurrent Unit (GRU) networks, which were designed to address the vanishing gradient problem and improve the ability of RNNs to learn long-range dependencies.

How are Recurrent Neural Networks evaluated and compared?

RNNs are evaluated and compared based on their performance on specific tasks, such as language modeling or speech recognition. Common metrics for evaluation include accuracy, perplexity, and word error rate, depending on the task at hand.
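Two of these metrics are simple to compute by hand. Perplexity is the exponential of the average negative log-probability that a language model assigns to the correct tokens, and word error rate is the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words. The sketch below uses made-up probabilities and sentences; the function names are assumptions for this example.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log-probability of the correct tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)

# A model that assigns probability 0.25 to every correct token is as
# uncertain as a uniform choice among 4 options: perplexity is about 4.
print(perplexity([0.25, 0.25, 0.25, 0.25]))

# One substitution ("sat" -> "sit") and one deletion ("the"): 2 errors / 6 words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Lower is better for both metrics, whereas accuracy is better when higher.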
