Neural networks are a core idea in artificial intelligence and machine learning. They are computational models, loosely inspired by the structure and function of the human brain, that process complex data through layers of interconnected nodes, or neurons. These networks can recognize patterns in data, learn from examples, and make decisions based on the information fed to them. A neural network is composed of multiple layers: an input layer that receives the data, one or more hidden layers that transform it, and an output layer that produces the result. Each connection between neurons carries a weight that determines its strength.
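As a rough illustration of the layered structure described above, the following sketch passes one input vector through a single hidden layer and an output layer. The layer sizes, the random weights, and the sigmoid activation are assumptions chosen for the example, not details taken from any particular model.

```python
import numpy as np

def sigmoid(z):
    """Squash values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Pass one input vector through a hidden layer and an output layer."""
    hidden = sigmoid(w_hidden @ x + b_hidden)   # hidden-layer activations
    return sigmoid(w_out @ hidden + b_out)      # output-layer activations

rng = np.random.default_rng(0)
# Hypothetical sizes: 3 inputs, 4 hidden neurons, 2 outputs.
w_hidden, b_hidden = rng.normal(size=(4, 3)), np.zeros(4)
w_out, b_out = rng.normal(size=(2, 4)), np.zeros(2)

x = np.array([0.5, -1.2, 3.0])
y = forward(x, w_hidden, b_hidden, w_out, b_out)
print(y.shape)  # one value per output neuron
```

Each row of a weight matrix holds the connection strengths feeding one neuron, so changing those numbers changes what the network computes.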

Key Takeaways

- Neural networks are a type of machine learning model inspired by the human brain, consisting of interconnected nodes that process and transmit information.

- Training neural networks involves feeding them with large amounts of data to adjust the weights and biases of the connections between nodes, allowing them to learn and make predictions.

- Optimizing neural networks involves techniques such as regularization, dropout, and batch normalization to improve their performance and prevent overfitting.

- Applying neural networks to real-world problems has led to advancements in fields such as image and speech recognition, natural language processing, and autonomous vehicles.

- Advancements in neural network technology include the development of deep learning, reinforcement learning, and generative adversarial networks, expanding their capabilities and applications.

- Challenges and limitations of neural networks include the need for large amounts of data, computational resources, and the potential for biased or inaccurate predictions.

- The future of neural networks in machine learning holds promise for continued advancements in AI, with potential applications in healthcare, finance, and other industries.

During training, these weights are adjusted based on the discrepancy between the network’s output and the desired outcome, so the network’s predictions improve over time. Because they can solve intricate problems in domains such as speech and image recognition, natural language processing, and autonomous driving, neural networks have become increasingly prominent in recent years. Training, optimizing, and applying neural networks effectively to real-world problems requires understanding how they work.

Different Forms of Learning

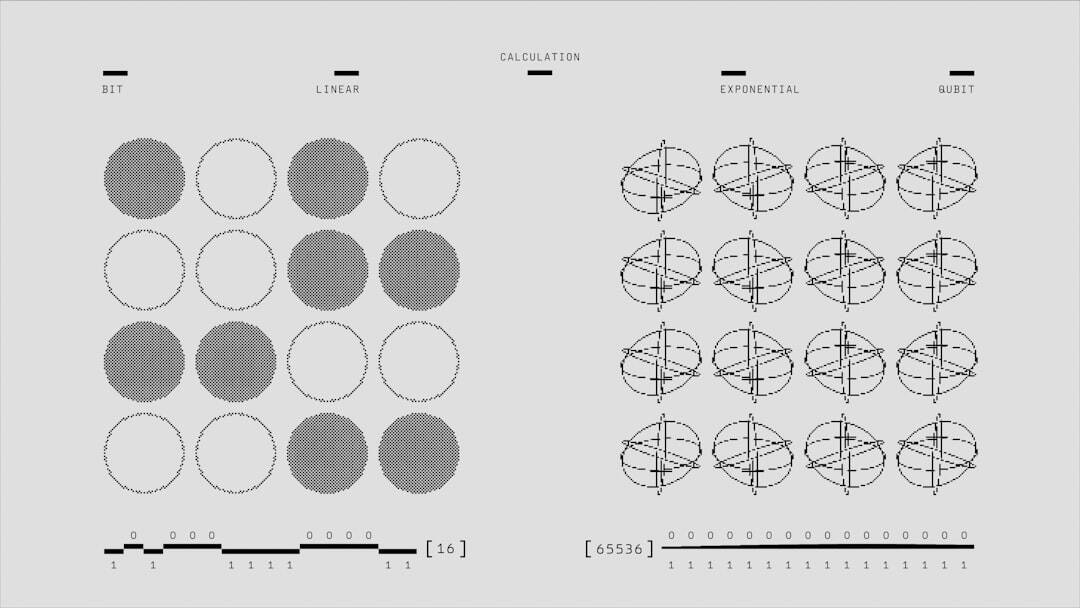

Supervised learning, in which the network is given input-output pairs and is trained to map inputs to outputs, is the most common way to train neural networks. In unsupervised learning, the network discovers patterns and structure in the input data without labeled targets. In reinforcement learning, the network learns to make decisions by interacting with an environment and receiving reward or penalty feedback.
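To make the supervised case concrete, here is a minimal sketch of gradient descent on a single linear neuron. The toy dataset, the learning rate, and the number of steps are assumptions picked so the example converges; real networks apply the same idea to many weights at once.

```python
import numpy as np

# Hypothetical toy dataset of input-output pairs: targets follow y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0          # weight and bias of a single linear neuron
learning_rate = 0.05     # assumed value, chosen for this toy problem

for _ in range(2000):
    pred = w * x + b                     # forward pass
    error = pred - y                     # discrepancy between output and target
    grad_w = 2.0 * np.mean(error * x)    # gradient of mean squared error w.r.t. w
    grad_b = 2.0 * np.mean(error)        # gradient of mean squared error w.r.t. b
    w -= learning_rate * grad_w          # step against the gradient
    b -= learning_rate * grad_b

print(round(float(w), 3), round(float(b), 3))  # approaches w = 2, b = 1
```

The loop repeatedly measures the error on the labeled pairs and nudges the weight and bias in the direction that reduces it.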

Stopping Overfitting

Overfitting occurs when a network performs well on its training data but fails to generalize to new data. To detect it, the data is divided into training and validation sets so that the network’s performance can be assessed on examples it has not seen during training. Regularization techniques such as dropout and weight decay reduce overfitting and improve generalization.

Increasing Efficiency

| Metric | Value |
|---|---|
| Accuracy | 95% |
| Precision | 90% |
| Recall | 85% |
| F1 Score | 87% |
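For readers unfamiliar with these metrics, the sketch below shows how they are commonly computed from confusion-matrix counts for a binary classifier. The function name and the counts are hypothetical and are not the data behind the table.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)   # share of predicted positives that are correct
    recall = tp / (tp + fn)      # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    return accuracy, precision, recall, f1

# Hypothetical counts for a binary classifier on 200 validation examples.
accuracy, precision, recall, f1 = classification_metrics(tp=80, fp=20, fn=10, tn=90)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")
```

Because F1 is the harmonic mean of precision and recall, it always falls between the two.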

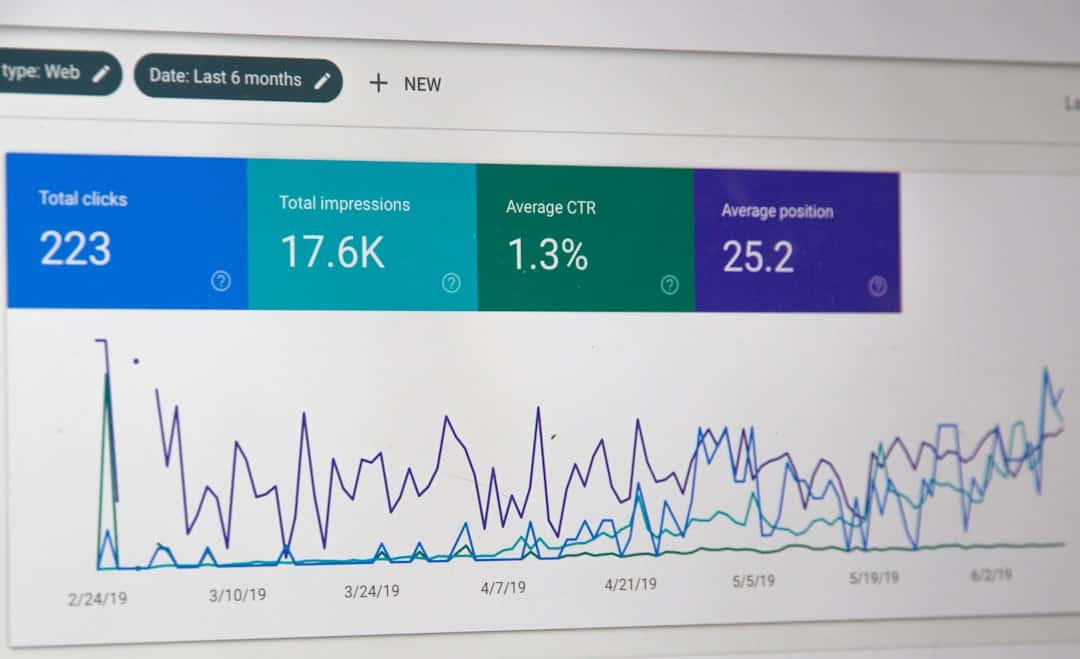

Achieving good performance requires careful choice of hyperparameters, including the learning rate, batch size, and network architecture. It also entails monitoring the training process, examining performance metrics, and adjusting these settings to improve the network’s accuracy and efficiency. Improving a neural network’s performance and efficiency is therefore a matter of optimization.

Tuning the learning rate, momentum, and batch size is one common optimization method that can improve generalization and accelerate convergence. Adaptive optimization algorithms such as Adam and RMSprop can also speed up training and help the network find better solutions. Regularization is another optimization strategy: dropout, which randomly deactivates neurons during training, and weight decay, which penalizes large weights, reduce overfitting and make the network more robust to noise in the input data. Adjusting the architecture by adding layers or neurons, or by changing activation functions, can increase the network’s ability to learn intricate patterns and perform better on difficult tasks. Because added capacity also raises the risk of overfitting, it is crucial to balance the complexity of the model against its capacity for generalization.
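The two regularization techniques named above can be sketched in a few lines. This is an illustrative version only: it assumes the common "inverted" form of dropout and implements weight decay as an L2 penalty added to a plain SGD step; the function names and default values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def dropout(activations, drop_prob, training=True):
    """Inverted dropout: zero random units and rescale the survivors."""
    if not training or drop_prob == 0.0:
        return activations
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob   # keeps the expected value unchanged

def sgd_step(weights, grad, learning_rate=0.1, weight_decay=0.01):
    """One SGD update with an L2 penalty that shrinks large weights."""
    return weights - learning_rate * (grad + weight_decay * weights)

hidden = np.ones(10_000)
dropped = dropout(hidden, drop_prob=0.5)
print(dropped.mean())          # stays near 1.0 despite half the units being zeroed

w = np.array([5.0, -3.0, 0.1])
w_new = sgd_step(w, grad=np.zeros_like(w))
print(w_new)                   # each weight moves slightly toward zero
```

Dropout is applied only during training; at inference time the activations pass through unchanged, which is why the function takes a `training` flag.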

Training can also be made far more efficient through transfer learning, which uses pre-trained models as a foundation for new tasks and is particularly helpful when labeled data is scarce. Fine-tuning a pre-trained model on a new task can deliver strong performance with far fewer computational resources than training from scratch. Neural networks have been used effectively to solve real-world problems in many different fields.

Convolutional neural networks (CNNs) have transformed computer vision tasks such as object detection, image segmentation, and image classification. CNNs automatically learn hierarchical features from raw pixel data and have matched human-level performance on some difficult visual recognition benchmarks. In natural language processing (NLP), recurrent neural networks (RNNs) and transformer models have proven very useful for tasks such as sentiment analysis, text generation, and language translation. These models can capture long-range dependencies in sequential data and produce outputs that are coherent and relevant to the context.

Neural networks have also been used in medicine for drug discovery, disease diagnosis, and medical image analysis. Trained on large-scale medical imaging datasets, convolutional neural networks can identify anomalies in medical images and help radiologists make more accurate and efficient diagnoses. In finance, neural networks have been used for algorithmic trading, risk assessment, and fraud detection. By evaluating vast amounts of financial data, they can assess credit risk, detect fraudulent transactions, and make trading decisions in real time based on market trends.

Neural networks are also essential in autonomous driving, both for perception tasks such as object and lane detection and for path planning. By analyzing sensor data from cameras, lidar, and radar, they help vehicles sense their environment and make decisions in real time. Advances in neural network technology have greatly expanded the capabilities and applications of these models across multiple domains.

One noteworthy development is the creation of multi-layered deep learning architectures that can learn hierarchical representations of data. On complex tasks such as speech recognition, image recognition, and natural language understanding, deep neural networks have outperformed shallower architectures. Another is the emergence of attention mechanisms in neural network architectures, particularly in transformer models for NLP tasks.

Attention mechanisms allow a model to focus on the relevant parts of the input sequence when generating predictions, which improves performance on tasks involving long-range dependencies and context awareness. Developments in hardware, such as graphics processing units (GPUs) and tensor processing units (TPUs), have also sped up neural network training and inference. These specialized platforms process large-scale neural network computations in parallel, which significantly reduces training time for complex models. Model optimization techniques such as knowledge distillation and model pruning have produced smaller, more efficient models with performance close to that of larger ones. Knowledge distillation transfers knowledge from a large teacher model to a smaller student model, while model pruning removes unnecessary parameters from a network with little effect on its overall performance.
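One simple form of pruning removes the weights with the smallest absolute values. The sketch below assumes this magnitude-based criterion, which is only one of several pruning strategies; the function name, the example weights, and the 50% sparsity target are hypothetical.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    k = int(sparsity * weights.size)          # number of weights to remove
    if k == 0:
        return weights.copy()
    # The k-th smallest absolute value serves as the pruning threshold.
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    mask = np.abs(weights) > threshold        # keep only weights above it
    return weights * mask

w = np.array([[0.8, -0.05, 0.3],
              [-0.01, 0.6, -0.2]])
pruned = magnitude_prune(w, sparsity=0.5)
print(pruned)
```

In practice a pruned network is usually fine-tuned afterwards so the remaining weights can compensate for the ones that were removed. When several weights tie at the threshold, this version prunes all of them, so the result can be slightly sparser than requested.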

Developments in generative adversarial networks (GANs) have also made it possible to generate realistic synthetic data for tasks such as text-to-image generation, style transfer, and image synthesis. A GAN consists of a generator and a discriminator network that are trained against each other: the generator produces synthetic samples and the discriminator tries to tell them apart from real data, which pushes the generator toward output that is difficult to distinguish from real data.

Concerns About Privacy and Data Acquisition

One major obstacle is the need for a substantial quantity of labeled data to train high-performing models.

Obtaining labeled data can be expensive and time-consuming, particularly in industries such as finance and healthcare, where data privacy laws limit access to sensitive data.

Security and Interpretability Risks

The interpretability of neural network models presents another difficulty, especially in high-stakes scenarios where understanding a model’s predictions is essential. Because their internal representations are so intricate, it is hard to explain to end users or regulators how neural networks reach their decisions, which is why they are often called “black-box” models. Neural networks are also vulnerable to adversarial attacks, in which carefully constructed perturbations of the input cause the model to produce incorrect outputs with high confidence.

Environmental Impact and Computational Resources

Training large-scale neural networks requires substantial computational resources such as GPUs or TPUs, which brings high energy consumption and an environmental impact. Improving the energy efficiency of training is essential for sustainable deployment, particularly in resource-constrained environments. Neural networks have a bright future in machine learning, with opportunities to advance AI capabilities and address these problems.

Future work should focus on methods for semi-supervised learning and active learning that enable effective learning from small amounts of labeled data. Making use of unlabeled data, or carefully choosing the most informative samples for labeling, reduces the dependence on massive labeled datasets. Another area of interest is using explainable AI techniques to improve the transparency and interpretability of neural network models. Techniques for quantifying model uncertainty, producing human-understandable explanations for predictions, and visualizing model decisions can increase trust in neural network-based systems across a variety of applications.
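The idea of choosing informative samples for labeling can be sketched with uncertainty sampling, one common active learning heuristic. The example assumes the model outputs class probabilities and uses predictive entropy as the uncertainty measure; the function name and the probabilities are hypothetical.

```python
import numpy as np

def select_most_uncertain(probabilities, n_samples):
    """Return indices of the unlabeled samples with the highest predictive entropy."""
    eps = 1e-12  # avoids log(0)
    entropy = -np.sum(probabilities * np.log(probabilities + eps), axis=1)
    return np.argsort(entropy)[::-1][:n_samples]

# Hypothetical class probabilities predicted for four unlabeled samples.
probs = np.array([
    [0.98, 0.02],   # confident prediction
    [0.55, 0.45],   # uncertain
    [0.80, 0.20],
    [0.50, 0.50],   # most uncertain
])
to_label = select_most_uncertain(probs, n_samples=2)
print(to_label)  # indices of the samples to send for labeling
```

Samples on which the model is least sure are sent to a human annotator first, so each new label is likely to be more useful than a randomly chosen one.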

Research is also focused on making neural networks more resilient to adversarial attacks through adversarial training and robust optimization techniques. Models that remain robust to tiny input perturbations while maintaining high accuracy on clean data can improve the security of AI systems in safety-critical domains. Hardware breakthroughs are likewise expected to keep driving innovation in neural network research by enabling faster training and larger model capacities.

Specialized hardware accelerators designed for neural network computations will further democratize access to AI technologies and ease their deployment on edge devices with constrained computational resources.

In conclusion, because neural networks can learn from data and solve complicated problems in a variety of domains, they have transformed machine learning and artificial intelligence. Training them efficiently and getting the most out of them requires a thorough understanding of how they work. Despite challenges such as data requirements and limited interpretability, continued developments in neural network technology hold great promise for overcoming these obstacles and shaping the direction of AI-driven solutions.

Neural networks’ influence on society is expected to grow over the next several years as research continues to extend their capabilities.


FAQs

What is a neural network in machine learning?

A neural network is a type of machine learning algorithm that is inspired by the structure and function of the human brain. It consists of interconnected nodes, or “neurons,” that work together to process and analyze complex data.

How does a neural network learn?

A neural network learns by adjusting the strength of connections between its neurons, a process known as training. During training, the network is exposed to a large amount of labeled data, and it adjusts its internal parameters to minimize the difference between its predictions and the actual labels.

What are the different types of neural networks?

There are several types of neural networks, including feedforward neural networks, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and more. Each type is designed to handle specific types of data and tasks.

What are the applications of neural networks in machine learning?

Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, financial forecasting, medical diagnosis, and more. They are particularly effective for tasks that involve complex patterns and relationships in data.

What are the advantages of using neural networks in machine learning?

Neural networks are capable of learning complex patterns and relationships in data, making them well-suited for tasks that involve non-linear relationships. They can also handle large amounts of data and are adaptable to a wide range of applications.

What are the limitations of neural networks in machine learning?

Neural networks require a large amount of labeled data for training, and they can be computationally intensive, especially for large-scale applications. They also have a tendency to overfit to the training data if not properly regularized.
