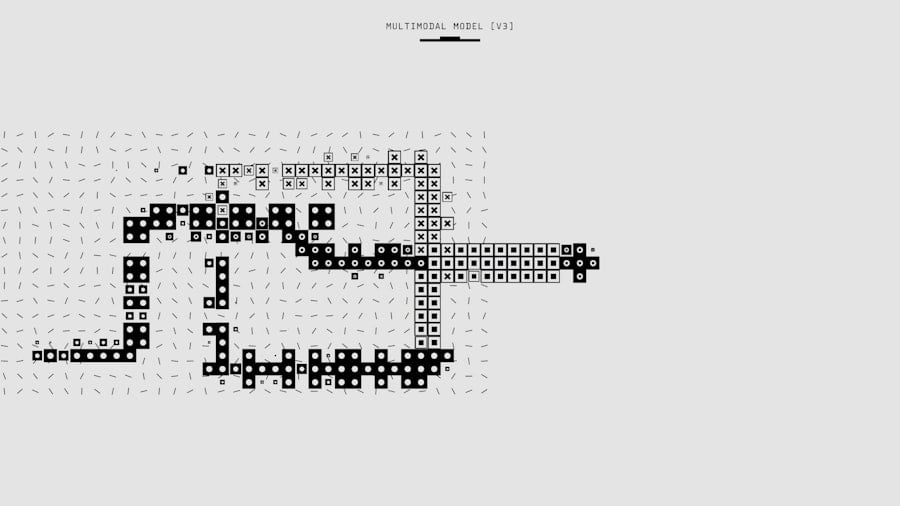

Neural networks are computational models loosely inspired by the structure of the human brain, and they form a key component of modern artificial intelligence (AI). A network consists of interconnected nodes, or neurons, organized in layers. Each neuron computes a weighted sum of its inputs plus a bias term, applies an activation function to the result, and passes that output to the neurons in the next layer.
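The following is a minimal Python sketch of the neuron just described. It computes a weighted sum plus a bias, applies a sigmoid activation, and stacks neurons into fully connected layers. The weights and biases are arbitrary illustrative values rather than values from a trained network. The sigmoid is only one possible activation; ReLU and tanh are common alternatives.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of inputs plus bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def dense_layer(inputs, weight_rows, biases, activation=sigmoid):
    """A fully connected layer: every neuron sees every input."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Tiny 2 -> 2 -> 1 network with made-up (untrained) weights.
x = [1.0, 0.5]
hidden = dense_layer(x, [[0.4, -0.6], [0.3, 0.8]], [0.1, -0.2])
output = dense_layer(hidden, [[1.2, -0.7]], [0.05])
print(hidden, output)
```

Each layer's outputs become the next layer's inputs. Chaining these calls is all a forward pass through a feedforward network does.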

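This layered architecture enables neural networks to learn complex patterns from data, making them effective for tasks such as image recognition, natural language processing, and predictive analytics. In theory, a network with a single, sufficiently wide hidden layer and a suitable non-linear activation can approximate any continuous function on a compact domain to arbitrary accuracy (the universal approximation theorem). This flexibility, combined with practical training methods, has led to their widespread adoption across AI applications and has reshaped machine learning and data interpretation.

The development of neural networks has seen significant theoretical and practical advances. Early models such as the perceptron paved the way for more advanced architectures, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs). CNNs excel at processing grid-like data, particularly images. Their convolutional layers slide small learned filters across the input, and stacking these layers lets the network build a spatial hierarchy from simple local features such as edges to more complex structures. RNNs are designed for sequential data. They carry a hidden state from one time step to the next, which makes them suitable for time series and natural language tasks.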
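Both architectural ideas can be sketched in a few lines of plain Python. This is an illustration only, not how a framework implements them.

- `conv2d` computes a "valid" 2D cross-correlation. Deep learning libraries usually call this operation convolution.
- `rnn_step` is a hypothetical scalar, Elman-style recurrence.
- The edge kernel and the RNN weights are made-up values chosen for the example.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def rnn_step(h_prev, x_t, w_h, w_x, b):
    """One step of a scalar RNN: the new hidden state mixes the previous
    hidden state with the current input, then applies tanh."""
    return math.tanh(w_h * h_prev + w_x * x_t + b)


# A vertical-edge filter responds where pixel values change from left to right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
edge_kernel = [[-1, 1], [-1, 1]]
print(conv2d(image, edge_kernel))  # → [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]

# Process a short sequence, carrying the hidden state forward between steps.
h = 0.0
for x_t in [1.0, 0.5, -0.5]:
    h = rnn_step(h, x_t, w_h=0.5, w_x=1.0, b=0.0)
print(h)
```

In a real CNN the kernel values are learned rather than hand-picked. In a real RNN the hidden state is a vector, and the scalar weights become matrices.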

The emergence of deep learning, characterized by networks with numerous layers, has further enhanced neural network capabilities, allowing them to address increasingly complex problems. Ongoing research into novel architectures and training methods continues to expand the potential applications of neural networks in AI, promising significant impacts across various fields.

Key Takeaways

- Neural networks are a key component of AI, loosely inspired by the human brain’s ability to learn from experience and make decisions.

- Training neural networks involves feeding them large amounts of data to learn patterns and make accurate predictions.

- Optimizing neural networks for AI performance involves fine-tuning parameters and architecture to improve accuracy and efficiency.

- Implementing neural networks in AI systems requires careful integration, testing, and ongoing monitoring to ensure they work reliably in production.

- Overcoming challenges in neural network AI development involves addressing issues such as overfitting, data quality, and computational resources.

Training Neural Networks for AI Applications

Training is a critical phase in developing effective AI systems. It adjusts the network’s parameters to minimize a loss function, which measures the difference between predicted outputs and actual results. The process typically begins with selecting a dataset that represents the problem domain. The dataset is then divided into training, validation, and test sets:

- The model learns from the training set.
- Hyperparameters are tuned against the validation set.
- The held-out test set provides a final estimate of how well the model generalizes to unseen data.
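A minimal sketch of such a split follows. It shuffles once with a fixed seed and then carves off the validation and test portions. The 70/15/15 fractions and the seed are illustrative assumptions, not recommendations.

```python
import random


def train_val_test_split(samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then slice off test and validation sets; the rest is training."""
    if val_frac + test_frac >= 1.0:
        raise ValueError("val_frac + test_frac must be < 1")
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed makes the split reproducible
    n = len(items)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # → 70 15 15
```

Random shuffling assumes the samples are independent. For time series, a chronological split is usually more appropriate so that the model is never evaluated on data older than what it trained on.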

During training, the network learns by iteratively adjusting its weights using backpropagation. This technique applies the chain rule to compute the gradient of the loss function with respect to each weight. An optimizer then uses these gradients to update the weights so that prediction errors shrink over time. The choice of optimization algorithm, such as stochastic gradient descent (SGD) or Adam, plays a crucial role in how quickly and reliably a network converges to a good solution. Because the loss surface of a deep network is generally non-convex, that solution is usually a local minimum rather than a guaranteed global optimum.
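To make the chain-rule step concrete, here is a minimal sketch that trains a single sigmoid neuron with binary cross-entropy loss using per-sample SGD. For this particular loss and activation, the chain rule collapses to a simple error term, `p - y`, at the neuron's pre-activation.

The learning rate, epoch count, and the logical-AND dataset are illustrative assumptions. A multi-layer network applies the same rule layer by layer. An optimizer such as Adam would replace the plain update lines.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Forward pass of a single sigmoid neuron."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def bce_loss(data, w, b, eps=1e-12):
    """Mean binary cross-entropy; p is clipped to avoid log(0)."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(w, b, x), eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(data)


def train_sgd(data, lr=0.5, epochs=2000, seed=0):
    """Per-sample SGD. Chain rule for one sample, with z = w.x + b, p = sigmoid(z):
        dL/dp = -y/p + (1 - y)/(1 - p),  dp/dz = p(1 - p)
        => dL/dz = p - y,  dL/dw_i = (p - y) * x_i,  dL/db = p - y
    """
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit samples in a random order each epoch
        for i in order:
            x, y = data[i]
            dz = predict(w, b, x) - y  # error signal at the pre-activation
            w = [wi - lr * dz * xi for wi, xi in zip(w, x)]
            b -= lr * dz
    return w, b


# Logical AND is linearly separable, so a single neuron can fit it.
AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_sgd(AND)
print([round(predict(w, b, x)) for x, _ in AND])  # → [0, 0, 0, 1]
print(bce_loss(AND, w, b) < bce_loss(AND, [0.0, 0.0], 0.0))  # → True
```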

Moreover, the training process is influenced by several hyperparameters that must be carefully tuned to achieve optimal performance. These include learning rate, batch size, and the number of epochs—each of which can significantly impact the model’s ability to learn from data. Regularization techniques, such as dropout or L2 regularization, are often employed to prevent overfitting, ensuring that the model does not merely memorize the training data but instead learns to generalize from it.
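The two regularization techniques named above can be sketched as follows. The L2 penalty is added to the loss, and its gradient shrinks weights toward zero. "Inverted" dropout, the variant commonly used in practice, randomly zeroes activations during training and rescales the survivors. The values for `lam` and `p_drop` are illustrative assumptions.

```python
import random


def l2_penalty(weights, lam):
    """L2 regularization term added to the loss: lam * sum(w^2)."""
    return lam * sum(w * w for w in weights)


def l2_grad(weights, lam):
    """Gradient of the L2 term, 2 * lam * w, which pulls each weight toward 0."""
    return [2.0 * lam * w for w in weights]


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: in training, zero each activation with probability
    p_drop and scale the survivors by 1 / (1 - p_drop) so the expected value
    is unchanged. At inference time, activations pass through untouched."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)
acts = [1.0] * 10_000
dropped = dropout(acts, p_drop=0.5, rng=rng)
print(sum(dropped) / len(dropped))  # close to 1.0 on average
print(l2_penalty([0.5, -1.0, 2.0], lam=0.01))
```

Scaling at training time, rather than at inference time, means the deployed model needs no special handling for dropout.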

Additionally, data augmentation strategies can enhance the diversity of the training set by applying transformations like rotation or scaling to existing samples. As neural networks become more complex and datasets grow larger, leveraging powerful hardware accelerators like GPUs or TPUs has become essential for efficient training. This combination of advanced techniques and computational resources enables researchers and practitioners to develop robust AI models capable of tackling real-world challenges.
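As a simple sketch of augmentation, the code below treats an image as a list of pixel rows. It randomly applies a horizontal flip and zero to three quarter-turn rotations. These exact, interpolation-free transforms are stand-ins chosen for brevity. Arbitrary-angle rotation and scaling require resampling, which is usually done with an image library and is not shown. The sketch also assumes these transforms do not change the sample's label.

```python
import random


def hflip(img):
    """Mirror an image (list of rows) left to right."""
    return [list(reversed(row)) for row in img]


def rotate90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img, rng):
    """Randomly flip, then apply 0-3 clockwise quarter turns."""
    out = hflip(img) if rng.random() < 0.5 else [list(r) for r in img]
    for _ in range(rng.randrange(4)):
        out = rotate90(out)
    return out


img = [[1, 2],
       [3, 4]]
print(rotate90(img))  # → [[3, 1], [4, 2]]
print(hflip(img))     # → [[2, 1], [4, 3]]
print(augment(img, random.Random(0)))
```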

Optimizing Neural Networks for AI Performance

Optimizing neural networks is an essential step in improving their performance and efficiency in AI applications. This process involves refining the network architecture and training methodology to achieve better accuracy while minimizing computational costs. One common approach is model pruning, which reduces the size of a network by removing weights that contribute little to its overall performance. Pruning can speed up inference and reduce memory usage, making it feasible to deploy models on resource-constrained hardware such as smartphones or IoT devices.

Another optimization technique is quantization, which reduces the numerical precision of weights and activations, for example from 32-bit floating point to 8-bit integers. This can significantly improve speed and efficiency, often with only a small loss in accuracy.
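Both techniques can be sketched on a flat list of weights. Magnitude pruning zeroes the smallest-magnitude weights. Symmetric per-tensor quantization maps each weight to an integer in [-127, 127] using a single scale factor. This is one simple scheme among several. Production toolkits often use per-channel scales, zero points, and calibration data for activations, none of which are modeled here.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ≈ q * scale with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate float weights."""
    return [qi * scale for qi in q]


w = [0.02, -0.9, 0.45, -0.01, 0.3, 0.05]
print(magnitude_prune(w, 0.5))  # → [0.0, -0.9, 0.45, 0.0, 0.3, 0.0]
q, scale = quantize_int8(w)
print(q, scale)
print(dequantize(q, scale))
```

Rounding to the nearest integer bounds each weight's reconstruction error by half the scale. This is why accuracy often degrades only slightly.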

In addition to these techniques, hyperparameter optimization plays a crucial role in achieving strong performance. Automated methods such as grid search or Bayesian optimization systematically explore combinations of hyperparameters to identify the configurations that yield the best validation results.

Transfer learning has also emerged as a powerful optimization strategy. It starts from a model pre-trained on a large dataset and fine-tunes it on a specific task with a smaller dataset, achieving high performance with reduced training time and resource requirements. As AI applications continue to evolve and demand more sophisticated solutions, ongoing research into optimization techniques will be vital for keeping neural networks efficient and effective across a wide range of domains.
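A minimal sketch of the grid search mentioned above follows. It tries every combination in the grid and keeps the best-scoring configuration. `fake_validation_score` is a hypothetical stand-in for "train a model with these hyperparameters and return its validation score". In practice each evaluation is a full training run, which is why Bayesian optimization is attractive for larger search spaces.

```python
import itertools


def grid_search(objective, grid):
    """Evaluate `objective` on every combination in `grid` (name -> candidate
    values) and return the best configuration and its score (higher is better)."""
    names = list(grid)
    best_cfg, best_score = None, float("-inf")
    for values in itertools.product(*(grid[n] for n in names)):
        cfg = dict(zip(names, values))
        score = objective(**cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def fake_validation_score(learning_rate, batch_size):
    """Hypothetical objective that peaks at learning_rate=0.01, batch_size=64."""
    return 1.0 - 10 * abs(learning_rate - 0.01) - abs(batch_size - 64) / 64


grid = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [32, 64, 128]}
print(grid_search(fake_validation_score, grid))
# → ({'learning_rate': 0.01, 'batch_size': 64}, 1.0)
```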

Implementing Neural Networks in AI Systems

| Metric | Value |
|---|---|
| Accuracy | 90% |
| Training time | 3 hours |
| Number of layers | 5 |
| Learning rate | 0.01 |

The implementation of neural networks within AI systems involves integrating these models into applications that can deliver meaningful insights or automate processes. This integration typically begins with selecting an appropriate framework or library that supports neural network development, such as TensorFlow or PyTorch. These frameworks provide robust tools for building, training, and deploying models while offering flexibility for experimentation with different architectures and techniques.

Once a model is trained and validated, it must be deployed in a production environment where it can process real-time data streams or user inputs. This raises additional concerns such as scalability, latency, and reliability, so that the system performs well under varying loads. Implementing neural networks also requires careful monitoring and maintenance after deployment.

Continuous monitoring lets developers track model performance over time and detect issues such as concept drift. Concept drift occurs when the statistical properties of the input data, or its relationship to the target, change over time and degrade performance. Strategies such as retraining models on new data or using ensemble methods can help maintain accuracy and relevance. Ethical considerations must also be taken into account: making decision-making processes transparent and mitigating biases inherent in training data are critical for fostering trust among users.
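One common way to monitor for drift is to compare the distribution of a feature in production against the distribution seen at training time. The sketch below computes the Population Stability Index (PSI) for a single numeric feature. The equal-width binning and the small epsilon are assumptions of this sketch. An often-quoted rule of thumb treats a PSI above about 0.25 as a significant shift, but that threshold is a convention, not a standard.

```python
import math
import random


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature.

    Bins are equal-width over the reference sample's range; values outside
    that range are clamped into the edge bins. eps avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(values) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref_p, cur_p))


rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # training-time feature values
same = [rng.gauss(0.0, 1.0) for _ in range(5000)]       # production, no drift
shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]    # production, mean shifted
print(round(psi(reference, same), 3), round(psi(reference, shifted), 3))
```

A check like this only detects changes in the inputs. Detecting a changed relationship between inputs and targets also requires labeled outcomes from production.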

As organizations increasingly rely on AI systems powered by neural networks, effective implementation strategies will be essential for maximizing their impact while addressing ethical concerns.

Overcoming Challenges in Neural Network AI Development

Despite their remarkable capabilities, neural networks present challenges that researchers and practitioners must navigate when building AI applications. One significant hurdle is the need for large amounts of high-quality labeled training data. In many domains, acquiring such datasets is time-consuming and expensive. Class imbalances within a dataset can also produce biased models that perform poorly on underrepresented classes. To mitigate these issues, techniques such as semi-supervised learning and synthetic data generation are gaining traction. They allow models to learn from limited labeled data while leveraging large amounts of unlabeled data.

Another challenge lies in the interpretability of neural networks. As models grow deeper and gain more parameters, understanding how they arrive at specific decisions becomes increasingly difficult. This lack of transparency poses risks in critical applications such as healthcare or finance, where accountability is paramount. Researchers are actively exploring interpretability methods such as saliency maps and layer-wise relevance propagation, which aim to show which input features most influence a model’s predictions. Addressing these challenges is crucial not only for improving model performance but also for ensuring that AI systems are trustworthy and aligned with ethical standards.
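The idea behind a gradient-based saliency map can be sketched without a deep learning framework: measure how sensitive the output is to each input feature. The code below estimates |∂f/∂xᵢ| with central finite differences. Frameworks compute the same quantity exactly by backpropagating to the input. `model` is a hypothetical fixed sigmoid neuron standing in for a trained network. Layer-wise relevance propagation is a different technique and is not shown.

```python
import math


def model(x):
    """Hypothetical 'trained' model: a fixed sigmoid neuron over three features."""
    w, b = [2.0, -0.1, 0.5], -0.3
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def saliency(f, x, h=1e-5):
    """Estimate |df/dx_i| for each input feature with central differences."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += h
        down[i] -= h
        scores.append(abs(f(up) - f(down)) / (2 * h))
    return scores


x = [0.4, 0.9, -0.2]
scores = saliency(model, x)
most_influential = max(range(len(scores)), key=scores.__getitem__)
print(scores, most_influential)
```

For a single sigmoid neuron the exact gradient is p(1 - p)·wᵢ, so the saliency ranking follows |wᵢ|. In a deep network, the ranking depends on the specific input.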

Leveraging Neural Networks for AI in Various Industries

The versatility of neural networks has led to their adoption across many industries. In healthcare, for instance, neural networks support diagnostics by analyzing medical images, helping with the early detection of conditions such as cancer through image classification. Natural language processing models are also changing patient interactions. For example, chatbots can answer common inquiries, and assistants can help healthcare professionals manage patient records. The ability to process large amounts of unstructured data makes neural networks valuable tools for improving patient outcomes and streamlining operational workflows.

In finance, neural networks are used for risk assessment and fraud detection, analyzing transaction patterns to flag anomalies that may indicate fraudulent activity. Their predictive capabilities also extend to algorithmic trading, where they analyze market trends and historical data to inform when trades are executed.

Similarly, in manufacturing, neural networks enable predictive maintenance by analyzing sensor data from machinery to forecast potential failures before they occur, minimizing downtime and reducing operational costs. As organizations across sectors continue to explore new applications, neural networks retain large potential to improve efficiency and decision-making.

Future Trends in Neural Network AI Technology

Looking ahead, several trends are poised to shape the future landscape of neural network technology within AI systems. One prominent trend is the increasing focus on explainable AI (XAI), which seeks to enhance transparency in machine learning models by providing insights into their decision-making processes. As regulatory frameworks around AI continue to evolve globally, organizations will prioritize developing models that not only deliver high performance but also offer clear explanations for their predictions—ensuring accountability and fostering user trust.

Another significant trend is the rise of federated learning—a decentralized approach where multiple devices collaboratively train a shared model while keeping their data localized. This method addresses privacy concerns associated with centralized data collection while enabling organizations to leverage diverse datasets for improved model performance without compromising sensitive information. Additionally, advancements in neuromorphic computing—an emerging field that mimics the brain’s architecture—hold promise for creating more efficient neural network architectures capable of processing information in ways akin to biological systems.
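The server-side step of Federated Averaging (FedAvg), a widely used federated learning algorithm, can be sketched in a few lines. Each client trains locally and sends only its parameters. The server averages them, weighting each client by how many training examples it used. The clients and sizes below are hypothetical. Local training, communication, and privacy safeguards such as secure aggregation are omitted.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation: per-parameter average across clients,
    weighted by each client's number of local training examples.
    Only parameters reach the server, never the raw data."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]


# Three hypothetical clients after one round of local training.
clients = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sizes = [100, 100, 200]
print(federated_average(clients, sizes))  # → [0.75, 0.75]
```

The averaged parameters are sent back to the clients as the starting point for the next round of local training.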

As these trends unfold, they are likely to influence how neural networks are developed and deployed across industries, paving the way for more intelligent and responsible AI solutions in the years to come.


FAQs

What is a neural network?

A neural network is a computational model inspired by the structure and function of the human brain. It is composed of interconnected nodes, or “neurons,” that work together to process and analyze complex data.

How does a neural network work?

Neural networks work by receiving input data, processing it through multiple layers of interconnected neurons, and producing an output. The network learns from the data it processes and adjusts its connections to improve its performance over time.

What are the applications of neural networks?

Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, financial forecasting, medical diagnosis, and autonomous vehicles.

What are the different types of neural networks?

There are several types of neural networks, including feedforward neural networks, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and more specialized architectures such as long short-term memory (LSTM) networks and generative adversarial networks (GANs).

What are the advantages of using neural networks?

Neural networks are capable of learning complex patterns and relationships in data, making them well-suited for tasks that involve large amounts of unstructured data. They can also adapt and improve their performance over time through training and optimization.

What are the limitations of neural networks?

Neural networks typically require large amounts of training data and can be computationally intensive to train and deploy. They can also be susceptible to overfitting, and their decision-making processes often lack transparency.
