Inspired by the structure and operation of the human brain, neural networks are a fundamental idea in AI and machine learning. They are made up of interconnected nodes, or “neurons,” that work together to process and evaluate large amounts of data. Loosely analogous to human cognition, these networks are designed to recognize patterns, make decisions, and learn from their errors. A neural network consists of an input layer that receives data, one or more hidden layers that perform intermediate computations, and an output layer that produces results.
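To make the layer structure concrete, here is a minimal Python sketch of one forward pass through a network with two inputs, a hidden layer of three neurons, and a single output neuron. The weights, biases, and the choice of the sigmoid activation are arbitrary values assumed for illustration, not a trained model, and the helper names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all
    inputs, adds its bias, and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
HIDDEN_W = [[0.5, -0.4], [0.3, 0.8], [-0.7, 0.2]]
HIDDEN_B = [0.1, -0.2, 0.05]
OUTPUT_W = [[1.2, -0.6, 0.9]]
OUTPUT_B = [-0.3]


def forward(x):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(x, HIDDEN_W, HIDDEN_B)
    return dense(hidden, OUTPUT_W, OUTPUT_B)


print(forward([1.0, 0.5]))
```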

Key Takeaways

- Neural networks are a type of machine learning model inspired by the human brain, capable of learning from data and making predictions.

- The quality and quantity of training data are crucial for the success of neural network models, as they directly impact the model’s ability to generalize and make accurate predictions.

- Optimizing the architecture of a neural network, including the number of layers and neurons, is essential for achieving maximum performance and efficiency.

- Deep learning techniques, such as convolutional neural networks and recurrent neural networks, are powerful tools for solving complex problems in various domains.

- Neural networks have revolutionized image recognition tasks, achieving remarkable accuracy in identifying and classifying objects within images.

- Natural language processing tasks, such as language translation and sentiment analysis, can benefit greatly from the capabilities of neural networks.

- Implementing neural networks comes with challenges such as overfitting, vanishing gradients, and computational complexity, which require careful consideration and mitigation strategies.

Each connection between neurons has a weight that sets the strength of that connection. During training, these weights are adjusted based on the input data and the intended outputs, which is how the network learns and improves its predictions over time. The ability of neural networks to solve complex problems in domains such as image recognition, natural language processing, and predictive analytics has made them increasingly prominent in recent years.
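The following minimal sketch shows how a weight and a bias are adjusted during training. It assumes a single linear neuron, a squared-error loss, and plain gradient descent, all chosen here for illustration; the names `predict` and `sgd_step` are hypothetical.

```python
def predict(w: float, b: float, x: float) -> float:
    """A single linear neuron: output = w * x + b."""
    return w * x + b


def sgd_step(w, b, x, target, lr=0.1):
    """One gradient-descent step on the squared error 0.5 * (pred - target) ** 2.

    The gradient with respect to w is (pred - target) * x and with respect
    to b is (pred - target), so each parameter moves against its gradient.
    """
    error = predict(w, b, x) - target
    return w - lr * error * x, b - lr * error


w, b = 0.0, 0.0
for _ in range(50):
    w, b = sgd_step(w, b, x=2.0, target=4.0)
print(w, b, predict(w, b, 2.0))
```

With these settings the remaining error halves on every step, so after 50 steps the prediction for x = 2.0 is essentially the intended output 4.0.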

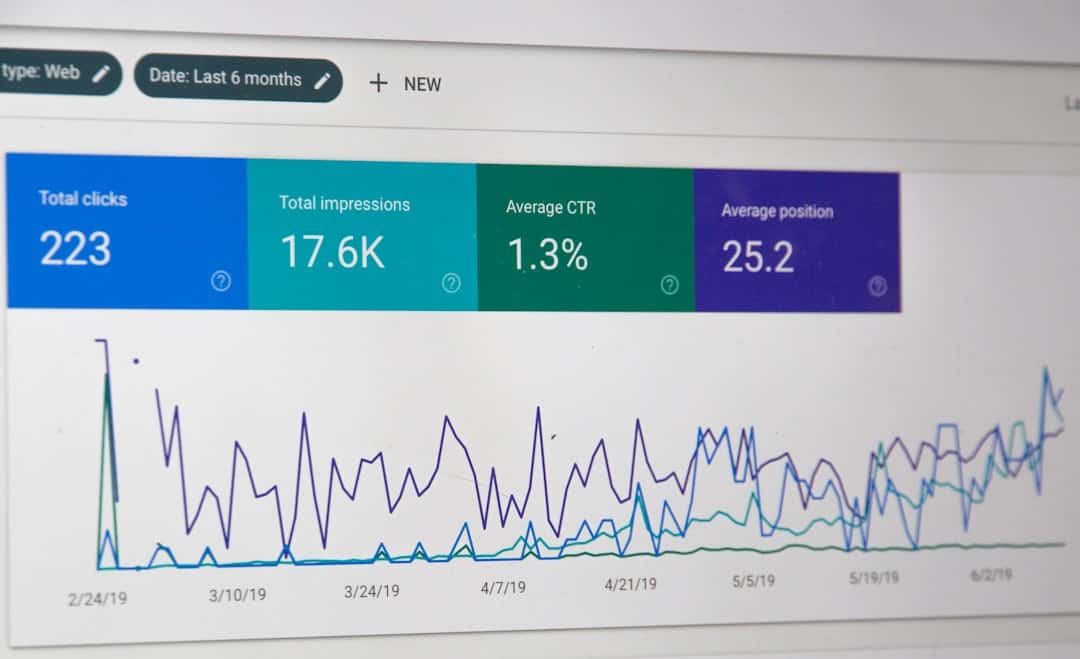

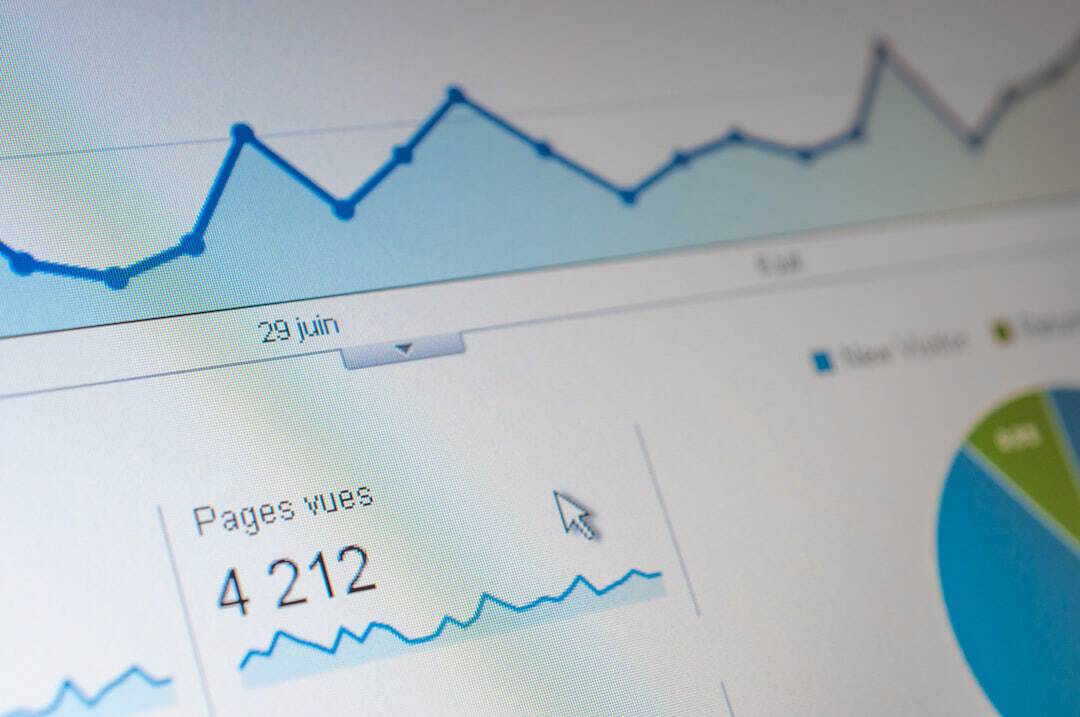

Neural networks are becoming more advanced and capable of handling increasingly complex tasks as technology progresses. Anyone who wants to apply neural networks in real-world scenarios needs to understand the principles behind them.

Training the Network to Identify Patterns

The quality and quantity of training data are key factors in a neural network's performance. Training data is what the network uses to learn to identify patterns and make accurate predictions.

Without an adequate and varied supply of training data, the network may struggle to generalize what it has learned and to perform well on new, unseen data.

The Training Procedure

A neural network is trained by feeding it labeled examples: input data paired with the intended outputs for those inputs. The network then adjusts its internal parameters, including weights and biases, to reduce the discrepancy between the outputs it predicts and the intended outputs.
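The procedure above can be sketched as a small training loop. This is an illustrative setup, not a prescribed method: a single sigmoid neuron learns the logical AND function from four labeled examples by gradient descent on the cross-entropy loss, and training stops as soon as a target accuracy is reached (assumed here to be 100%). The function names and the learning rate are hypothetical choices.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Labeled examples: each input pair comes with its intended output (logical AND).
DATA = [((0.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 0.0), 0), ((1.0, 1.0), 1)]


def accuracy(w, b):
    """Fraction of examples whose thresholded prediction matches the label."""
    hits = sum(
        (sigmoid(w[0] * x0 + w[1] * x1 + b) >= 0.5) == bool(y)
        for (x0, x1), y in DATA
    )
    return hits / len(DATA)


def train(lr=0.5, target_acc=1.0, max_epochs=10_000):
    w, b = [0.0, 0.0], 0.0
    for epoch in range(1, max_epochs + 1):
        for (x0, x1), y in DATA:
            p = sigmoid(w[0] * x0 + w[1] * x1 + b)
            # For a sigmoid output with cross-entropy loss, the gradient with
            # respect to the neuron's pre-activation is simply (p - y).
            grad = p - y
            w[0] -= lr * grad * x0
            w[1] -= lr * grad * x1
            b -= lr * grad
        # Repeat until the predictions are accurate enough (or give up).
        if accuracy(w, b) >= target_acc:
            return w, b, epoch
    return w, b, max_epochs


weights, bias, epochs = train()
print(weights, bias, epochs, accuracy(weights, bias))
```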

| Metric | Value |
|---|---|
| Accuracy | 95% |
| Precision | 90% |
| Recall | 85% |
| F1 Score | 87% |
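The table does not say how its figures were computed. As a hedged sketch, the standard definitions can be written out from the four confusion-matrix counts; the counts below are hypothetical and are not the data behind the table. Because F1 is the harmonic mean of precision and recall, it always lies between the two.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard metrics from true/false positive and negative counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)  # share of predicted positives that are correct
    recall = tp / (tp + fn)     # share of actual positives that were found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to exercise the formulas.
print(classification_metrics(tp=40, fp=10, fn=20, tn=30))
```

For example, precision 0.90 and recall 0.85 give F1 = 2 x 0.90 x 0.85 / 1.75, which is about 0.874.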

This process is repeated iteratively until the network reaches an acceptable level of accuracy.

Obtaining Representative, High-Quality Data

The training data must accurately reflect the real-world scenarios the neural network will encounter. It should cover a wide range of possible inputs and outputs, including edge cases and outliers. Data quality matters just as much: noisy or biased training data can lead to poor performance and incorrect predictions.

A neural network's architecture is a key factor in determining its capabilities and performance.

The architecture, including the number of layers, the types of neurons, and how they are connected, strongly affects how well the network learns from data and generalizes. Hyperparameter tuning is a popular method for optimizing neural network architecture. Hyperparameters are set before training begins rather than learned from the training data; they include the number of layers, the activation functions, the batch size, and the learning rate.
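A minimal sketch of hyperparameter tuning by grid search, assuming a toy one-feature linear model and made-up noiseless data (y = 3x + 1). Only two hyperparameters are searched, the learning rate and the number of epochs, and each combination is scored on a separate validation set rather than on the training data. The helper names and the grid values are hypothetical.

```python
import itertools

# Hypothetical noiseless data from y = 3x + 1, split into training and validation sets.
TRAIN = [(x / 10, 3 * (x / 10) + 1) for x in range(10)]
VAL = [(x / 10 + 0.05, 3 * (x / 10 + 0.05) + 1) for x in range(10)]


def fit(lr, epochs):
    """Train y = w * x + b with per-example gradient descent on squared error."""
    w = b = 0.0
    for _ in range(epochs):
        for x, y in TRAIN:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def val_mse(w, b):
    """Mean squared error on the held-out validation set."""
    return sum(((w * x + b) - y) ** 2 for x, y in VAL) / len(VAL)


def grid_search(lrs, epoch_options):
    """Try every (learning rate, epochs) pair; keep the lowest validation error."""
    best = None
    for lr, epochs in itertools.product(lrs, epoch_options):
        score = val_mse(*fit(lr, epochs))
        if best is None or score < best[0]:
            best = (score, lr, epochs)
    return best


print(grid_search(lrs=[0.001, 0.01, 0.1], epoch_options=[10, 100]))
```

Grid search is the simplest strategy; random search or Bayesian optimization are common alternatives when the grid would be too large to evaluate exhaustively.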

By systematically adjusting hyperparameters and measuring their effect on the network's performance, developers can optimize the architecture for maximum effectiveness. The choice of activation functions is a crucial consideration in this process. Activation functions introduce non-linearities into the network, enabling it to learn intricate patterns and relationships in the data; without them, a stack of layers would collapse into a single linear transformation. Popular activation functions include sigmoid, tanh, ReLU (Rectified Linear Unit), and softmax. Each has advantages and disadvantages, and choosing the right function for each layer can greatly affect the network's performance. Developments in neural network architecture, such as convolutional neural networks (CNNs) for image processing and recurrent neural networks (RNNs) for sequential data, have also transformed deep learning.
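The four activation functions named in the paragraph above are short enough to write out directly. This plain-Python sketch is for illustration only; deep learning frameworks provide their own vectorized versions. Softmax is typically applied at the output layer of a multi-class classifier, and here it subtracts the largest score before exponentiating, a standard trick to avoid overflow.

```python
import math


def sigmoid(x: float) -> float:
    """Squashes input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x: float) -> float:
    """Squashes input into (-1, 1), centered on zero."""
    return math.tanh(x)


def relu(x: float) -> float:
    """Zero for negative inputs, identity for positive ones."""
    return max(0.0, x)


def softmax(scores):
    """Turns a list of scores into probabilities that sum to 1.

    Subtracting the maximum first prevents overflow in exp() and leaves the
    result unchanged, because the common factor cancels in the division.
    """
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x))
print(softmax([2.0, 1.0, 0.1]))
```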

Specialized architectures such as CNNs and RNNs are designed to exploit the inherent structure of particular kinds of data, such as the spatial layout of pixels in an image or the order of words in a sentence, which improves both accuracy and efficiency. By understanding the principles of neural network architecture and applying strategies such as specialized network designs and hyperparameter tuning, developers can get the most out of their networks across a variety of applications.

Deep learning, a subset of machine learning, focuses on training neural networks with multiple layers (hence the term “deep”) to learn from data.

Deep learning techniques have been remarkably successful on complex problems once thought to be beyond the reach of conventional machine learning algorithms. One of the main advantages of deep learning is its capacity to automatically extract relevant features from raw data. Traditional machine learning algorithms often require handcrafted feature engineering, in which domain experts manually design features that are then used as input to the model. Deep learning models, by contrast, can learn hierarchical feature representations directly from raw data, which reduces the need for manual feature engineering and can capture more intricate patterns. Deep learning approaches have proven very successful in domains such as reinforcement learning, speech recognition, computer vision, and natural language processing. For instance, convolutional neural networks (CNNs) have transformed image recognition by automatically learning spatial hierarchies of features from pixel values.

Similarly, recurrent neural networks (RNNs) have proven remarkably successful at processing sequential data such as speech and text. Advances in hardware, such as GPUs and specialized accelerators, have fueled the growth of deep learning by making it practical to train large-scale neural networks. Open-source deep learning frameworks such as TensorFlow, PyTorch, and Keras have also made cutting-edge techniques more accessible, making it simpler for developers and researchers to apply these powerful tools to challenging problems.

By adopting deep learning techniques and understanding where they apply, practitioners can tackle complex problems with accuracy and efficiency that were previously out of reach.

Image recognition is one of the most popular uses of neural networks, with applications in industries such as augmented reality, medical imaging, autonomous vehicles, and surveillance. Neural networks have proven remarkably good at identifying objects in images and assigning them to predefined categories. Because they can automatically learn spatial hierarchies of features from raw pixel values, convolutional neural networks (CNNs) have become a dominant architecture for image recognition. A CNN is built from several kinds of layers, including convolutional layers and pooling layers; the pooling layers reduce computational cost by downsampling the extracted features.

Convolutional layers extract features from the input images, and fully connected layers then classify each image based on these learned features. To train a CNN for image recognition, it is usually fed labeled example images together with their corresponding categories.
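The convolution and pooling steps described above can be sketched in plain Python. This is a simplified, single-channel illustration with a hand-picked kernel and a hypothetical 5x5 image; in a real CNN the kernel values are learned during training and each layer processes many channels at once. As in most deep learning libraries, the "convolution" here is technically cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution: slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # hand-picked edge detector; a CNN would learn its own
features = conv2d(image, vertical_edge)
pooled = max_pool(features)
print(features)
print(pooled)
```

In this example the 4x4 feature map responds only in the column where the pixel values change, and max pooling reduces it to 2x2 while keeping that response.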

Through iterative optimization of its internal parameters, the network learns to associate particular patterns in the images with their respective classes. CNNs have demonstrated their practical value on difficult image recognition benchmarks such as ImageNet, where some models have matched or surpassed reported human-level accuracy. With transfer learning, practitioners can also take CNN models pre-trained on large datasets and fine-tune them for a specific image recognition task using a limited amount of training data. Neural networks thus allow developers to build reliable, accurate systems for analyzing visual data.

Using Neural Networks to Revolutionize NLP

Neural networks have revolutionized natural language processing (NLP), enabling powerful tools for tasks such as sentiment analysis, text generation, speech recognition, and language translation. Recurrent neural networks (RNNs) have proven especially useful for sequential data such as speech and text, because they can capture temporal dependencies across the input sequence.

Overcoming NLP Obstacles

One of the main challenges in NLP is handling the ambiguity and complexity inherent in human language. Neural networks have shown promise here by learning distributed representations of words (word embeddings), which capture semantic relationships between words based on the contexts in which they are used.
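Semantic similarity between word embeddings is commonly measured with cosine similarity. The three-dimensional vectors below are invented for illustration (learned embeddings typically have hundreds of dimensions), so only the comparison, not the numbers, carries over to real models.

```python
import math

# Hypothetical embeddings; a real model learns these vectors from large text corpora.
EMBEDDINGS = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.15],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))
```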

As a result, neural networks can represent the semantics and syntax of language without manually written linguistic rules.

Advances with Transformer Architectures

Transformer-based architectures such as BERT (Bidirectional Encoder Representations from Transformers) push the frontier of NLP further by learning bidirectional context from massive text corpora.
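Self-attention, the mechanism transformer models such as BERT are built on, can be sketched as single-head scaled dot-product attention: each position scores every position, turns the scores into weights with softmax, and takes a weighted average of the value vectors. This is a simplification under stated assumptions: it reuses the input vectors as queries, keys, and values, whereas a real transformer computes them with learned linear projections and uses several heads.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: each output row is a weighted average of
    all value vectors, weighted by softmax(q . k / sqrt(d))."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Hypothetical 3-token sequence with 2-dimensional vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(tokens, tokens, tokens))
```

Because every position attends to every other position in a single step, distance within the sequence does not limit which tokens can influence each other.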

Transformer models of this kind use self-attention mechanisms to capture long-range dependencies within input sequences, which has led to state-of-the-art performance on a variety of NLP benchmarks and tasks. By harnessing architectures such as RNNs and transformers, programmers can build sophisticated systems that understand and generate natural language with high accuracy and fluency.

Although neural networks have shown great promise across a range of applications, their inherent drawbacks and difficulties must be addressed for them to be deployed reliably. A common problem in neural network implementation is overfitting, in which the model performs well on the training data but fails to generalize to new, unseen data.
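Early stopping is one common guard against overfitting: training halts once the loss on held-out validation data stops improving, and the weights from the best epoch are kept. The sketch below replays a hypothetical list of validation losses rather than training a real model; the `patience` parameter (how many non-improving epochs to tolerate) is an assumed setting.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch whose weights should be kept. Training stops
    once validation loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Hypothetical validation losses: improving at first, then rising as the model overfits.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.48]
print(early_stopping_epoch(losses))
```

With `patience=3` the loop stops after epoch 6 and keeps epoch 3; a patience of 5 would also see the late improvement at epoch 7. Choosing patience trades training time against the risk of stopping too early.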

Overfitting happens when the network learns noise or irrelevant patterns from the training set, typically because the model has high capacity relative to the available data or is insufficiently regularized. Dropout regularization and early stopping reduce overfitting by limiting the model's effective complexity, while cross-validation helps detect overfitting and select settings that generalize. Another drawback is that neural networks depend on large amounts of labeled training data for supervised learning tasks. Obtaining high-quality labeled data can be costly and time-consuming, particularly in specialized or niche domains.
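One way to reduce this dependence on labeled data is pseudo-labeling (self-training), a simple semi-supervised strategy: a model trained on the labeled data predicts labels for unlabeled examples, and only its high-confidence predictions are added back as extra training data. In this sketch the classifier `toy_model` is a hypothetical stand-in and the 0.9 confidence threshold is an assumption; a real pipeline would retrain on the enlarged dataset and typically repeat the process.

```python
def pseudo_label(unlabeled, predict_proba, threshold=0.9):
    """Self-training step: keep only unlabeled examples the current model is
    confident about, labeled with its predicted class."""
    selected = []
    for x in unlabeled:
        probs = predict_proba(x)
        best_class = max(range(len(probs)), key=probs.__getitem__)
        if probs[best_class] >= threshold:
            selected.append((x, best_class))
    return selected


def toy_model(x):
    """Hypothetical binary classifier on 1-D inputs: returns [P(class 0), P(class 1)]."""
    p1 = min(max(x, 0.0), 1.0)
    return [1.0 - p1, p1]


print(pseudo_label([0.02, 0.5, 0.97, 0.3], toy_model))
```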

The need for large labeled datasets can be reduced with semi-supervised learning strategies, which let the network learn from a mix of labeled examples and unannotated data. Interpretability and explainability are also important considerations in many real-world neural network applications: understanding how a model makes its predictions is necessary to earn the trust of end users and to ensure regulatory compliance. Methods such as saliency maps, attention mechanisms, and model-agnostic interpretability techniques can shed light on a model's decision-making process and help explain its predictions.
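Gradient-based saliency measures how sensitive a prediction is to each input feature. Frameworks compute these gradients by automatic differentiation; this dependency-free sketch approximates them with central finite differences instead, on a hypothetical three-feature logistic model whose third feature has no influence on the output.

```python
import math


def model(x):
    """Hypothetical trained model: a fixed logistic function of three features."""
    z = 2.0 * x[0] - 0.5 * x[1] + 0.0 * x[2] - 1.0
    return 1.0 / (1.0 + math.exp(-z))


def saliency(f, x, eps=1e-5):
    """Approximate |df/dx_i| for each feature with central differences.
    Larger values mean the prediction is more sensitive to that feature."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        scores.append(abs(f(up) - f(down)) / (2 * eps))
    return scores


print(saliency(model, [0.5, 1.0, 3.0]))
```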

Beyond the technical difficulties, ethical issues of bias and fairness in neural network decision-making are becoming increasingly significant. Biases in training data can propagate through neural networks and produce unfair or discriminatory results. Addressing them requires carefully selecting training data, accounting for algorithmic fairness during model development, and continuously monitoring deployed systems for bias. By understanding these obstacles and limitations and applying techniques to address them, developers can build ethical, dependable AI systems that deliver fair and reliable outcomes across application domains.
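As one example of checking a system for bias, the sketch below computes per-group positive-prediction rates and the largest gap between them, a criterion known as demographic parity. It is only one of several fairness definitions, which can conflict with each other, and the predictions and group labels are hypothetical.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups.
    0.0 means every group receives positive outcomes at the same rate."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions (1 = approved) for applicants in groups "a" and "b".
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups), demographic_parity_gap(preds, groups))
```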

Neural networks have the potential to revolutionize various industries, including business and economics. According to a recent article on metaversum.it, the use of neural networks in analyzing big data and making predictions can provide valuable insights for decision-making in the business world. As the metaverse continues to expand, the integration of neural networks into virtual environments could further enhance the way businesses operate and interact with consumers.

FAQs

What are neural networks?

Neural networks are a type of machine learning algorithm that is inspired by the structure and function of the human brain. They consist of interconnected nodes, or “neurons,” that work together to process and analyze complex data.

How do neural networks work?

Neural networks work by taking in input data, processing it through multiple layers of interconnected neurons, and producing an output. Each neuron computes a weighted sum of its inputs, adds a bias, applies an activation function, and passes the result to the next layer of neurons.

What are the applications of neural networks?

Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, financial forecasting, and medical diagnosis. They are also used in autonomous vehicles, robotics, and many other fields.

What are the different types of neural networks?

There are several types of neural networks, including feedforward neural networks, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and long short-term memory (LSTM) networks, a widely used type of RNN. Each type is suited to particular tasks and data types.

What are the advantages of using neural networks?

Neural networks are capable of learning and adapting to complex patterns in data, making them well-suited for tasks that involve large amounts of unstructured data. They can also handle non-linear relationships and are able to generalize from examples.

What are the limitations of neural networks?

Neural networks require large amounts of data for training and can be computationally intensive. They are also prone to overfitting, where the model performs well on the training data but poorly on new, unseen data. Additionally, neural networks can be difficult to interpret and explain.
