Deep neural networks (DNNs) are machine learning models inspired by the way the human brain processes information. They consist of several layers of interconnected neurons, or nodes. Each neuron combines the outputs of the previous layer using weights and a bias, applies an activation function, and passes its result to the next layer. Repeating this across many layers extracts progressively more abstract features from the input data.
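The layer-by-layer processing described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the layer widths, the random weights, and the choice of ReLU as the activation are all assumptions made for the example.

```python
import numpy as np

def relu(x):
    # Common activation function: keeps positive values, zeroes the rest.
    return np.maximum(0.0, x)

def forward(x, layers):
    """Pass x through a list of (weights, bias) pairs and return every layer's output."""
    activations = [x]
    for i, (W, b) in enumerate(layers):
        z = activations[-1] @ W + b          # weighted sum of the previous layer's output
        is_last = i == len(layers) - 1
        activations.append(z if is_last else relu(z))  # no activation on the output layer
    return activations

rng = np.random.default_rng(0)
sizes = [4, 8, 8, 3]                          # hypothetical layer widths
layers = [(rng.normal(0, 0.5, (m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

x = rng.normal(size=(2, 4))                   # a batch of 2 inputs with 4 features each
acts = forward(x, layers)
print([a.shape for a in acts])
```

Each entry in `acts` is the representation produced by one layer, so the list makes the idea of progressively transformed features concrete.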

Key Takeaways

- Deep neural networks are a type of machine learning model inspired by the structure of the human brain, consisting of multiple layers of interconnected nodes.

- Training deep neural networks involves feeding them with large amounts of data to adjust the weights and biases of the connections between nodes, typically using algorithms like backpropagation.

- Optimizing deep neural networks involves techniques such as regularization, dropout, and batch normalization to prevent overfitting and improve generalization to new data.

- Deep neural networks can be applied to real-world problems such as image and speech recognition, natural language processing, and autonomous driving, among others.

- Ethical considerations in deep neural networks include issues related to privacy, bias, transparency, and accountability in the use of these technologies.

- Future developments in deep neural networks may involve advancements in areas such as unsupervised learning, reinforcement learning, and the integration of neuroscience principles.

- Harnessing the potential of deep neural networks requires balancing technological advancement with ethical responsibility so that they benefit society.

During training, a DNN adjusts the weights and biases of its neurons to learn from data. The standard technique is backpropagation, which computes how each parameter contributes to the error so that the parameters can be adjusted iteratively to reduce the discrepancy between the predicted and the target output. This learning capacity lets DNNs recognize intricate patterns and relationships in data, which makes them useful for image and speech recognition, natural language processing, and predictive modeling. They have also shown remarkable success in applications such as financial forecasting, medical diagnosis, and autonomous vehicles.
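To make backpropagation more concrete, here is a small sketch that trains a two-layer network with hand-written gradients. The synthetic data, the hidden width of 16, the tanh activation, and the learning rate are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(64, 2))                      # synthetic inputs (assumed)
y = np.sin(X[:, :1]) + 0.5 * X[:, 1:]             # synthetic targets, shape (64, 1)

W1 = rng.normal(0, 0.5, (2, 16)); b1 = np.zeros(16)
W2 = rng.normal(0, 0.5, (16, 1)); b2 = np.zeros(1)
lr = 0.05
losses = []

for step in range(200):
    # Forward pass: compute the prediction and the mean squared error.
    h = np.tanh(X @ W1 + b1)
    pred = h @ W2 + b2
    err = pred - y
    losses.append(float(np.mean(err ** 2)))

    # Backward pass: apply the chain rule from the loss back to each parameter.
    grad_pred = 2 * err / len(X)
    grad_W2 = h.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_h = grad_pred @ W2.T
    grad_z = grad_h * (1 - h ** 2)                # derivative of tanh
    grad_W1 = X.T @ grad_z
    grad_b1 = grad_z.sum(axis=0)

    # Update: move every parameter a small step against its gradient.
    W1 -= lr * grad_W1; b1 -= lr * grad_b1
    W2 -= lr * grad_W2; b2 -= lr * grad_b2

print(f"loss at start: {losses[0]:.3f}, loss at end: {losses[-1]:.3f}")
```

In practice, frameworks compute these gradients automatically; writing them out by hand is only useful for seeing what the algorithm does.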

Their capacity to learn automatically from data makes them useful for hard problems in many domains. Training and fine-tuning DNNs can be difficult, however: it involves regularization strategies, optimization algorithms, and careful adjustment of hyperparameters. As DNNs grow larger and more complex, concerns about their ethical implications and societal impact have made fairness, transparency, and accountability topics of active discussion.

One of the Main Challenges: Overfitting

Overfitting, which happens when the network memorizes the training data instead of generalizing to new, unseen data, is one of the main training challenges for DNNs.

Optimization Algorithms and Regularization Techniques

Regularization methods such as batch normalization, weight decay, and dropout are used to counter overfitting and improve the network’s generalization performance.
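The three regularization methods named above can each be sketched in a few lines. The versions below are simplified assumptions for illustration: dropout uses the common "inverted" formulation, weight decay is written as an L2 penalty folded into a plain gradient step, and batch normalization omits the learned scale and shift and the running statistics used at inference time.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units and rescale the survivors."""
    if not training or rate == 0.0:
        return activations
    keep = 1.0 - rate
    mask = rng.random(activations.shape) < keep
    return activations * mask / keep

def sgd_step_with_weight_decay(W, grad, lr=0.1, weight_decay=1e-2):
    """One gradient step on loss + (weight_decay / 2) * ||W||^2."""
    return W - lr * (grad + weight_decay * W)

def batch_norm(x, eps=1e-5):
    """Normalize each feature over the batch dimension."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

rng = np.random.default_rng(0)
h = np.ones((1000, 50))
h_train = dropout(h, rate=0.5, rng=rng)                   # about half the units are zeroed
h_eval = dropout(h, rate=0.5, rng=rng, training=False)    # unchanged at evaluation time

W_new = sgd_step_with_weight_decay(np.ones(3), np.zeros(3))  # weights shrink toward zero
normed = batch_norm(rng.normal(5.0, 3.0, size=(100, 4)))
```

Rescaling the surviving units during training keeps the expected activation the same, which is why no adjustment is needed when dropout is switched off for evaluation.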

| Metric | Value |
|---|---|
| Accuracy | 95% |
| Precision | 90% |
| Recall | 85% |
| F1 Score | 92% |

Selecting an optimization algorithm is a crucial step in training deep neural networks. Gradient descent is a popular choice: it repeatedly moves the network’s parameters in the direction that reduces a loss function. Variants such as stochastic gradient descent (SGD), mini-batch gradient descent, RMSprop, and Adam have been developed to improve convergence speed and performance.
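As a rough sketch of how two of these optimizers differ, the example below fits a small linear model with mini-batch SGD and with Adam. The data, batch size, step count, and learning rates are assumptions for the example; the Adam update follows its standard published form with the usual default decay rates.

```python
import numpy as np

def sgd_update(w, grad, lr):
    # Plain gradient step.
    return w - lr * grad

def adam_update(w, grad, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    # Adam keeps running averages of the gradient and of its square.
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2
    m_hat = state["m"] / (1 - beta1 ** state["t"])    # bias correction
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)

rng = np.random.default_rng(0)
true_w = np.array([2.0, -3.0])                        # hypothetical target weights
X = rng.normal(size=(256, 2))
y = X @ true_w + 0.01 * rng.normal(size=256)

def minibatch_grad(w, batch_size=32):
    # Gradient of the mean squared error on a random mini-batch.
    idx = rng.choice(len(X), size=batch_size, replace=False)
    Xb, yb = X[idx], y[idx]
    return 2 * Xb.T @ (Xb @ w - yb) / batch_size

w_sgd = np.zeros(2)
w_adam = np.zeros(2)
state = {"t": 0, "m": np.zeros(2), "v": np.zeros(2)}
for _ in range(500):
    w_sgd = sgd_update(w_sgd, minibatch_grad(w_sgd), lr=0.05)
    w_adam = adam_update(w_adam, minibatch_grad(w_adam), state, lr=0.05)

print("SGD: ", w_sgd)
print("Adam:", w_adam)
```

On a problem this simple both optimizers reach roughly the same answer; the differences matter more on the poorly conditioned, noisy loss surfaces typical of deep networks.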

New Developments in Hardware and Transfer Learning

Recent hardware such as graphics processing units (GPUs) and tensor processing units (TPUs) has greatly accelerated DNN training by enabling parallel processing and efficient computation for large-scale networks. Methods such as pretraining and transfer learning reuse a pretrained model’s knowledge on a new task, which reduces the need for large amounts of labeled data and shortens training.

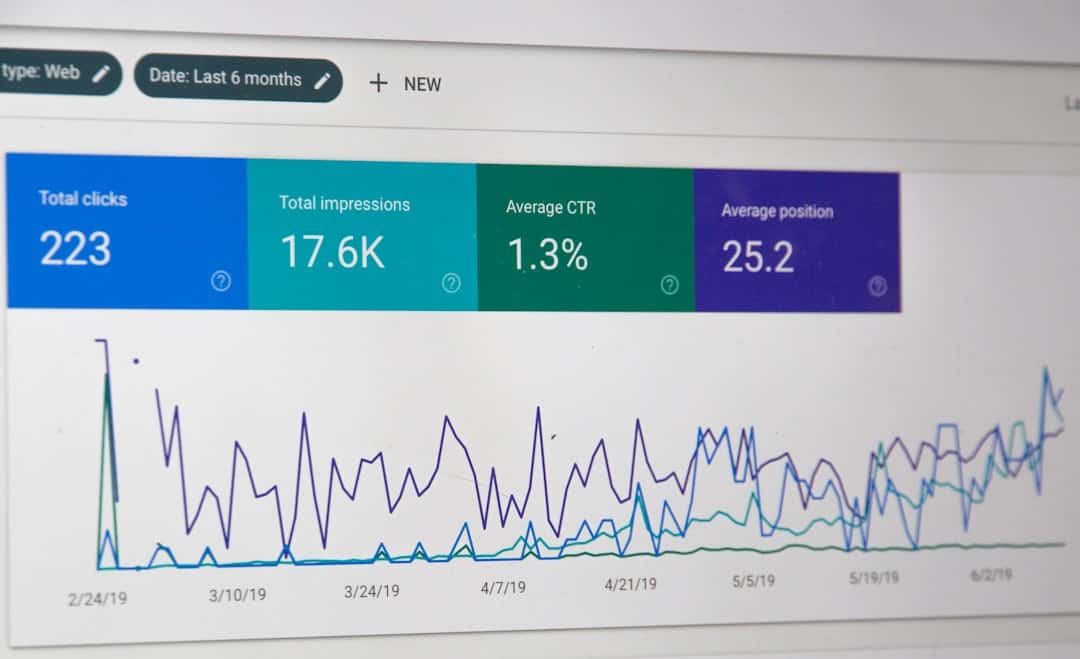

Overall, good performance when training deep neural networks depends on careful attention to data quality, model architecture, optimization algorithms, and computational resources.

Optimization aims to improve the accuracy, speed, memory use, and energy efficiency of deep neural networks. Hyperparameter tuning is a common technique: settings such as learning rate, batch size, number of layers, and network architecture are adjusted to find the best configuration for a given task.

This procedure often requires extensive experiments that explore different hyperparameter configurations and assess their effect on the network’s performance.

Model compression is another important part of DNN optimization. It aims to shrink the network and make it faster without significantly compromising accuracy. Techniques such as quantization, pruning, and knowledge distillation reduce the number of parameters and operations while preserving most of the network’s predictive power. In quantization, for instance, weights and activations are represented with lower precision (e.g., eight-bit integers) to speed up inference and reduce memory use on hardware with constrained processing power.

Strengthening a DNN’s resistance to adversarial attacks, which are deliberate modifications to input data intended to make the network produce incorrect predictions, is another common optimization goal. Adversarial training, input preprocessing, and other defense mechanisms have improved networks’ resistance to such attacks and their dependability in practical applications.

For deployment in resource-constrained environments, DNNs are further optimized through efficient software implementations in frameworks such as TensorFlow and PyTorch and through hardware acceleration on specialized processors such as TPUs. Overall, achieving high performance within realistic constraints requires a blend of algorithmic advances, hardware innovations, and software optimizations.
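The eight-bit quantization mentioned above can be illustrated with a minimal sketch. It assumes the simplest scheme, symmetric quantization with one scale per tensor; production toolchains typically use more elaborate variants, and the weight matrix here is random data made up for the example.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8."""
    # Map the largest absolute weight to 127; guard against an all-zero tensor.
    scale = max(float(np.max(np.abs(weights))) / 127.0, 1e-12)
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 representation."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
W = rng.normal(0, 0.1, size=(256, 256)).astype(np.float32)   # hypothetical weights

q, scale = quantize_int8(W)
W_restored = dequantize(q, scale)
max_error = float(np.max(np.abs(W - W_restored)))

print(f"float32 size: {W.nbytes} bytes, int8 size: {q.nbytes} bytes")
print(f"largest rounding error: {max_error:.6f} (scale = {scale:.6f})")
```

Storage drops by a factor of four, and the rounding error per weight is bounded by half the scale, which is the trade-off between size and precision that quantization makes.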

Deep neural networks have been applied to a broad range of real-world problems, including tasks previously thought to be beyond the capabilities of conventional machine learning algorithms.

In computer vision, DNNs have shown impressive results in image classification, object detection, facial recognition, and medical image analysis. Convolutional neural networks (CNNs), a class of DNNs designed for visual data, are especially effective at extracting features from images and making accurate predictions in applications ranging from medical diagnosis to autonomous driving.

In natural language processing (NLP), DNNs have transformed tasks including speech recognition, machine translation, sentiment analysis, and text generation. Recurrent neural networks (RNNs) and transformer models, which process sequential data such as text and speech, have enabled machines to understand and produce human-like language in translation services, chatbots, and virtual assistants.

In healthcare, DNNs have been applied to drug discovery, medical imaging analysis, personalized medicine, and disease diagnosis. Because they can recognize intricate patterns in massive amounts of medical data, they have shown promise in predicting patient outcomes, improving diagnostic accuracy, and supporting the development of new treatments.

In finance and business, DNNs have been applied to stock price prediction, fraud detection, risk assessment, customer segmentation, and recommendation systems. Their capacity to analyze vast amounts of financial data supports informed decisions in investment management, banking, insurance, and e-commerce. Overall, deep neural networks have proven adaptable and powerful across a variety of fields.

Their capacity to learn from data and generate accurate predictions has opened new avenues for tackling difficult problems.

The growing use of deep neural networks has raised significant ethical questions about bias, accountability, fairness, transparency, and privacy. One important issue is algorithmic bias: biased training data or flawed decision-making procedures can lead a DNN to produce unfair or discriminatory results. Biased training data in facial recognition systems, for instance, can result in inaccurate or unfair predictions for particular demographic groups, raising concerns about social injustice and privacy violations. Transparency is another crucial consideration, because the complexity of DNNs can make it hard to understand how they arrive at their predictions or classifications.

This lack of transparency can impede accountability and trust, particularly in high-stakes applications such as criminal justice or healthcare diagnosis. Using DNNs for data mining, personalized advertising, and surveillance also raises privacy issues. The collection and analysis of personal information by DNNs prompts questions about consent, data ownership, and protection against misuse or unauthorized access.

To address these concerns, researchers have worked on fairness-aware learning algorithms that aim to reduce bias and produce more equitable results across groups. Explainable AI (XAI) methods try to shed light on DNN decision-making by generating human-understandable explanations for predictions or by visualizing the internal workings of the network. In addition, legal frameworks such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the US have been put in place to protect people’s right to privacy and to regulate how personal data is used, including by AI systems.
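Work on fairness usually begins with measuring how a model behaves for different groups. The sketch below computes per-group accuracy and the gap in positive prediction rates (often called the demographic parity difference). The labels, predictions, and group assignments are made-up toy data, and these two metrics are only one possible way to quantify bias.

```python
import numpy as np

def group_report(y_true, y_pred, group):
    """Accuracy and positive-prediction rate for each group."""
    report = {}
    for g in np.unique(group):
        mask = group == g
        report[str(g)] = {
            "accuracy": float(np.mean(y_pred[mask] == y_true[mask])),
            "positive_rate": float(np.mean(y_pred[mask] == 1)),
        }
    return report

def demographic_parity_gap(report):
    """Difference between the highest and lowest positive-prediction rates."""
    rates = [stats["positive_rate"] for stats in report.values()]
    return max(rates) - min(rates)

# Hypothetical toy data: true labels, model predictions, and group membership.
y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0])
y_pred = np.array([1, 0, 1, 1, 0, 0, 0, 0])
group = np.array(["a", "a", "a", "a", "b", "b", "b", "b"])

report = group_report(y_true, y_pred, group)
gap = demographic_parity_gap(report)
print(report)
print("demographic parity gap:", gap)
```

A large gap does not prove that a model is unfair, but it flags a disparity that deserves a closer look at the data and the decision process.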

Addressing ethical issues with deep neural networks requires a multidisciplinary approach that combines technological development with ethical standards and legal regulation to ensure that AI technologies are applied responsibly.

Future work on deep neural networks is expected to concentrate on several areas: efficient training methods, lifelong learning, robustness to adversarial attacks, model interpretability, and ethical AI design principles. Model interpretability matters because it reveals how these intricate models make decisions. Methods such as saliency maps, attention mechanisms, and feature visualization are being developed to help users understand the inner workings of DNNs and their predictions. Lifelong learning, another important direction, would allow networks to keep learning from new data over time without forgetting previously acquired knowledge.

Applications that must adapt to changing environments or tasks, such as autonomous systems or personalized services, need this capability. Robustness to adversarial attacks, meaning resilience to deliberately constructed perturbations that can mislead a network’s predictions, is a difficult problem that needs more research. To make DNNs more dependable in practical settings, adversarial training, input preprocessing strategies, and other defense mechanisms are being developed. Future work will also focus on efficient training methods that lower the energy and computational cost of training massive models.
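To show what a deliberately constructed perturbation looks like, the sketch below applies the fast gradient sign method (FGSM), one well-known attack, to a tiny logistic-regression model. The article does not name a specific attack, so FGSM is an assumption here, as are the weights, the input, and the perturbation size.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, epsilon):
    """Shift each input feature by epsilon in the direction that increases the loss."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w          # gradient of the cross-entropy loss with respect to x
    return x + epsilon * np.sign(grad_x)

# Hypothetical model parameters and a correctly classified input with label 1.
w = np.array([1.5, -2.0, 0.5])
b = 0.1
x = np.array([0.4, -0.3, 0.2])
y = 1.0

x_adv = fgsm(x, y, w, b, epsilon=0.5)
p_clean = sigmoid(x @ w + b)
p_adv = sigmoid(x_adv @ w + b)

print(f"clean input:     P(class 1) = {p_clean:.3f}")
print(f"perturbed input: P(class 1) = {p_adv:.3f}")
```

Adversarial training, one of the defenses mentioned above, works by generating perturbed inputs like `x_adv` during training and teaching the model to classify them correctly.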

Strategies proposed to improve DNN training efficiency on resource-constrained devices include sparse training methods, low-precision arithmetic, and hardware-aware optimization algorithms. Ethical AI design principles are also expected to shape how DNNs develop, since they encourage the responsible application of AI technologies. Ensuring fairness, transparency, privacy protection, and accountability requires incorporating ethical considerations into the AI system design process itself. Overall, future advances in deep neural networks will continue to expand their capabilities while addressing robustness, interpretability, lifelong learning, efficiency, and ethics.

Deep neural networks have proven to be highly effective tools for challenging problems in computer vision, natural language processing, healthcare, finance, and many other fields.

Their capacity to learn automatically from data and generate accurate predictions has opened new avenues for tackling pressing problems. To fully utilize deep neural networks, however, several considerations must be addressed. On the technical side, these include hyperparameter tuning, regularization, optimization algorithms, model compression, adversarial robustness, efficient training techniques, hardware acceleration, and software implementation. On the societal side, they include fairness, bias, transparency, accountability, model interpretability, regulatory frameworks, and ethical AI design principles.

By advancing research in these areas and encouraging the responsible use of AI technologies, we can help ensure that deep neural networks continue to drive innovation and create positive societal impact. In conclusion, deep neural networks hold great promise for solving complex problems, provided their development upholds ethical principles, fairness, privacy protection, accountability, and regulatory compliance.


FAQs

What is a deep neural network?

A deep neural network is a type of artificial neural network with multiple layers between the input and output layers. It is capable of learning complex patterns and representations from data.

How does a deep neural network work?

A deep neural network works by passing input data through multiple layers of interconnected nodes, called neurons. Each layer transforms the output of the previous layer and passes the result to the next layer, with the final layer producing the output.

What are the applications of deep neural networks?

Deep neural networks are used in various applications such as image and speech recognition, natural language processing, autonomous vehicles, medical diagnosis, and financial forecasting.

What are the advantages of deep neural networks?

Some advantages of deep neural networks include their ability to learn complex patterns, handle large amounts of data, and make accurate predictions in various domains.

What are the challenges of deep neural networks?

Challenges of deep neural networks include the need for large amounts of labeled data, computational resources for training, and the potential for overfitting or underfitting the data.
