Convolutional Neural Networks (CNNs) are a specialized type of artificial neural network designed for processing and analyzing visual data. These networks are structured to automatically learn and extract hierarchical features from input images, making them highly effective for various computer vision tasks. The architecture of a CNN typically consists of several key components:

1. Convolutional layers: These layers apply filters to the input data, extracting features such as edges, textures, and shapes.

2. Pooling layers: These layers downsample the feature maps, reducing the spatial dimensions and computational complexity of the network.

3. Fully connected layers: These layers process the extracted features to make predictions or classifications based on the input data.

CNNs have demonstrated remarkable performance in numerous image recognition tasks, including object detection, facial recognition, and image classification.
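To make the three components concrete, here is a minimal pure-Python sketch of one forward pass: a convolution, a ReLU activation (not listed above, but standard between layers), 2×2 max pooling, and a fully connected layer. It assumes a single-channel image, stride 1, and no padding. The edge-detecting kernel and the dense-layer weights are hand-picked, hypothetical values rather than learned ones. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped), which is what "convolution" usually means in CNNs.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation on a single channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; leftover rows/columns are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dense(features, weights, biases):
    """Fully connected layer: one output score per row of weights."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]


# 6x6 image: dark left half, bright right half (a vertical edge).
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
# Hand-picked vertical-edge kernel (hypothetical, not learned).
kernel = [[-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0],
          [-1.0, 0.0, 1.0]]

fmap = relu(conv2d(image, kernel))       # 4x4 feature map
pooled = max_pool(fmap)                  # 2x2 after pooling
flat = [v for row in pooled for v in row]
# Hypothetical weights for two output classes ("edge", "no edge").
scores = dense(flat, [[0.25] * 4, [-0.25] * 4], [0.0, 0.0])
print(scores)
```

Each stage shrinks the spatial size (6×6 to 4×4 to 2×2), and the fully connected layer turns the pooled features into one score per class; here the "edge" score is the larger one.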

Their ability to automatically learn complex visual patterns and structures has made them invaluable in fields such as autonomous driving, medical imaging, and surveillance systems. The success of CNNs in computer vision has led to significant advancements in artificial intelligence and machine learning, opening up new possibilities for intelligent systems that can interpret and interact with the visual world in ways loosely inspired by human visual perception.

Key Takeaways

- CNNs are a type of deep learning algorithm commonly used for image recognition and classification tasks in AI.

- Training CNNs involves adjusting the weights and biases of the network to minimize the difference between predicted and actual outputs.

- Transfer learning allows for efficient CNN training by leveraging pre-trained models and fine-tuning them for specific tasks.

- Data augmentation techniques such as rotation, flipping, and scaling can improve CNN performance by increasing the diversity of training data.

- Experimenting with different CNN architectures and hyperparameters can significantly impact the network’s performance and accuracy.

Training and Fine-Tuning CNNs for Image Recognition

Forward and Backward Propagation

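In forward propagation, the input data is passed through the network layer by layer to produce predictions. In backward propagation (backpropagation), a loss function measures how far those predictions are from the actual labels, the chain rule is used to compute the gradient of that loss with respect to every weight and bias, and an optimizer such as gradient descent then adjusts each parameter in the direction that reduces the loss.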
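The forward/backward cycle can be shown without a framework on the smallest possible "network": a single linear neuron `w * x + b` trained with mean squared error. This is a minimal sketch under toy assumptions: the data (generated from y = 2x + 1), the learning rate, and the step count are arbitrary, and the gradients are derived by hand rather than by the automatic differentiation that CNN frameworks use.

```python
def forward(w, b, xs):
    """Forward pass: predictions of a single linear neuron."""
    return [w * x + b for x in xs]


def mse(preds, ys):
    """Mean squared error between predictions and labels."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def backward(preds, xs, ys):
    """Backward pass: hand-derived gradients of the MSE w.r.t. w and b."""
    n = len(ys)
    dw = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
    db = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
    return dw, db


xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]   # toy labels
w, b, lr = 0.0, 0.0, 0.05          # initial parameters and learning rate
losses = []

for _ in range(1000):
    preds = forward(w, b, xs)      # 1. forward propagation
    losses.append(mse(preds, ys))  # 2. compare with the labels
    dw, db = backward(preds, xs, ys)  # 3. backward propagation
    w -= lr * dw                   # 4. gradient-descent update
    b -= lr * db

print(round(w, 3), round(b, 3))
```

After training, `w` and `b` should be very close to the values 2 and 1 that generated the data, and the recorded loss shrinks toward zero.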

Fine-Tuning a Pre-Trained CNN

Fine-tuning a CNN involves adjusting its pre-trained parameters to adapt it to a new dataset or task. This is particularly useful when working with limited data or when transferring knowledge from one domain to another. Fine-tuning can help improve the performance of a CNN on specific tasks without having to train it from scratch.
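A minimal sketch of fine-tuning, under toy assumptions: a hypothetical one-parameter "backbone" `v` (standing in for pre-trained convolutional weights, with a made-up pre-trained value of 1.0) feeds a newly added head (`w`, `b`). Every parameter is updated, but the backbone uses a smaller learning rate than the head. That split is a common practice intended to avoid overwriting what the pre-trained layers already learned; it is an assumption of this sketch, not something the text above prescribes.

```python
# Hypothetical "pre-trained" backbone weight and a freshly initialized head.
v = 1.0          # backbone: feature h = v * x
w, b = 0.0, 0.0  # new head: prediction = w * h + b

# Toy data for the new task: y = 3x + 0.5.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [3.0 * x + 0.5 for x in xs]

lr_backbone, lr_head = 0.005, 0.05  # smaller steps for pre-trained weights
n = len(xs)
losses = []

for _ in range(2000):
    hs = [v * x for x in xs]                     # forward: backbone
    preds = [w * h + b for h in hs]              # forward: head
    errs = [p - y for p, y in zip(preds, ys)]
    losses.append(sum(e * e for e in errs) / n)  # mean squared error
    # Backward: chain rule through the head and into the backbone.
    dw = sum(2 * e * h for e, h in zip(errs, hs)) / n
    db = sum(2 * e for e in errs) / n
    dv = sum(2 * e * w * x for e, x in zip(errs, xs)) / n
    # Fine-tuning: all parameters move, the backbone more cautiously.
    w -= lr_head * dw
    b -= lr_head * db
    v -= lr_backbone * dv

print(f"v={v:.3f} w={w:.3f} b={b:.3f} final loss={losses[-1]:.2e}")
```

Starting from the pre-trained `v` rather than a random value is what distinguishes this from training from scratch; the backbone still shifts a little to fit the new task.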

Hyperparameter Tuning and Performance Monitoring

Training and fine-tuning CNNs require careful consideration of hyperparameters such as learning rate, batch size, and regularization techniques. Additionally, it is essential to monitor the network’s performance using validation data and adjust the training process accordingly to prevent overfitting or underfitting.
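One common way to act on validation monitoring is early stopping: halt training once the validation loss has not improved for a set number of epochs (the "patience") and keep the weights from the best epoch. A minimal sketch, assuming the per-epoch validation losses are already available; the loss values are made up, and `patience` and `min_delta` are parameters of this hypothetical helper, not a specific library's API.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once `patience` consecutive epochs fail to beat the best
    loss by more than `min_delta`; otherwise it runs to the last epoch.
    """
    best, best_epoch = float("inf"), -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Made-up validation curve: improves, then starts to overfit.
val_losses = [0.90, 0.70, 0.60, 0.58, 0.61, 0.63, 0.66, 0.70]
print(early_stopping_epoch(val_losses))
```

Here the best epoch is 3, and training stops at epoch 6 after three epochs without improvement; a rising validation loss while training loss keeps falling is the classic sign of overfitting.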

Leveraging Transfer Learning for Efficient CNN Training

Transfer learning is a machine learning technique that involves transferring knowledge from one domain to another. In the context of CNNs, transfer learning can be used to leverage pre-trained models on large datasets and adapt them to new tasks or domains with limited data. By using pre-trained models as a starting point, transfer learning can significantly reduce the amount of data and computational resources required to train a CNN from scratch.

This is particularly useful in scenarios where labeled data is scarce or when time and resources are limited. Transfer learning can be implemented by freezing the parameters of the pre-trained layers and only updating the parameters of the new layers added to the network. This allows the network to retain the knowledge learned from the original dataset while adapting to the specifics of the new task.
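Freezing can be sketched with a toy model: a hypothetical "pre-trained" backbone `relu(v * x + c)` whose parameters are frozen, plus a new linear head `w * h + b`, which is the only part trained. The parameter values, the data (y = 2x + 1), and the learning rate are illustrative assumptions. Because the frozen backbone never changes, its features can be computed once and reused, which is one reason this form of transfer learning is cheap.

```python
def relu(z):
    return max(0.0, z)


# Hypothetical pre-trained backbone parameters plus a new, untrained head.
params = {"v": 1.0, "c": 0.0, "w": 0.0, "b": 0.0}
frozen = {"v", "c"}  # backbone parameters that must not change


def backbone(x, p):
    return relu(p["v"] * x + p["c"])


def head(h, p):
    return p["w"] * h + p["b"]


xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]  # toy data for the new task
lr, n = 0.05, len(xs)

# The backbone is frozen, so its features are computed once and cached.
features = [backbone(x, params) for x in xs]

for _ in range(1000):
    preds = [head(h, params) for h in features]
    errs = [pred - y for pred, y in zip(preds, ys)]
    # Gradients are only needed for the head (w, b).
    grads = {
        "w": sum(2 * e * h for e, h in zip(errs, features)) / n,
        "b": sum(2 * e for e in errs) / n,
    }
    for name, g in grads.items():
        if name not in frozen:  # guard: never update frozen parameters
            params[name] -= lr * g

print(params)
```

After training, `v` and `c` are exactly as they started, while the head has learned `w ≈ 2` and `b ≈ 1`.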

In summary, transfer learning is a powerful technique for efficient CNN training, especially in scenarios with limited data or computational resources. By leveraging pre-trained models, transfer learning can accelerate the development of highly accurate and effective image recognition systems.

Utilizing Data Augmentation to Enhance CNN Performance

| Dataset | Accuracy without augmentation | Accuracy with augmentation |
|---|---|---|
| CIFAR-10 | 75% | 82% |
| MNIST | 98% | 99% |
| ImageNet | 65% | 72% |

Data augmentation is a technique used to artificially increase the size of a training dataset by applying various transformations to the existing data. In the context of CNNs, data augmentation can help improve the network’s generalization and robustness by exposing it to a wider range of variations in the input data. Common data augmentation techniques include random rotations, flips, zooms, and shifts applied to the input images.

These transformations help expose the network to different perspectives and variations of the input data, making it more resilient to noise and variations in real-world scenarios. By augmenting the training data, CNNs can learn more robust and generalizable representations of the input data, leading to improved performance on unseen data. Data augmentation is particularly useful when working with limited training data or when dealing with imbalanced datasets.
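The transformations described above can be sketched on small 2D lists of pixel values. This is a minimal illustration, assuming square grayscale images and restricting rotation to multiples of 90° (arbitrary angles require interpolation, which real augmentation libraries handle); `random_augment` and its probabilities are hypothetical choices.

```python
import random


def hflip(image):
    """Mirror the image left-to-right."""
    return [row[::-1] for row in image]


def rot90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def shift(image, dx, dy, fill=0):
    """Translate by (dx, dy) pixels; uncovered pixels are set to `fill`."""
    h, w = len(image), len(image[0])
    return [
        [image[i - dy][j - dx] if 0 <= i - dy < h and 0 <= j - dx < w else fill
         for j in range(w)]
        for i in range(h)
    ]


def random_augment(image, rng):
    """Random flip, random multiple-of-90 rotation, and a shift of up to 1 pixel."""
    if rng.random() < 0.5:
        image = hflip(image)
    for _ in range(rng.randrange(4)):
        image = rot90(image)
    return shift(image, rng.randint(-1, 1), rng.randint(-1, 1))


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
rng = random.Random(0)  # seeded so the augmentations are reproducible
augmented = [random_augment(img, rng) for _ in range(4)]  # four variants of one image
for variant in augmented:
    print(variant)
```

Which transforms are safe depends on the labels: flips and rotations suit many natural images, but a 180° rotation can turn a handwritten 6 into a 9, so label-changing transforms should be left out for tasks like digit recognition.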

In conclusion, data augmentation is a valuable technique for enhancing CNN performance by increasing the diversity and robustness of the training data. By exposing the network to a wider range of variations, data augmentation can help improve its generalization and performance on real-world tasks.

Exploring Different Architectures and Hyperparameters in CNNs

CNNs can be designed with various architectures and hyperparameters to suit different tasks and datasets. The choice of architecture, including the number of layers, filter sizes, and connectivity patterns, can significantly impact the network’s performance and computational efficiency. Architectural variations such as deep vs. shallow networks, residual connections, and inception modules offer different trade-offs in terms of representational capacity, training speed, and memory requirements. Additionally, hyperparameters such as learning rate, batch size, and regularization techniques play a crucial role in determining the network’s convergence and generalization capabilities.

Exploring different architectures and hyperparameters in CNNs often involves conducting extensive experiments to identify the optimal configuration for a specific task or dataset. This process may include grid search or random search over a range of hyperparameter values and architectural variations to find the best combination for the given problem.

In summary, exploring different architectures and hyperparameters in CNNs is essential for optimizing their performance on specific tasks and datasets. By carefully selecting the architecture and tuning the hyperparameters, it is possible to develop highly efficient and accurate image recognition systems.
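Random search can be sketched in a few lines. In a real project, each trial would train and validate a CNN; here `validation_score` is a hypothetical stand-in with an invented formula, so the search has something cheap to optimize. The search space (learning rate sampled log-uniformly between 1e-4 and 1e-1, a few batch sizes and depths) is also an illustrative assumption.

```python
import math
import random

BATCH_SIZES = [16, 32, 64, 128]
DEPTHS = [2, 4, 8]  # number of convolutional layers (hypothetical choices)


def sample_config(rng):
    """Draw one configuration; the learning rate is sampled log-uniformly."""
    return {
        "learning_rate": 10 ** rng.uniform(-4, -1),
        "batch_size": rng.choice(BATCH_SIZES),
        "num_conv_layers": rng.choice(DEPTHS),
    }


def validation_score(config):
    """Hypothetical stand-in for 'train a CNN with this config, return validation accuracy'.

    The formula is invented purely so the search has something to optimize.
    """
    lr_penalty = (math.log10(config["learning_rate"]) + 2) ** 2  # best near 1e-2
    batch_penalty = abs(math.log2(config["batch_size"]) - 5)     # best at 32
    depth_bonus = math.log2(config["num_conv_layers"])
    return 0.9 - 0.05 * lr_penalty - 0.01 * batch_penalty + 0.02 * depth_bonus


def random_search(n_trials, seed=0):
    """Evaluate n_trials random configurations and return the best one found."""
    rng = random.Random(seed)
    history = []
    for _ in range(n_trials):
        config = sample_config(rng)
        history.append((config, validation_score(config)))
    best_config, best_score = max(history, key=lambda item: item[1])
    return best_config, best_score, history


best_config, best_score, history = random_search(n_trials=20)
print(best_config, round(best_score, 3))
```

Grid search differs only in how configurations are generated: instead of sampling, it evaluates every combination of fixed value lists (for example with `itertools.product`).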

Deploying CNNs for Real-World Applications

Applications in Autonomous Vehicles and Medical Imaging

In autonomous vehicles, CNNs are used to detect pedestrians, vehicles, and road signs from camera feeds, enabling advanced driver assistance systems and autonomous navigation. In medical imaging, CNNs are employed to help diagnose diseases from scans such as X-rays, MRIs, and CT scans, and can achieve high accuracy on narrowly defined detection and classification tasks.

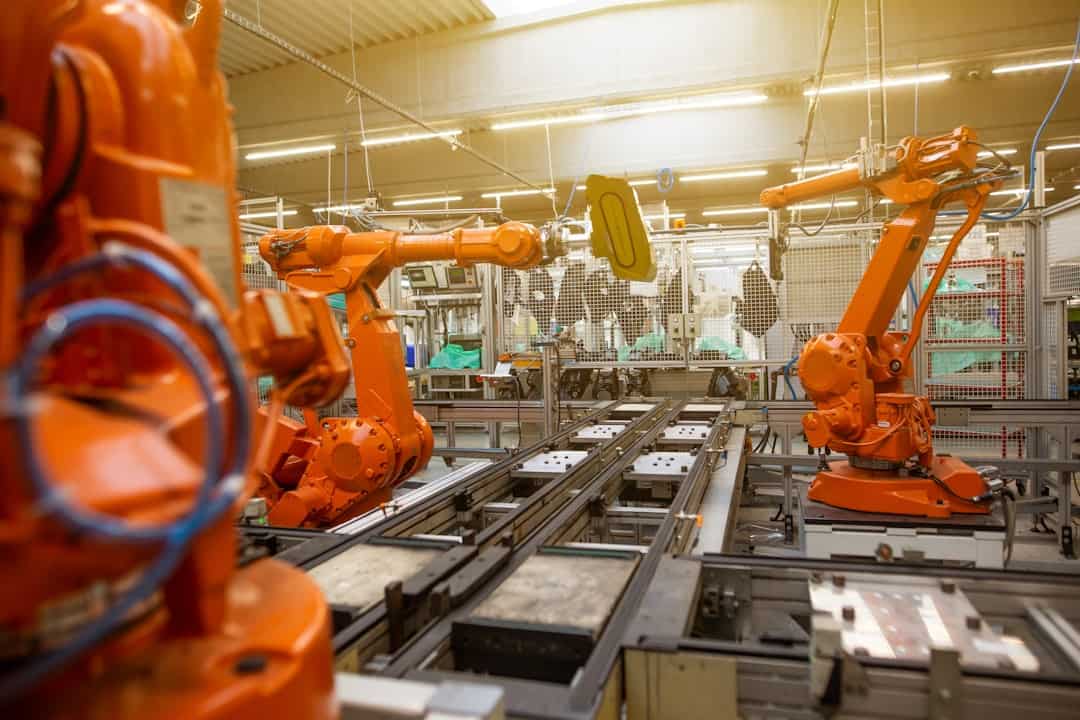

Applications in Security Systems and Industrial Automation

Security systems utilize CNNs for facial recognition, object tracking, and activity monitoring to enhance surveillance capabilities. Industrial automation benefits from CNNs for quality control, defect detection, and object localization in manufacturing processes.

Challenges and Considerations for Deployment

The deployment of CNNs in real-world applications requires careful consideration of factors such as computational efficiency, real-time performance, and robustness to environmental variations. Additionally, ethical considerations such as privacy concerns and bias mitigation must be addressed when deploying CNNs in sensitive domains.

Overcoming Challenges and Limitations in CNN Implementation

While CNNs offer remarkable capabilities for image recognition, they also come with challenges and limitations that need to be addressed for effective implementation. One common challenge is the need for large amounts of labeled training data to achieve high performance. Acquiring labeled data can be time-consuming and expensive, especially for specialized domains or rare classes.

Another challenge is the computational complexity of training and deploying CNNs, especially for large-scale models with millions of parameters. This requires significant computational resources such as GPUs or TPUs for efficient training and inference. Additionally, optimizing CNNs for real-time performance in resource-constrained environments remains a challenge.
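A quick back-of-the-envelope calculation shows where this cost comes from. The helpers below count parameters and multiply-accumulate operations (MACs) for standard layers; the layer sizes in the example (a 3×3 convolution from 64 to 128 channels with a 56×56 output, and a 4096-unit dense layer) are illustrative choices, not taken from a particular model.

```python
def conv2d_params(k, c_in, c_out, bias=True):
    """Learnable values in a k x k convolution mapping c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def dense_params(n_in, n_out, bias=True):
    """Learnable values in a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


def conv2d_macs(out_h, out_w, k, c_in, c_out):
    """Multiply-accumulate operations for one image (bias additions ignored)."""
    return out_h * out_w * k * k * c_in * c_out


print(conv2d_params(3, 64, 128))        # 73856 parameters
print(dense_params(4096, 4096))         # 16781312 parameters
print(conv2d_macs(56, 56, 3, 64, 128))  # 231211008 MACs per image
```

Convolutions reuse a small set of weights at every spatial position, so they tend to be cheap in parameters but expensive in computation, while dense layers are the reverse; both matter when targeting resource-constrained hardware.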

Furthermore, CNNs are susceptible to biases present in the training data, which can lead to unfair or discriminatory outcomes in real-world applications. Addressing bias in CNNs requires careful curation of training data and algorithmic fairness considerations to ensure equitable outcomes across different demographic groups. To overcome these challenges and limitations, ongoing research efforts focus on developing techniques for efficient training with limited data, optimizing computational performance for resource-constrained environments, and mitigating biases in CNNs through fairness-aware algorithms.
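A practical first step toward these fairness considerations is disaggregated evaluation: measuring accuracy separately for each group instead of only in aggregate, since a reasonable overall number can hide a large gap. The sketch below assumes group labels are available for an evaluation set; the labels, predictions, and group names are made up. It only detects a disparity; it does not mitigate one.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(acc_by_group):
    """Difference between the best- and worst-served groups."""
    return max(acc_by_group.values()) - min(acc_by_group.values())


# Made-up evaluation results for two hypothetical groups, "A" and "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

acc = per_group_accuracy(y_true, y_pred, groups)
print(acc, accuracy_gap(acc))
```

The overall accuracy here is 5/8 (62.5%), which hides the fact that group A is classified perfectly while group B gets only one of four right.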

In conclusion, while CNNs offer powerful capabilities for image recognition, their implementation comes with challenges related to data availability, computational complexity, and algorithmic biases. Addressing these challenges requires ongoing research efforts to develop efficient training techniques, optimize computational performance, and ensure fairness in real-world applications of CNNs.


FAQs

What are Convolutional Neural Networks (CNNs)?

Convolutional Neural Networks (CNNs) are a type of deep learning algorithm that is commonly used for analyzing visual imagery. They are designed to automatically and adaptively learn spatial hierarchies of features from input data.

How do Convolutional Neural Networks work?

CNNs work by using a mathematical operation called convolution to extract features from input data. These features are then passed through a series of layers, including pooling layers and fully connected layers, to ultimately produce a classification or prediction.
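For reference, the operation that CNN libraries typically implement as "convolution" is, strictly speaking, cross-correlation: the kernel slides over the input without being flipped. For an input $I$ and a $k_h \times k_w$ kernel $K$ (bias omitted), each output value is a weighted sum over one window, and the output height follows from the input height $H_{\text{in}}$, kernel height $k$, padding $P$, and stride $S$ (the width works the same way):

$$
(I \star K)(i, j) = \sum_{m=0}^{k_h - 1} \sum_{n=0}^{k_w - 1} I(i + m,\ j + n)\, K(m, n)
$$

$$
H_{\text{out}} = \left\lfloor \frac{H_{\text{in}} + 2P - k}{S} \right\rfloor + 1
$$

For example, a 32×32 input with a 3×3 kernel, padding 1, and stride 1 keeps its size: (32 + 2 - 3)/1 + 1 = 32.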

What are the applications of Convolutional Neural Networks?

CNNs are widely used in image and video recognition, object detection, facial recognition, medical image analysis, and autonomous vehicles. They are also used in natural language processing tasks such as sentiment analysis and language translation.

What are the advantages of using Convolutional Neural Networks?

CNNs are able to automatically learn features from raw data, reducing the need for manual feature engineering. They are also highly effective at capturing spatial hierarchies of features, making them well-suited for tasks involving visual data.

What are some popular Convolutional Neural Network architectures?

Some popular CNN architectures include LeNet, AlexNet, VGG, GoogLeNet, and ResNet. These architectures differ in their depth, their building blocks (for example, inception modules in GoogLeNet and residual connections in ResNet), and their accuracy and computational cost on different tasks.
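ResNet's defining building block is the residual (skip) connection, in which a block's input is added to its output: y = relu(F(x) + x). The sketch below replaces the block's convolutions with hypothetical matrix-vector products and omits batch normalization to keep it short. It shows that when F's weights are zero, a residual block passes its (non-negative) input through unchanged, while a plain block outputs zeros; this ease of representing the identity is commonly cited as one reason very deep residual networks remain trainable.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def matvec(weights, v):
    """Hypothetical stand-in for a convolution: a plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def plain_block(x, w1, w2):
    """Two layers with no skip connection: relu(W2 relu(W1 x))."""
    return relu(matvec(w2, relu(matvec(w1, x))))


def residual_block(x, w1, w2):
    """Same layers plus a skip connection: relu(F(x) + x)."""
    fx = matvec(w2, relu(matvec(w1, x)))
    return relu([f + xi for f, xi in zip(fx, x)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]
print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 3.0]
```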

What are some challenges of using Convolutional Neural Networks?

Challenges of using CNNs include the need for large amounts of labeled training data, the potential for overfitting, and the computational resources required for training and inference. Additionally, CNNs may struggle with tasks involving fine-grained or small-scale visual recognition.
