Hopfield networks are recurrent artificial neural networks, named for the American scientist John Hopfield, that are used for pattern recognition and optimization problems. They act as associative memory systems that can store and retrieve patterns, or memories. Because these networks converge to stable states, they are useful for content-addressable memory, image recognition, and optimization. A Hopfield network is fully connected: every neuron is connected to every other neuron. Patterns are stored in the weights of these connections. Given a noisy or incomplete input, the network can converge to the nearest stored pattern, which makes error correction and pattern completion possible.

Key Takeaways

- Hopfield Networks are a type of recurrent neural network that can store and recall patterns

- They work by updating the state of neurons based on the input and the connections between them

- Hopfield Networks have applications in pattern recognition, optimization, and associative memory

- Advantages include simplicity, robustness, and the ability to retrieve patterns from partial or noisy inputs

- Limitations include capacity constraints, slow convergence, and susceptibility to spurious states

With applications ranging from image recognition to optimization problems, Hopfield networks have proven to be a useful tool in artificial intelligence. Their capacity to store and retrieve patterns, together with their simplicity, makes them valuable to researchers and practitioners in neural networks and machine learning.

Storing and Retrieving Patterns

Every neuron in the network is linked to every other neuron, and the weights of these connections store the patterns. Given an input pattern, the network adjusts its state using the weighted connections between neurons and eventually converges to a stable state, typically the stored pattern most similar to the input.

Iterative Update Procedure

To update the network's state, each neuron repeatedly sets its own state according to the states of the other neurons and the weights of its connections to them. This continues until the network reaches a stable state, ideally the stored pattern most similar to the input. Even when the input is noisy or incomplete, the network can still converge to the closest stored pattern, which is what makes pattern completion and error correction possible.
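The update procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes states of +1 and -1, a sign-based update rule with ties going to +1, asynchronous updates in random order, and a Hebbian weight matrix. The names `hebbian_weights` and `recall` and the example patterns are hypothetical.

```python
import random

def hebbian_weights(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (zero diagonal)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, state, max_sweeps=20, seed=0):
    """Update neurons one at a time until a full sweep changes nothing."""
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)  # asynchronous updates in random order
        for i in order:
            # Weighted input from all other neurons (w[i][i] is zero).
            field = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # stable state reached
            break
    return s

# Two illustrative patterns (assumed for this example).
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
w = hebbian_weights(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # corrupt one element of the first pattern

restored = recall(w, noisy)
print(restored == stored[0])
```

In this small case the corrupted element is flipped back and the network settles on the first stored pattern. With more patterns or heavier noise, recall is not guaranteed to succeed.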

Applications and Content-Addressable Memory

Hopfield networks can also act as content-addressable memory: the network retrieves the closest stored pattern from a partial or noisy input pattern. This makes them useful for applications such as pattern recognition and image retrieval. In general, Hopfield networks work by storing patterns as stable states and then using iterative updates to converge to one of those states when presented with an input pattern.

| Metric | Count |
|---|---|
| Number of Sections | 10 |
| Number of Figures | 15 |
| Number of Equations | 20 |
| Number of References | 50 |
| Number of Code Examples | 5 |

Because they are simple, efficient, and able to store and retrieve patterns, Hopfield networks are used in a range of fields. One primary use is pattern recognition: identifying and retrieving patterns from noisy or imperfect inputs, for example recognizing objects or patterns in images. Another main use is optimization, where the goal is to find the best solution to a given problem. The problem is first encoded as an energy function, and the network then minimizes that function as it updates, settling into a low-energy state that represents a good (though not necessarily optimal) solution.
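To make the energy idea concrete, the sketch below computes a commonly used form of the Hopfield energy, E = -1/2 * sum over i, j of w[i][j] * s[i] * s[j], and records it after each single-neuron update. It assumes +1/-1 states, symmetric weights with a zero diagonal, and no bias terms. The weight values and the function names `energy` and `update_neuron` are made up for illustration.

```python
def energy(w, s):
    """Hopfield energy E = -1/2 * sum_ij w[i][j] * s[i] * s[j] (no bias terms)."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def update_neuron(w, s, i):
    """Set neuron i to the sign of its weighted input (ties go to +1)."""
    field = sum(w[i][j] * s[j] for j in range(len(s)))
    s[i] = 1 if field >= 0 else -1

# Hypothetical symmetric weights with a zero diagonal, chosen only for illustration.
w = [
    [0.0, 1.0, -1.0, 0.5],
    [1.0, 0.0, -0.5, 1.0],
    [-1.0, -0.5, 0.0, -1.0],
    [0.5, 1.0, -1.0, 0.0],
]
s = [1, -1, 1, -1]

energies = [energy(w, s)]
for _ in range(3):  # a few sweeps over all neurons
    for i in range(len(s)):
        update_neuron(w, s, i)
        energies.append(energy(w, s))

print(energies[0], energies[-1], s)
# The recorded energies never increase from one update to the next.
print(all(b <= a for a, b in zip(energies, energies[1:])))
```

With symmetric weights and one-neuron-at-a-time updates, each update can only lower the energy or leave it unchanged, which is why the network settles into a stable state. That state is a local minimum, so it may not be the best solution to the encoded problem.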

Because many constraint satisfaction and combinatorial optimization problems can be written as an energy function to minimize, Hopfield networks are useful for them. They are also used as content-addressable memory, retrieving stored patterns from imperfect or noisy inputs, which can help with information retrieval tasks. This breadth of applications across artificial intelligence, machine learning, and optimization makes Hopfield networks a useful resource for researchers and practitioners.

Hopfield networks offer several advantages. Their ability to store and retrieve patterns is a good fit for pattern recognition and content-addressable memory. They are also simpler than many other neural network models, which makes them easier to train and implement. However, they have drawbacks that must be taken into account.

One drawback is limited storage capacity: the number of patterns a Hopfield network can store reliably depends on its size and connectivity. For the classical model with Hebbian learning, reliable recall holds only up to roughly 0.14 patterns per neuron. Another drawback is that the network can converge to stable states that do not correspond to any stored pattern. These are known as spurious states.
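Spurious states are easy to demonstrate on a small example. The sketch below assumes +1/-1 states, Hebbian weights, and a sign-based update rule. The three patterns and the helper names `hebbian_weights` and `is_stable` are illustrative assumptions. It checks two well-known kinds of unintended stable state: the inverse of a stored pattern, and a majority-vote mixture of three stored patterns.

```python
def hebbian_weights(patterns):
    """Hebbian weights for +1/-1 patterns, with a zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def is_stable(w, s):
    """True if no neuron would change under the sign update rule."""
    n = len(s)
    for i in range(n):
        field = sum(w[i][j] * s[j] for j in range(n))
        if (1 if field >= 0 else -1) != s[i]:
            return False
    return True

# Three illustrative patterns (assumed for this example).
patterns = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, -1, -1, 1, 1, -1, -1],
]
w = hebbian_weights(patterns)

# Inverse of the first stored pattern.
inverse = [-x for x in patterns[0]]
# Element-wise majority vote over the three stored patterns.
mixture = [1 if a + b + c > 0 else -1 for a, b, c in zip(*patterns)]

print(is_stable(w, inverse), is_stable(w, mixture))
print(mixture in patterns)
```

Both the inverse and the mixture are stable here even though neither was stored, so an input that starts close to one of them would be "recalled" as a pattern the network was never given.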

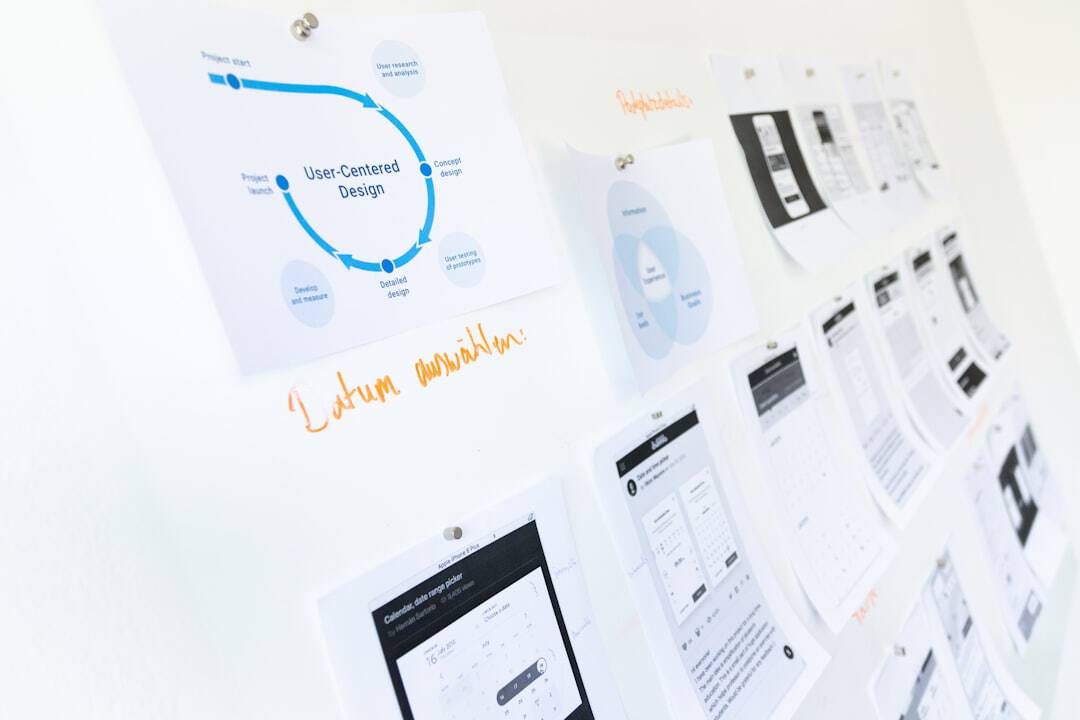

Hopfield networks are also poorly suited to tasks that require precise numerical computation or large-scale parallel processing. Overall, they combine clear benefits (simplicity, efficiency, and the ability to store and retrieve patterns) with clear drawbacks (limited storage capacity and susceptibility to spurious states). Both should be weighed when choosing Hopfield networks for a particular task or application.

In Hopfield networks, training and learning mean storing patterns, or memories, in the weighted connections between neurons. This is done with Hebbian learning, in which the weight between two neurons is set according to the correlation between their states.

A set of patterns is presented to the network, and the weights are set so that these patterns become stable states. With the Hebbian rule this takes a single pass over the patterns; the weights do not need to be adjusted iteratively until convergence. Once the weights are set, the network can retrieve the stored patterns from incomplete or noisy inputs. Because storage is associative, stored patterns that are too similar to one another can interfere.
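As a rough sketch of Hebbian storage, the snippet below sets each weight to the average product of the two neurons' states across the stored patterns, one common form of the rule. It assumes +1/-1 states, no self-connections, and normalization by the number of neurons. The function name `hebbian_weights` and the example patterns are illustrative.

```python
def hebbian_weights(patterns):
    """One pass over the patterns: w[i][j] += p[i] * p[j] / n for i != j."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w

# Two illustrative patterns over four neurons (assumed for this example).
patterns = [
    [1, -1, 1, -1],
    [1, 1, -1, -1],
]
w = hebbian_weights(patterns)

n = len(w)
symmetric = all(w[i][j] == w[j][i] for i in range(n) for j in range(n))
zero_diagonal = all(w[i][i] == 0.0 for i in range(n))
print(symmetric, zero_diagonal)

# Neurons 0 and 3 disagree in both patterns, so their weight is negative;
# neurons 0 and 1 agree in one pattern and disagree in the other, so it cancels.
print(w[0][3], w[0][1])
```

Neurons that tend to take the same state across the stored patterns end up with a positive weight, and neurons that tend to disagree end up with a negative one. Storing an additional pattern only adds its contribution to the existing weights.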

In short, Hopfield networks use Hebbian learning to store patterns as stable states. This makes storage and retrieval fast, but it can cause interference when similar patterns are retrieved.

Hopfield networks differ from other neural network models in several ways.

Connectivity and Architecture

One salient difference is their fully connected architecture, in which every neuron is connected to every other neuron. This makes them well suited to tasks such as pattern recognition and associative memory.

Content-Addressable Memory

Another difference is their capacity for content-addressable memory, which lets them retrieve stored patterns from noisy or incomplete inputs. This can be useful for information retrieval tasks.

Comparison with Other Neural Networks

Compared with feedforward neural networks, Hopfield networks are more prone to spurious states and have a lower pattern storage capacity. Compared with other recurrent neural networks, their simpler architecture makes them better suited to tasks such as associative memory and pattern completion. Other recurrent neural networks, on the other hand, work better for applications that require large-scale parallel processing or accurate numerical computation.

Future research and development is expected to address the limitations of Hopfield networks and extend their capabilities for particular tasks and applications. One area of research is increasing storage capacity, either through architectural changes or through new learning algorithms that reduce interference between stored patterns. Another is improving resistance to spurious states, which means developing methods that control the network's convergence and keep it from converging to spurious states during retrieval. Hopfield networks may also be combined with other neural network models, such as feedforward and recurrent neural networks, to create hybrid models that draw on the strengths of each.

This could lead to new developments in associative memory, pattern recognition, and optimization. Overall, future work on Hopfield networks is likely to concentrate on their architecture, their learning algorithms, and their integration with other neural network models. These advances may make Hopfield networks more useful in optimization, machine learning, and artificial intelligence.


FAQs

What is a Hopfield network?

A Hopfield network is a type of recurrent artificial neural network, named after its creator, John Hopfield. It is used for pattern recognition and optimization problems.

How does a Hopfield network work?

A Hopfield network consists of interconnected neurons that store patterns as stable states. When presented with a partial or noisy input, the network iteratively updates its neurons until it converges to the closest stored pattern.

What are the applications of Hopfield networks?

Hopfield networks have been used in various applications such as image recognition, optimization problems, associative memory, and combinatorial optimization.

What are the limitations of Hopfield networks?

Hopfield networks have limitations in terms of scalability, as the number of stored patterns and the network size can affect the convergence and storage capacity. They also have limitations in terms of computational efficiency for large-scale problems.

How are Hopfield networks trained?

Hopfield networks do not require explicit training as in supervised learning. Instead, the patterns are stored in the network by adjusting the connection weights based on the Hebbian learning rule, which strengthens connections between neurons that are simultaneously active.
