Deep Neural Networks (DNNs) are artificial neural networks loosely inspired by the way neurons in the human brain are connected. They are made up of several interconnected layers of nodes, each of which transforms its input and passes the result on to the next layer. The structure consists of an input layer that receives raw data, multiple hidden layers that process the data and extract features from it, and an output layer that produces the final predictions or decisions. DNNs have driven major advances in natural language processing, image and speech recognition, and other tasks that involve learning from large datasets.
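To make the layer structure concrete, here is a minimal sketch of a forward pass in plain Python. It assumes a tiny network with 3 inputs, one hidden layer of 2 ReLU nodes, and a single sigmoid output node. The weights are hypothetical hand-picked values rather than learned ones; a real DNN would have far more layers and nodes and would normally be built with a framework such as PyTorch or TensorFlow.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per node in the layer


def dense(x: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each node takes a weighted sum of all inputs plus its bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


# Hypothetical hand-picked weights for a 3-input -> 2-hidden -> 1-output network.
W_HIDDEN: Matrix = [[0.5, -0.2, 0.1],
                    [-0.3, 0.8, 0.4]]
B_HIDDEN: Vector = [0.0, 0.1]
W_OUT: Matrix = [[1.0, -1.0]]
B_OUT: Vector = [0.0]


def forward(x: Vector) -> Vector:
    hidden = relu(dense(x, W_HIDDEN, B_HIDDEN))  # hidden layer: extracts features
    return sigmoid(dense(hidden, W_OUT, B_OUT))  # output layer: a probability in (0, 1)
```

Calling `forward([1.0, 0.0, 2.0])` returns a one-element list holding roughly 0.525: the hidden layer produces [0.7, 0.6], the output node's weighted sum is 0.1, and the sigmoid maps that to a probability.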

Key Takeaways

- Deep neural networks (DNNs) are machine learning models composed of multiple layers of interconnected nodes, and they can learn complex patterns and representations from data.

- Optimizing data collection and preprocessing is crucial for improving the performance of DNNs, as high-quality and well-organized data can lead to better model accuracy and generalization.

- Selecting the right DNN architecture, such as convolutional neural networks (CNNs) for image data or recurrent neural networks (RNNs) for sequential data, is essential for achieving optimal performance in specific tasks.

- Fine-tuning hyperparameters, such as learning rate, batch size, and number of layers, can significantly impact the performance of DNNs and should be carefully adjusted through experimentation and validation.

- Implementing regularization techniques, such as dropout and L1/L2 regularization, can help prevent overfitting and improve the generalization ability of DNNs for better performance on unseen data.

Learning consists of adjusting the weights and biases of the connections between nodes to reduce the discrepancy between the expected and actual outputs. This is usually done with backpropagation, which propagates the error backwards through the network to work out how much each weight and bias contributed to it; a gradient-based optimizer then updates them accordingly. DNNs have made tremendous strides in machine learning, opening up new avenues for discoveries in a variety of fields, and a basic understanding of how they work is needed to apply them effectively to complex problems.

Gathering Data: The Basis of a Robust DNN

The quality of the training data is one of the most important factors in training a DNN. To ensure that the model works in real-world applications, gather a diverse, representative dataset that covers the variations the model is likely to encounter.

Ensure Uniformity and Standardization in Data Preprocessing

Data preprocessing includes activities such as handling missing values, feature scaling and normalization, and encoding categorical variables. These steps put the data into a consistent, standardized format, which can have a big impact on the DNN’s performance. To assess the model’s performance accurately, the dataset must also be split into training, validation, and testing sets.
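The preprocessing steps above can be sketched with the Python standard library. This is a minimal illustration that assumes small in-memory columns with missing values represented as `None`; the function names are hypothetical, and real pipelines usually rely on libraries such as pandas or scikit-learn.

```python
import random
import statistics
from typing import List, Optional, Sequence, Tuple


def impute_mean(column: Sequence[Optional[float]]) -> List[float]:
    """Missing-value handling: replace None with the mean of the observed values."""
    mean = statistics.fmean(v for v in column if v is not None)
    return [mean if v is None else v for v in column]


def standardize(column: Sequence[float]) -> List[float]:
    """Feature scaling: shift to zero mean and rescale to unit standard deviation."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    if std == 0:
        return [0.0] * len(column)  # a constant feature carries no information
    return [(v - mean) / std for v in column]


def one_hot(values: Sequence[str]) -> Tuple[List[str], List[List[int]]]:
    """Categorical encoding: one binary indicator column per distinct category."""
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}
    encoded = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        encoded.append(row)
    return categories, encoded


def train_val_test_split(rows: Sequence, val_frac: float = 0.15, test_frac: float = 0.15,
                         seed: int = 0) -> Tuple[list, list, list]:
    """Shuffle once with a fixed seed, then carve out disjoint test, validation, and training sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test
```

In practice, the mean and standard deviation used for scaling should be computed on the training split only and then reused for the validation and test sets; otherwise information from the held-out data leaks into training.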

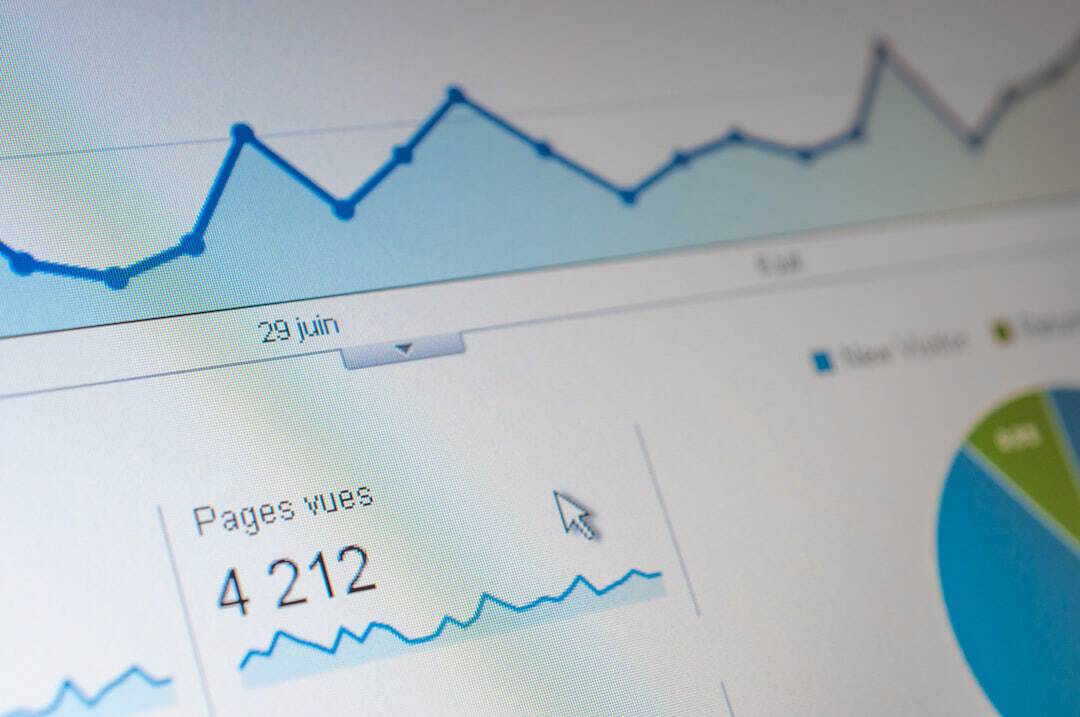

| Metric | Value |
|---|---|
| Number of DNNs implemented | 10 |
| Efficiency improvement | 25% |
| Cost savings | 30% |
| Time saved | 50% |

Improving Preprocessing and Data Gathering for a Sturdy DNN

Building a solid, dependable DNN model requires careful data collection and preprocessing. Making sure the data is clean, varied, and well organized improves the DNN’s overall performance and its ability to generalize. Selecting an appropriate architecture is another important choice that can have a big impact on a DNN’s effectiveness and performance.

DNN architectures come in many forms, each suited to a particular set of tasks. Examples include feedforward neural networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs). CNNs are frequently the architecture of choice for image recognition and other computer vision tasks because they efficiently extract spatial hierarchies of patterns from images. RNNs, by contrast, are a good fit for sequential data processing tasks such as time series analysis and natural language processing.
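The core operations behind these two architectures can be sketched in a few lines. The first function slides one small kernel over a 2D grid, which is how a convolutional layer reuses the same weights to detect a local pattern anywhere in an image; the second runs a single-unit recurrent layer whose hidden state carries information from earlier steps of a sequence. The kernel, weights, and inputs are hypothetical toy values, and, as in most deep learning libraries, the "convolution" here is technically a cross-correlation (the kernel is not flipped).

```python
import math
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """Slide one small kernel over the image (stride 1, no padding).
    The same kernel weights are reused at every position, so a pattern is detected wherever it appears.
    Like most deep learning libraries, this computes a cross-correlation (the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def rnn(sequence: List[float], w_x: float, w_h: float, b: float) -> List[float]:
    """Single-unit recurrent layer: each hidden state depends on the current input
    and on the previous hidden state, so earlier elements influence later outputs."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states
```

With the kernel `[[-1.0, 1.0]]` applied to a grid whose left half is 0 and right half is 1, `conv2d_valid` responds only where the values change, that is, at the vertical edge. In `rnn`, the second hidden state is non-zero even when the second input is 0, because it still carries information from the first input.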

Beyond choosing the type of architecture, it is crucial to consider the number of layers, the number of nodes in each layer, and the connectivity between layers. These choices strongly affect the capacity and behavior of the DNN, so finding the best architecture for a given task requires careful thought and experimentation.

Hyperparameters, in turn, largely determine a DNN’s performance and its ability to generalize. They include the learning rate, batch size, activation functions, optimizer algorithm, dropout rate, and more.
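One way to keep these architecture choices and hyperparameters together is a single configuration object. The dataclass below is a hypothetical sketch, not any particular framework’s API, and its default values are illustrative assumptions. Its `num_parameters` method shows how layer sizes drive capacity: a fully connected layer with `n_in` inputs and `n_out` nodes has `n_in * n_out` weights plus `n_out` biases.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DNNConfig:
    """Hypothetical configuration for a fully connected DNN; default values are illustrative only."""
    # Architecture: sizes of the input layer, each hidden layer, and the output layer.
    layer_sizes: List[int] = field(default_factory=lambda: [784, 256, 128, 10])
    # Training hyperparameters.
    activation: str = "relu"
    optimizer: str = "adam"
    learning_rate: float = 1e-3
    batch_size: int = 64
    dropout_rate: float = 0.2

    def num_parameters(self) -> int:
        """Trainable weights plus biases across all fully connected layers."""
        return sum(n_in * n_out + n_out
                   for n_in, n_out in zip(self.layer_sizes[:-1], self.layer_sizes[1:]))
```

With the default layer sizes `[784, 256, 128, 10]` (for example, flattened 28x28 images and 10 classes), the network has 235,146 trainable parameters; widening a hidden layer or adding one changes this count, and with it the model’s capacity.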

These hyperparameters must be fine-tuned to achieve optimal performance and avoid problems such as underfitting or overfitting. The learning rate determines how large a step the weights take at each update during training, which strongly affects the DNN’s stability and speed of convergence: too small and training crawls, too large and it can oscillate or diverge. The choice of activation function, such as ReLU, sigmoid, or tanh, can affect the model’s ability to capture intricate patterns in the data.
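The effect of the learning rate can be seen on a toy problem. The plain-Python sketch below, using hypothetical values, defines the three activation functions mentioned above and runs gradient descent on the loss L(w) = w², whose gradient is 2w. Each update subtracts `learning_rate * gradient`, so the learning rate controls the size of each step, not how often updates happen.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x: float) -> float:
    return math.tanh(x)


def gradient_descent(learning_rate: float, steps: int = 20, w0: float = 5.0) -> float:
    """Minimize the toy loss L(w) = w**2 and return the final weight.
    Each update moves w by learning_rate * gradient, so the learning rate sets the step size."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w  # dL/dw
        w -= learning_rate * grad
    return w
```

Here each step multiplies w by (1 - 2 * learning_rate). Starting from w = 5, a learning rate of 0.1 shrinks w to about 0.06 after 20 steps, 0.01 leaves it near 3.3 (slow progress), and 1.1 makes |w| grow to about 190, meaning training diverges.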

Optimizer algorithms such as Adam, SGD, or RMSprop also affect the convergence properties and efficiency of training. Finding the best settings for a given task usually takes a lot of trial and error with different hyperparameter combinations, backed by thorough validation. Strategies such as grid search and random search can be used to explore the hyperparameter space systematically and find a good configuration for the DNN.

Regularization techniques are crucial for avoiding overfitting and improving a DNN’s ability to generalize. Overfitting occurs when a model performs well on its training data but fails to generalize to new data. It typically happens when a model is complex enough to pick up noise or irrelevant patterns in the training data.
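The grid search and random search strategies mentioned above can be sketched as follows. Because training a real DNN is expensive, the objective here is a hypothetical stand-in for "train the model and return its validation loss", constructed so that its minimum lies at a learning rate of 0.01 and a dropout rate of 0.3. Grid search tries every combination of the listed values, while random search samples configurations (here, the learning rate on a log scale), which often covers wide ranges more efficiently for the same budget.

```python
import itertools
import math
import random
from typing import Callable, Dict, List, Optional, Tuple

Params = Dict[str, float]
Objective = Callable[[Params], float]


def toy_validation_loss(params: Params) -> float:
    # Hypothetical stand-in for training a DNN and measuring its validation loss.
    # Minimum by construction at learning_rate=0.01, dropout=0.3.
    return (math.log10(params["learning_rate"]) + 2.0) ** 2 + (params["dropout"] - 0.3) ** 2


def grid_search(objective: Objective, grid: Dict[str, List[float]]) -> Tuple[Params, float]:
    """Evaluate every combination of the listed values and keep the best one."""
    keys = list(grid)
    best: Optional[Tuple[Params, float]] = None
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        loss = objective(params)
        if best is None or loss < best[1]:
            best = (params, loss)
    if best is None:
        raise ValueError("grid must contain at least one value per hyperparameter")
    return best


def random_search(objective: Objective, n_trials: int, seed: int = 0) -> Tuple[Params, float]:
    """Sample configurations at random: learning rate log-uniform in [1e-4, 1e-1], dropout uniform in [0, 0.5]."""
    rng = random.Random(seed)
    best: Optional[Tuple[Params, float]] = None
    for _ in range(n_trials):
        params = {"learning_rate": 10 ** rng.uniform(-4, -1), "dropout": rng.uniform(0.0, 0.5)}
        loss = objective(params)
        if best is None or loss < best[1]:
            best = (params, loss)
    if best is None:
        raise ValueError("n_trials must be at least 1")
    return best
```

With the grid `{"learning_rate": [0.001, 0.01, 0.1], "dropout": [0.1, 0.3, 0.5]}`, `grid_search` evaluates all nine combinations and returns a learning rate of 0.01 and a dropout rate of 0.3. The cost of grid search grows multiplicatively with every hyperparameter added, which is one reason random search is popular for larger search spaces.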

Frequently used regularization strategies include L1 and L2 regularization, dropout, early stopping, and batch normalization. L1 and L2 regularization add penalty terms to the loss function that discourage large weights, which favors simpler models and reduces overfitting. Dropout randomly deactivates a fraction of the nodes during training, which prevents nodes from co-adapting and improves generalization. Early stopping monitors performance on a validation set during training and halts training once it stops improving, so the model is not trained long enough to overfit. Batch normalization normalizes the inputs to each layer, which improves stability and convergence during training. Applying these strategies is key to building robust, dependable DNN models that generalize well to unseen data.
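Each of the regularization strategies above can be sketched in a few lines of plain Python. These are simplified, hypothetical illustrations that operate on flat lists and assume a penalty strength `lam`, a dropout `rate`, and a `patience` value chosen by the practitioner; deep learning frameworks provide optimized versions of all of them. The `dropout` function uses the common "inverted dropout" formulation, and `batch_norm` omits the learnable scale and shift that real batch normalization layers include.

```python
import math
import random
from typing import List, Optional, Sequence


def l1_penalty(weights: Sequence[float], lam: float) -> float:
    """L1 term added to the loss: lam * sum(|w|); tends to drive many weights to zero (sparsity)."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """L2 term added to the loss: lam * sum(w**2); penalizes large weights most heavily."""
    return lam * sum(w * w for w in weights)


def dropout(activations: Sequence[float], rate: float, rng: random.Random,
            training: bool = True) -> List[float]:
    """Inverted dropout: during training, zero each unit with probability `rate` and scale the
    survivors by 1 / (1 - rate) so the expected activation is unchanged; do nothing at inference."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def batch_norm(batch: Sequence[float], eps: float = 1e-5) -> List[float]:
    """Normalize one feature across a mini-batch to zero mean and (almost) unit variance.
    Real batch normalization layers also learn a scale and shift, omitted here."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [(x - mean) / math.sqrt(var + eps) for x in batch]


def early_stopping_epoch(val_losses: Sequence[float], patience: int) -> Optional[int]:
    """Return the epoch at which training stops: after `patience` consecutive epochs without
    improving on the best validation loss so far. Returns None if training never stops early."""
    best = float("inf")
    since_best = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, since_best = loss, 0
        else:
            since_best += 1
            if since_best >= patience:
                return epoch
    return None
```

For example, with validation losses `[1.0, 0.8, 0.7, 0.75, 0.72, 0.71]` and a patience of 3, `early_stopping_epoch` returns 5: the best loss was reached at epoch 2, and the three epochs that followed never improved on it.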

Advantages of Transfer Learning

Transfer learning reuses a model trained on one task as the starting point for a related task. It lets you benefit from the knowledge and feature representations learned from massive datasets such as ImageNet or COCO. Starting the model from meaningful weights and biases, rather than random ones, can significantly reduce the amount of labeled data needed for training and speed up convergence.

Useful Applications of Transfer Learning

Transfer learning works especially well in tasks such as object detection, image recognition, and natural language processing, where large pre-trained models like VGG, ResNet, BERT, or GPT-3 are available.

Benefits of Fine-Tuning Pre-Trained Models

Fine-tuning a pre-trained model for a particular task or domain can achieve state-of-the-art performance with much less computation than training a comparable model from scratch.
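The core idea of reusing pre-trained weights can be sketched without any framework. In the toy example below, a hypothetical "pre-trained" ReLU layer (hand-picked weights standing in for a backbone trained on a large dataset such as ImageNet) is kept frozen, and only a new logistic-regression head is trained on a handful of labeled examples. Training only the new head like this is often called linear probing; full fine-tuning would additionally update some or all of the pre-trained layers, usually with a small learning rate.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical "pre-trained" layer. In practice these weights would be loaded from a model trained
# on a large dataset; here they are hand-picked for illustration and are never updated (frozen).
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
PRETRAINED_B = [0.0, 0.0, -1.0]


def extract_features(x: Sequence[float]) -> List[float]:
    """Frozen backbone: one ReLU layer using the pre-trained weights."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(PRETRAINED_W, PRETRAINED_B)]


def logit(w: Sequence[float], b: float, features: Sequence[float]) -> float:
    return sum(wi * fi for wi, fi in zip(w, features)) + b


def train_head(data: Sequence[Tuple[Sequence[float], int]], lr: float = 0.5,
               epochs: int = 200) -> Tuple[List[float], float]:
    """Train only a new logistic-regression head on the frozen features (SGD on cross-entropy)."""
    w, b = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)
            p = 1.0 / (1.0 + math.exp(-logit(w, b, f)))
            err = p - y  # gradient of binary cross-entropy with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return int(logit(w, b, extract_features(x)) > 0.0)
```

Trained on the four inputs (0, 0), (1, 0), (0, 1), (1, 1) with labels 0, 0, 0, 1, the new head learns to predict the logical AND of the inputs, while `PRETRAINED_W` is never modified.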

Reusing pre-trained weights improves performance and shortens training times because it lets developers leverage the knowledge and feature representations learned from massive datasets.

Monitoring and assessing a DNN’s performance is essential for continuous improvement, better accuracy, and early detection of problems. This involves tracking metrics such as the loss, accuracy, precision, recall, and F1 score during training and validation. Examining these metrics can reveal problems such as underfitting, overfitting, vanishing gradients, and poor convergence. It also helps to visualize the intermediate representations the DNN has learned, using methods like t-SNE or PCA, to understand how the model interprets and processes input data.
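The classification metrics listed above can be computed directly from true and predicted labels. The sketch below assumes binary labels where 1 marks the positive class, and adds binary cross-entropy as one example of a loss function to track; the function names are illustrative, and libraries such as scikit-learn provide more complete, tested implementations (including multi-class averaging).

```python
import math
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}


def binary_cross_entropy(y_true: Sequence[int], probs: Sequence[float], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy loss; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return -total / len(y_true)
```

For `y_true = [1, 1, 1, 0, 0, 0, 0, 1]` and `y_pred = [1, 0, 1, 0, 1, 0, 0, 1]`, there are 3 true positives, 1 false positive, and 1 false negative, so accuracy, precision, recall, and F1 all come out to 0.75.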

Extensive testing on unseen data is also essential for assessing a DNN’s generalization. This means evaluating the model on new examples, either with a separate test set or through cross-validation. Regular monitoring and assessment show where to improve: tuning hyperparameters, adjusting regularization strategies, or trying a different architecture. This iterative process is what it takes to achieve state-of-the-art performance and stay competitive in fast-moving fields such as computer vision and natural language processing.

In conclusion, achieving strong performance and generalization requires a thorough understanding of DNN fundamentals and careful refinement of the training process, which together yield robust, dependable DNN models that can solve challenging real-world problems accurately and efficiently.

This can be achieved by carefully choosing architectures, tuning hyperparameters, applying regularization strategies, using transfer learning, and continuously monitoring performance.


FAQs

What are DNNs?

DNN stands for Deep Neural Network, a type of artificial neural network with multiple layers between the input and output layers.

How do DNNs work?

DNNs work by using multiple layers of interconnected nodes to process and learn from input data, allowing them to recognize patterns and make predictions.

What are the applications of DNNs?

DNNs are used in a wide range of applications, including image and speech recognition, natural language processing, and autonomous vehicles.

What are the advantages of DNNs?

Some advantages of DNNs include their ability to learn complex patterns from data, their flexibility in handling different types of input, and their potential for high accuracy in prediction tasks.

What are the limitations of DNNs?

Limitations of DNNs include the need for large amounts of labeled data for training, the potential for overfitting, and the computational resources required for training and inference.
