Cross-validation is a fundamental technique in machine learning for estimating how well a predictive model will perform on data it has not seen. The dataset is divided into subsets, the model is trained on some of them, and it is tested on the held-out remainder. Repeating this with different partitions shows whether the model’s performance is consistent across data splits or merely an artifact of one particular split.

K-fold cross-validation is a common method in which the dataset is split into k subsets, or “folds,” of roughly equal size. The model is trained on k-1 folds and tested on the remaining fold. This is repeated k times so that each fold serves as the test set exactly once, and the k test scores are usually averaged into a single performance estimate.
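To make the splitting rule concrete, here is a minimal pure-Python sketch that produces the k train/test index pairs. The function name `k_fold_indices` is hypothetical. The folds are contiguous and unshuffled, similar in spirit to scikit-learn’s `KFold` with its default `shuffle=False`; in practice the data is usually shuffled first unless its order matters.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Folds are contiguous and their sizes differ by at most one, so every
    sample lands in exactly one test fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds get one additional sample.
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        # Training set: every index outside the current test fold.
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


for train_idx, test_idx in k_fold_indices(10, 3):
    print(len(train_idx), test_idx)
# 6 [0, 1, 2, 3]
# 7 [4, 5, 6]
# 7 [7, 8, 9]
```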

Leave-one-out cross-validation (LOOCV) is the extreme case of k-fold cross-validation in which k equals the number of data points: each data point is used as the test set once, while the rest of the data is used for training. It is particularly useful for small datasets, since every model is trained on all but one of the available points, but it requires one model fit per data point. Cross-validation does not by itself prevent overfitting, which occurs when a model performs well on training data but poorly on unseen data, but it is crucial for detecting it.
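The LOOCV loop can be sketched in a few lines. The helper name is hypothetical, and a deliberately trivial model (“predict the mean of the training values”) stands in for a real one so the n fits and the averaged per-point test errors are easy to follow.

```python
def loocv_mse_of_mean(values):
    """LOOCV mean squared error for a baseline model that predicts the
    mean of its training data.

    Each observation is held out once; the "model" is fit on the other
    n - 1 values and scored on the held-out one.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    total = sum(values)
    squared_errors = []
    for held_out in values:
        # "Train": mean of every value except the held-out one.
        prediction = (total - held_out) / (n - 1)
        # "Test": squared error on the single held-out value.
        squared_errors.append((held_out - prediction) ** 2)
    # Average the n per-point errors into one estimate.
    return sum(squared_errors) / n


print(loocv_mse_of_mean([1.0, 2.0, 3.0, 4.0, 5.0]))  # 3.125
```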

By using cross-validation, machine learning practitioners can assess how well a model generalizes to new data and identify potential overfitting issues. This technique helps ensure that the model’s performance is robust and not dependent on a specific subset of the data.

Key Takeaways

- Cross-validation is a technique used to assess the performance of machine learning models by dividing the dataset into subsets for training and testing.

- Cross-validation is important for model performance as it helps to detect overfitting and provides a more accurate estimate of how the model will perform on new data.

- Implementing cross-validation techniques in AI models involves using methods such as k-fold cross-validation, leave-one-out cross-validation, and stratified cross-validation.

- Choosing the right cross-validation method for your AI model depends on the size and nature of the dataset, as well as the specific goals of the model.

- Evaluating model performance with cross-validation metrics such as accuracy, precision, recall, and F1 score can provide valuable insights into the model’s effectiveness.

- Overcoming challenges in cross-validation for AI models involves addressing issues such as data leakage, imbalanced datasets, and computational complexity.

- Best practices for mastering model performance with cross-validation include using multiple evaluation metrics, choosing a cross-validation method that suits the data, tuning hyperparameters with cross-validation, and interpreting cross-validation results in the context of the specific problem domain.

The Importance of Cross-Validation for Model Performance

Cross-validation plays a crucial role in assessing and improving the performance of machine learning models. It provides a more accurate estimate of a model’s performance compared to a single train-test split, as it evaluates the model on multiple subsets of the data. This helps to reduce the variance in performance estimates and provides a more reliable assessment of how well the model will generalize to new, unseen data.

Furthermore, cross-validation allows for better model selection and hyperparameter tuning. By comparing the performance of different models or different sets of hyperparameters across multiple cross-validation folds, machine learning practitioners can make more informed decisions about which model or parameter settings are likely to perform best on unseen data. This can lead to improved model performance and better generalization to new data.
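As an illustration of cross-validated hyperparameter tuning, the sketch below uses scikit-learn’s `GridSearchCV`, which scores every candidate setting with the same cross-validation scheme. The dataset (iris), the model (k-nearest neighbors), and the candidate grid are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Every candidate value of n_neighbors is scored with the same 5-fold CV,
# so the comparison is not tied to one lucky train/test split.
search = GridSearchCV(
    KNeighborsClassifier(),
    param_grid={"n_neighbors": [1, 3, 5, 7, 9, 11]},
    cv=5,
    scoring="accuracy",
)
search.fit(X, y)

print(search.best_params_)           # chosen n_neighbors
print(round(search.best_score_, 3))  # mean CV accuracy of that setting
```

Because `best_score_` is the score that was used to pick the winner, it tends to be optimistic; a common remedy is nested cross-validation, where the whole search runs inside an outer cross-validation loop.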

In addition, cross-validation provides insights into the stability of a model’s performance. If a model’s performance varies significantly across different cross-validation folds, it may indicate that the model is sensitive to the specific subset of data used for training and testing. This can help identify potential issues with model robustness and guide efforts to improve the model’s stability and generalization capabilities.

Overall, cross-validation is essential for ensuring that machine learning models are robust, generalizable, and perform well on unseen data. It provides a more accurate assessment of model performance, facilitates better model selection and hyperparameter tuning, and offers insights into a model’s stability and generalization capabilities.

Implementing Cross-Validation Techniques in AI Models

Implementing cross-validation techniques in AI models involves several key steps. First, the dataset needs to be divided into subsets for training and testing. This can be done using different cross-validation methods such as k-fold cross-validation or leave-one-out cross-validation, depending on the specific requirements of the problem and the available data.

Once the dataset is partitioned, the AI model is trained on the training portion (in k-fold cross-validation, k-1 of the folds) and tested on the held-out portion. This process is repeated multiple times, with a different held-out portion in each iteration. The per-iteration scores are then aggregated, typically by averaging, to obtain an overall assessment of the model’s generalization capabilities.
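The train/test/aggregate loop described above can be sketched in plain Python. Everything here is hypothetical and for illustration only: the helper names, the toy nearest-centroid classifier, and the eight toy data points (one of which, 2.2 labeled 0, is deliberately ambiguous so that the fold scores differ).

```python
from statistics import mean


def k_fold_indices(n_samples, k):
    """Contiguous k-fold split; fold sizes differ by at most one."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


def cross_validate(xs, ys, k, fit, predict):
    """Return one accuracy per fold: train on k-1 folds, test on the rest."""
    scores = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(predict(model, xs[i]) == ys[i] for i in test)
        scores.append(correct / len(test))
    return scores


# Hypothetical toy model: nearest class centroid on 1-D inputs.
def fit_centroids(xs, ys):
    return {label: mean(x for x, y in zip(xs, ys) if y == label) for label in set(ys)}


def predict_centroid(centroids, x):
    return min(centroids, key=lambda label: abs(x - centroids[label]))


xs = [1.0, 1.2, 0.8, 2.2, 3.0, 3.2, 2.9, 3.1]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
fold_scores = cross_validate(xs, ys, k=4, fit=fit_centroids, predict=predict_centroid)
print(fold_scores, mean(fold_scores))
```

Passing `fit` and `predict` as functions keeps the loop independent of any particular model; the spread of `fold_scores` is also the raw material for the stability check discussed earlier.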

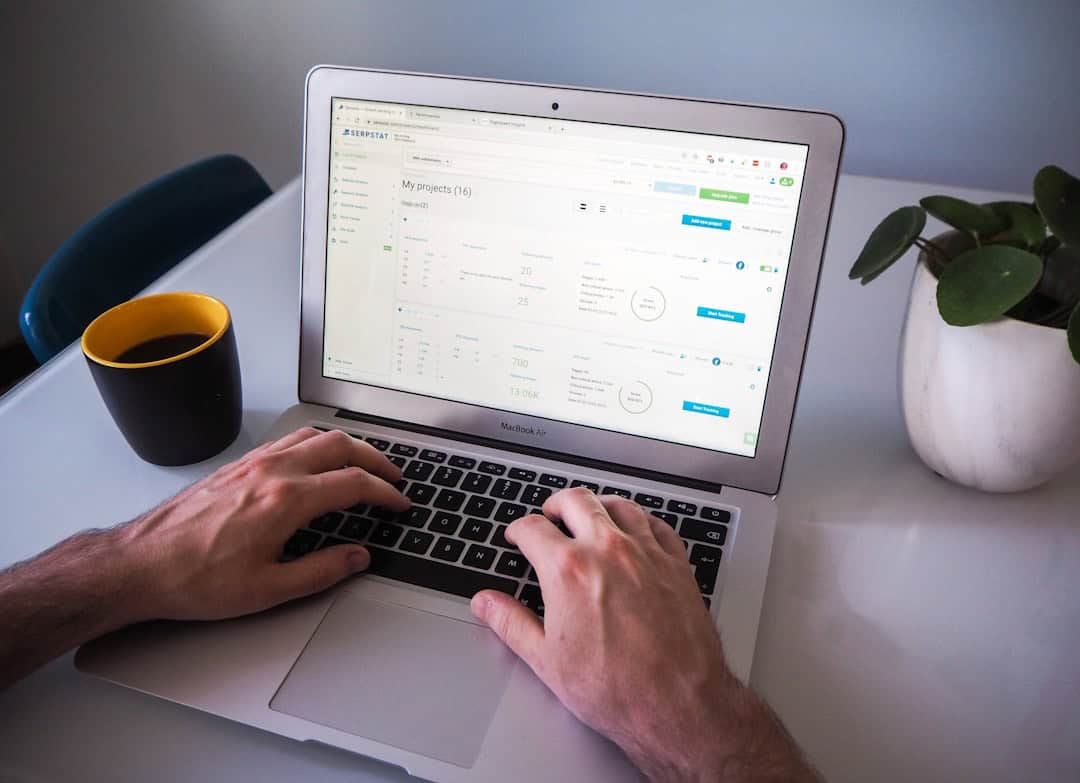

In practice, implementing cross-validation techniques in AI models often involves using libraries or frameworks that provide built-in support for cross-validation, such as scikit-learn in Python. These libraries offer convenient functions and classes for performing different types of cross-validation, making it easier for machine learning practitioners to incorporate cross-validation into their modeling workflows. Overall, implementing cross-validation techniques in AI models requires careful consideration of the specific requirements of the problem, the available data, and the desired level of model assessment.
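For example, with scikit-learn a complete 5-fold evaluation fits in a few lines using `KFold` and `cross_val_score`. The dataset, model, and `random_state` value below are illustrative choices.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Shuffle before splitting: iris is sorted by class, so contiguous folds
# would be badly unrepresentative. random_state makes the folds reproducible.
cv = KFold(n_splits=5, shuffle=True, random_state=42)
scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")

print(scores)  # one accuracy per fold
print(f"{scores.mean():.3f} +/- {scores.std():.3f}")
```

Passing an integer such as `cv=5` instead of a splitter object also works; for classifiers, scikit-learn then uses stratified folds by default.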

By following best practices and leveraging available tools and libraries, machine learning practitioners can effectively incorporate cross-validation into their modeling workflows to assess and improve model performance.

Choosing the Right Cross-Validation Method for Your AI Model

| Cross-Validation Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| K-Fold Cross-Validation | Data is divided into k folds and the model is trained k times, each time using a different fold as the test set and the remaining k-1 folds as the training set. | Reliable performance estimate at moderate cost; every sample is used for testing exactly once. | Requires k model fits, which can be expensive for large datasets or slow-to-train models. |
| Leave-One-Out Cross-Validation | Each data point is used as the test set once while the rest of the data is used as the training set. | Nearly unbiased estimate, since each model is trained on all but one data point. | The estimate can have high variance, and one model fit per data point is computationally expensive for large datasets. |
| Stratified Cross-Validation | Each fold keeps approximately the same class distribution as the whole dataset. | Useful for classification problems, especially with imbalanced classes. | Designed for class labels; adds little for well-balanced datasets. |
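One simple way to stratify, sketched below, is to deal the samples of each class round-robin across the k folds. The helper name and the 2:1 imbalanced labels are hypothetical, and this is not the exact allocation scikit-learn’s `StratifiedKFold` uses, only an illustration of the idea.

```python
from collections import defaultdict


def stratified_fold_labels(labels, k):
    """Assign each sample a fold number so that every fold gets roughly
    the same share of each class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    fold_of = [None] * len(labels)
    offset = 0
    for label in sorted(by_class):
        for position, idx in enumerate(by_class[label]):
            # Continue the rotation where the previous class stopped,
            # so leftover samples do not all pile into fold 0.
            fold_of[idx] = (offset + position) % k
        offset += len(by_class[label])
    return fold_of


labels = [0] * 8 + [1] * 4  # hypothetical 2:1 imbalanced labels
folds = stratified_fold_labels(labels, k=4)
for f in range(4):
    fold_labels = [lab for lab, g in zip(labels, folds) if g == f]
    print(f, fold_labels.count(0), fold_labels.count(1))  # each fold: 2 zeros, 1 one
```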

Choosing the right cross-validation method for an AI model depends on several factors, including the size of the dataset, the nature of the problem, and the computational resources available. One common method is k-fold cross-validation (typically with k = 5 or 10), which works well for most dataset sizes and provides a good balance between computational cost and robust performance estimation. For smaller datasets, leave-one-out cross-validation may be more appropriate, as it maximizes the amount of data available for training in each iteration.

However, leave-one-out cross-validation requires one model fit per data point, so it can be computationally expensive and may not be feasible for very large datasets. In such cases, k-fold (or stratified k-fold) cross-validation or repeated random sub-sampling validation is usually a more practical alternative. It’s also important to consider any specific requirements or constraints of the problem when choosing a cross-validation method.

For example, if the dataset contains class imbalances, stratified k-fold cross-validation can help ensure that each fold maintains the same class distribution as the original dataset. Similarly, if there are temporal dependencies in the data, time series cross-validation methods such as forward chaining or rolling origin validation may be more appropriate. Ultimately, choosing the right cross-validation method for an AI model requires careful consideration of various factors such as dataset size, computational resources, problem requirements, and any specific characteristics of the data.
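Forward chaining can be sketched as an expanding training window followed by a test window that always lies later in time. The helper name and its parameters are hypothetical; scikit-learn’s `TimeSeriesSplit` implements a comparable expanding-window scheme.

```python
def forward_chaining_splits(n_samples, n_splits, test_size):
    """Yield (train_idx, test_idx) for expanding-window time series CV.

    Each test window comes strictly after its training window, so the
    model never trains on observations from the future.
    """
    first_test_start = n_samples - n_splits * test_size
    if first_test_start < 1:
        raise ValueError("not enough samples for the requested splits")
    for split in range(n_splits):
        test_start = first_test_start + split * test_size
        train_idx = list(range(0, test_start))  # everything before the test window
        test_idx = list(range(test_start, test_start + test_size))
        yield train_idx, test_idx


for train_idx, test_idx in forward_chaining_splits(10, n_splits=3, test_size=2):
    print(train_idx, "->", test_idx)
# [0, 1, 2, 3] -> [4, 5]
# [0, 1, 2, 3, 4, 5] -> [6, 7]
# [0, 1, 2, 3, 4, 5, 6, 7] -> [8, 9]
```

A rolling-origin variant with a fixed-size window would drop the oldest observations instead of letting the training set grow.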

By selecting an appropriate cross-validation method, machine learning practitioners can ensure that they obtain reliable performance estimates and make informed decisions about model selection and hyperparameter tuning.

Evaluating Model Performance with Cross-Validation Metrics

Evaluating model performance with cross-validation metrics involves assessing how well a model generalizes to new, unseen data across multiple iterations of training and testing. One common metric used in cross-validation is accuracy, which measures the proportion of correctly classified instances out of all instances in the test set. However, accuracy alone may not provide a complete picture of a model’s performance, especially in cases where class imbalances are present in the data.

In such cases, precision, recall, and F1 score provide a more detailed view of a model’s performance on each class. Precision is the proportion of true positive predictions out of all positive predictions made by the model, while recall is the proportion of true positive predictions out of all actual positive instances in the test set. The F1 score is the harmonic mean of precision and recall, so it is high only when both are high; for multiclass problems, per-class F1 scores are commonly averaged (for example, macro-averaged).
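These definitions translate directly into code that can be applied to each fold’s predictions. The helper name is hypothetical, and returning 0.0 when a denominator is zero is one common convention, not the only one. The second example shows why accuracy alone can mislead on imbalanced data: a model that never predicts the positive class still reaches 90% accuracy.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one fold's predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    # Guard against division by zero when a fold has no positive predictions
    # or no positive examples; 0.0 is used here as the fallback.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


print(binary_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 1, 0, 0, 0]))
# accuracy 0.625, precision 2/3, recall 0.5, F1 4/7

# Imbalanced data: always predicting the majority class looks accurate.
print(binary_metrics([1] + [0] * 9, [0] * 10))  # accuracy 0.9, recall 0.0
```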

In addition to these metrics, the area under the receiver operating characteristic curve (AUC-ROC) and the area under the precision-recall curve (AUC-PR) are commonly used for evaluating binary classification models. Rather than scoring predictions at one fixed threshold, they summarize how well the model’s scores separate positive from negative instances across all thresholds; AUC-PR is often more informative than AUC-ROC when positives are rare. In practice, reporting a combination of these measures (accuracy, precision, recall, F1 score, AUC-ROC, and AUC-PR) averaged over the cross-validation folds gives a more complete picture of how well a model generalizes than any single metric.
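AUC-ROC has a convenient threshold-free interpretation: it equals the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below computes it that way; the function name and the toy scores are hypothetical, and the pairwise loop is quadratic, so it is meant for illustration rather than large folds.

```python
def roc_auc(y_true, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs in which the
    positive instance gets the higher score; ties count as half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
print(roc_auc(y_true, scores))  # 8/9, about 0.889: only the pair (0.4, 0.7) is misordered
```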

Overcoming Challenges in Cross-Validation for AI Models

While cross-validation is a powerful technique for assessing model performance in machine learning, it also comes with its own set of challenges. One common challenge is computational complexity, especially with large datasets or complex models that require significant computational resources to train and test across multiple iterations.

Another challenge is ensuring that cross-validation results are reliable and reproducible. Randomness introduced by shuffling or splitting the data can lead to variability in performance estimates across different runs of cross-validation. Setting a random seed or using deterministic algorithms helps ensure that results are consistent across runs.

Class imbalances in the data can also pose challenges for cross-validation, as plain k-fold cross-validation may produce folds with significantly different class distributions. Stratified k-fold cross-validation addresses this issue by keeping class distributions consistent across folds, which yields more reliable performance estimates for imbalanced datasets.

Finally, temporal dependencies in time series data can present challenges for traditional cross-validation methods, because assigning observations to folds at random lets the model train on the future and be tested on the past. Time series cross-validation techniques such as forward chaining or rolling origin validation are specifically designed to address this by preserving temporal order, providing more reliable estimates of a model’s performance on future data.

By understanding these challenges and leveraging appropriate techniques and strategies such as stratified k-fold cross-validation or time series cross-validation methods, machine learning practitioners can overcome these challenges and obtain reliable performance estimates for their AI models.
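For the reproducibility challenge discussed in this section, a minimal sketch: shuffle the indices with a seeded, local random generator before cutting them into folds, so the same seed always produces the same folds. The helper `shuffled_k_folds` is hypothetical; scikit-learn exposes the same idea through the `random_state` parameter of its splitters.

```python
import random


def shuffled_k_folds(n_samples, k, seed):
    """Shuffle indices with a fixed seed, then cut them into k folds.

    The same seed always yields the same folds, so reruns are comparable.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # local RNG: global random state is untouched
    # Fold i takes every k-th shuffled index starting at position i.
    return [sorted(indices[i::k]) for i in range(k)]


first_run = shuffled_k_folds(10, 3, seed=0)
second_run = shuffled_k_folds(10, 3, seed=0)
print(first_run == second_run)  # True: identical folds for identical seeds
```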

Best Practices for Mastering Model Performance with Cross-Validation

To master model performance with cross-validation, it’s important to follow best practices that ensure reliable and robust performance estimates for AI models. One key best practice is to use multiple evaluation metrics to obtain a comprehensive assessment of a model’s performance across different dimensions such as accuracy, precision, recall, F1 score, AUC-ROC, and AUC-PR. This helps provide a more complete picture of how well a model generalizes to new data.
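In scikit-learn, several metrics can be collected in one cross-validation run with `cross_validate` and a list of scorer names. The sketch below is illustrative: the breast cancer dataset (where class 1 is “benign,” so the positive-class metrics refer to that class), the model, and the fold settings are assumptions. `"average_precision"` is scikit-learn’s summary of the precision-recall curve, a close relative of AUC-PR rather than a trapezoidal area. Putting the scaler inside the pipeline means it is re-fit on each training fold and never sees the held-out fold, which avoids a common form of data leakage.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Preprocessing inside the pipeline is fit on training folds only.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

metrics = ["accuracy", "precision", "recall", "f1", "roc_auc", "average_precision"]
results = cross_validate(model, X, y, cv=cv, scoring=metrics)

for name in metrics:
    fold_scores = results[f"test_{name}"]  # one score per fold
    print(f"{name:>17}: {fold_scores.mean():.3f} +/- {fold_scores.std():.3f}")
```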

Another best practice is to carefully consider any specific requirements or constraints of the problem when choosing a cross-validation method. For example, if dealing with imbalanced datasets or time series data, using stratified k-fold cross-validation or time series cross-validation methods can help ensure that performance estimates are reliable and representative of real-world scenarios. It’s also important to pay attention to potential sources of variability in performance estimates across different runs of cross-validation.

Setting a random seed or using deterministic algorithms can help ensure that results are consistent and reproducible across different runs, reducing variability due to randomness introduced by shuffling or splitting the data. Finally, leveraging available tools and libraries that provide built-in support for cross-validation can help streamline the process and make it easier to incorporate cross-validation into modeling workflows. Libraries such as scikit-learn in Python offer convenient functions and classes for performing different types of cross-validation, making it easier for machine learning practitioners to implement best practices and obtain reliable performance estimates for their AI models.

By following these best practices and leveraging appropriate techniques and strategies such as using multiple evaluation metrics, choosing an appropriate cross-validation method, addressing sources of variability in performance estimates, and leveraging available tools and libraries, machine learning practitioners can master model performance with cross-validation and ensure that their AI models perform well on unseen data.


FAQs

What is cross-validation?

Cross-validation is a technique used to assess the performance of a predictive model by repeatedly dividing the data into a training subset and a testing subset, training the model on the first and evaluating it on the second, and averaging the results over the different splits. It helps to evaluate how well the model generalizes to new data.

Why is cross-validation important?

Cross-validation is important because it helps to assess the performance of a predictive model and determine how well it will generalize to new data. It also helps to detect overfitting, which occurs when a model performs well on the training data but poorly on new data.

What are the different types of cross-validation?

The most common types of cross-validation are k-fold cross-validation, leave-one-out cross-validation, and stratified cross-validation. K-fold cross-validation divides the data into k subsets and uses each subset in turn as the testing set while the rest are used for training. Leave-one-out cross-validation uses a single observation as the testing set and the rest for training, repeating this for every observation. Stratified cross-validation is similar to k-fold cross-validation but ensures that each fold has a proportional representation of the different classes in the data.

When should cross-validation be used?

Cross-validation should be used when evaluating the performance of a predictive model, especially when the dataset is limited in size. It is also useful when comparing different models to determine which one performs better on new data.

What are the limitations of cross-validation?

One limitation of cross-validation is that it can be computationally expensive, especially for large datasets or complex models. It can also be sensitive to the way the data is divided into subsets, and the results may vary depending on the random selection of the training and testing sets.
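One common way to reduce this dependence on a single random split is repeated cross-validation: run k-fold several times with different shuffles and average all the scores. The sketch uses scikit-learn’s `RepeatedStratifiedKFold`; the dataset, model, and repeat count are illustrative assumptions, and note that it multiplies the computational cost by the number of repeats.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# 5 folds x 10 repeats = 50 scores; each repeat reshuffles before splitting,
# so the average depends less on any one random partition.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=10, random_state=0)
scores = cross_val_score(DecisionTreeClassifier(random_state=0), X, y, cv=cv)

print(len(scores), round(scores.mean(), 3), round(scores.std(), 3))
```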
