In the ever-evolving landscape of data processing, Google Cloud Dataflow stands out as a powerful, fully managed service for both batch and stream processing. Dataflow runs pipelines written with Apache Beam, an open-source, unified programming model whose pipelines are portable: the same pipeline code can also run on other execution engines (called runners), such as Apache Flink or Apache Spark. This flexibility appeals to organizations that need a robust way to process large volumes of data in real time without tying their code to a single engine.

As businesses rely more heavily on data-driven insights, they need processing frameworks that scale efficiently with their data. Google Cloud Dataflow reduces the complexity of data processing by providing a fully managed service that automatically scales resources up and down with workload demand. Users can therefore focus on their pipeline logic instead of provisioning and managing the underlying infrastructure.

Because it integrates with other Google Cloud services, such as BigQuery and Pub/Sub, Dataflow lets organizations build end-to-end data solutions tailored to their needs. As we look more closely at real-time data processing, it becomes clear that Dataflow is more than a processing engine; it is a platform on which businesses can build complete systems for getting value out of their data.

Key Takeaways

- Google Cloud Dataflow is a fully managed service for both real-time (stream) and batch data processing

- Real-time data processing allows for the analysis of data as it arrives, enabling immediate insights and actions

- Google Cloud Dataflow offers benefits such as scalability, reliability, and ease of use for streaming analytics

- Key features of Google Cloud Dataflow include unified batch and stream processing, auto-scaling, and integration with other Google Cloud services

- Use cases for Google Cloud Dataflow include real-time fraud detection, personalized recommendations, and IoT data processing

Understanding Real-time Data Processing

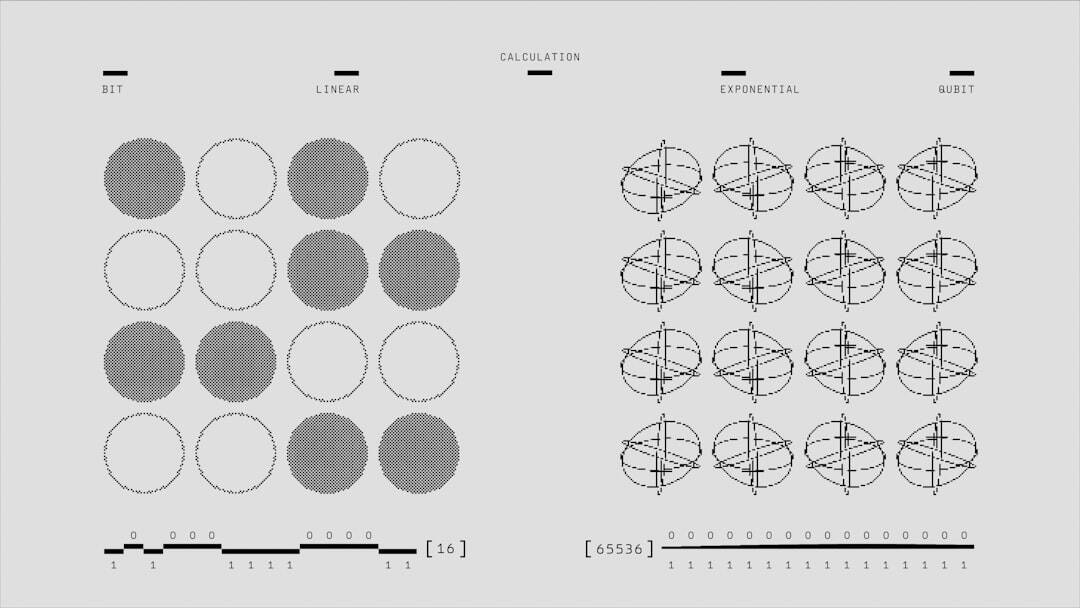

Real-time data processing refers to the continuous input, processing, and output of data with minimal latency.

This capability is crucial wherever the value of an insight decays quickly, for example when a suspicious payment must be flagged before the transaction completes.

Industries such as finance, e-commerce, and telecommunications are increasingly adopting real-time analytics to improve decision-making and customer experiences.

A real-time processing architecture typically consists of components that ingest, process, and deliver data streams: data sources (such as message queues or IoT devices), stream processing engines, and storage systems that receive the results.
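The three kinds of components described above can be sketched as chained Python generators. This is a toy, single-process illustration, not Dataflow or Apache Beam code: the message format, the `purchase` filter, and the function names `source`, `process`, and `sink` are all hypothetical, and a plain list stands in for a storage system.

```python
import json
from typing import Iterable, Iterator, List


def source(raw_messages: Iterable[str]) -> Iterator[str]:
    """Ingest stage: hand over raw messages one at a time, as a stream would."""
    for message in raw_messages:
        yield message


def process(messages: Iterable[str]) -> Iterator[dict]:
    """Processing stage: parse JSON, drop malformed or irrelevant events, reshape the rest."""
    for message in messages:
        try:
            event = json.loads(message)
        except json.JSONDecodeError:
            continue  # skip malformed input instead of stopping the whole stream
        if isinstance(event, dict) and event.get("type") == "purchase":
            yield {"user": event["user"], "amount_cents": int(event["amount_cents"])}


def sink(records: Iterable[dict], store: List[dict]) -> None:
    """Delivery stage: write each processed record to storage (a list stands in here)."""
    for record in records:
        store.append(record)


if __name__ == "__main__":
    raw = [
        '{"type": "purchase", "user": "alice", "amount_cents": 1299}',
        '{"type": "page_view", "user": "bob"}',
        "not json",
        '{"type": "purchase", "user": "bob", "amount_cents": 500}',
    ]
    table: List[dict] = []
    sink(process(source(raw)), table)
    print(table)  # the two purchase records, in arrival order
```

Because every stage is a generator, each message moves through all three stages before the next one is read, which loosely mirrors how a streaming engine handles elements as they arrive rather than waiting for a complete dataset.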

The challenge lies in ensuring that each component operates efficiently and can handle varying loads without compromising performance. Google Cloud Dataflow addresses these challenges with a unified model in which the same pipeline code can process both bounded (batch) and unbounded (streaming) data, allowing organizations to build resilient systems that adapt to changing data flows.
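To illustrate what a unified model offers, the sketch below applies one windowed aggregation, per-key counts in fixed (tumbling) windows, to both a bounded list (batch) and an endless generator (stream). It is plain Python, not the Beam API, and it makes a simplifying assumption: events arrive in event-time order. Dataflow instead tracks event-time progress with watermarks and can handle late data. The function name and the `(timestamp, key)` event shape are hypothetical.

```python
import itertools
from typing import Dict, Iterable, Iterator, Optional, Tuple

Event = Tuple[int, str]  # hypothetical shape: (event_time_seconds, key)


def fixed_window_counts(events: Iterable[Event],
                        window_size: int) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count events per key in fixed windows of `window_size` seconds.

    Assumes in-order events, so a window is emitted as soon as the first event
    of a later window arrives. The same code serves bounded and unbounded input.
    """
    current_start: Optional[int] = None
    counts: Dict[str, int] = {}
    for timestamp, key in events:
        window_start = timestamp - timestamp % window_size
        if current_start is not None and window_start != current_start:
            yield current_start, counts  # window closed: emit its result
            counts = {}
        current_start = window_start
        counts[key] = counts.get(key, 0) + 1
    if current_start is not None:
        yield current_start, counts  # bounded input ended: flush the last window


def endless_clicks() -> Iterator[Event]:
    """Unbounded source: one 'click' event every 2 seconds, forever."""
    t = 0
    while True:
        yield t, "click"
        t += 2


if __name__ == "__main__":
    # Batch: a finite list is fully consumed and every window is emitted.
    print(list(fixed_window_counts([(0, "a"), (3, "b"), (7, "a"), (12, "a")], 5)))
    # Stream: take results incrementally from an input that never ends.
    print(list(itertools.islice(fixed_window_counts(endless_clicks(), 10), 3)))
```

The batch call prints `[(0, {'a': 1, 'b': 1}), (5, {'a': 1}), (10, {'a': 1})]`, and the streaming call yields its first three windows, each with five clicks, without ever reaching the end of its input.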

Benefits of Google Cloud Dataflow for Streaming Analytics

One of the most significant advantages of Google Cloud Dataflow is that it reduces the complexity of streaming analytics. Because Dataflow is a fully managed service, users do not have to provision or manage infrastructure and can focus on developing their data pipelines. This not only accelerates development but also reduces operational overhead, enabling teams to allocate resources more effectively.

Moreover, Google Cloud Dataflow’s auto-scaling capabilities adjust resources dynamically based on workload demand. During peak times, such as major sales events or product launches, Dataflow can automatically add workers to handle increased traffic. Conversely, during quieter periods, it can scale down to minimize costs.
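The scale-up and scale-down behavior described above can be illustrated with a toy sizing rule: provision enough workers to keep up with the incoming message rate and to drain any backlog within a target time, then clamp the result to a configured range. This is a hypothetical heuristic for intuition only; Dataflow's actual autoscaler is run by the service and weighs its own signals, and every function and parameter name here is an assumption. The `max_workers` cap plays a role similar to the `max_num_workers` pipeline option that bounds a real Dataflow job.

```python
import math


def target_workers(backlog_messages: int,
                   input_rate: float,
                   msgs_per_sec_per_worker: float,
                   target_drain_seconds: float,
                   min_workers: int = 1,
                   max_workers: int = 100) -> int:
    """Hypothetical autoscaling rule, not Dataflow's algorithm.

    Required throughput = incoming rate + rate needed to clear the backlog in
    `target_drain_seconds`; divide by per-worker throughput, round up, and clamp.
    """
    if msgs_per_sec_per_worker <= 0 or target_drain_seconds <= 0:
        raise ValueError("per-worker throughput and drain target must be positive")
    required_rate = input_rate + backlog_messages / target_drain_seconds
    needed = math.ceil(required_rate / msgs_per_sec_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    # Peak: 20,000 msg/s arriving plus a 600,000-message backlog to clear in 60 s.
    print(target_workers(600_000, 20_000, 1_000, 60))
    # Quiet period: low traffic and no backlog, so the pool shrinks to the floor.
    print(target_workers(0, 500, 1_000, 60))
```

With these sample numbers, the peak scenario needs 30 workers (20,000 msg/s of new traffic plus 10,000 msg/s to clear the backlog, at 1,000 msg/s per worker), while the quiet scenario drops to the minimum of 1 worker.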
