Artificial Intelligence (AI) is a rapidly evolving field that has produced a wide variety of machine learning algorithms and techniques. One architecture that has received significant attention is the Recurrent Neural Network (RNN). RNNs are a type of neural network designed to recognize patterns in sequences of data, making them particularly well-suited for tasks such as natural language processing, speech recognition, and time series prediction.

Unlike traditional feedforward neural networks, RNNs have the ability to retain memory of previous inputs, allowing them to exhibit dynamic temporal behavior. This makes them well-suited to processing sequential data, where the order and context of the input are crucial for making accurate predictions. The concept of RNNs can be traced back to the 1980s, but it wasn’t until the 2010s that they gained widespread popularity, thanks to advancements in computational power and the availability of large datasets.

Since then, RNNs have been successfully applied to a wide range of AI tasks, from language translation to music composition. As the demand for AI applications continues to grow, the potential of RNNs in solving complex sequential problems has become increasingly evident. In this article, we will delve into the architecture, applications, training techniques, challenges, and future developments of RNNs, shedding light on their role in shaping the future of AI.

Key Takeaways

- Recurrent Neural Networks (RNNs) are a type of neural network designed to recognize patterns in sequences of data, making them well-suited for tasks such as natural language processing and time series analysis.

- The architecture of RNNs includes a loop that allows information to persist, making them well-suited for processing sequential data. However, they are also prone to issues such as vanishing gradients and difficulty in capturing long-term dependencies.

- RNNs have a wide range of applications in AI, including language translation, speech recognition, and sentiment analysis. They can also be used for generating sequences, such as music or text.

- Training and optimizing RNNs can be challenging due to issues such as vanishing and exploding gradients and overfitting. Techniques such as gradient clipping (for exploding gradients), long short-term memory (LSTM) cells (for vanishing gradients), and dropout (for overfitting) can help address these challenges.

- Despite their potential, RNNs also have limitations, such as difficulty in capturing long-term dependencies and the need for large amounts of training data. Future developments in RNNs may focus on addressing these limitations and improving their performance in AI applications.

Understanding the Architecture of Recurrent Neural Networks

The architecture of a Recurrent Neural Network is characterized by its ability to maintain a state or memory of previous inputs while processing new ones. This is achieved through the use of recurrent connections within the network, where the output of a neuron is fed back as an input to itself or other neurons in the network. This feedback loop allows RNNs to exhibit temporal dynamic behavior, making them well-suited for tasks that involve sequential data.

The basic building block of an RNN is the recurrent neuron, which takes an input along with its previous state and produces an output and a new state. This allows the network to capture dependencies between elements in a sequence, making it capable of tasks such as predicting the next word in a sentence or generating music. Despite their powerful capabilities, traditional RNNs suffer from the “vanishing gradient” problem, where gradients diminish as they are back-propagated through time, leading to difficulties in learning long-range dependencies.
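The recurrent neuron described above can be sketched in a few lines of plain Python. This is a minimal illustration of a vanilla (Elman-style) recurrent layer, not a production implementation: the names `rnn_step`, `run_rnn`, `W_xh`, `W_hh`, and `b_h`, as well as the toy weight values, are assumptions made for the example.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One time step: h = tanh(W_xh @ x + W_hh @ h_prev + b_h).

    x      : input vector at the current time step (list of floats)
    h_prev : hidden state carried over from the previous time step
    """
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(w * xi for w, xi in zip(W_xh[i], x))       # input contribution
        total += sum(w * hi for w, hi in zip(W_hh[i], h_prev))  # recurrent contribution
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(sequence, W_xh, W_hh, b_h):
    """Feed a whole sequence through the cell, carrying the state forward."""
    h = [0.0] * len(b_h)          # initial state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Hypothetical toy weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# Only the first input is non-zero; later states still depend on it.
states = run_rnn([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h)
```

Even though the second and third inputs are zero, the corresponding hidden states are not, because the first input is still carried through `W_hh`. That carried-over state is the "memory" the text refers to.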

To address this issue, various RNN variants have been developed, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks. These variants incorporate gating mechanisms that regulate the flow of information within the network, allowing them to retain long-term dependencies more effectively. As a result, LSTM and GRU networks have become the go-to choices for many sequential learning tasks due to their ability to capture and remember long-range dependencies in data.
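To make the idea of a gating mechanism concrete, here is a rough sketch of a single-unit GRU step in plain Python. It is simplified to scalars for readability, the parameter names in the dictionary `p` are made up for this example, and the sign convention for the update gate differs between references, so treat it as one possible formulation rather than the definitive one.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gru_step(x, h_prev, p):
    """One step of a single-unit GRU; `p` is a dict of scalar weights."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # Convention used here: z near 0 keeps the old state, z near 1 overwrites it.
    return (1.0 - z) * h_prev + z * h_cand

def make_params(b_z):
    # Hypothetical weights: everything is zero except the update-gate bias.
    return {"w_z": 0.0, "u_z": 0.0, "b_z": b_z,
            "w_r": 0.0, "u_r": 0.0, "b_r": 0.0,
            "w_h": 0.0, "u_h": 0.0, "b_h": 0.0}

def carry(h0, steps, p):
    """Run the cell for `steps` time steps with zero input."""
    h = h0
    for _ in range(steps):
        h = gru_step(0.0, h, p)
    return h

kept = carry(0.8, 50, make_params(b_z=-10.0))    # gate closed: state is retained
erased = carry(0.8, 50, make_params(b_z=10.0))   # gate open: state is overwritten
```

With the update gate held closed, the state after 50 steps stays close to its starting value of 0.8; with the gate held open, it is replaced almost immediately. In a trained network the gate values are learned from data, which is what lets the cell decide what to remember and for how long.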

Applications of Recurrent Neural Networks in AI

The applications of Recurrent Neural Networks in AI are diverse and far-reaching, spanning across various domains such as natural language processing, speech recognition, time series analysis, and more. In natural language processing, RNNs have been used for tasks such as language modeling, machine translation, sentiment analysis, and named entity recognition. Their ability to capture contextual information from sequential data makes them well-suited for understanding and generating human language.

In speech recognition, RNNs have been employed to transcribe spoken language into text, enabling applications such as virtual assistants and voice-controlled devices. Another prominent application of RNNs is in time series analysis, where they are used for tasks such as stock market prediction, weather forecasting, and anomaly detection. Their ability to capture temporal dependencies in data makes them ideal for modeling and predicting sequential patterns.

Additionally, RNNs have found applications in fields such as healthcare, finance, and robotics, where sequential data plays a crucial role in decision-making processes. With the advent of deep learning and the availability of large-scale datasets, RNNs have demonstrated remarkable performance in these applications, paving the way for advancements in AI technologies.

Training and Optimization Techniques for Recurrent Neural Networks

| Technique | Description |
|---|---|
| Backpropagation Through Time (BPTT) | An algorithm for training recurrent neural networks. It is a generalization of the backpropagation algorithm to the case of sequences. |
| Long Short-Term Memory (LSTM) | A type of recurrent neural network architecture that is designed to overcome the vanishing gradient problem. |
| Gated Recurrent Unit (GRU) | A variation of the LSTM architecture that is designed to be simpler and more efficient while still capturing long-term dependencies in the data. |
| Gradient Clipping | A technique used to prevent the exploding gradient problem during training by clipping the gradients to a maximum value. |
| Dropout | A regularization technique where randomly selected neurons are ignored during training to prevent overfitting. |

Training Recurrent Neural Networks poses unique challenges due to their sequential nature and the presence of long-range dependencies in data. Traditional training techniques such as backpropagation through time (BPTT) are prone to the vanishing gradient problem, making it difficult for RNNs to learn from distant past inputs. To address this issue, various optimization techniques have been developed to improve the training of RNNs.
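A small numerical experiment can show why gradients vanish during BPTT. The sketch below assumes a deliberately tiny model, a scalar RNN of the form h_t = tanh(w * h_{t-1} + x_t), and the function name `gradient_through_time` is invented for this illustration. It applies the chain rule step by step to compute how much the final state depends on the initial one.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t ** 2)
        grad *= w * (1.0 - h * h)
    return grad

# Same recurrent weight, two sequence lengths (hypothetical values).
grad_5 = gradient_through_time(0.5, [0.1] * 5)
grad_50 = gradient_through_time(0.5, [0.1] * 50)
```

Each time step multiplies the gradient by a factor whose magnitude is at most `|w|`, so with `w = 0.5` the gradient over 50 steps is bounded by 0.5 to the power of 50 and is effectively zero. The learning signal from early inputs therefore never reaches the weights, which is the vanishing gradient problem in miniature.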

One such technique is gradient clipping, which caps the magnitude of gradients during training so that they cannot grow too large. This mitigates the exploding gradient problem; it does not help with vanishing gradients, which are usually addressed with gated architectures such as LSTM and GRU. Another important optimization technique for training RNNs is the use of adaptive learning rate algorithms such as Adam and RMSprop.

These algorithms adaptively adjust the learning rate based on the magnitude of gradients for each parameter, allowing for faster convergence and improved training stability. Additionally, techniques such as dropout regularization and batch normalization have been adapted for RNNs to prevent overfitting and improve generalization performance. These techniques play a crucial role in training deep RNN architectures with multiple layers, enabling them to learn complex patterns from sequential data more effectively.
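Gradient clipping, mentioned above, is simple enough to sketch directly. The version below clips by global norm, which is one common variant; the function name `clip_by_global_norm` and the example gradient values are assumptions for illustration, and deep learning frameworks ship their own equivalents.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient vectors so their joint L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total <= max_norm:
        return grads, total            # small gradients are left untouched
    scale = max_norm / total
    return [[g * scale for g in vec] for vec in grads], total

grads = [[3.0, 4.0], [12.0]]           # hypothetical gradients with global norm 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)

tiny = [[1e-9]]                        # an already-vanished gradient
unchanged, _ = clip_by_global_norm(tiny, max_norm=1.0)
```

Rescaling all gradients by the same factor preserves their direction while bounding the step size. Note that the tiny gradient passes through unchanged: clipping only shrinks gradients that are too large and never enlarges ones that are too small.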

Challenges and Limitations of Recurrent Neural Networks in AI

Despite their widespread applications and capabilities, Recurrent Neural Networks are not without their challenges and limitations. One major challenge is the difficulty of capturing long-range dependencies in data, which can lead to poor performance on tasks that require understanding context over a large time span. This is particularly evident in tasks such as machine translation and speech recognition, where maintaining context over long sentences or utterances is crucial for accurate predictions.

Additionally, RNNs are prone to overfitting when trained on small datasets or noisy sequential data, leading to poor generalization performance on unseen examples. Another limitation of traditional RNNs is their computational inefficiency when processing long sequences of data. Because each hidden state depends on the previous one, the computation cannot be parallelized across time steps, which makes training on long sequences or large volumes of data slow compared with architectures that process all positions at once.

Furthermore, although an RNN can in principle consume a sequence of any length, training in mini-batches requires the sequences in a batch to share one length, which means additional preprocessing steps such as padding or truncation. These challenges have spurred research into developing more efficient and robust architectures for handling sequential data, leading to advancements in areas such as attention mechanisms and transformer networks.
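The padding and truncation step can be illustrated with a short helper. This is a sketch under simple assumptions: sequences are lists of integer token IDs, `0` is reserved as the padding value, and the name `pad_or_truncate` is invented for the example.

```python
def pad_or_truncate(sequences, length, pad_value=0):
    """Force every sequence to `length` items and return a matching mask.

    The mask holds 1 for real items and 0 for padding, so later code
    (for example a loss function) can ignore the padded positions.
    """
    batch, mask = [], []
    for seq in sequences:
        kept = list(seq[:length])              # truncate sequences that are too long
        n_pad = length - len(kept)
        batch.append(kept + [pad_value] * n_pad)   # pad sequences that are too short
        mask.append([1] * len(kept) + [0] * n_pad)
    return batch, mask

batch, mask = pad_or_truncate([[5, 2, 9], [7], [1, 2, 3, 4, 5]], length=4)
# batch -> [[5, 2, 9, 0], [7, 0, 0, 0], [1, 2, 3, 4]]
# mask  -> [[1, 1, 1, 0], [1, 0, 0, 0], [1, 1, 1, 1]]
```

Returning the mask alongside the padded batch is a design choice worth keeping: without it, the network cannot tell padding apart from genuine input.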

Future Developments and Trends in Recurrent Neural Networks

Attention Mechanisms: Focusing on Relevant Data

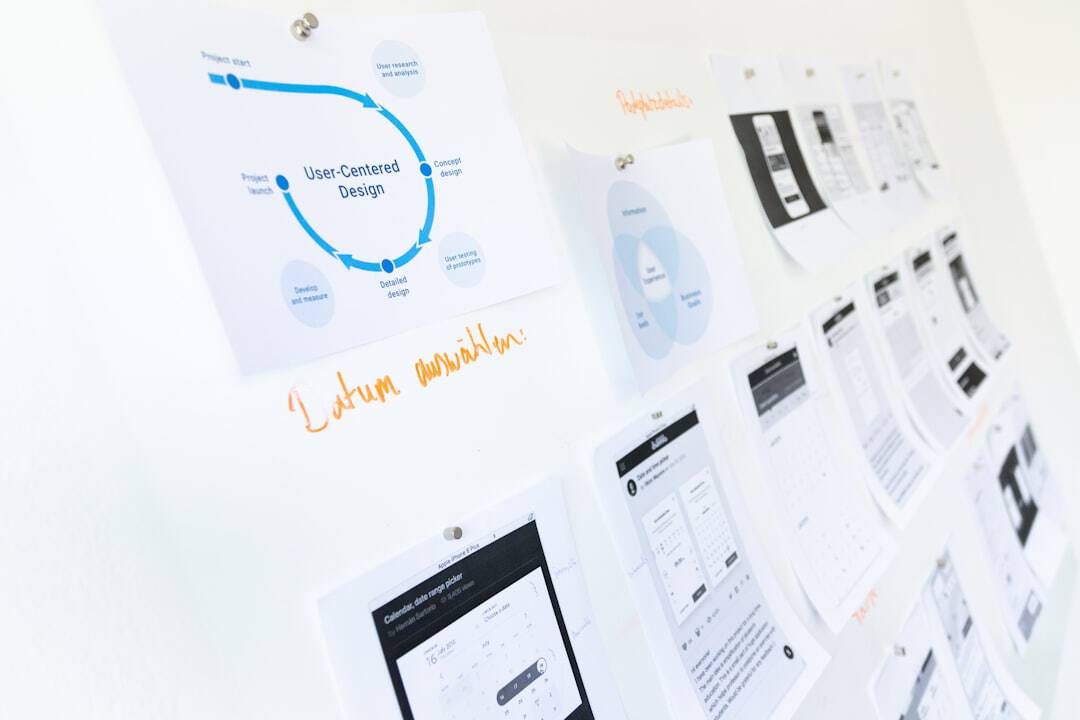

One prominent trend is the integration of attention mechanisms into RNN architectures, allowing them to focus on relevant parts of input sequences while processing data. This innovation has shown promising results in tasks such as machine translation and image captioning, enabling RNNs to capture long-range dependencies more effectively by attending to important elements in a sequence.
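As a rough illustration of how attention lets a model focus on relevant parts of a sequence, the sketch below scores each hidden state against a query vector, turns the scores into weights with a softmax, and returns the weighted average. It uses dot-product scoring, which is only one of several scoring functions in use, and the name `attend` and the example vectors are assumptions made for this example.

```python
import math

def attend(query, states):
    """Dot-product attention: weight each state by its similarity to `query`."""
    scores = [sum(q * s for q, s in zip(query, state)) for state in states]
    top = max(scores)                            # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]          # softmax: weights sum to 1
    context = [sum(w * state[i] for w, state in zip(weights, states))
               for i in range(len(query))]       # weighted average of the states
    return weights, context

states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # hypothetical encoder hidden states
weights, context = attend([2.0, 0.0], states)
```

Here the first and third states align with the query and receive most of the weight, while the second is largely ignored. Because every state is reachable in a single step regardless of its position, information from early in the sequence does not have to survive a long chain of recurrent updates.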

Hybrid Architectures: Combining Strengths

Another trend in RNN research is the development of hybrid architectures that combine RNNs with other types of neural networks, such as convolutional neural networks (CNNs) and transformer networks. These hybrid architectures leverage the strengths of each network type to handle different aspects of sequential data processing, leading to improved performance on tasks such as video analysis and document summarization.

Hardware Advancements: Enabling Breakthroughs

Advancements in hardware acceleration and parallel computing have enabled researchers to train larger and more complex RNN models more efficiently, paving the way for breakthroughs in areas such as natural language understanding and generative modeling.

Harnessing the Potential of Recurrent Neural Networks in AI

In conclusion, Recurrent Neural Networks have emerged as powerful tools for processing sequential data in AI applications, demonstrating remarkable capabilities in tasks such as natural language processing, speech recognition, and time series analysis. Their ability to capture temporal dependencies and retain memory of previous inputs makes them well-suited for understanding and generating complex sequential patterns. While they face challenges such as capturing long-range dependencies and computational inefficiency, ongoing research and developments are paving the way for addressing these limitations and unlocking new capabilities for RNNs.

As AI continues to advance, the potential of Recurrent Neural Networks in shaping the future of intelligent systems is undeniable. With ongoing developments in training techniques, optimization algorithms, and architectural innovations, RNNs are poised to play a pivotal role in enabling AI systems to understand and process sequential data more effectively. By harnessing the potential of RNNs and leveraging their capabilities in diverse domains, we can expect to see continued advancements in AI technologies that rely on understanding and generating complex sequential patterns.

As we look towards the future, Recurrent Neural Networks are likely to remain an important tool for sequential data, both on their own and in combination with newer approaches such as attention mechanisms.


FAQs

What is a recurrent neural network (RNN)?

A recurrent neural network (RNN) is a type of artificial neural network designed to recognize patterns in sequences of data, such as time series or natural language.

How does a recurrent neural network work?

RNNs have loops within their architecture, allowing them to persist information over time. This enables them to process sequential data and learn from previous inputs to make predictions about future inputs.

What are some applications of recurrent neural networks?

RNNs are commonly used in natural language processing tasks such as language translation, speech recognition, and text generation. They are also used in time series analysis, handwriting recognition, and other sequential data tasks.

What are the limitations of recurrent neural networks?

RNNs can struggle with capturing long-term dependencies in data, largely because of the “vanishing gradient” problem, in which gradients shrink as they are propagated back through many time steps. Additionally, they can be computationally expensive to train, and batching variable-length sequences requires padding or truncation.

What are some variations of recurrent neural networks?

Some variations of RNNs include Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), which are designed to address the vanishing gradient problem and improve the ability of RNNs to capture long-term dependencies in data.
