Automatic speech recognition (ASR) is the process of converting spoken language into written text using computer systems. Recent gains in accuracy and efficiency have made ASR dependable enough for widespread use in automated customer service systems, dictation software, and virtual assistants, among other applications. To convert audio input into text output, an ASR system works through several stages:

1.

Key Takeaways

- Automatic Speech Recognition (ASR) is the technology that allows machines to understand and transcribe spoken language.

- Challenges in achieving accuracy in ASR include background noise, accents, and variations in speech patterns.

- Training and calibration of speech recognition systems involve using large datasets to improve accuracy and fine-tuning the system for specific applications.

- Utilizing context and language models can improve accuracy by considering the surrounding words and phrases in a conversation.

- Speaker adaptation techniques can enhance accuracy by customizing the ASR system to individual speakers’ voices and speech patterns.

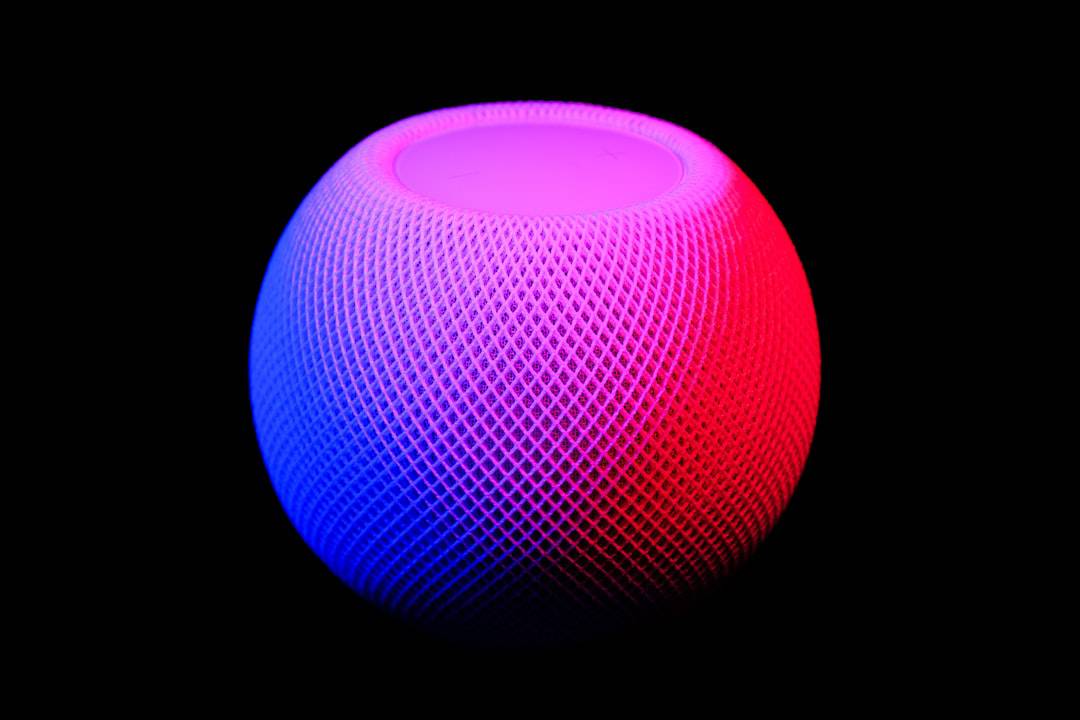

- Real-world applications of improved speech recognition accuracy include virtual assistants, transcription services, and voice-controlled devices.

- Future developments and trends in ASR accuracy may include better noise cancellation, improved language understanding, and enhanced speaker recognition capabilities.

Processing of signals.

2. Extracting features.

3. . Modeling of sound waves.

4. The modeling of language.

5.

Breaking down. Accurate transcriptions are produced by carefully considering and interpreting the subtleties of human speech at every stage. ASR technology has the potential to drastically alter how people interact with computers and other devices as it develops. By facilitating more organic and intuitive communication techniques, this technology improves users’ access to information & services across a range of platforms and sectors. Accents and Speech Pattern Variations.

Managing differences in accents and speech patterns is one of the main difficulties. People speak in many different ways, so ASR systems must accurately recognize and transcribe a broad spectrum of speech patterns. This requires robust acoustic and language models that can accommodate various dialects and speaking styles.

Environmental Considerations and Background Noise

Background noise and other environmental factors that degrade the quality of the audio input present another challenge. To generate an accurate transcription, ASR systems must be able to filter out background noise and concentrate on the speech signal.
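As a rough illustration of separating speech from background noise, the sketch below flags frames whose energy rises well above an estimated noise floor. This is a simple energy-based voice activity detector, not a method the article prescribes. The function names, the 160-sample frame length, the threshold ratio, and the synthetic test signal are all assumptions made for the example.

```python
import math

def frame_energies(samples, frame_len):
    """Return the mean energy of each non-overlapping frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)
    return energies

def detect_speech(samples, frame_len=160, ratio=4.0, noise_frames=5):
    """Flag frames whose energy exceeds `ratio` times the noise floor.

    Assumption: the first `noise_frames` frames contain only background noise.
    """
    energies = frame_energies(samples, frame_len)
    noise_floor = sum(energies[:noise_frames]) / noise_frames
    return [energy > ratio * noise_floor for energy in energies]

# Synthetic input at 8 kHz: a faint 50 Hz hum standing in for background
# noise, followed by a louder 200 Hz tone standing in for speech.
RATE = 8000
hum = [0.01 * math.sin(2 * math.pi * 50 * n / RATE) for n in range(1600)]
tone = [0.5 * math.sin(2 * math.pi * 200 * n / RATE) for n in range(1600)]
flags = detect_speech(hum + tone)
print(flags)  # ten False values (hum) followed by ten True values (tone)
```

Production systems generally rely on far more robust methods, but the idea of comparing the signal against a noise estimate carries over.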

| Metric | Before Improvement | After Improvement |
|---|---|---|
| Word Error Rate (WER) | 15% | 8% |
| Accuracy | 85% | 92% |
| Processing Time | 10 seconds | 5 seconds |
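Word error rate is conventionally computed as the word-level edit distance between a reference transcript and the system's output, divided by the number of reference words. The accuracy row in the table matches 1 − WER. The sketch below shows one way to compute it; the function name and the example sentences are illustrative assumptions.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)

# One deleted word ("on") and one substitution ("lights" -> "light")
# against six reference words.
wer = word_error_rate("please turn on the kitchen lights",
                      "please turn the kitchen light")
print(round(wer, 3))  # 0.333
```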

Coping with background noise requires modern signal processing methods and noise-robust acoustic models.

Handling Spontaneous Speech

ASR systems must also accommodate disfluencies, hesitations, and other irregular speech patterns in order to process spontaneous speech. This calls for more advanced language models that can faithfully interpret and transcribe natural speech.

Developing accurate ASR systems involves several steps, including training and calibration. Training uses a large volume of annotated speech data to build the acoustic and language models, teaching the system to recognize a range of speech patterns and language structures. During training, model parameters are optimized to increase the system’s accuracy.

Calibration is the process of tuning the ASR system for the best performance in a particular environment or application. This entails adjusting components such as noise reduction algorithms, language models, and acoustic models. Calibration is necessary for the ASR system to transcribe speech reliably in real-world conditions. Training and calibration are continuous processes: as new methods and more data become available, ASR systems can be further optimized to reach higher accuracy.
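One concrete form of calibration is choosing how much weight the language model gets relative to the acoustic model. The sketch below picks that weight from a small grid by counting errors on a held-out development set. The function names, candidate transcriptions, and scores are all invented for illustration; a real system would use scores produced by its own models.

```python
def pick_hypothesis(candidates, lm_weight):
    """Return the candidate text with the best combined score.

    Each candidate is (text, acoustic_log_score, lm_log_score).
    """
    best = max(candidates, key=lambda c: c[1] + lm_weight * c[2])
    return best[0]

def calibrate_lm_weight(dev_set, weights):
    """Return the first weight in `weights` with the fewest errors on dev_set."""
    def error_count(weight):
        return sum(pick_hypothesis(cands, weight) != ref for ref, cands in dev_set)
    return min(weights, key=error_count)

# Hypothetical development set: (reference, candidates) pairs with made-up scores.
dev_set = [
    ("recognize speech", [("recognize speech", -10.0, -4.0),
                          ("wreck a nice beach", -9.0, -9.0)]),
    ("write the report", [("write the report", -8.0, -3.0),
                          ("right the report", -7.5, -6.0)]),
]
best_weight = calibrate_lm_weight(dev_set, [0.0, 0.5, 1.0])
print(best_weight)  # 0.5
```

With a weight of 0.0 the acoustic score alone picks the wrong candidate for both utterances, which is why the search settles on a nonzero weight.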

Context and language models play a large part in improving the accuracy of ASR systems. Context models help the system derive meaning from a word by taking its surrounding words into account. As a result, the system can more accurately predict which word is likely to come next in a sentence. Language models represent the patterns and structure of a particular language.
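To make the idea concrete, here is a minimal sketch of a bigram language model with add-one smoothing, used to choose between two candidates that sound alike. The tiny corpus, the function names, and the choice of a bigram model are assumptions for illustration; modern systems typically use much larger neural models.

```python
import math
from collections import Counter

def train_bigram_model(sentences):
    """Count bigrams and their left-hand contexts; return counts and vocabulary size."""
    context_counts, bigram_counts, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()
        vocab.update(words)
        context_counts.update(words[:-1])
        bigram_counts.update(zip(words, words[1:]))
    return context_counts, bigram_counts, len(vocab)

def log_probability(sentence, model):
    """Add-one smoothed bigram log-probability of a sentence."""
    context_counts, bigram_counts, vocab_size = model
    words = ["<s>"] + sentence.lower().split()
    total = 0.0
    for prev, word in zip(words, words[1:]):
        numerator = bigram_counts[(prev, word)] + 1
        denominator = context_counts[prev] + vocab_size
        total += math.log(numerator / denominator)
    return total

# Toy training corpus (invented for this example).
corpus = [
    "turn on the light",
    "turn the light off",
    "write the report",
    "the right answer",
]
model = train_bigram_model(corpus)

# Two candidates that sound the same; the model prefers the word
# sequence it has seen evidence for.
candidates = ["write the report", "right the report"]
best = max(candidates, key=lambda c: log_probability(c, model))
print(best)  # write the report
```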

By accounting for the likelihood of different word sequences, language models help the ASR system recognize and transcribe words more accurately. By leveraging language models and context, ASR systems can produce transcriptions that read more naturally and reach higher accuracy. Advances in machine learning and natural language processing continue to yield more sophisticated context and language models. These models make ASR systems more accurate and versatile across applications by accommodating various dialects, speaking styles, and languages.

ASR systems can also be made more accurate for individual speakers through a technique called speaker adaptation.

Speaker adaptation entails adjusting the acoustic and language models to match the characteristics and speech patterns of a particular speaker more closely. Customizing the system in this way lets it model that speaker’s distinct speech patterns and produce more accurate transcriptions. Speaker adaptation can be achieved through techniques such as speaker-specific language modeling, model adaptation, and feature transformation.
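As one simple example of a feature transformation, the sketch below applies per-speaker cepstral mean and variance normalization (CMVN): each feature dimension is shifted and scaled using statistics from that speaker's own frames, which removes constant speaker-specific offsets. The article does not name a specific transformation, so CMVN is an assumption here, as are the function name and the toy feature values.

```python
import math

def cmvn(frames):
    """Normalize each feature dimension to zero mean and unit variance.

    `frames` is a list of equal-length feature vectors from one speaker.
    """
    count = len(frames)
    dims = len(frames[0])
    means = [sum(frame[d] for frame in frames) / count for d in range(dims)]
    stds = []
    for d in range(dims):
        variance = sum((frame[d] - means[d]) ** 2 for frame in frames) / count
        stds.append(math.sqrt(variance) or 1.0)  # avoid dividing by zero
    return [[(frame[d] - means[d]) / stds[d] for d in range(dims)]
            for frame in frames]

# Two hypothetical speakers whose features differ only by a constant offset.
speaker_a = [[1.0, 10.0], [2.0, 12.0], [3.0, 14.0]]
speaker_b = [[11.0, 30.0], [12.0, 32.0], [13.0, 34.0]]

normalized_a = cmvn(speaker_a)
normalized_b = cmvn(speaker_b)
# After normalization the two speakers' features coincide.
same = all(
    abs(x - y) < 1e-9
    for fa, fb in zip(normalized_a, normalized_b)
    for x, y in zip(fa, fb)
)
print(same)  # True
```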

Speaker adaptation techniques let ASR systems adjust quickly and effectively to different speakers, which improves accuracy. Speaker adaptation is especially important for systems that must accurately transcribe a specific user’s speech, such as dictation software and virtual assistants, where it delivers a more accurate and customized user experience.

Increasing the Effectiveness of Healthcare

Medical transcription using ASR technology enables medical personnel to transcribe patient notes and records quickly and accurately.

This has increased productivity and efficiency in healthcare settings and thereby enhanced patient care.

Revolutionizing Customer Service

ASR technology in automated call centers lets businesses handle customer inquiries and support more efficiently. When customer speech is transcribed accurately, businesses can respond more quickly and accurately, which increases customer satisfaction.

Rethinking Language Instruction

In education, ASR technology lets students practice speaking and pronunciation while receiving accurate feedback on their performance. Students can practice speaking in a more natural and intuitive way, and language learning outcomes have improved as a result.

Several developments and trends in ASR technology are expected to boost accuracy and performance further.

One trend is the integration of ASR with other technologies, such as computer vision and natural language processing. Combining them allows ASR systems to generate transcriptions that are more accurate and more contextually aware. Another trend is the development of multimodal ASR systems, which interpret speech together with other modalities such as gestures and facial expressions. Interacting with computers and other devices in a more natural and intuitive way should lead to better user experiences.

ASR accuracy will also continue to improve thanks to developments in machine learning, including deep learning. These methods help ASR systems learn more effectively from large volumes of data and adapt to different speaking styles and languages.

In conclusion, recent developments in automatic speech recognition have improved accuracy and performance across a variety of applications. Despite the difficulty of reaching high accuracy, ASR technology has advanced significantly through continued progress in training, calibration, context and language modeling, and speaker adaptation. Further developments are expected to make ASR systems even more accurate, dependable, and useful.


FAQs

What is automatic speech recognition (ASR)?

Automatic speech recognition (ASR) is the technology that allows a computer to transcribe spoken language into text. It is also known as speech-to-text or voice recognition.

How does automatic speech recognition work?

ASR works by using algorithms to analyze and interpret the audio input of spoken language. It involves processes such as acoustic modeling, language modeling, and speech decoding to accurately transcribe the speech into text.

What are the applications of automatic speech recognition?

ASR has a wide range of applications, including virtual assistants (e.g. Siri, Alexa), dictation software, call center automation, language translation, and accessibility tools for individuals with disabilities.

What are the challenges of automatic speech recognition?

Challenges of ASR include dealing with background noise, accents, dialects, and variations in speech patterns. Additionally, ASR systems may struggle with understanding context and homophones, leading to errors in transcription.

What are the benefits of automatic speech recognition?

ASR can improve efficiency and productivity by enabling hands-free operation of devices, providing real-time transcription, and automating tasks such as call routing and data entry. It also enhances accessibility for individuals with disabilities.
